Advanced Docker

1. Introduction to Docker

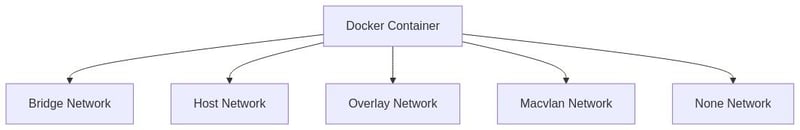

Docker is an open-source platform for developing, shipping, and running applications in containers. Its key components include Docker Engine, the runtime that lets users create, deploy, and manage containers; Docker Hub, a registry for sharing and distributing container images; and Docker Compose, which defines multi-container deployments in a single configuration file. Advanced Docker concepts matter because applications keep growing in complexity and need streamlined management. Used well, these features help developers work more productively, deploy consistently, and manage resources across diverse environments. A solid grasp of Docker also underpins efficient troubleshooting and robust deployment strategies in modern DevOps workflows.

Below is a Mermaid diagram illustrating the core components and interactions in Docker architecture:

2. Basic Docker Commands

Docker provides a command-line interface to interact with containers effectively. Here are some essential Docker commands:

- docker run: Starts a new container from a specified image.

```shell
# Run Nginx in detached mode, publishing container port 80 on host port 80
docker run -d -p 80:80 nginx
```

- docker build: Builds a new image from a Dockerfile.

```shell
# Build an image tagged 'myapp' from the current directory context
docker build -t myapp .
```

- docker pull: Downloads a specified image from a registry (Docker Hub by default).

```shell
# Pull the latest Ubuntu image
docker pull ubuntu
```

- docker ps: Lists all running containers; use `-a` for all (including stopped) containers.

```shell
docker ps -a
```

- docker stop: Stops a running container.

```shell
# Stop a container by its ID
docker stop <container_id>
```

Mastering these commands forms the basis for efficient container management.

3. Docker Images vs. Containers

Docker images and containers are two fundamental elements. An image is a read-only template that packages the filesystem and metadata needed to start a container. In contrast, a container is a runtime instance of an image, with its own writable filesystem layer, networking, and isolated process space.

Consider the following Mermaid diagram that clarifies their relationship:

This diagram illustrates that while an image serves as a recipe, containers are the actual running applications built from that recipe. The immutability of images ensures consistent environments, while the flexibility of containers facilitates rapid development, testing, and scaling.
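To make the recipe analogy concrete, the sketch below starts two independent containers from one image and lists them. The function name `start_pair` and the container names `web1` and `web2` are illustrative assumptions, not part of the original text.

```shell
# Hypothetical helper: start two containers from a single image.
# Usage: start_pair <image>
start_pair() {
  image="$1"
  # Each `docker run` creates a separate container with its own writable layer
  docker run -d --name web1 "$image"
  docker run -d --name web2 "$image"
  # Both containers report the same image in the IMAGE column
  docker ps --filter "ancestor=$image"
}
```

Calling `start_pair nginx` should leave two running containers that share the `nginx` image. Removing one of them (`docker rm -f web1`) affects neither the other container nor the image.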

4. Dockerfiles: Creating Custom Images

A Dockerfile is a script with a set of instructions to build a Docker image. This file automates application environment setup, ensuring repeatability and consistency. Here’s a breakdown of a typical Dockerfile:

- FROM: Specifies the base image.

```dockerfile
FROM node:14
```

- WORKDIR: Sets the working directory in the container.

```dockerfile
WORKDIR /app
```

- COPY: Copies files from the host into the container.

```dockerfile
COPY . .
```

- RUN: Executes commands, e.g., to install dependencies.

```dockerfile
RUN npm install
```

- CMD: Defines the default command when starting the container.

```dockerfile
CMD ["node", "server.js"]
```

Using Dockerfiles streamlines the creation of custom images, ensuring that development and production environments remain aligned.
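Put together, the instructions above form a complete Dockerfile. The sketch below writes one to disk from the shell. The function name `write_dockerfile` is a hypothetical helper, and copying `package*.json` before the rest of the source is an added assumption (a common layer-caching pattern), not something the breakdown above specifies.

```shell
# Hypothetical helper: write a Dockerfile assembled from the instructions above.
# Usage: write_dockerfile <target-directory>
write_dockerfile() {
  dir="${1:-.}"
  cat > "$dir/Dockerfile" <<'EOF'
FROM node:14
WORKDIR /app
# Assumption: copy the manifests first so the npm install layer stays cached
# until the dependencies change
COPY package*.json ./
RUN npm install
# Copy the rest of the application source
COPY . .
CMD ["node", "server.js"]
EOF
}
```

After `write_dockerfile .`, the image can be built with `docker build -t myapp .` and started with `docker run -d myapp`.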

5. Multi-Stage Builds

Multi-stage builds allow using multiple FROM statements in a Dockerfile, optimizing the final image by separating build-time dependencies from runtime environments. This technique results in leaner, more secure images.

Example multi-stage Dockerfile:

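```dockerfile
# Stage 1: Build the application
FROM golang:1.16 AS builder
WORKDIR /app
COPY . .
# CGO_ENABLED=0 produces a statically linked binary, which is needed because
# Alpine uses musl rather than the glibc present in the golang image
RUN CGO_ENABLED=0 go build -o myapp

# Stage 2: Create the final image
FROM alpine:latest
WORKDIR /root/
COPY --from=builder /app/myapp .
CMD ["./myapp"]
```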

In this example, the application is compiled in the first stage and only the resulting binary is copied into the lightweight final image. This separation minimizes unnecessary components in production, thus reducing security risks and image size.
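One way to see the effect of the split is to build the first stage on its own and compare it with the final image. The sketch below assumes the multi-stage Dockerfile above sits in the current directory. The function name `compare_stages` and the tags `myapp:builder` and `myapp:final` are hypothetical.

```shell
# Hypothetical helper: build each stage separately and list the resulting sizes.
compare_stages() {
  # --target stops the build at the named stage ("builder" in the Dockerfile)
  docker build --target builder -t myapp:builder .
  # Without --target, the build runs through to the last stage
  docker build -t myapp:final .
  # List every tag of the myapp repository along with its size
  docker images myapp
}
```

The SIZE column for `myapp:final` should be far smaller than for `myapp:builder`, since the Go toolchain stays behind in the first stage.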

6. Networking in Docker

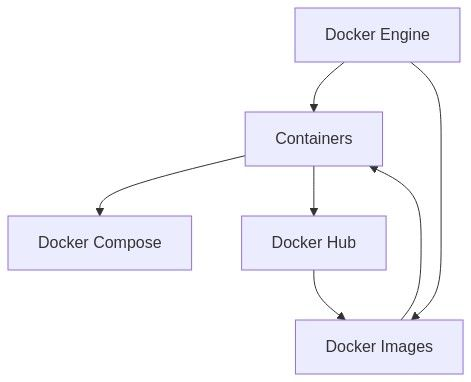

Docker networking enables communication between containers and external systems, while maintaining isolation and enhanced security. Various network types are available:

- Bridge Network: Default network driver for single-host container communication.

```shell
docker network create my_bridge
```

- Host Network: Uses the host’s network stack directly.

```shell
docker run --network host my_app
```

- Overlay Network: Enables multi-host container communication, essential for orchestration platforms.

```shell
# Requires swarm mode; run on a manager node
docker network create --driver overlay my_overlay
```

- Macvlan Network: Assigns unique MAC addresses to containers for direct network integration.

```shell
docker network create -d macvlan --subnet=192.168.1.0/24 --gateway=192.168.1.1 -o parent=eth0 my_macvlan
```

- None Network: Disables networking for the container.

```shell
docker run --network none my_app
```

The following Mermaid diagram provides a visual overview of Docker networking types and their use cases:

Understanding these networks helps in architecting secure, efficient communication channels for your containerized applications.
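As a small illustration of the bridge case, containers attached to the same user-defined bridge network can reach each other by container name. In this sketch the function name `demo_bridge`, the names `app_net` and `web`, and the use of the `alpine` image as a throwaway client are assumptions chosen for illustration.

```shell
# Hypothetical helper: show name-based discovery on a user-defined bridge network.
demo_bridge() {
  docker network create app_net
  docker run -d --name web --network app_net nginx
  # A temporary client on the same network resolves "web" via Docker's embedded DNS
  docker run --rm --network app_net alpine wget -qO- http://web
  # Lists the containers currently attached to the network
  docker network inspect app_net
}
```

If the `wget` call prints the Nginx welcome page, name resolution between the two containers works. A container on a different network, or one started with `--network none`, would not be able to reach `web`.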

7. Docker Compose for Multi-Container Applications

Docker Compose simplifies multi-container application deployment by allowing developers to define all necessary services in a single YAML file, reducing complexity and ensuring seamless communication between containers.

Basic docker-compose.yml example:

```yaml
version: '3'

services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    volumes:
      - ./html:/usr/share/nginx/html

  database:
    image: postgres:alpine
    environment:
      POSTGRES_DB: mydb
      POSTGRES_USER: user
      POSTGRES_PASSWORD: pass
```

With a single command (`docker-compose up`), this setup launches both the Nginx web server and the PostgreSQL database. Compose also handles scaling (`docker-compose up --scale web=3`) and clean environment teardown (`docker-compose down`). Note that scaling web beyond one replica requires dropping the fixed host port 8080 from the mapping, since only one container can bind a given host port.
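A typical development loop with Compose might look like the sketch below. The function name `compose_cycle` and the `COMPOSE` variable are hypothetical. The variable is there so the same helper works with the standalone `docker-compose` binary used in this article or with the `docker compose` plugin form.

```shell
# Hypothetical helper: start the stack, check on it, then tear it down.
# Set COMPOSE="docker compose" to use the plugin form instead of docker-compose.
compose_cycle() {
  # $compose is deliberately left unquoted so "docker compose" splits into two words
  compose="${COMPOSE:-docker-compose}"
  $compose up -d               # start all services in the background
  $compose ps                  # show service status
  $compose logs --tail=20 web  # last 20 log lines from the web service
  $compose down                # stop and remove containers and the default network
}
```

Run it from the directory that holds `docker-compose.yml`. Data in named volumes survives `down` unless the `-v` flag is added.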

8. Persisting Data in Docker Containers

Data persistence in Docker is managed using volumes and bind mounts:

- Volumes: Managed by Docker and stored in a dedicated directory on the host.

```shell
# Create a volume and run a container with it mounted at /data
docker volume create my_volume
docker run -d -v my_volume:/data my_app
```

- Bind Mounts: Map a specific host directory to a container directory.

```shell
# Mount the current directory to /app in the container
# (quoted so that paths containing spaces work)
docker run -d -v "$(pwd)":/app my_app
```

Volumes are ideal for databases and production data persistence, while bind mounts excel in development scenarios where real-time changes are desired.
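The same two mounts can also be written with the more explicit `--mount` flag, which spells out the mount type and each option by name. This is a sketch only: the function name `run_with_mounts` is hypothetical, and making the bind mount read-only is an added assumption.

```shell
# Hypothetical helper: the volume and bind mount above, expressed with --mount.
run_with_mounts() {
  # Named volume mounted at /data
  docker run -d --mount type=volume,source=my_volume,target=/data my_app
  # Bind mount of the current directory, read-only inside the container
  docker run -d --mount "type=bind,source=$(pwd),target=/app,readonly" my_app
  # Show the volume's metadata, including its mountpoint on the host
  docker volume inspect my_volume
}
```

A read-only bind mount fits cases where the container should see source files without being able to modify them. Drop `readonly` if the container needs to write back to the host directory.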

9. Docker Swarm: Orchestrating Containers

Docker Swarm is Docker’s native orchestration tool to manage a cluster of containers across multiple hosts. It provides service scaling, load balancing, and high availability.

Steps to set up a Docker Swarm:

- Initialize the swarm:

```shell
docker swarm init
```

- Retrieve a join token for worker nodes:

```shell
docker swarm join-token worker
```

- Deploy a replicated service:

```shell
docker service create --name my_service --replicas 3 nginx:alpine
```

To update the service with zero downtime:

```shell
docker service update --image nginx:latest my_service
```

Docker Swarm streamlines deployment and scaling while ensuring continuous availability.
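Scaling and rolling updates can be tuned further. The sketch below scales a service and then updates it one task at a time. The function name `swarm_rollout`, the replica count of 5, and the 10-second delay are illustrative assumptions.

```shell
# Hypothetical helper: scale a service, then roll out a new image gradually.
# Usage: swarm_rollout <service> <image>
swarm_rollout() {
  service="$1"
  image="$2"
  docker service scale "$service=5"
  # Replace one task at a time and wait 10s between tasks
  docker service update --update-parallelism 1 --update-delay 10s --image "$image" "$service"
  # Show which tasks are running the new image and on which nodes
  docker service ps "$service"
}
```

For example, `swarm_rollout my_service nginx:latest` applies the same image change as the update command above, with the pace of the rollout made explicit.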

10. Security Best Practices in Docker

Security is paramount when working with Docker. Here are key practices:

- Use Trusted Images: Always pull images from reputable sources and scan for vulnerabilities.

- Limit Container Privileges: Avoid running containers as root; use non-root users.

- Employ Resource Constraints: Set CPU and memory limits to prevent resource hogging.

- Regularly Update Docker: Keep Docker and its components up to date to mitigate vulnerabilities.

- Implement Network Security: Isolate containers via Docker networks and use firewall rules.

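- Use Secrets Management: Manage sensitive data with Docker Secrets where possible. Environment variables are convenient but are visible to anyone who can inspect the container, so avoid them for high-value credentials.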

Following these guidelines significantly fortifies Docker deployments against potential threats.
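Several of these practices map directly onto `docker run` flags. The sketch below combines them. The function name `run_hardened`, the UID/GID `1000:1000`, and the specific memory and CPU limits are assumptions for illustration, and the image must be able to run as a non-root user with a read-only root filesystem.

```shell
# Hypothetical helper: start a container with reduced privileges and resource limits.
# Usage: run_hardened <image>
run_hardened() {
  image="$1"
  # --user: run as a non-root UID:GID
  # --read-only: mount the root filesystem read-only
  # --cap-drop ALL: drop all Linux capabilities
  # --security-opt no-new-privileges: block privilege escalation via setuid binaries
  # --memory / --cpus: cap memory and CPU usage
  docker run -d \
    --user 1000:1000 \
    --read-only \
    --cap-drop ALL \
    --security-opt no-new-privileges \
    --memory 256m \
    --cpus 0.5 \
    "$image"
}
```

Treat the values as a starting point. An application that needs to write temporary files or bind a privileged port will need selected restrictions relaxed, for example with `--tmpfs /tmp` or `--cap-add NET_BIND_SERVICE`.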

11. Troubleshooting Common Docker Issues

Troubleshooting Docker can seem challenging. Below are common issues and solutions:

- Container Won't Start: Use `docker logs` for error details.

- Port Conflicts: Use unique port mappings and verify with tools like `netstat`.

- Memory Issues: Monitor resource usage with `docker stats` and adjust resource limits.

- Network Problems: Ensure containers are on the correct network and verify firewall settings.

- Image Not Found: Double-check image names/tags and run `docker pull` if necessary.

A systematic approach to these issues ensures smoother Docker operations.
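The checks above can be gathered into a single first-pass diagnostic. This is a minimal sketch: the function name `diagnose` and the choice of 50 log lines are assumptions.

```shell
# Hypothetical helper: collect basic diagnostics for one container.
# Usage: diagnose <container-name-or-id>
diagnose() {
  name="$1"
  # State and exit code show whether the container crashed or was stopped
  docker inspect --format '{{.State.Status}} (exit code {{.State.ExitCode}})' "$name"
  # Most recent log output, often enough to spot a startup error
  docker logs --tail 50 "$name"
  # Published ports, useful when checking for port conflicts
  docker port "$name"
  # One-off snapshot of CPU and memory usage
  docker stats --no-stream "$name"
}
```

Running `diagnose web` prints the container's state, recent logs, port mappings, and a resource snapshot in order, which covers the first four issues in the list.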

12. Resources for Further Learning

Enhance your Docker expertise with these resources:

- Books:
  - Docker Deep Dive by Nigel Poulton
  - The Docker Book by James Turnbull

- Online Courses:
  - Docker Mastery: with Kubernetes + Swarm from a Docker Captain (Udemy)
  - Learn Docker & Kubernetes: The Complete Course (Coursera)

- Documentation & Blogs:
  - Docker Official Documentation: https://docs.docker.com
  - Docker Blog: https://www.docker.com/blog

- Community Forums:
  - Stack Overflow
  - Docker Community Forums: https://forums.docker.com

By leveraging these resources, you can further explore advanced Docker functionalities, refine your containerization strategies, and enhance your overall DevOps prowess.

Happy Dockering!
