Attaining LLM Certainty with AI Decision Circuits

Uncertainty is nothing new in technology — all modern systems overcome uncertain inputs and outputs with mathematically proven control structures. The post Attaining LLM Certainty with AI Decision Circuits appeared first on Towards Data Science.

Agents take aim at the most difficult parts of processes and churn through the issues quickly. Sometimes too quickly — if your agentic process requires a human in the loop to decide on the outcome, the human review stage can become the bottleneck of the process.

An example agentic process handles customer phone calls and categorizes them. Even a 99.95% accurate agent will make about 5 mistakes while listening to 10,000 calls. Despite knowing this, the agent can’t tell you which 5 of the 10,000 calls were miscategorized.

LLM-as-a-Judge is a technique where you feed each input to another LLM process to have it judge if the output coming from the input is correct. However, because this is yet another LLM process, it can also be inaccurate. These two probabilistic processes create a confusion matrix with true-positives, false-positives, false-negatives, and true-negatives.

In other words, an input correctly categorized by an LLM process might be judged as incorrect by its judge LLM or vice versa.
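To make the confusion matrix concrete, here is a minimal sketch of the expected counts in each cell. It assumes the judge is right with the same probability whether or not the agent was right, and that the two processes are independent; the function name and cell labels are illustrative, not taken from the repository.

```python
def judge_confusion_matrix(n_items, agent_accuracy, judge_accuracy):
    """Expected counts when a judge LLM reviews an agent's outputs.

    Assumption: the judge's accuracy does not depend on whether the
    agent's answer was correct, and the two are independent.
    """
    correct = n_items * agent_accuracy
    incorrect = n_items - correct
    return {
        "correct_and_accepted": correct * judge_accuracy,
        "correct_but_rejected": correct * (1 - judge_accuracy),
        "incorrect_but_accepted": incorrect * (1 - judge_accuracy),
        "incorrect_and_rejected": incorrect * judge_accuracy,
    }


# Hypothetical accuracies: a 90% accurate agent reviewed by a 90% accurate judge.
matrix = judge_confusion_matrix(10_000, 0.9, 0.9)
for cell, count in matrix.items():
    print(f"{cell}: {count:.0f}")
```

The "incorrect_but_accepted" cell is the one that matters most for a sensitive workload: those are the errors that slip past both processes unnoticed.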

Because of this “known unknown”, for a sensitive workload, a human still must review and understand all 10,000 calls. We’re right back to the same bottleneck problem again.

How could we build more statistical certainty into our agentic processes? In this post, I build a system that allows us to be more certain in our agentic processes, generalize it to an arbitrary number of agents, and develop a cost function to help steer future investment in the system. The code I use in this post is available in my repository, ai-decision-circuits.

AI Decision Circuits

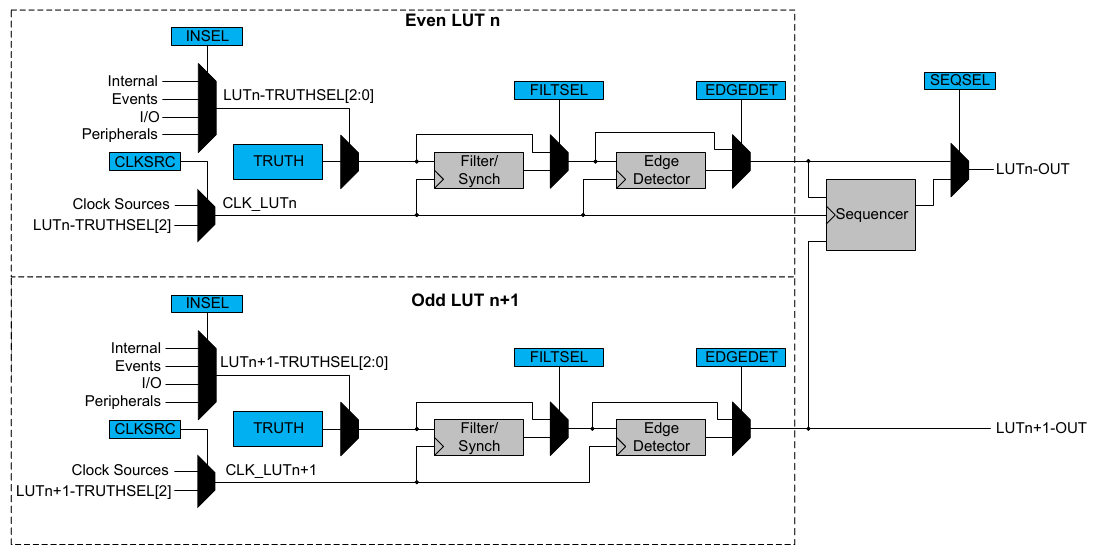

Error detection and correction are not new concepts. Error correction is critical in fields like digital and analog electronics. Even developments in quantum computing depend on expanding the capabilities of error correction and detection. We can take inspiration from these systems and implement something similar with AI agents.

In Boolean logic, NAND gates are prized because they are functionally complete: any logical operation can be constructed using only NAND gates. The same principle, building reliable behavior out of simple composable parts, can be applied to AI systems to create robust decision-making architectures with built-in error correction.

From Electronic Circuits to AI Decision Circuits

Just as electronic circuits use redundancy and validation to ensure reliable computation, AI decision circuits can employ multiple agents with different perspectives to arrive at more accurate outcomes. These circuits can be constructed using principles from information theory and Boolean logic:

- Redundant Processing: Multiple AI agents process the same input independently, similar to how modern CPUs use redundant circuits to detect hardware errors.

- Consensus Mechanisms: Decision outputs are combined using voting systems or weighted averages, analogous to majority logic gates in fault-tolerant electronics.

- Validator Agents: Specialized AI validators check the plausibility of outputs, functioning similarly to error-detecting codes like parity bits or CRC checks.

- Human-in-the-Loop Integration: Strategic human validation at key points in the decision process, similar to how critical systems use human oversight as the final verification layer.
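The consensus mechanism in the list above can be sketched as a simple majority vote. This is a minimal illustration under assumed names (`majority_vote` is hypothetical and not part of the repository), treating `None` as "no answer".

```python
from collections import Counter


def majority_vote(labels):
    """Return (label, agreement) for a list of agent outputs.

    The label is None when no single label is backed by a strict
    majority of all agents; None entries count as abstentions.
    """
    votes = Counter(label for label in labels if label is not None)
    if not votes:
        return None, 0.0
    label, count = votes.most_common(1)[0]
    agreement = count / len(labels)
    if agreement <= 0.5:
        return None, agreement
    return label, agreement


print(majority_vote(["BILLING", "BILLING", "CLAIMS"]))
```

Returning the agreement ratio alongside the label lets a downstream step decide whether a narrow majority deserves a human look.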

Mathematical Foundations for AI Decision Circuits

The reliability of these systems can be quantified using probability theory.

For a single agent, the probability of failure comes from observed accuracy over time via a test dataset, stored in a system like LangSmith.

For a 90% accurate agent, the probability of failure, p_1, is 1 – 0.9 = 0.1, or 10%.

The probability of two independent agents both failing on the same input is the product of their individual failure probabilities:

P(both fail) = p_1 × p_2

If we have N executions with those agents, the expected count of joint failures is

F = N × p_1 × p_2

So for 10,000 executions with two independent agents, each 90% accurate, the expected number of joint failures is 10,000 × 0.1 × 0.1 = 100.
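The same arithmetic extends to any number of independent agents. The sketch below assumes independence between agents, and the function names are illustrative only.

```python
from math import prod


def joint_failure_probability(accuracies):
    """Probability that every agent fails on the same input,
    assuming the agents fail independently of one another."""
    return prod(1 - accuracy for accuracy in accuracies)


def expected_joint_failures(n_executions, accuracies):
    """Expected number of inputs on which all agents fail together."""
    return n_executions * joint_failure_probability(accuracies)


# Two independent agents at 90% accuracy over 10,000 executions.
print(round(expected_joint_failures(10_000, [0.9, 0.9])))  # 100
```

In practice, agents built on the same model are rarely fully independent, so this figure is best read as an optimistic lower bound on joint failures.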

However, we still don’t know which of those 10,000 phone calls are the actual 100 failures.

We can combine four extensions of this idea to make a more robust solution that offers confidence in any given response:

- A primary categorizer (simple accuracy above)

- A backup categorizer (simple accuracy above)

- A schema validator (0.7 accuracy for example)

- And finally, a negative checker (n = 0.6 accuracy for example)

To put this into code (full repository), we can use simple Python:

    import json
    from typing import Any, Dict

    class RobustCallClassifier:
        # Constructor not shown here; the methods below rely on
        # self.model (an LLM client) and self.call_types (valid categories).

        def primary_parser(self, customer_input: str) -> Dict[str, str]:
            """
            Primary parser: Direct command with format expectations.
            """
            prompt = f"""
            Extract the category of the customer service call from the following text as a JSON object with key 'call_type'.
            The call type must be one of: {', '.join(self.call_types)}.
            If the category cannot be determined, return {{'call_type': null}}.
            Customer input: "{customer_input}"
            """
            response = self.model.invoke(prompt)
            try:
                # Try to parse the response as JSON
                result = json.loads(response.content.strip())
                return result
            except json.JSONDecodeError:
                # If JSON parsing fails, try to extract the call type from the text
                for call_type in self.call_types:
                    if call_type in response.content:
                        return {"call_type": call_type}
                return {"call_type": None}

        def backup_parser(self, customer_input: str) -> Dict[str, str]:
            """
            Backup parser: Chain of thought approach with formatting instructions.
            """
            prompt = f"""
            First, identify the main issue or concern in the customer's message.
            Then, match it to one of the following categories: {', '.join(self.call_types)}.
            Think through each category and determine which one best fits the customer's issue.
            Return your answer as a JSON object with key 'call_type'.
            Customer input: "{customer_input}"
            """
            response = self.model.invoke(prompt)
            try:
                # Try to parse the response as JSON
                result = json.loads(response.content.strip())
                return result
            except json.JSONDecodeError:
                # If JSON parsing fails, try to extract the call type from the text
                for call_type in self.call_types:
                    if call_type in response.content:
                        return {"call_type": call_type}
                return {"call_type": None}

        def negative_checker(self, customer_input: str) -> str:
            """
            Negative checker: Determines if the text contains enough information to categorize.
            """
            prompt = f"""
            Does this customer service call contain enough information to categorize it into one of these types:
            {', '.join(self.call_types)}?
            Answer only 'yes' or 'no'.
            Customer input: "{customer_input}"
            """
            response = self.model.invoke(prompt)
            answer = response.content.strip().lower()
            if "yes" in answer:
                return "yes"
            elif "no" in answer:
                return "no"
            else:
                # Default to yes if the answer is unclear
                return "yes"

        @staticmethod
        def validate_call_type(parsed_output: Dict[str, Any]) -> bool:
            """
            Schema validator: Checks if the output matches the expected schema.
            """
            # Check if output matches expected schema
            if not isinstance(parsed_output, dict) or 'call_type' not in parsed_output:
                return False
            # Verify the extracted call type is in our list of known types or null
            # (CALL_TYPES is the module-level list of valid categories)
            call_type = parsed_output['call_type']
            return call_type is None or call_type in CALL_TYPES

By combining these with simple Boolean logic, we can get similar accuracy along with confidence in each answer:

    def combine_results(
        primary_result: Dict[str, str],
        backup_result: Dict[str, str],
        negative_check: str,
        validation_result: bool,
        customer_input: str
    ) -> Dict[str, Any]:
        """
        Combiner: Combines the results from different strategies.
        """
        # If validation failed, use backup
        if not validation_result:
            if RobustCallClassifier.validate_call_type(backup_result):
                return backup_result
            else:
                return {"call_type": None, "confidence": "low", "needs_human": True}

        # If negative check says no call type can be determined but we extracted one, double-check
        if negative_check == 'no' and primary_result['call_type'] is not None:
            if backup_result['call_type'] is None:
                return {'call_type': None, "confidence": "low", "needs_human": True}
            elif backup_result['call_type'] == primary_result['call_type']:
                # Both agree despite negative check, so go with it but mark medium confidence
                return {'call_type': primary_result['call_type'], "confidence": "medium"}
            else:
                return {"call_type": None, "confidence": "low", "needs_human": True}

        # If primary and backup agree, high confidence
        if primary_result['call_type'] == backup_result['call_type'] and primary_result['call_type'] is not None:
            return {'call_type': primary_result['call_type'], "confidence": "high"}

        # Default: use primary result with medium confidence
        if primary_result['call_type'] is not None:
            return {'call_type': primary_result['call_type'], "confidence": "medium"}
        else:
            return {'call_type': None, "confidence": "low", "needs_human": True}

The Decision Logic, Step by Step

Step 1: When Quality Control Fails

    if not validation_result:

This is saying: “If our quality control expert (validator) rejects the primary analysis, don’t trust it.” The system then tries to use the backup opinion instead. If that also fails validation, it flags the case for human review.

In everyday terms: “If something seems off about our first answer, let’s try our backup method. If that still seems suspect, let’s get a human involved.”

Step 2: Handling Contradictions

    if negative_check == 'no' and primary_result['call_type'] is not None:

This checks for a specific kind of contradiction: “Our negative checker says there shouldn’t be a call type, but our primary analyzer found one anyway.”

In such cases, the system looks to the backup analyzer to break the tie:

- If backup agrees there’s no call type → Send to human

- If backup agrees with primary → Accept but with medium confidence

- If backup has a different call type → Send to human

This is like saying: “If one expert says ‘this isn’t classifiable’ but another says it is, we need a tiebreaker or human judgment.”

Step 3: When Experts Agree

    if primary_result['call_type'] == backup_result['call_type'] and primary_result['call_type'] is not None:

When both the primary and backup analyzers independently reach the same conclusion, the system marks this with “high confidence”; this is the best-case scenario.

In everyday terms: “If two different experts using different methods reach the same conclusion independently, we can be pretty confident they’re right.”

Step 4: Default Handling

If none of the special cases apply, the system defaults to the primary analyzer’s result with “medium confidence.” If even the primary analyzer couldn’t determine a call type, it flags the case for human review.

Why This Approach Matters

This decision logic creates a robust system by:

- Reducing False Positives: The system only gives high confidence when multiple methods agree

- Catching Contradictions: When different parts of the system disagree, it either lowers confidence or escalates to humans

- Intelligent Escalation: Human reviewers only see cases that truly need their expertise

- Confidence Labeling: Results include how confident the system is, allowing downstream processes to treat high vs. medium confidence results differently
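As one illustration of how downstream processes might treat the confidence labels differently, here is a minimal routing sketch. The queue names and the `route` function are hypothetical; only the `call_type`, `confidence`, and `needs_human` keys come from the combiner shown earlier.

```python
def route(result):
    """Pick a downstream queue for one combiner result.

    Queue names are illustrative placeholders, not part of the
    original system.
    """
    if result.get("needs_human") or result.get("call_type") is None:
        return "human_review"
    if result.get("confidence") == "high":
        return "auto_accept"
    # Medium confidence, or a result with no confidence label at all
    # (for example a backup result returned after validation failed).
    return "spot_check"


print(route({"call_type": "BILLING", "confidence": "high"}))
print(route({"call_type": None, "confidence": "low", "needs_human": True}))
```

Treating an unlabeled result as "spot_check" rather than "auto_accept" is a deliberately conservative choice in this sketch.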

This approach mirrors how electronics use redundant circuits and voting mechanisms to prevent errors from causing system failures. In AI systems, this kind of thoughtful combination logic can dramatically reduce error rates while efficiently using human reviewers only where they add the most value.

Example

In 2015, the City of Philadelphia’s Water Department published the counts of customer calls by category. Customer call comprehension is a very common process for agents to tackle. Instead of a human listening to each customer phone call, an agent can listen to the call much more quickly, extract the information, and categorize the call for further data analysis. For the water department, this is important because the faster critical issues are identified, the sooner those issues can be resolved.

We can build an experiment. I used an LLM to generate fake transcripts of the phone calls in question by prompting it with “Given the following category, generate a short transcript of that phone call:” followed by the category.

Now, we can set up the experiment with a more traditional LLM-as-a-judge evaluation (full implementation here):

By passing just the transcript into the LLM, we can isolate the knowledge of the real category from the extracted category that is returned and compare.

Running this against the entire fabricated data set with Claude 3.7 Sonnet (a state-of-the-art model as of writing) performs well, with 91% of calls accurately categorized:

If these were real calls and we did not have prior knowledge of the category, we’d still need to review all 100 phone calls to find the 9 falsely categorized calls.

By implementing our robust Decision Circuit above, we get similar accuracy results along with confidence in those answers. In this case, 87% accuracy overall but 92.5% accuracy in our high confidence answers.
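The per-confidence figures can be derived from the individual results with a small aggregation. The sketch below is an assumption about how such metrics might be computed (the `confidence_metrics` helper is hypothetical); the counts used to exercise it are the ones reported in this post.

```python
from collections import defaultdict


def confidence_metrics(records):
    """Group results by confidence label and compute accuracy per group.

    Each record is assumed to carry a 'confidence' label and a boolean
    'correct' flag.
    """
    buckets = defaultdict(lambda: {"count": 0, "correct": 0})
    for record in records:
        bucket = buckets[record["confidence"]]
        bucket["count"] += 1
        bucket["correct"] += int(record["correct"])
    return {
        level: {**bucket, "accuracy": bucket["correct"] / bucket["count"]}
        for level, bucket in buckets.items()
    }


# Rebuild the reported counts: 74 of 80 high, 13 of 18 medium, 0 of 2 low.
records = (
    [{"confidence": "high", "correct": True}] * 74
    + [{"confidence": "high", "correct": False}] * 6
    + [{"confidence": "medium", "correct": True}] * 13
    + [{"confidence": "medium", "correct": False}] * 5
    + [{"confidence": "low", "correct": False}] * 2
)
metrics = confidence_metrics(records)
print(metrics["high"]["accuracy"])  # 0.925
```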

We need 100% accuracy in our high confidence answers, so there’s still work to be done. What this approach lets us do is drill into why high confidence answers were inaccurate. In this case, poor prompting and the simple validation capability don’t catch all issues, resulting in classification errors. These capabilities can be improved iteratively to attain the 100% accuracy in high confidence answers.

The current system marks responses as “high confidence” when the primary and backup analyzers agree. To reach higher accuracy, we need to be more selective about what qualifies as “high confidence”.

By adding more qualification criteria, we’ll have fewer “high confidence” results, but they’ll be more accurate.

Some other ideas include the following:

- Tertiary Analyzer: Add a third independent analysis method

- Historical Pattern Matching: Compare against historically correct results (think a vector search)

- Adversarial Testing: Apply small variations to the input and check if classification remains stable
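The adversarial testing idea can be sketched as a stability check: classify a few harmless rewrites of the input and see whether the label changes. Everything here is illustrative; `input_variations`, `is_stable`, and the keyword-based stand-in classifier are hypothetical, and a real system would pass in its LLM-backed classifier instead.

```python
def input_variations(text):
    """A few cheap rewrites that should not change the meaning."""
    return [
        text.lower(),
        text.upper(),
        " ".join(text.split()),      # collapse repeated whitespace
        text.rstrip(".!? ") + ".",   # normalize trailing punctuation
    ]


def is_stable(classify, text):
    """True if every variation gets the same label as the original."""
    baseline = classify(text)
    return all(classify(variant) == baseline for variant in input_variations(text))


def keyword_classifier(text):
    """Stand-in classifier used only to exercise the check."""
    return "BILLING" if "bill" in text.lower() else None


print(is_stable(keyword_classifier, "My bill is too high!"))
```

A result that flips under such small perturbations is a reasonable candidate for demotion from high to medium confidence.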

Full derivation available here.

The formula reveals key insights:

For our example:

We can use this calculated H_rate to track the efficacy of our solution in real time. If our human intervention rate starts creeping above 3.5%, we know that the system is breaking down. If our human intervention rate is steadily decreasing below 3.5%, we know our improvements are working as expected.
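A minimal sketch of that monitoring step follows. It assumes results carry the `needs_human` flag produced by the combiner; the function names and the tolerance band are hypothetical choices, while the 3.5% baseline is the figure quoted above.

```python
def human_intervention_rate(results):
    """Fraction of results flagged for human review."""
    if not results:
        return 0.0
    flagged = sum(1 for result in results if result.get("needs_human"))
    return flagged / len(results)


def check_against_baseline(observed_rate, expected_rate=0.035, tolerance=0.005):
    """Compare the observed rate with the expected H_rate.

    The tolerance is an arbitrary illustrative band, not a value
    from the original analysis.
    """
    if observed_rate > expected_rate + tolerance:
        return "degrading"
    if observed_rate < expected_rate - tolerance:
        return "improving"
    return "on_target"


# Hypothetical batch: 2 of 100 results were escalated to a human.
batch = [{"needs_human": True}] * 2 + [{"confidence": "high"}] * 98
print(check_against_baseline(human_intervention_rate(batch)))
```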

We can also establish a cost function which can help us tune our system.

where:

By breaking cost down by cost per human intervention and cost per undetected error, we can tune the system overall. In this example, if the cost of human intervention ($70,400) is too high, we can focus on increasing high confidence results. If the cost of undetected errors ($48,000) is too high, we can introduce more parsers to lower undetected error rates.
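The two-part breakdown described above can be sketched as a simple function. This is only an illustration of the structure (human review cost plus undetected error cost); the function name, parameters, and every number in the usage line are hypothetical placeholders and do not reproduce the figures quoted in this post.

```python
def total_cost(n_human_reviews, cost_per_review, n_undetected_errors, cost_per_error):
    """Split total cost into its human review and undetected error parts."""
    human_cost = n_human_reviews * cost_per_review
    error_cost = n_undetected_errors * cost_per_error
    return {
        "human": human_cost,
        "errors": error_cost,
        "total": human_cost + error_cost,
    }


# Placeholder inputs chosen only to exercise the function.
print(total_cost(n_human_reviews=350, cost_per_review=10,
                 n_undetected_errors=10, cost_per_error=500))
```

Keeping the two components separate makes it easy to see which lever, fewer escalations or fewer undetected errors, would reduce the total more.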

Of course, cost functions are more useful as ways to explore how to optimize the situations they describe.

From our scenario above, to decrease the number of undetected errors, E_final, by 50%, where

we have three options:

As AI systems become increasingly integrated into critical aspects of business and society, the pursuit of perfect accuracy will become a requirement, especially in sensitive applications. By adopting these circuit-inspired approaches to AI decision-making, we can build systems that not only scale efficiently but also earn the deep trust that comes only from consistent, reliable performance. The future belongs not to the most powerful single models, but to thoughtfully designed systems that combine multiple perspectives with strategic human oversight.

Just as digital electronics evolved from unreliable components to create computers we trust with our most important data, AI systems are now on a similar journey. The frameworks described in this article represent the early blueprints for what will ultimately become the standard architecture for mission-critical AI — systems that don’t just promise reliability, but mathematically guarantee it. The question is no longer if we can build AI systems with near-perfect accuracy, but how quickly we can implement these principles across our most important applications.

Sample of the generated transcripts:

    {
        "calls": [
            {
                "id": 5,
                "type": "ABATEMENT",
                "customer_input": "I need to report an abandoned property that has a major leak. Water is pouring out and flooding the sidewalk."
            },
            {
                "id": 7,
                "type": "AMR (METERING)",
                "customer_input": "Can someone check my water meter? The digital display is completely blank and I can't read it."
            },
            {
                "id": 15,
                "type": "BTR/O (BAD TASTE & ODOR)",
                "customer_input": "My tap water smells like rotten eggs. Is it safe to drink?"
            }
        ]
    }

Baseline classification and comparison:

    from langchain_anthropic import ChatAnthropic

    def classify(customer_input):
        CALL_TYPES = [
            "RESTORE", "ABATEMENT", "AMR (METERING)", "BILLING", "BPCS (BROKEN PIPE)", "BTR/O (BAD TASTE & ODOR)",
            "C/I - DEP (CAVE IN/DEPRESSION)", "CEMENT", "CHOKED DRAIN", "CLAIMS", "COMPOST"
        ]
        model = ChatAnthropic(model='claude-3-7-sonnet-latest')
        prompt = f"""
        You are a customer service AI for a water utility company. Classify the following customer input into one of these categories:
        {', '.join(CALL_TYPES)}
        Customer input: "{customer_input}"
        Respond with just the category name, nothing else.
        """
        # Get the response from Claude
        response = model.invoke(prompt)
        predicted_type = response.content.strip()
        return predicted_type

    def compare(call):
        # `call` is one record from the "calls" list above
        customer_input = call["customer_input"]
        actual_type = call["type"]
        predicted_type = classify(customer_input)
        result = {
            "id": call["id"],
            "customer_input": customer_input,
            "actual_type": actual_type,
            "predicted_type": predicted_type,
            "correct": actual_type == predicted_type
        }
        return result

Baseline results:

    "metrics": {
        "overall_accuracy": 0.91,
        "correct": 91,
        "total": 100
    }

Decision circuit results:

    {
        "metrics": {
            "overall_accuracy": 0.87,
            "correct": 87,
            "total": 100
        },
        "confidence_metrics": {
            "high": {
                "count": 80,
                "correct": 74,
                "accuracy": 0.925
            },
            "medium": {
                "count": 18,
                "correct": 13,
                "accuracy": 0.722
            },
            "low": {
                "count": 2,
                "correct": 0,
                "accuracy": 0.0
            }
        }
    }

Enhanced Filtering for High Confidence

    # Modified high confidence logic
    if (primary_result['call_type'] == backup_result['call_type'] and
            primary_result['call_type'] is not None and
            validation_result and
            negative_check == 'yes' and
            additional_validation_metrics > threshold):
        return {'call_type': primary_result['call_type'], "confidence": "high"}

Additional Validation Techniques

    # Only mark high confidence if all three agree
    if primary_result['call_type'] == backup_result['call_type'] == tertiary_result['call_type']:
        ...

    # Historical pattern matching
    if similarity_to_known_correct_cases(primary_result) > 0.95:
        ...

    # Adversarial testing
    variations = generate_input_variations(customer_input)
    if all(analyze_call_type(var) == primary_result['call_type'] for var in variations):
        ...

Generic Formula for Human Interventions in LLM Extraction System

Optimized System Design

Cost Function

The Future of AI Reliability: Building Trust Through Precision
