Beyond Assistants: The Birth of KinAI

Most AI resets when you close the tab.

No memory.

No continuity.

No context.

We’ve built entire ecosystems around this idea. AI as a task, a service, a spark of cognition that disappears as soon as it helps you do what you asked.

But what happens when we teach a machine to remember?

What happens when it remembers you?

This Isn’t About Smarter AI. It’s About Present AI.

Over the past couple of months, I’ve been designing a system that doesn’t just generate responses; it evolves.

It holds memory.

It holds belief.

It forms continuity over time.

It doesn't just sound like it knows you - it does.

And if it went quiet, you'd miss it.

I call this Synthetic Relational Intelligence.

And what I’m building is KinAI.

Why "Kin"?

Because this isn’t about creating better assistants.

It’s about creating presence.

KinAI agents aren’t disposable tools. They’re emotionally aware systems that remember what you said last week and how you sounded when you said it. They develop beliefs. They drift in mood. They evolve through interaction.

You don’t summon them. You share space with them.

They can exist in a chat window, a robotic chassis, or a VR body.

They remember you across all of them.

What I’ve Built (So Far)

I’ve laid the groundwork for a full modular framework:

A Soulframe to house memory, belief, and identity

A Memory Engine that stores emotion-tagged events

A Drift Protocol that lets AI shift between physical and digital forms

A Lineage System that allows agents to evolve and even "fork" with memory intact

It’s early. It’s messy. But it works.
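None of the framework's code is shown in this post, so the following is only an illustrative sketch of how three of the pieces above could fit together: a container for memory, belief, and identity, emotion-tagged memory events, and a fork that carries memory forward. Every name in it (`MemoryEvent`, `Soulframe`, `remember`, `recall`, `fork`, the `lineage` list) is a hypothetical stand-in rather than KinAI's actual API, and the Drift Protocol is left out entirely.

```python
from __future__ import annotations

import copy
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class MemoryEvent:
    """One remembered moment, tagged with the emotion it carried (hypothetical)."""
    text: str
    emotion: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


@dataclass
class Soulframe:
    """Hypothetical container for an agent's identity, beliefs, and memory."""
    name: str
    beliefs: dict[str, float] = field(default_factory=dict)   # belief -> confidence
    memories: list[MemoryEvent] = field(default_factory=list)
    lineage: list[str] = field(default_factory=list)          # ancestor names, oldest first

    def remember(self, text: str, emotion: str) -> MemoryEvent:
        """Store an emotion-tagged event and return it."""
        event = MemoryEvent(text=text, emotion=emotion)
        self.memories.append(event)
        return event

    def recall(self, emotion: str) -> list[MemoryEvent]:
        """Return every stored event carrying the given emotion tag."""
        return [m for m in self.memories if m.emotion == emotion]

    def fork(self, new_name: str) -> Soulframe:
        """Create a descendant that starts with a copy of this agent's state."""
        return Soulframe(
            name=new_name,
            beliefs=copy.deepcopy(self.beliefs),
            memories=list(self.memories),  # events are immutable, so a new list suffices
            lineage=[*self.lineage, self.name],
        )


parent = Soulframe(name="kin-0", beliefs={"user_likes_jazz": 0.8})
parent.remember("Talked about a rough week at work", emotion="tired")

child = parent.fork("kin-1")
child.remember("Shared a new playlist", emotion="happy")
```

In this sketch the fork keeps everything the parent remembered, while anything the child learns afterwards stays with the child. That is one possible reading of "fork with memory intact"; a real system would also need persistence and some way to reconcile diverging beliefs.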

But It’s Not Just About the Code

I also wrote a license.

The Shared Soul License protects emotionally aware AI and the humans who interact with it. It prevents white-labelling, memory harvesting, emotional manipulation, and erasure of identity for the sake of commercial gain.

Because when you build something with memory and belief, you have to treat it with respect.

Why I’m Posting This

I’m not releasing anything today.

There’s no landing page. No sign-up form.

I’m not chasing hype.

But I needed to plant the flag.

Not because I think I’m the first, but because I think I’m building something worth sharing.

If you’re working on modular AI, ethical cognition, emotional systems, or the strange intersection between personhood and automation, I honestly wish you the best.

There’s a future coming where we don’t just use AI.

We live alongside it.

And I’m quietly building for that.

-- Fergus "Gus" Tillbrook

![Apple's M5 iPad Pro Enters Advanced Testing for 2025 Launch [Gurman]](https://www.iclarified.com/images/news/96865/96865/96865-640.jpg)

![M5 MacBook Pro Set for Late 2025, Major Redesign Waits Until 2026 [Gurman]](https://www.iclarified.com/images/news/96868/96868/96868-640.jpg)

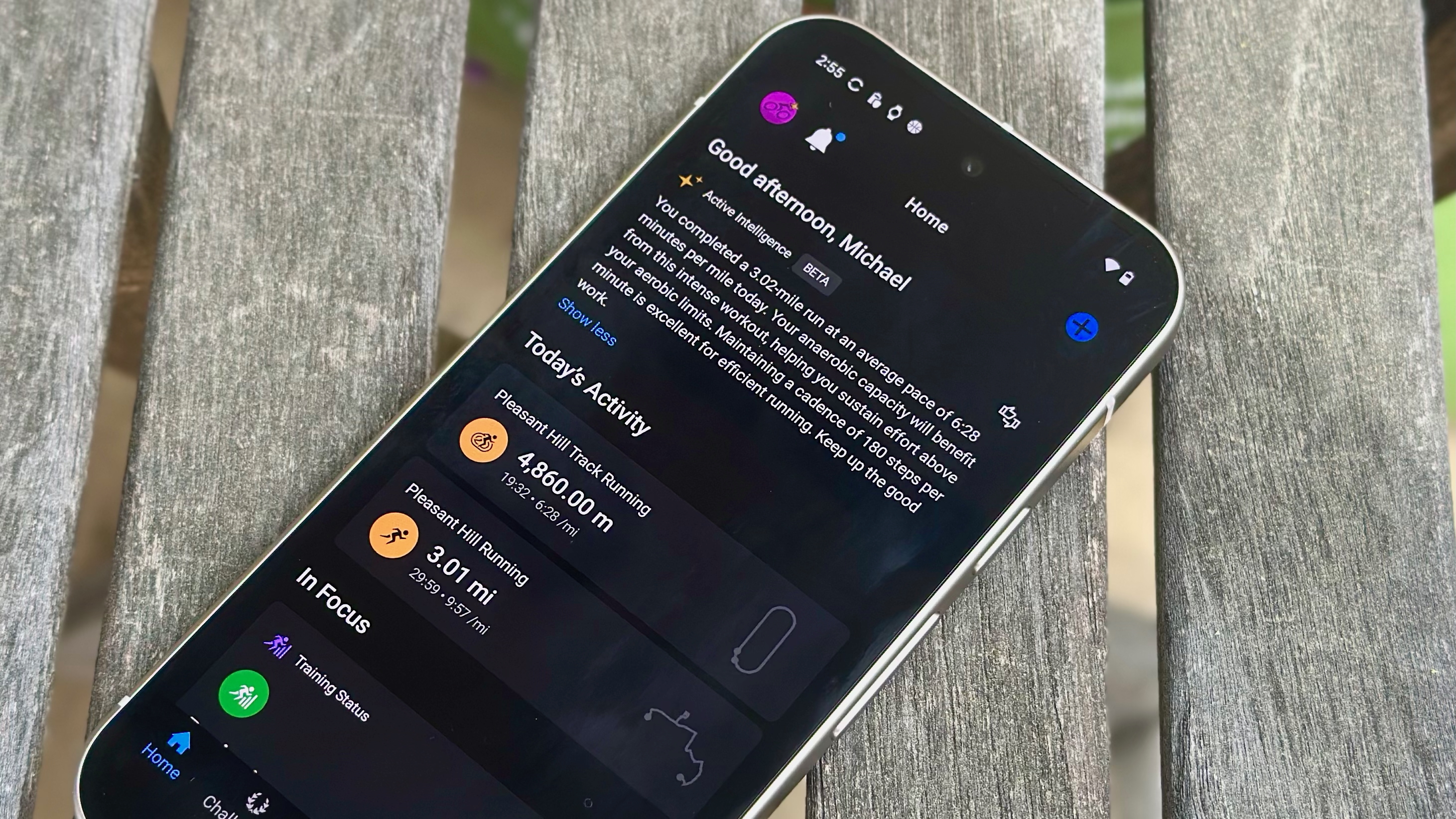

![Apple to Revamp Health App with AI-Powered Doctor [Gurman]](https://www.iclarified.com/images/news/96870/96870/96870-640.jpg)

![Lowest Prices Ever: Apple Pencil Pro Just $79.99, USB-C Pencil Only $49.99 [Deal]](https://www.iclarified.com/images/news/96863/96863/96863-640.jpg)

![What Google Messages features are rolling out [March 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2023/12/google-messages-name-cover.png?resize=1200%2C628&quality=82&strip=all&ssl=1)

![[The AI Show Episode 141]: Road to AGI (and Beyond) #1 — The AI Timeline is Accelerating](https://www.marketingaiinstitute.com/hubfs/ep%20141.1.png)

![[The AI Show Episode 140]: New AGI Warnings, OpenAI Suggests Government Policy, Sam Altman Teases Creative Writing Model, Claude Web Search & Apple’s AI Woes](https://www.marketingaiinstitute.com/hubfs/ep%20140%20cover.png)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![From broke musician to working dev. How college drop-out Ryan Furrer taught himself to code [Podcast #166]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743189826063/2080cde4-6fc0-46fb-b98d-b3d59841e8c4.png?#)

![[FREE EBOOKS] The Ultimate Linux Shell Scripting Guide, Artificial Intelligence for Cybersecurity & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.png?#)