The Art of Hybrid Architectures

Combining CNNs and Transformers to Elevate Fine-Grained Visual Classification

The post The Art of Hybrid Architectures appeared first on Towards Data Science.

In my previous article, I discussed how morphological feature extractors mimic the way biological experts visually assess images.

This time, I want to go a step further and explore a new question:

Can different architectures complement each other to build an AI that “sees” like an expert?

Introduction: Rethinking Model Architecture Design

While building a high-accuracy visual recognition model, I ran into a key challenge:

How do we get AI to not just “see” an image, but actually understand the features that matter?

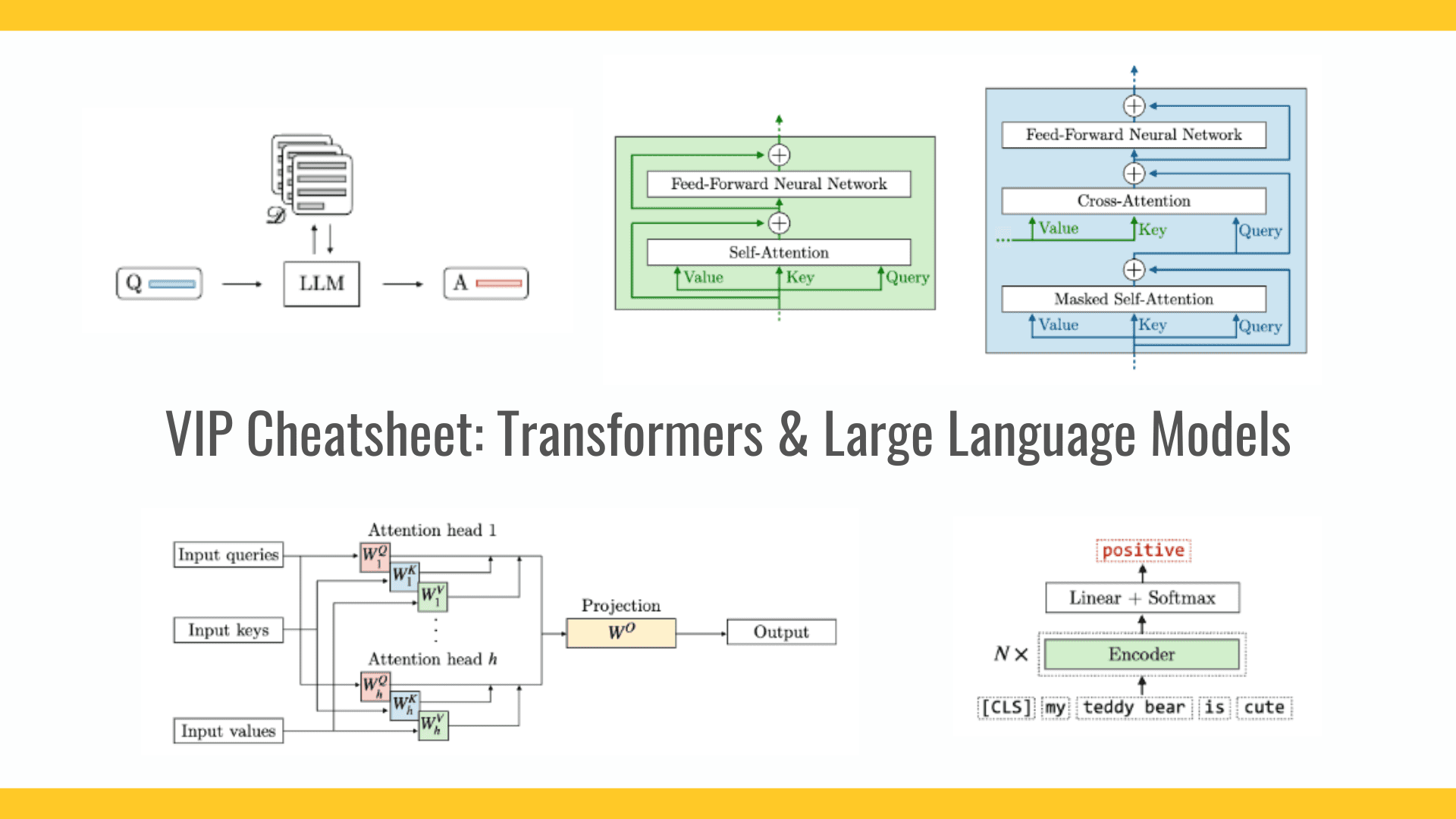

Traditional CNNs excel at capturing local details like fur texture or ear shape, but they often miss the bigger picture. Transformers, on the other hand, are great at modeling global relationships (how different regions of an image interact), but they can easily overlook fine-grained cues.

This insight led me to explore combining the strengths of both architectures to create a model that not only captures fine details but also comprehends the bigger picture.

While developing PawMatchAI, a 124-breed dog classification system, I went through three major architectural phases before arriving at the final hybrid design:

1. Early Stage: EfficientNetV2-M + Multi-Head Attention

I started with EfficientNetV2-M and added a multi-head attention module.

I experimented with 4, 8, and 16 heads—eventually settling on 8, which gave the best results.

This setup reached an F1 score of 78%, but it felt more like a technical combination than a cohesive design.
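To make the head-count experiment concrete, here is a minimal sketch of multi-head self-attention in plain Python. It is a simplification under stated assumptions, not the PawMatchAI module: it omits the learned query, key, value, and output projections that a real attention layer has, and the function names are hypothetical. What it does show is how the embedding is split across heads, which is why the embedding dimension must be divisible by the number of heads (4, 8, or 16 in the experiments above).

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for one head.

    q, k, v are lists of token vectors (lists of floats).
    """
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


def multi_head_self_attention(tokens, num_heads):
    """Split each token into num_heads chunks, attend per head, concatenate.

    Assumption: identity projections (no learned weights), for illustration only.
    """
    dim = len(tokens[0])
    if dim % num_heads != 0:
        raise ValueError("embedding dim must be divisible by num_heads")
    head_dim = dim // num_heads
    head_outputs = []
    for h in range(num_heads):
        chunk = [t[h * head_dim:(h + 1) * head_dim] for t in tokens]
        head_outputs.append(attention(chunk, chunk, chunk))
    # Concatenate the per-head outputs back into one vector per token.
    return [sum((head_outputs[h][i] for h in range(num_heads)), [])
            for i in range(len(tokens))]


tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
mixed = multi_head_self_attention(tokens, num_heads=2)
```

More heads means each head sees a narrower slice of the embedding, so the choice of 8 is a trade-off between the number of distinct relationships modeled and the capacity of each head.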

2. Refinement: Focal Loss + Advanced Data Augmentation

After closely analyzing the dataset, I noticed a class imbalance: some breeds appeared far more frequently than others, skewing the model’s predictions.

To address this, I introduced Focal Loss, which down-weights easy, well-classified examples so that training concentrates on harder and rarer ones, along with RandAugment and mixup to make the training data more diverse.

This pushed the F1 score up to 82.3%.
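The two ideas from this phase can be sketched in a few lines of plain Python. Focal Loss scales the usual cross-entropy term by `(1 - p_t) ** gamma`, so confident, correct predictions contribute little; mixup blends two samples and their one-hot labels with a weight drawn from a Beta distribution. The article does not state the hyperparameters used in PawMatchAI, so `gamma=2.0`, `alpha=1.0`, and `beta_alpha=0.2` below are illustrative assumptions, and the function names are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(logits, target, gamma=2.0, alpha=1.0):
    """Focal loss for one sample: -alpha * (1 - p_t)^gamma * log(p_t).

    With gamma=0 and alpha=1 this reduces to ordinary cross-entropy.
    """
    p_t = softmax(logits)[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


def sample_lambda(beta_alpha=0.2):
    """Draw the mixup weight from Beta(beta_alpha, beta_alpha) (assumed value)."""
    return random.betavariate(beta_alpha, beta_alpha)


def mixup(x_a, y_a, x_b, y_b, lam):
    """Blend two inputs and their one-hot labels with weight lam."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y


easy = focal_loss([4.0, 0.0, 0.0], target=0)   # confident and correct
hard = focal_loss([0.0, 4.0, 0.0], target=0)   # confident and wrong
x, y = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], lam=0.25)
```

Here `hard` comes out far larger than `easy`, which is the behavior that helps with imbalanced breeds: the loss budget shifts toward examples the model still gets wrong.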

3. Breakthrough: Switching to ConvNextV2-Base + Training Optimization

Next, I replaced the backbone with ConvNextV2-Base and optimized training with OneCycleLR and a progressive unfreezing strategy.

The F1 score climbed to 87.89%.
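As a rough illustration of the two training techniques in this phase, the sketch below computes a one-cycle learning rate (a warm-up to a peak followed by a cosine decay) and a progressive unfreezing schedule that opens backbone stages from the one nearest the classifier head backward. It is written in plain Python in the spirit of PyTorch's `OneCycleLR`, not as a replacement for it, and it is not the PawMatchAI training code. The peak learning rate, `epochs_per_stage`, and the function names are assumptions chosen for illustration.

```python
import math


def cosine_interp(start, end, pct):
    """Cosine interpolation from start (pct=0) to end (pct=1)."""
    return end + (start - end) / 2.0 * (math.cos(math.pi * pct) + 1.0)


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3,
                 div_factor=25.0, final_div_factor=1e4):
    """Learning rate at a given step of a simplified one-cycle schedule."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    warmup_steps = max(1, int(pct_start * total_steps))
    if step < warmup_steps:
        # Phase 1: ramp up from initial_lr to max_lr.
        return cosine_interp(initial_lr, max_lr, step / warmup_steps)
    # Phase 2: decay from max_lr down to min_lr.
    decay_steps = max(1, total_steps - warmup_steps - 1)
    pct = min(1.0, (step - warmup_steps) / decay_steps)
    return cosine_interp(max_lr, min_lr, pct)


def trainable_stages(epoch, num_stages=4, epochs_per_stage=5):
    """Indices of backbone stages that are trainable at this epoch.

    The classifier head is assumed to be trainable throughout; one more
    stage is unfrozen every epochs_per_stage epochs, deepest stage first.
    """
    unfrozen = min(num_stages, epoch // epochs_per_stage)
    return list(range(num_stages - unfrozen, num_stages))


schedule = [one_cycle_lr(s, total_steps=100, max_lr=1e-3) for s in range(100)]
plan = {epoch: trainable_stages(epoch) for epoch in (0, 5, 12, 20)}
```

Unfreezing late stages first keeps the pretrained low-level filters intact while the task-specific layers adapt, and the one-cycle warm-up avoids large updates before the newly added layers have settled.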

But during real-world testing, the model still struggled with visually similar breeds, indicating room for improvement in generalization.

4. Final Step: Building a Truly Hybrid Architecture

After reviewing the first three phases, I realized the core issue: stacking technologies isn’t the same as getting them to work together.

What I needed was true collaboration between the CNN, the Transformer, and the morphological feature extractor, each playing to its strengths. So I restructured the entire pipeline.

ConvNextV2 was in charge of extracting detailed local features.

The morphological module acted like a domain expert, highlighting features critical for breed identification.

Finally, the multi-head attention brought it all together by modeling global relationships.

This time, they weren’t just independent modules; they were a team.

CNNs identified the details, the morphology module amplified the meaningful ones, and the attention mechanism tied everything into a coherent global view.
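The division of labor described above can be pictured with a toy forward pass. This is only one possible reading, sketched under strong assumptions: the article does not specify how the morphological module interacts with the CNN features, so the residual sigmoid gate used here to "amplify" channels is hypothetical, as are all function names. The CNN stage is represented simply by its output, a list of local feature vectors, and the attention stage is a single-head self-attention followed by mean pooling.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def morphology_gate(features, gate_logits):
    """Amplify channels with a residual gate: f * (1 + sigmoid(g)).

    Assumption: one gate logit per channel; channels are never suppressed
    below their original value, only boosted by up to 2x.
    """
    return [[f * (1.0 + sigmoid(g)) for f, g in zip(token, gate_logits)]
            for token in features]


def global_attention_pool(tokens):
    """Single-head self-attention over all tokens, then mean pooling."""
    d = len(tokens[0])
    pooled = [0.0] * d
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        for w, v in zip(exps, tokens):
            for c in range(d):
                pooled[c] += (w / z) * v[c] / len(tokens)
    return pooled


def hybrid_forward(local_features, gate_logits):
    """Local features -> morphology gating -> global attention summary."""
    gated = morphology_gate(local_features, gate_logits)
    return global_attention_pool(gated)


# Two image regions with two channels each, as if produced by the CNN backbone.
local_features = [[1.0, 2.0], [1.0, 2.0]]
summary = hybrid_forward(local_features, gate_logits=[0.0, 0.0])
```

The point of the ordering is that attention operates on features that have already been reweighted by domain knowledge, so the global view is built from the cues that matter for breed identification.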

Key Result: The F1 score rose to 88.70%, but more importantly, this gain came from the model learning to understand morphology, not just memorize textures or colors.

It started recognizing subtle structural features—just like a real expert would—making better generalizations across visually similar breeds.
