Enterprise Grade AI/ML Deployment on AWS 2025

AWS AI/ML deployment requires integrated infrastructure, deployment patterns, and optimization techniques

Implementing production-scale AI/ML workloads on AWS in 2025 demands a comprehensive approach integrating sophisticated hardware selection, distributed training architectures, and custom security controls with advanced monitoring systems. This guide presents a complete financial fraud detection implementation showcasing AWS's latest ML services, infrastructure patterns, and optimization techniques that reduce cost by 40-70% while maintaining sub-100ms latency at scale. The most successful implementations use purpose-built accelerators (Trainium2/Inferentia2), infrastructure-as-code deployment, and multi-faceted security to create resilient, performant AI systems.

1. The modern AWS AI/ML ecosystem and service landscape

The AI/ML landscape in 2025 has evolved dramatically from previous generations of tools and technologies. What was once a collection of disparate services has transformed into a cohesive ecosystem designed to address every stage of the machine learning lifecycle. This evolution reflects AWS's strategic response to the growing complexity of AI workloads and the increasing need for specialized infrastructure to support them.

Organizations implementing AI/ML solutions today face fundamentally different challenges than even a few years ago. Models have grown exponentially in size and complexity, data volumes have exploded, and the expectations for real-time, scalable inference have increased. Understanding AWS's current AI/ML portfolio is essential for navigating these challenges effectively.

Core service stack and technical capabilities

AWS now offers three primary service categories for AI/ML workloads, each serving distinct use cases and technical requirements:

SageMaker AI Platform has consolidated into a unified experience with several integrated components:

- SageMaker Unified Studio - Integrated environment for accessing all data and AI tools

- SageMaker HyperPod - Purpose-built infrastructure reducing foundation model training time by up to 40%

- SageMaker Inference - Optimized deployment reducing deployment costs by about 50% and latency by about 20% on average

- SageMaker Clarify - Enhanced capabilities for evaluating foundation models

SageMaker's evolution represents AWS's push toward streamlining the end-to-end ML development process. The platform has transformed from a collection of related tools into a cohesive system that handles the entire ML lifecycle. This consolidation addresses a key challenge in enterprise ML: the fragmentation of tooling and processes across different phases of development.

The Unified Studio experience now serves as a central interface for data scientists and ML engineers, integrating previously separate tools for data preparation, model development, training, and deployment. HyperPod's specialized infrastructure particularly shines when working with large foundation models, where training efficiency gains translate directly to reduced time-to-market and lower costs.

Bedrock has matured into a comprehensive generative AI platform with:

- Foundation Model Access - Over 100 foundation models from industry leaders

- Bedrock Data Automation - Extracting insights from unstructured multimodal content

- Agents for Bedrock - Automated planning and execution of multistep tasks

- Knowledge Bases for Bedrock - Managed RAG capability with GraphRAG support

Bedrock represents AWS's response to the explosion of foundation models and generative AI. The service has evolved from simply providing access to foundation models to offering a complete platform for building generative AI applications. The addition of Agents for Bedrock is particularly significant, as it enables more complex AI applications that can autonomously plan and execute multi-step workflows—a capability that was largely theoretical just a few years ago.

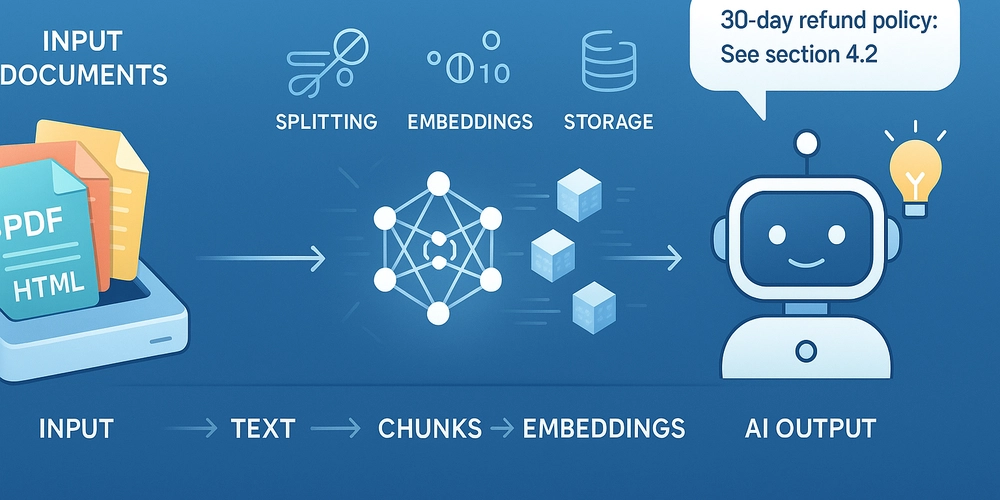

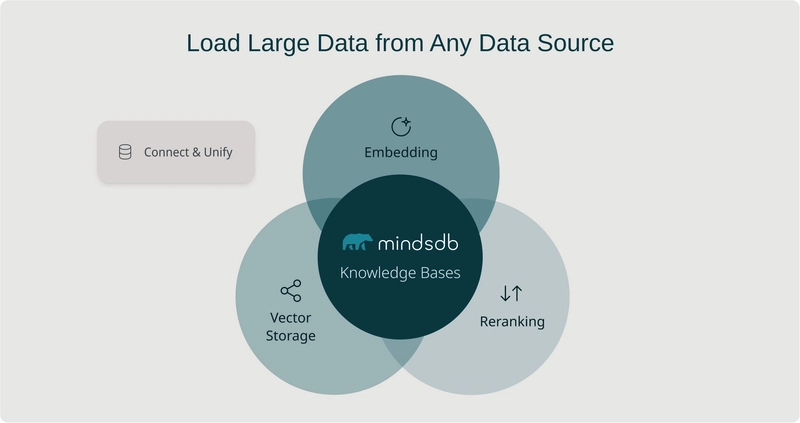

Knowledge Bases for Bedrock has also evolved significantly, now incorporating Graph-based Retrieval Augmented Generation (GraphRAG), which enhances the ability to retrieve contextual information by understanding relationships between data entities. This is crucial for applications that require nuanced understanding of complex domains.

AWS continues offering specialized AI services for specific use cases:

- Amazon Fraud Detector - ML-based fraud identification

- Amazon Comprehend - Natural language processing

- Amazon Textract - Document processing

- Amazon Rekognition - Computer vision

These specialized services provide pre-built ML capabilities for common use cases, allowing organizations to leverage sophisticated AI without deep ML expertise. Each service has been optimized for its specific domain, incorporating best practices and model architectures proven effective for these tasks. For example, Fraud Detector now incorporates temporal behavioral analysis and network effect detection, capabilities that would be extremely complex to build from scratch.

The infrastructure layer now features purpose-built AI acceleration:

- AWS Trainium2 - Up to 4x the performance of first-generation Trainium, optimized for training

- AWS Inferentia2 - Up to 4x higher throughput and up to 10x lower latency than first-generation Inferentia

- EC2 P5/P5e Instances - NVIDIA H100-based (P5) and H200-based (P5e) instances with up to 3,200 Gbps of EFA networking

- AWS UltraClusters - Massively scalable infrastructure for distributed ML training

The evolution of AWS's AI infrastructure layer is perhaps the most significant advancement in the ecosystem. The transition from general-purpose computing to purpose-built accelerators represents a fundamental shift in how AI workloads are executed. Trainium2 and Inferentia2 reflect AWS's investment in custom silicon optimized specifically for ML workloads, providing significant performance and cost advantages over general-purpose GPUs for certain use cases.

The UltraClusters technology addresses one of the most challenging aspects of large-scale AI training: efficient communication between compute nodes. By providing petabit-scale networking and optimized topologies, UltraClusters enable near-linear scaling for distributed training, dramatically reducing the time required to train large models.

2. Real-world implementation: Financial fraud detection system

Building enterprise-scale AI applications requires moving beyond theoretical architecture to practical implementation. Financial fraud detection represents an ideal case study because it encompasses many of the challenges faced by production AI systems: real-time requirements, complex data relationships, high stakes outcomes, regulatory constraints, and the need for continuous adaptation.

The following implementation demonstrates how AWS's AI/ML ecosystem can be applied to create a sophisticated fraud detection system that meets stringent business and technical requirements.

Business requirements and technical constraints

Financial fraud detection presents unique challenges that require a sophisticated technical approach. The business requirements and technical constraints listed below represent real-world considerations that shape architectural decisions and implementation strategies.

GlobalFinance Inc., a multinational financial services corporation processing approximately 1 billion transactions daily (~$100B volume), faces sophisticated attack vectors including deepfake identity verification bypass and coordinated account takeover attempts. Their fraud detection system must:

- Process transactions in real-time (<100ms decision time)

- Scale to handle peak volumes (50,000 TPS)

- Maintain false positive rate <0.1% and false negative rate <0.05%

- Adapt to evolving fraud patterns without manual retraining

- Analyze heterogeneous data from multiple sources (transaction details, historical customer behavior, device information, biometric verification, threat intelligence)

- Comply with stringent regulatory requirements (PCI-DSS, GDPR, AML)

These requirements present significant technical challenges. The sub-100ms latency requirement is particularly demanding, as it must include the complete round trip: receiving the transaction, extracting features, running inference across multiple models, combining results, applying business rules, and returning a decision. This tight timeframe eliminates many traditional approaches to machine learning inference that introduce too much latency.

The scale requirement of 50,000 transactions per second (TPS) demands a highly distributed architecture that can horizontally scale to handle peak loads, which typically occur during holiday seasons or major shopping events. This throughput requirement drives decisions around data partitioning, load balancing, and infrastructure provisioning.
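
As a quick sanity check on these figures, the daily volume can be converted to an average rate and compared with the stated peak. The sketch below uses only the numbers quoted above (about 1 billion transactions per day and a 50,000 TPS peak); the variable names are illustrative.

```python
# Back-of-the-envelope check of the throughput figures quoted above.
SECONDS_PER_DAY = 24 * 60 * 60

daily_transactions = 1_000_000_000  # ~1 billion transactions per day
peak_tps = 50_000                   # stated peak requirement

average_tps = daily_transactions / SECONDS_PER_DAY
peak_to_average = peak_tps / average_tps

print(f"average: {average_tps:,.0f} TPS")            # average: 11,574 TPS
print(f"peak/average ratio: {peak_to_average:.2f}")  # peak/average ratio: 4.32
```

The peak is therefore a little over four times the average rate, which is the gap that horizontal scaling and provisioning headroom have to cover.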

The false positive/negative constraints highlight the business impact of model accuracy. False positives (legitimate transactions incorrectly flagged as fraudulent) directly impact customer experience and revenue, while false negatives (fraudulent transactions incorrectly approved) result in financial losses and potential regulatory issues. Meeting these constraints requires sophisticated model architectures and ensemble approaches.

The need to analyze heterogeneous data from multiple sources introduces complexity in data ingestion, feature engineering, and model design. Some data sources (like transaction details) are structured, while others (like biometric verification) may contain unstructured elements. Integrating these diverse data types requires careful consideration of data pipelines and feature representation.

Modular, event-driven architecture

To address the business requirements and technical constraints, the implementation adopts a modular, event-driven architecture. This approach provides several advantages: components can be developed, deployed, and scaled independently; the system can evolve over time without complete redesign; and the event-driven nature enables real-time processing and rapid response to changing conditions.

The solution implements a multi-layered approach with these components:

- Real-time Transaction Scoring - ML models calculating fraud risk scores

- Behavioral Biometrics Analysis - Monitoring user behavior patterns

- Graph Network Analysis - Identifying suspicious relationships between accounts

- Adaptive Model Layer - Using reinforcement learning to improve detection

- Explainable Rules Engine - Providing human-understandable justifications

- Continuous Learning System - Automatically incorporating new fraud patterns

This architecture separates concerns and allows each component to utilize the most appropriate technologies for its specific function. For example, the Graph Network Analysis component employs specialized graph neural networks optimized for detecting complex relationships, while the Real-time Transaction Scoring component uses gradient-boosted trees for their efficiency and accuracy with tabular data.

The Explainable Rules Engine addresses an often-overlooked aspect of fraud detection: the need to provide clear explanations for why a transaction was flagged. This is crucial for regulatory compliance and for helping fraud analysts make informed decisions when reviewing flagged transactions.

The event-driven nature of the architecture leverages AWS services like Amazon Kinesis for real-time data streaming and AWS Lambda for serverless compute, enabling the system to scale automatically in response to transaction volume and to process events as they occur without batch delays.
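
A minimal sketch of the Lambda side of this pattern follows. It assumes that producers write each transaction to the stream as a UTF-8 JSON document; the function names and the returned summary are illustrative and not part of the original design. Kinesis delivers record payloads to Lambda base64-encoded under `Records[].kinesis.data`.

```python
import base64
import json


def decode_kinesis_records(event):
    """Return the JSON payloads carried by a Kinesis-triggered Lambda event."""
    transactions = []
    for record in event.get("Records", []):
        # Lambda receives each Kinesis payload as a base64-encoded string.
        raw = base64.b64decode(record["kinesis"]["data"])
        transactions.append(json.loads(raw))
    return transactions


def handler(event, context):
    """Hypothetical entry point: decode the batch and report its size."""
    transactions = decode_kinesis_records(event)
    # Downstream scoring would be triggered here; omitted in this sketch.
    return {"received": len(transactions)}
```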

Data ingestion and feature engineering implementation

Data ingestion and feature engineering form the foundation of any ML system. For fraud detection, these components must handle high-volume streaming data, extract meaningful features in real-time, and make those features available to multiple ML models with minimal latency.

The data ingestion layer uses multiple AWS services:

Kinesis stream configuration for transaction ingest:

```json
{
  "StreamName": "global-finance-transaction-stream",
  "ShardCount": 100,
  "RetentionPeriodHours": 48,
  "StreamEncryption": {
    "EncryptionType": "KMS",
    "KeyId": "alias/fraud-detection-key"
  }
}
```

This Kinesis configuration demonstrates several important considerations for high-volume data ingestion. Each shard accepts up to 1,000 records per second or 1 MB per second for writes, whichever limit is reached first. The shard count of 100 therefore provides 100,000 records per second, twice the 50,000 TPS peak, which leaves headroom for unexpected traffic spikes and for uneven distribution of traffic across shards.
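
The shard sizing can be sketched as a small calculation. The per-shard write limits (1,000 records per second and 1 MB per second) are the documented Kinesis limits; the average record size is an assumption, since the source does not state one, and `required_shards` is a hypothetical helper.

```python
import math

# Per-shard write limits for Kinesis Data Streams in provisioned mode.
RECORDS_PER_SHARD_PER_SEC = 1_000
BYTES_PER_SHARD_PER_SEC = 1_000_000  # 1 MB/s, taken conservatively as 10^6 bytes


def required_shards(peak_tps, avg_record_bytes, headroom=1.0):
    """Shards needed so that neither the record nor the byte limit is exceeded."""
    by_records = peak_tps / RECORDS_PER_SHARD_PER_SEC
    by_bytes = peak_tps * avg_record_bytes / BYTES_PER_SHARD_PER_SEC
    return math.ceil(max(by_records, by_bytes) * headroom)
```

With records of about 1 KB or less, 50 shards cover the 50,000 TPS peak, so 100 shards correspond to a headroom factor of 2. At about 2 KB per record the byte limit becomes the binding one and 100 shards leave no margin.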

The retention period of 48 hours enables replay of recent transactions if needed, which is valuable for debugging, model retraining, and recovery from downstream processing failures. Server-side encryption with AWS KMS protects sensitive financial data at rest in the stream, while TLS on the Kinesis endpoints protects it in transit; together these address security best practices and regulatory requirements such as PCI-DSS.

Kinesis was chosen over other streaming options such as self-managed Apache Kafka or Amazon MSK because it integrates directly with other AWS services and offers managed scaling and built-in encryption, reducing operational overhead while meeting the stringent requirements of financial data processing.

Feature engineering leverages SageMaker Feature Store for real-time feature management:

```python
# Feature Store configuration
import sagemaker
from sagemaker.feature_store.feature_definition import (
    FractionalFeatureDefinition,
    IntegralFeatureDefinition,
    StringFeatureDefinition,
)
from sagemaker.feature_store.feature_group import FeatureGroup

sagemaker_session = sagemaker.Session()
feature_group_name = "transaction_features"

# Schema of the feature group. transaction_time is the event-time feature
# and is stored as a string (ISO 8601 timestamp).
feature_definitions = [
    StringFeatureDefinition(feature_name="transaction_id"),
    StringFeatureDefinition(feature_name="customer_id"),
    FractionalFeatureDefinition(feature_name="amount"),
    StringFeatureDefinition(feature_name="merchant_id"),
    StringFeatureDefinition(feature_name="merchant_category"),
    StringFeatureDefinition(feature_name="transaction_time"),
    StringFeatureDefinition(feature_name="device_id"),
    StringFeatureDefinition(feature_name="ip_address"),
    FractionalFeatureDefinition(feature_name="location_lat"),
    FractionalFeatureDefinition(feature_name="location_long"),
    IntegralFeatureDefinition(feature_name="transaction_velocity_1h"),
    FractionalFeatureDefinition(feature_name="amount_velocity_24h"),
]

feature_group = FeatureGroup(
    name=feature_group_name,
    sagemaker_session=sagemaker_session,
    feature_definitions=feature_definitions,
)

# s3_uri is the offline store location; enable_online_store adds the
# low-latency store used at inference time.
feature_group.create(
    s3_uri="s3://globalfinance-feature-store/transaction-features",
    record_identifier_name="transaction_id",
    event_time_feature_name="transaction_time",
    role_arn="arn:aws:iam::123456789012:role/SageMakerFeatureStoreRole",
    enable_online_store=True,
)
```

This Feature Store configuration highlights the critical role of feature engineering in fraud detection. The feature definitions encompass various data types that contribute to fraud prediction:

- Identity features (transaction_id, customer_id, merchant_id) serve as keys for joining related data

- Transaction characteristics (amount, merchant_category) provide basic information about the transaction

- Contextual features (device_id, ip_address, location coordinates) help identify suspicious contexts

- Behavioral features (transaction_velocity_1h, amount_velocity_24h) capture patterns that might indicate fraud

The enable_online_store=True parameter is crucial for meeting the sub-100ms latency requirement. The online store provides low-latency, high-throughput access to the latest feature values, enabling real-time scoring of transactions. Meanwhile, the S3 URI configuration specifies the offline store location, which maintains historical feature values for training and analysis.
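
At inference time, the online store is read through the Feature Store runtime API. The sketch below wraps the `get_record` call of the `sagemaker-featurestore-runtime` boto3 client; the helper name `fetch_online_features` is hypothetical, and the client is passed in as an argument so the function can be exercised without AWS credentials.

```python
def fetch_online_features(runtime_client, transaction_id,
                          feature_group_name="transaction_features"):
    """Read the latest feature values for one record from the online store.

    runtime_client is expected to be
    boto3.client("sagemaker-featurestore-runtime").
    """
    response = runtime_client.get_record(
        FeatureGroupName=feature_group_name,
        RecordIdentifierValueAsString=transaction_id,
    )
    # The response omits "Record" when the identifier is not found.
    return {
        item["FeatureName"]: item["ValueAsString"]
        for item in response.get("Record", [])
    }
```

Values come back as strings, so numeric features such as `amount` need to be converted before they are passed to a model.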

SageMaker Feature Store addresses several key challenges in ML feature engineering:

- Feature consistency: It ensures that the same feature definitions and transformations are used in both training and inference, preventing training-serving skew

- Feature reuse: Different models can access the same features, eliminating redundant computation

- Point-in-time correctness: It maintains feature history, allowing models to be trained on features as they existed at specific points in time

- Low-latency access: The online store serves feature values with low, typically single-digit-millisecond latency, critical for real-time fraud detection

The transaction velocity and amount velocity features exemplify the importance of temporal patterns in fraud detection. These features capture how frequently a customer is transacting and the total transaction amount over different time windows, which can reveal unusual behavior patterns that might indicate fraud. Calculating them requires maintaining state over streaming data. Feature Store stores and serves the resulting values; the windowed aggregation itself typically runs in a stream processor that reads from Kinesis and writes each updated value to the online store.
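
The two velocity features can be illustrated with an in-memory sliding window. This is a sketch only: the class name is hypothetical, timestamps are assumed to be Unix seconds arriving in non-decreasing order per customer, and a production system would keep this state in a stream processor rather than in a Python dictionary.

```python
from collections import deque


class VelocityTracker:
    """Per-customer sliding windows for count (1 h) and amount (24 h)."""

    HOUR = 3600
    DAY = 24 * 3600

    def __init__(self):
        self._events = {}  # customer_id -> deque of (timestamp, amount)

    def update(self, customer_id, timestamp, amount):
        """Record a transaction and return the two velocity features."""
        events = self._events.setdefault(customer_id, deque())
        events.append((timestamp, amount))
        # Drop events that have left the longest window (24 h).
        while events and events[0][0] <= timestamp - self.DAY:
            events.popleft()
        count_1h = sum(1 for ts, _ in events if ts > timestamp - self.HOUR)
        amount_24h = sum(amt for _, amt in events)
        return {
            "transaction_velocity_1h": count_1h,
            "amount_velocity_24h": amount_24h,
        }
```

Each call returns the features as they stand immediately after the new transaction, which is the value a real-time scorer would see.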

Model hierarchy and training pipeline implementation

Financial fraud detection benefits from a multi-model approach that captures different aspects of potentially fraudulent behavior. No single model can effectively identify all fraud patterns, so this implementation employs a hierarchical structure of specialized models, each focusing on different signals or patterns.

The solution employs multiple model types in a hierarchical structure:

- Primary Scoring Model: XGBoost ensemble trained on labeled transaction data

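```python
# XGBoost primary model configuration
import sagemaker
from sagemaker.xgboost.estimator import XGBoost

session = sagemaker.Session()
role = sagemaker.get_execution_role()  # IAM role assumed by the training job
bucket = session.default_bucket()
prefix = "fraud-detection"  # placeholder S3 prefix

# Placeholder S3 locations of the prepared training and validation sets
training_data_uri = f"s3://{bucket}/{prefix}/train"
validation_data_uri = f"s3://{bucket}/{prefix}/validation"

hyperparameters = {
    "max_depth": 6,
    "eta": 0.2,
    "gamma": 4,
    "min_child_weight": 6,
    "subsample": 0.8,
    "objective": "binary:logistic",
    "num_round": 100,
    "verbosity": 1,
}

xgb_estimator = XGBoost(
    entry_point="fraud_detection_training.py",
    hyperparameters=hyperparameters,
    role=role,
    instance_count=4,
    instance_type="ml.c5.4xlarge",
    # Must match a published SageMaker XGBoost container version.
    framework_version="1.7-1",
    output_path=f"s3://{bucket}/{prefix}/output",
)

# Each channel name maps to an S3 prefix made available to the training script.
xgb_estimator.fit({
    "train": training_data_uri,
    "validation": validation_data_uri,
})
```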

The primary scoring model uses XGBoost, a gradient boosting framework that has proven highly effective for fraud detection. XGBoost offers several advantages for this use case:

- It can compensate for the class imbalance inherent in fraud data (where legitimate transactions far outnumber fraudulent ones) through instance weighting, for example the scale_pos_weight parameter

- It natively handles missing values and works well on the tabular features common in transaction data

- It provides good interpretability through feature importance rankings

- It delivers high accuracy with relatively small model size, enabling fast inference

The hyperparameters chosen reflect best practices for fraud detection:

- `max_depth: 6` limits tree depth, which prevents overfitting while still capturing complex interactions between features

- `eta: 0.2` (learning rate) balances training speed and model quality

- `gamma: 4` sets the minimum loss reduction required for a split, reducing overfitting

- `min_child_weight: 6` requires a minimum sum of instance weight in each leaf, so splits are not fitted to a handful of rare fraud cases

- `subsample: 0.8` trains each tree on a random 80% of the rows, introducing randomness that prevents overfitting

- `objective: binary:logistic` outputs probabilities suitable for fraud scoring

The configuration uses 4 instances for distributed training, reflecting the size of the training dataset (typically billions of rows for a large financial institution). The ml.c5.4xlarge instance type is chosen for its balance of CPU power and memory, which are more important than GPU acceleration for tree-based models like XGBoost.
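
The hyperparameters shown for the primary model do not include an explicit class-imbalance setting. A common starting point in XGBoost is to set `scale_pos_weight` to the ratio of negative to positive examples. The sketch below computes that ratio; the helper name and the toy labels are hypothetical, and the resulting value would normally be tuned against validation data.

```python
def scale_pos_weight(labels):
    """Ratio of negative to positive examples, a usual XGBoost starting point."""
    positives = sum(1 for y in labels if y == 1)
    negatives = len(labels) - positives
    if positives == 0:
        raise ValueError("no positive (fraud) examples in labels")
    return negatives / positives


# Hypothetical toy labels: 2 fraud cases among 1,000 transactions.
labels = [1, 1] + [0] * 998
hyperparameters_with_imbalance = {
    "objective": "binary:logistic",
    "scale_pos_weight": scale_pos_weight(labels),  # 499.0
}
```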

- Specialized Models:

  - Graph Neural Network (GNN): Heterogeneous graph model using DGL

  - Behavioral Biometrics Model: LSTM network analyzing interaction patterns

  - NLP Model: Claude 3.5 fine-tuned for transaction descriptions

  - Anomaly Detection: Deep autoencoder for unusual patterns

  - Device Fingerprinting: CNN for device profile analysis

These specialized models address different aspects of fraud detection:

The Graph Neural Network analyzes relationships between entities (customers, merchants, devices, IP addresses) to identify suspicious patterns that aren't visible when examining transactions in isolation. For example, it can detect when multiple accounts are controlled by the same fraudster based on shared connections or behaviors. The use of the Deep Graph Library (DGL) enables efficient processing of heterogeneous graphs where nodes and edges have different types and attributes.
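
The idea of linking accounts through shared entities can be shown with a much simpler graph technique than a GNN. The sketch below is not the DGL model described above: it groups accounts into connected components with a union-find structure, using hypothetical account, device, and IP identifiers, and is meant only to show what "shared connections" means in graph terms.

```python
from collections import defaultdict


def linked_account_groups(links):
    """Group accounts that share any attribute (device, IP address, ...).

    links: iterable of (account_id, attribute) pairs, e.g. ("acct-1", "dev-A").
    Returns the groups that contain more than one account.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    # Tag the two node types so an account ID cannot collide with an
    # attribute value.
    for account, attribute in links:
        union(("account", account), ("attr", attribute))

    groups = defaultdict(set)
    for node in list(parent):
        if node[0] == "account":
            groups[find(node)].add(node[1])
    return [group for group in groups.values() if len(group) > 1]
```

A GNN goes further by learning from node and edge attributes, but the components found this way are the kind of structure it operates on.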

The Behavioral Biometrics Model examines patterns in how users interact with their devices and applications. For example, it can analyze typing rhythm, mouse movements, or touch gestures to distinguish between legitimate users and impostors. The Long Short-Term Memory (LSTM) architecture is particularly well-suited for this task because it can capture temporal patterns in sequential data.

The NLP Model leverages Claude 3.5, a large language model, to analyze transaction descriptions and other text data. This model can identify unusual or suspicious language patterns that might indicate fraud, such as inconsistencies between the description and the transaction amount or merchant category. Fine-tuning Claude 3.5 for this specific task ensures that it focuses on relevant patterns rather than general language understanding.

The Anomaly Detection model uses a deep autoencoder to identify transactions that deviate significantly from normal patterns. By learning to compress and reconstruct "normal" transactions, the autoencoder can identify anomalies as transactions that have high reconstruction error. This approach is particularly valuable for detecting novel fraud patterns that haven't been seen before.
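
The scoring step of this approach can be sketched independently of the network itself. In the code below the trained autoencoder is represented by a `reconstruct` callable supplied by the caller; that callable, the helper names, and the quantile-based threshold are assumptions made for illustration.

```python
def reconstruction_error(original, reconstructed):
    """Mean squared error between a feature vector and its reconstruction."""
    return sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)


def fit_threshold(normal_errors, quantile=0.99):
    """Pick the error level that `quantile` of known-normal traffic stays under."""
    ordered = sorted(normal_errors)
    index = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[index]


def is_anomalous(vector, reconstruct, threshold):
    """Flag a transaction whose reconstruction error exceeds the threshold."""
    return reconstruction_error(vector, reconstruct(vector)) > threshold
```

The threshold is fitted on errors measured over transactions believed to be legitimate, so raising the quantile trades fewer false positives for more missed anomalies.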

The Device Fingerprinting model uses a Convolutional Neural Network (CNN) to analyze device profiles, identifying suspicious devices or potential device spoofing. This model can detect when a device's characteristics don't match expected patterns or when a fraudster is attempting to mimic a legitimate user's device.

- Meta-Model Ensemble: Combines outputs using a stacking approach

The meta-model ensemble integrates outputs from all models to make a final fraud determination. The stacking approach treats each model's output as a feature, then trains a higher-level model to optimally combine these features based on historical performance. This approach allows the system to leverage the strengths of each model while mitigating their individual weaknesses.

For example, the XGBoost model might excel at identifying fraud based on transaction characteristics, while the GNN might be better at detecting fraud rings, and the Behavioral Biometrics model might excel at identifying account takeovers. The meta-model learns which models are most reliable in different contexts and weights their outputs accordingly.
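
A stacking meta-model can be as simple as a logistic regression over the base models' scores. The sketch below shows only the scoring side; the weights and bias are hypothetical placeholders, whereas in a real stacking setup they would be learned from held-out predictions of the base models.

```python
import math

# Hypothetical coefficients; in practice they are fitted on held-out
# predictions from the base models.
META_WEIGHTS = {"xgboost": 2.5, "gnn": 1.5, "behavioral": 1.0}
META_BIAS = -3.0


def ensemble_score(base_scores):
    """Combine base-model probabilities into one fraud score in (0, 1)."""
    z = META_BIAS + sum(
        META_WEIGHTS[name] * base_scores[name] for name in META_WEIGHTS
    )
    return 1.0 / (1.0 + math.exp(-z))
```

A larger weight means the meta-model relies more on that base model; context-dependent weighting, as described above, would require a meta-model that also takes transaction context as input.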

Real-time inference pipeline implementation

Converting ML models into a production system requires careful orchestration of data flow, model invocation, and decision logic. The inference pipeline must handle high throughput while maintaining low latency, ensuring that fraud decisions are both accurate and timely.

The inference workflow uses AWS Step Functions for orchestration:

Step Functions state machine definition:

```json
{
  "Comment": "Fraud Detection Workflow",
  "StartAt": "ExtractTransactionData",
  "States": {
    "ExtractTransactionData": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:ExtractTransactionDataFunction",
      "Next": "GetFeatures"
    },
    "GetFeatures": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:GetFeaturesFunction",
      "Next": "ParallelModelInference"
    },
    "ParallelModelInference": {
      "Type": "Parallel",
      "Branches": [
        {
          "StartAt": "PrimaryModelInference",
          "States": {
            "PrimaryModelInference": {
              "Type": "Task",
              "Resource": "arn:aws:lambda:region:account:function:InvokePrimaryModelFunction",
              "End": true
            }
          }
        },
        {
          "StartAt": "GNNModelInference",
          "States": {
            "GNNModelInference": {
              "Type": "Task",
              "Resource": "arn:aws:lambda:region:account:function:InvokeGNNModelFunction",
              "End": true
            }
          }
        },
        {
          "StartAt": "BehavioralModelInference",
          "States": {
            "BehavioralModelInference": {
              "Type": "Task",
              "Resource": "arn:aws:lambda:region:account:function:InvokeBehavioralModelFunction",
              "End": true
            }
          }
        }
      ],
      "Next": "EnsembleResults"
    },
    "EnsembleResults": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:EnsembleResultsFunction",
      "Next": "ApplyRules"
    },
    "ApplyRules": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:ApplyRulesFunction",
      "Next": "DetermineAction"
    },
    "DetermineAction": {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.fraudScore",
          "NumericGreaterThan": 0.8,
          "Next": "RejectTransaction"
        },
        {
          "Variable": "$.fraudScore",
          "NumericGreaterThan": 0.5,
          "Next": "FlagForReview"
        }
      ],
      "Default": "ApproveTransaction"
    },
    "RejectTransaction": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:RejectTransactionFunction",
      "Next": "PublishResult"
    },
    "FlagForReview": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:FlagForReviewFunction",
      "Next": "PublishResult"
    },
    "ApproveTransaction": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:ApproveTransactionFunction",
      "Next": "PublishResult"
    },
    "PublishResult": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:region:account:function:PublishResultFunction",
      "End": true
    }
  }
}
```

This AWS Step Functions state machine orchestrates the entire fraud detection workflow, from receiving a transaction to making and communicating a decision. The workflow design reflects several key architectural considerations:

Modular Design: Each step is implemented as a separate Lambda function, allowing independent development, testing, and scaling. This modular approach also enables gradual evolution of the system, as individual components can be updated without affecting the overall workflow.

Parallel Processing: The `ParallelModelInference` state executes multiple model inference tasks simultaneously, reducing the overall latency compared to sequential execution. This parallelism is crucial for meeting the sub-100ms latency requirement while still leveraging multiple specialized models.

Workflow Visibility: Using Step Functions provides clear visibility into the workflow execution, facilitating monitoring, debugging, and compliance auditing. Each execution is tracked with its own execution ID, making it possible to trace the decision-making process for any transaction.

Error Handling: While not explicitly shown in this excerpt, Step Functions supports sophisticated error handling, including retries with exponential backoff and catch states that can implement fallback logic. This robustness is essential for a mission-critical system like fraud detection.
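
The retry and catch behavior mentioned here can be sketched by attaching `Retry` and `Catch` fields to a Task state. The field names come from the Amazon States Language; the helper `with_error_handling`, the retry values, and the `GNNFallback` state are hypothetical, and a fallback state with that name would have to be added to the same branch of the workflow.

```python
import json


def with_error_handling(task_state, fallback_state):
    """Return a copy of a Task state with retry and catch rules attached."""
    hardened = dict(task_state)
    hardened["Retry"] = [
        {
            "ErrorEquals": ["States.TaskFailed", "States.Timeout"],
            "IntervalSeconds": 1,
            "MaxAttempts": 2,
            "BackoffRate": 2.0,  # wait 1 s, then 2 s
        }
    ]
    hardened["Catch"] = [
        {
            "ErrorEquals": ["States.ALL"],
            "ResultPath": "$.error",  # keep the original input, add error info
            "Next": fallback_state,
        }
    ]
    return hardened


gnn_state = {
    "Type": "Task",
    "Resource": "arn:aws:lambda:region:account:function:InvokeGNNModelFunction",
    "End": True,
}
print(json.dumps(with_error_handling(gnn_state, "GNNFallback"), indent=2))
```

Because retry intervals are expressed in whole seconds, retries do not fit inside a 100 ms decision budget. For the synchronous scoring path the catch-and-fallback rule is likely the more useful of the two.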

Decision Logic: The `DetermineAction` state implements a simple decision tree based on the fraud score, with different thresholds triggering different actions. This separation of decision logic from model inference allows business rules to be adjusted without changing the underlying models.
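
The same decision logic can be written as a plain function, which is convenient for unit-testing threshold changes before they are deployed. The function and constant names are illustrative; the thresholds and state names are those of the state machine definition.

```python
REJECT_THRESHOLD = 0.8
REVIEW_THRESHOLD = 0.5


def determine_action(fraud_score):
    """Mirror the Choice state: rules are evaluated in order, first match wins."""
    if fraud_score > REJECT_THRESHOLD:
        return "RejectTransaction"
    if fraud_score > REVIEW_THRESHOLD:
        return "FlagForReview"
    return "ApproveTransaction"
```

Both comparisons are strict, matching `NumericGreaterThan`: a score of exactly 0.8 is flagged for review rather than rejected, and a score of exactly 0.5 is approved.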

The workflow processes a transaction through these key stages:

Data Extraction: The ExtractTransactionData step parses the incoming transaction data, normalizing formats and validating inputs.

Feature Retrieval: The GetFeatures step retrieves current and historical features from the Feature Store, including both transaction-specific features and entity-level features (customer, merchant, device).

Model Inference: Multiple models run in parallel to assess different aspects of fraud risk:

- The primary XGBoost model evaluates transaction characteristics

- The GNN model analyzes entity relationships

- The behavioral model examines user interaction patterns

Result Combination: The EnsembleResults step combines the outputs from individual models using the meta-model, generating a unified fraud score and confidence level.

Rule Application: The ApplyRules step applies business rules and regulatory constraints that might override or adjust the model-based decision. For example, certain high-risk merchant categories might require additional scrutiny regardless of model scores.

Decision and Action: Based on the final fraud score, the workflow branches to either approve the transaction, flag it for review, or reject it outright. The thresholds (0.5 and 0.8 in this example) are calibrated based on the desired balance between fraud prevention and false positives.

Result Publication: Finally, the decision is published to downstream systems, including the transaction processing system, customer notification services, and fraud analytics platforms.
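
The branching in the Decision and Action stage can be sketched as a small function. This assumes that scores below 0.5 are approved, scores from 0.5 up to 0.8 are flagged, and scores of 0.8 or higher are rejected; the boundary handling and the RejectTransaction and FlagForReview names are assumptions, since only the ApproveTransaction state name is confirmed by the excerpt.

```python
def determine_action(fraud_score, review_threshold=0.5, reject_threshold=0.8):
    """Map an ensemble fraud score in [0, 1] to a workflow branch."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be between 0 and 1")
    if fraud_score >= reject_threshold:
        return "RejectTransaction"
    if fraud_score >= review_threshold:
        return "FlagForReview"
    return "ApproveTransaction"
```

Keeping the thresholds as parameters mirrors the point made earlier: business rules can change without retraining or redeploying any model.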

For high-volume transaction processing, this Step Functions workflow is typically initiated by a Lambda function that processes events from the Kinesis data stream. The Lambda function can batch transactions for efficiency while ensuring that high-risk transactions are prioritized.
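
A minimal sketch of the batching step in such a Lambda function is shown below. It decodes the base64-encoded Kinesis records and moves high-value transactions to the front of the batch. Using an amount field as the priority signal, and the 10,000 cut-off, are assumptions for illustration; starting the Step Functions execution is left out.

```python
import base64
import json


def decode_batch(event, priority_amount=10_000):
    """Decode a Kinesis-triggered Lambda event and put large transactions first."""
    transactions = [
        json.loads(base64.b64decode(record["kinesis"]["data"]))
        for record in event["Records"]
    ]
    # Stable sort: high-value transactions first, arrival order otherwise preserved
    return sorted(transactions, key=lambda t: t["amount"] < priority_amount)
```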

Continuous learning implementation

Fraud patterns evolve rapidly as fraudsters adapt to detection methods. A static fraud detection system would quickly become ineffective, making continuous learning capabilities essential for maintaining detection accuracy over time.

The solution ensures models remain effective against evolving fraud patterns:

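# SageMaker Pipeline for Continuous Model Retraining

from sagemaker.processing import ProcessingInput, ProcessingOutput

from sagemaker.workflow.pipeline import Pipeline

from sagemaker.workflow.steps import ProcessingStep, TrainingStep, CreateModelStep

from sagemaker.workflow.step_collections import RegisterModel

from sagemaker.workflow.condition_step import ConditionStep

from sagemaker.workflow.conditions import ConditionGreaterThanOrEqualTo

from sagemaker.workflow.functions import JsonGet

from sagemaker.workflow.properties import PropertyFile

# sklearn_processor, xgb_estimator, model, model_inputs, input_data_uri, and sagemaker_session are assumed to be defined earlier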

# Step 1: Data preprocessing

preprocessing_step = ProcessingStep(

name="PreprocessTrainingData",

processor=sklearn_processor,

inputs=[

ProcessingInput(

source=input_data_uri,

destination="/opt/ml/processing/input"

)

],

outputs=[

ProcessingOutput(output_name="train", source="/opt/ml/processing/train"),

ProcessingOutput(output_name="validation", source="/opt/ml/processing/validation"),

ProcessingOutput(output_name="test", source="/opt/ml/processing/test")

],

code="preprocess.py"

)

# Step 2: Model training

training_step = TrainingStep(

name="TrainFraudDetectionModel",

estimator=xgb_estimator,

inputs={

"train": preprocessing_step.properties.ProcessingOutputConfig.Outputs["train"].S3Output.S3Uri,

"validation": preprocessing_step.properties.ProcessingOutputConfig.Outputs["validation"].S3Output.S3Uri

}

)

# Step 3: Model evaluation

# The property file exposes evaluation.json to the condition step, so it must be declared before the evaluation step and attached to it

evaluation_report = PropertyFile(

name="EvaluationReport",

output_name="evaluation",

path="evaluation.json"

)

evaluation_step = ProcessingStep(

name="EvaluateModel",

processor=sklearn_processor,

inputs=[

ProcessingInput(

source=training_step.properties.ModelArtifacts.S3ModelArtifacts,

destination="/opt/ml/processing/model"

),

ProcessingInput(

source=preprocessing_step.properties.ProcessingOutputConfig.Outputs["test"].S3Output.S3Uri,

destination="/opt/ml/processing/test"

)

],

outputs=[

ProcessingOutput(output_name="evaluation", source="/opt/ml/processing/evaluation"),

],

code="evaluate.py",

property_files=[evaluation_report]

)

# Step 4: Register model only if accuracy meets threshold

register_step = ConditionStep(

name="RegisterNewModel",

conditions=[ConditionGreaterThanOrEqualTo(

left=JsonGet(

step_name=evaluation_step.name,

property_file=evaluation_report,

json_path="classification_metrics.accuracy.value"

),

right=0.8

)],

if_steps=[

CreateModelStep(

name="CreateModel",

model=model,

inputs=model_inputs

),

# Model registration step

RegisterModel(

name="RegisterModel",

estimator=xgb_estimator,

model_data=training_step.properties.ModelArtifacts.S3ModelArtifacts,

content_types=["application/json"],

response_types=["application/json"],

inference_instances=["ml.c5.xlarge", "ml.m5.xlarge"],

transform_instances=["ml.m5.xlarge"],

model_package_group_name="FraudDetectionModels"

)

],

else_steps=[]

)

# Create the pipeline; call pipeline.upsert(role_arn=...) and pipeline.start() to deploy and run it

pipeline = Pipeline(

name="FraudDetectionContinuousLearningPipeline",

steps=[preprocessing_step, training_step, evaluation_step, register_step],

sagemaker_session=sagemaker_session

)

This SageMaker Pipeline implementation demonstrates a systematic approach to continuous model improvement, addressing several key challenges in maintaining effective fraud detection over time:

Automated Retraining: The pipeline automates the entire process from data preprocessing to model registration, enabling frequent retraining without manual intervention. This automation is crucial for keeping pace with evolving fraud patterns, which can change on a daily or even hourly basis.

Data Quality Control: The preprocessing step not only prepares data for training but can also implement quality checks to ensure that the training data is valid and representative. This might include checks for class imbalance, feature distribution shifts, or data integrity issues.

Performance Validation: The pipeline includes a rigorous evaluation step that assesses the new model's performance against a held-out test set. This validation ensures that model updates actually improve detection capabilities rather than degrading them.

Conditional Deployment: The condition step ensures that only models that meet or exceed a performance threshold (80% accuracy in this example) are registered for deployment. This safeguard prevents the deployment of underperforming models that could increase false positives or miss fraudulent transactions.

Model Registry Integration: The RegisterModel step integrates with the SageMaker Model Registry, maintaining a versioned history of models along with their metadata and performance metrics. This registry facilitates model governance, auditing, and rollback if needed.

In practice, this pipeline would be triggered based on multiple conditions:

- Scheduled Retraining: Regular retraining cycles (e.g., daily or weekly) to incorporate recent data

- Performance Degradation: Automated triggers when the current model's performance falls below thresholds

- Data Drift Detection: Triggers when significant shifts in feature distributions are detected

- Manual Initiation: For emergency updates in response to new fraud patterns
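
The data drift trigger can be illustrated with the Population Stability Index (PSI), one common drift statistic. The source does not say which statistic the system uses, so PSI, the ten equal-width bins, and the 0.2 retraining threshold (a widely used rule of thumb) are assumptions in this sketch.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(expected, actual, threshold=0.2):
    """Return True when the feature distribution has shifted past the threshold."""
    return population_stability_index(expected, actual) > threshold
```

In a pipeline trigger, a check like this would run per feature on a schedule, starting the retraining pipeline when any monitored feature crosses the threshold.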

The continuous learning system also incorporates techniques beyond basic retraining:

- Active Learning: Prioritizing ambiguous or borderline cases for expert review, rapidly improving the model in areas of uncertainty

- Online Learning: Incremental model updates based on streaming data, particularly for adapting to sudden changes in fraud patterns

- Champion-Challenger Testing: Running multiple model variants simultaneously and gradually shifting traffic based on performance

These continuous learning capabilities ensure that the fraud detection system remains effective even as fraudsters adapt their tactics, new types of fraud emerge, and legitimate transaction patterns evolve over time.

3. Advanced hardware acceleration and optimization techniques

The performance and cost-effectiveness of ML workloads depend significantly on the underlying hardware infrastructure. AWS offers a range of specialized hardware accelerators optimized for different ML tasks, enabling organizations to balance performance, cost, and energy efficiency based on their specific requirements.

Selecting the appropriate hardware for each component of an ML workflow is crucial for meeting latency requirements while controlling costs. Different phases of the ML lifecycle—training, fine-tuning, and inference—have different computational characteristics and can benefit from different types of accelerators.

Hardware acceleration selection and configuration

AWS offers multiple acceleration options for different ML workload phases:

Inferentia2 (Inference Optimization)

- Performance Metrics: Up to 4x higher throughput and up to 10x lower latency compared to first-generation Inferentia

- Technical Specifications: Each chip supports up to 190 TFLOPS of FP16 with 32GB HBM memory

- Optimal Instance Configuration: Inf2.48xlarge with 12 Inferentia2 accelerators delivers 2.3 petaflops

- Unique Feature: NeuronLink for faster distributed inference with direct data flow between accelerators

Inferentia2 represents a significant advancement in inference acceleration, designed specifically to address the demands of modern deep learning models. The architecture features specialized circuits for common neural network operations, dramatically reducing the computational overhead compared to general-purpose processors.

The NeuronLink technology is particularly valuable for large model inference, where model parallelism is necessary. By enabling direct chip-to-chip communication without going through host memory, NeuronLink reduces latency and increases throughput for models that exceed the memory capacity of a single accelerator—a common situation with modern foundation models.

For fraud detection applications, Inferentia2 is ideal for deploying computationally intensive components like the graph neural networks and behavioral biometrics models, where low-latency inference is critical to meeting the overall sub-100ms response time requirement.
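
The instance-level figures quoted above follow directly from the per-chip specifications, as this short calculation shows. The sizing helper is a rough sketch: the 20% memory overhead factor and the FP16 default are assumptions, and real deployments also need memory for activations and runtime buffers.

```python
import math

CHIP_FP16_TFLOPS = 190        # per Inferentia2 chip, from the specification above
CHIP_HBM_GB = 32              # per Inferentia2 chip
CHIPS_PER_INF2_48XLARGE = 12

# 12 chips x 190 TFLOPS = 2,280 TFLOPS, quoted above as 2.3 petaflops
instance_pflops = CHIP_FP16_TFLOPS * CHIPS_PER_INF2_48XLARGE / 1000
instance_hbm_gb = CHIP_HBM_GB * CHIPS_PER_INF2_48XLARGE


def min_chips_for_model(param_count, bytes_per_param=2, overhead=1.2):
    """Rough lower bound on chips needed just to hold the model weights."""
    needed_gb = param_count * bytes_per_param * overhead / 1e9
    return max(1, math.ceil(needed_gb / CHIP_HBM_GB))
```

Any model whose lower bound exceeds one chip must be split across accelerators, which is the case where NeuronLink matters.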

Trainium2 (Training Optimization)

- Performance Metrics: Up to 4x the performance of first-generation Trainium

- Technical Specifications: Trn2 instances feature 16 Trainium2 chips with NeuronLink, delivering 20.8 petaflops

- Advanced Features: Hardware optimizations for 4x sparsity (16:4), micro-scaling, stochastic rounding

- Cost Advantage: 30-40% better price performance compared to GPU-based EC2 P5 instances

Trainium2 is optimized specifically for training deep learning models, with architectural features that accelerate common training operations like backpropagation. The support for 4x sparsity (where only 4 out of every 16 weights are non-zero) is particularly beneficial for large language models and other neural networks that can leverage sparse representations without significant accuracy loss.

The micro-scaling feature enables fine-grained control over the precision used for different parts of the model, reducing memory requirements and computational overhead. Stochastic rounding improves training stability for low-precision arithmetic, enabling the use of lower precision formats (like FP8) without compromising model convergence.

For fraud detection, Trainium2 is well-suited for training the specialized neural network models, particularly when working with large datasets that benefit from distributed training.
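
To make the 16:4 sparsity pattern concrete, the sketch below prunes a flat weight list so that each group of 16 keeps only 4 non-zero values. Choosing the survivors by largest magnitude is an assumption made for illustration; the hardware accelerates the resulting pattern but does not dictate how the weights are selected.

```python
def prune_16_4(weights, group=16, keep=4):
    """Zero all but the `keep` largest-magnitude weights in each group of `group`."""
    if len(weights) % group:
        raise ValueError("weight count must be a multiple of the group size")
    pruned = []
    for start in range(0, len(weights), group):
        block = weights[start:start + group]
        # Indices of the largest-magnitude entries in this block
        top = sorted(range(group), key=lambda i: abs(block[i]), reverse=True)[:keep]
        keep_set = set(top)
        pruned.extend(w if i in keep_set else 0.0 for i, w in enumerate(block))
    return pruned
```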

| Workload Type | Recommended Instance | Key Specifications | Optimal Usage Pattern |

|---|---|---|---|

| Large-scale LLM Training | P5e/P5en | NVIDIA H200 GPUs, petabit-scale networking | Distributed training of foundation models |

| Mid-sized Training | G5 | NVIDIA A10G GPUs | More flexible sizing for varied workloads |

| Cost-Optimized Training | Trn1/Trn2 | AWS Trainium chips | Up to 50% cost-to-train savings |

| High-Performance Inference | Inf2 | AWS Inferentia2 chips | Lowest inference cost with high throughput |

| Flexible Inference | G4dn | NVIDIA T4 GPUs | General-purpose ML inference |

This table provides a framework for selecting the appropriate instance type based on workload characteristics. The choice between NVIDIA-based instances (P5e, G5, G4dn) and AWS custom silicon (Trn1/Trn2, Inf2) depends on several factors:

- Model Compatibility: Some models or frameworks may have optimizations specific to certain hardware platforms

- Development Stage: Early experimentation often benefits from the broader ecosystem support of NVIDIA GPUs

- Operational Requirements: Custom silicon typically requires specialized tooling (AWS Neuron SDK)

- Cost Sensitivity: Custom silicon generally offers better price-performance for compatible workloads

For fraud detection systems, a hybrid approach often works best: using NVIDIA-based instances for initial development and experimentation, then migrating production workloads to Inferentia2 for inference and Trainium2 for regular retraining once the models are stable.

Distributed training architectures

As model sizes grow and training datasets expand, distributed training becomes essential for maintaining reasonable training times. Modern distributed training goes beyond simple data parallelism to encompass sophisticated techniques for splitting models across multiple devices while maintaining training efficiency.

For large-scale model training, implement these advanced techniques:

Tensor Parallelism Implementation

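# Schematic configuration for tensor parallelism with the AWS Neuron SDK

# NOTE: parallel_config() and parallelize() are illustrative names kept from the original example; consult the Neuron SDK documentation for the distributed-training API in your release

import torch_neuron as neuron

neuron_config = {

    "tensor_parallel_degree": 8,

    "pipeline_parallel_degree": 1,

    "microbatch_count": 4,

    "optimize_memory_usage": True

}

# Apply configuration to model training

with neuron.parallel_config(**neuron_config):

    model_parallel = neuron.parallelize(model)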

Tensor parallelism splits individual tensors (model weights, activations, gradients) across multiple devices, enabling training of models that are too large to fit on a single accelerator. This approach is particularly valuable for large language models and other architectures with massive parameter counts.

The configuration shown above implements tensor parallelism across 8 devices (likely Trainium2 accelerators within a Trn2 instance), with a microbatch count of 4 to optimize throughput. The optimize_memory_usage parameter enables memory-saving techniques like activation checkpointing, which trades computation for memory by recomputing certain activations during the backward pass rather than storing them.
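
The idea behind tensor parallelism can be shown without any accelerator. In this framework-free sketch, a weight matrix is split column-wise across a number of simulated devices, each computes its slice of the output, and the slices are concatenated. The function names are hypothetical, and real implementations replace the concatenation with an all-gather collective.

```python
def matmul(x, w):
    """Multiply a (rows x k) matrix by a (k x cols) matrix, both as nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w, degree):
    """Give each of `degree` devices an equal slice of the weight matrix columns."""
    cols = len(w[0])
    if cols % degree:
        raise ValueError("column count must be divisible by the parallel degree")
    step = cols // degree
    return [[row[d * step:(d + 1) * step] for row in w] for d in range(degree)]


def tensor_parallel_matmul(x, w, degree):
    # Each shard computes its slice of the output independently ...
    partials = [matmul(x, shard) for shard in split_columns(w, degree)]
    # ... and the slices are concatenated along the column axis (an all-gather)
    return [sum((p[r] for p in partials), []) for r in range(len(x))]
```

Because every device holds only 1/degree of the weight columns, the per-device memory for that layer falls by the same factor while the result is unchanged.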

Tensor parallelism complements other distributed training strategies:

Fully Sharded Data Parallel (FSDP)

- Shards model parameters, gradients, and optimizer states across workers

- Reduces memory footprint by up to 4x compared to standard distributed data parallel

- Implementation best practice: use activation checkpointing with FSDP

FSDP extends traditional data parallelism (where each worker has a complete copy of the model) by sharding model components across workers. During the forward and backward passes, parameters are communicated just-in-time, reducing memory requirements at the cost of additional communication overhead.

This technique is crucial for training very large models, as it allows the aggregate memory of multiple devices to be used effectively. In the context of fraud detection, FSDP is valuable when training complex models like graph neural networks on large transaction graphs, where memory consumption can become a bottleneck.
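
The memory effect of sharding can be estimated with simple arithmetic. The sketch assumes mixed-precision training with Adam at 16 bytes per parameter (2 for FP16 weights, 2 for FP16 gradients, 12 for FP32 master weights, momentum, and variance) and ignores activations, so it is an approximation rather than a measurement.

```python
def per_worker_state_gb(param_count, workers, sharded, bytes_per_param=16):
    """Memory for parameters, gradients, and optimizer state on one worker, in GB."""
    total_gb = param_count * bytes_per_param / 1e9
    # Standard data parallel keeps a full copy per worker; full sharding divides it
    return total_gb / workers if sharded else total_gb
```

For a 1-billion-parameter model on 8 workers, this gives 16 GB per worker without sharding and 2 GB with full sharding, before activation memory is counted.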

AWS-Optimized Infrastructure

- EC2 UltraCluster Architecture with petabit-scale non-blocking networking

- SageMaker HyperPod with automated fault detection, diagnosis, and recovery

- EFA network interfaces providing 400 Gbps or more of bandwidth per instance with low, microsecond-scale latency

The network infrastructure connecting compute nodes is as important as the accelerators themselves for distributed training performance. AWS's UltraCluster architecture provides non-blocking, full-bisection bandwidth between nodes, minimizing communication bottlenecks during distributed training. This is essential for scaling to hundreds or thousands of accelerators.

SageMaker HyperPod simplifies the operation of large-scale training clusters, providing automated fault handling and cluster management. This operational efficiency is particularly valuable for long-running training jobs, where hardware failures become increasingly probable as the scale increases.

Elastic Fabric Adapter (EFA) provides high-throughput, low-latency networking optimized for ML workloads. Its low and consistent latency is crucial for reducing the communication overhead in distributed training, particularly for techniques like tensor parallelism and FSDP that require frequent parameter synchronization.

Hyperparameter optimization techniques

Hyperparameter optimization (HPO) is crucial for achieving optimal model performance, but can be computationally expensive and time-consuming. Advanced HPO techniques reduce the resources required while improving the quality of the resulting models.

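# HPO configuration: one optimized objective, with latency and memory tracked as additional metrics

from sagemaker.tuner import ContinuousParameter, HyperparameterTuner, IntegerParameter, WarmStartConfig, WarmStartTypes

hyperparameter_tuner = HyperparameterTuner(

    estimator=estimator,

    objective_metric_name='validation:accuracy',

    objective_type='Maximize',

    # Search ranges are required; these values are illustrative assumptions

    hyperparameter_ranges={

        'eta': ContinuousParameter(0.01, 0.3),

        'max_depth': IntegerParameter(3, 10)

    },

    metric_definitions=[

        {'Name': 'inference_latency', 'Regex': 'Inference latency: ([0-9.]+)'},

        {'Name': 'memory_usage', 'Regex': 'Memory usage: ([0-9.]+)'}

    ],

    strategy='Bayesian',

    early_stopping_type='Auto',

    max_jobs=50,

    max_parallel_jobs=5,

    # Warm start is passed only through warm_start_config

    warm_start_config=WarmStartConfig(

        warm_start_type=WarmStartTypes.TRANSFER_LEARNING,

        parents={'parent-tuning-job-name'}

    )

)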

This HPO configuration demonstrates several advanced techniques:

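Multi-objective Optimization: SageMaker automatic model tuning optimizes a single objective metric (validation accuracy here), while the additional metric definitions record inference latency and memory usage for every training job. This matters for production ML systems, where performance constraints are as important as model quality: the tracked metrics are used after tuning to discard configurations that exceed latency or memory budgets. A search that trades the metrics off directly requires the training script to emit one composite objective that combines them.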

Bayesian Optimization: Unlike grid search or random search, Bayesian optimization uses a probabilistic model of the objective function to intelligently select the next hyperparameter combinations to evaluate. This approach converges much faster than exhaustive methods, particularly for high-dimensional hyperparameter spaces.

Early Stopping: The early_stopping_type='Auto' parameter enables automatic termination of underperforming training jobs based on intermediate evaluation metrics. This prevents wasting computational resources on hyperparameter combinations that are unlikely to yield good results.

Transfer Learning: The warm_start_type='TransferLearning' configuration leverages knowledge from previous hyperparameter tuning jobs to accelerate the current tuning process. This is particularly valuable when making incremental changes to models or adapting models to similar domains.

Parallel Execution: The configuration allows up to 5 training jobs to run in parallel (max_parallel_jobs=5), balancing resource utilization with the need for sequential information in Bayesian optimization.
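
Once a tuning job has finished, the tracked latency and memory metrics can be applied as constraints when choosing a winner. The sketch below assumes the per-job metrics have already been collected into dictionaries; the key names and the budget values are hypothetical.

```python
def select_best_trial(trials, max_latency_ms, max_memory_mb):
    """Pick the most accurate trial that satisfies latency and memory budgets."""
    feasible = [
        t for t in trials
        if t["inference_latency"] <= max_latency_ms and t["memory_usage"] <= max_memory_mb
    ]
    if not feasible:
        return None  # no configuration meets the operational constraints
    return max(feasible, key=lambda t: t["accuracy"])
```

Treating latency and memory as hard constraints rather than weighted terms keeps the selection rule easy to audit, at the cost of ignoring near-miss configurations.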

Key HPO strategies include:

- Adaptive Early Stopping via Hyperband - Dynamically allocates resources to promising configurations

- Bayesian Optimization with Transfer Learning - Leverages knowledge from previous similar models

- Multi-Objective Optimization - Simultaneously optimizes for multiple metrics like accuracy, latency, memory usage

For fraud detection models, HPO must also account for domain-specific factors:

- Balancing precision and recall based on business cost models (false positives vs. false negatives)

- Optimizing for performance at specific decision thresholds rather than overall accuracy

- Ensuring model stability across different data segments (e.g., transaction types, customer segments)

- Maintaining acceptable latency under peak load conditions
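
The first two considerations can be combined into a cost-weighted metric evaluated at a specific decision threshold. In this sketch the cost of a false positive (5.0) and a false negative (100.0) are placeholder values, and the candidate thresholds are arbitrary; a real system would take them from its business cost model.

```python
def expected_cost(scores, labels, threshold, fp_cost=5.0, fn_cost=100.0):
    """Average business cost per transaction at a given decision threshold."""
    cost = 0.0
    for score, is_fraud in zip(scores, labels):
        flagged = score >= threshold
        if flagged and not is_fraud:
            cost += fp_cost      # legitimate transaction blocked or sent to review
        elif not flagged and is_fraud:
            cost += fn_cost      # fraudulent transaction allowed through
    return cost / len(scores)


def best_threshold(scores, labels, candidates=(0.3, 0.5, 0.7, 0.9)):
    """Return the candidate threshold with the lowest expected cost."""
    return min(candidates, key=lambda t: expected_cost(scores, labels, t))
```

A training script could log this value so the tuner minimizes business cost directly instead of maximizing accuracy.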

By leveraging these advanced HPO techniques, the fraud detection system can continuously refine its models to improve detection rates while maintaining operational efficiency.

Model compression and quantization

As ML models grow in size and complexity, deploying them efficiently for real-time inference becomes increasingly challenging. Model compression and quantization techniques address this challenge by reducing model size and computational requirements without significantly sacrificing accuracy.

Apply these techniques to reduce model size and improve inference performance:

Activation-Aware Weight Quantization (AWQ)

- Reduces model size by 4x while preserving accuracy

- Identifies critical weights based on activation patterns

- Selectively preserves precision for sensitive weights (top 1%)

AWQ represents a significant advancement over traditional quantization methods. Rather than applying uniform quantization across all weights, it identifies which weights are most critical to model accuracy based on their activation patterns. By preserving higher precision for these critical weights while aggressively quantizing others, AWQ achieves better accuracy with the same overall model size.

# Deploying quantized models on AWS Inferentia2

import torch

import torch_neuronx

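# Load pre-trained model and switch to inference mode before tracing

model = torch.load('model.pt')

model.eval()

# Define example input

example_input = torch.zeros([1, 3, 224, 224])

# Compile for Inferentia2 (target inf2); the cast and quantization flags are kept from the original example and should be checked against the Neuron compiler reference for your SDK release

compiler_args = ['--target=inf2', '--auto-cast=fp16', '--enable-quantization']

model_neuron = torch_neuronx.trace(model, example_input, compiler_args=compiler_args)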

# Save compiled model

torch.jit.save(model_neuron, 'model_neuron_quantized.pt')

This code demonstrates how to compile a model for deployment on AWS Inferentia2 using the Neuron SDK. The --enable-quantization flag is presented here as the switch for the compiler's quantization pass; which quantization schemes a given Neuron SDK release supports, AWQ included, should be confirmed in its compiler reference.

The process works in several steps:

- The model is traced with a representative input tensor, allowing the compiler to analyze the computational graph

- The --auto-cast=fp16 flag converts appropriate operations to half-precision (FP16) arithmetic

- The quantization process converts weights from floating-point to integer representation (typically INT8)

- The compiler optimizes the quantized model for the Inferentia2 hardware architecture

- The resulting compiled model is saved for deployment

For fraud detection models, quantization must be applied carefully to avoid compromising detection accuracy. Critical components like the primary scoring model and behavioral biometrics models benefit from selective quantization, where only stable and robust parts of the model are quantized while maintaining higher precision for sensitive operations.
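
The float-to-integer conversion at the heart of quantization can be illustrated with plain symmetric per-tensor INT8 quantization. This sketch is deliberately simpler than AWQ and is not what the Neuron compiler does internally; it only shows where the rounding error comes from.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    # One scale for the whole tensor, set by the largest absolute weight
    scale = max(abs(w) for w in weights) / 127 or 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [v * scale for v in q]
```

Each restored weight differs from the original by at most half a quantization step (scale / 2). A single outlier weight inflates the scale and therefore the error for every other weight, which is the problem that activation-aware and outlier-aware methods set out to reduce.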

Other optimization techniques include:

- Outlier-Aware Quantization (OAQ) - Reshapes weight distributions to enhance quantization accuracy

- Knowledge Distillation - Teacher-student frameworks with intermediate feature matching

- Sparse Tensor Train Decomposition - Represents high-dimensional tensors as a series of low-rank cores

Knowledge distillation is particularly valuable for complex ensemble models like those used in fraud detection. A large, accurate "teacher" ensemble can be distilled into a smaller, faster "student" model that captures most of the ensemble's predictive power while being much more efficient to deploy. This technique maintains the accuracy benefits of ensemble methods while addressing their latency and resource challenges.
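
A minimal sketch of the output-matching part of distillation follows: the student is trained to match the teacher's temperature-softened class probabilities. The temperature of 2.0 is an assumed value, and the intermediate feature matching mentioned above is not covered here.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return kl * temperature ** 2
```

In training, this term is usually added to the ordinary supervised loss on the true fraud labels, with a weighting factor chosen by validation.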

Sparse Tensor Train Decomposition addresses the computational challenges of models with high-dimensional parameter tensors, common in multi-modal fraud detection systems. By decomposing these tensors into products of much smaller core tensors, this technique dramatically reduces parameter counts while preserving most of the model's representational capacity.

4. Infrastructure as code for AI/ML workloads

Machine learning systems present unique infrastructure challenges due to their complex lifecycles, specialized resource requirements, and the need for reproducibility. Infrastructure as Code (IaC) addresses these challenges by providing declarative, version-controlled definitions of infrastructure that can be consistently deployed and managed.

For ML workloads, IaC is particularly valuable because it:

- Ensures consistency between development, testing, and production environments

- Provides audit trails for model training infrastructure, crucial for regulatory compliance

- Enables rapid deployment of complex, multi-component ML systems

- Facilitates disaster recovery and business continuity for critical ML applications

- Streamlines the transition from experimentation to production

The following sections demonstrate different IaC approaches for deploying ML infrastructure on AWS, each with its own strengths and ecosystem.

AWS CloudFormation/CDK implementation

AWS Cloud Development Kit (CDK) provides a higher-level abstraction over CloudFormation, allowing infrastructure to be defined using familiar programming languages. This approach is particularly valuable for ML infrastructure, where complex relationships between components are common.

Create a secure SageMaker environment using AWS CDK:

from aws_cdk import (

    aws_sagemaker as sagemaker,

    aws_ec2 as ec2,

    aws_iam as iam,

    aws_kms as kms,

    Stack

)

from constructs import Construct

class SecureSageMakerStack(Stack):

    def __init__(self, scope: Construct, id: str, **kwargs) -> None:

        super().__init__(scope, id, **kwargs)

        # Create a VPC with isolated subnets

        vpc = ec2.Vpc(self, "MLWorkloadVPC",

            max_azs=2,

            subnet_configuration=[

                ec2.SubnetConfiguration(

                    name="private",

                    subnet_type=ec2.SubnetType.PRIVATE_ISOLATED,

                    cidr_mask=24

                )

            ]

        )

        # Add VPC Endpoints for SageMaker API, Runtime, and S3

        vpc.add_interface_endpoint("SageMakerAPI",

            service=ec2.InterfaceVpcEndpointAwsService.SAGEMAKER_API

        )

        vpc.add_interface_endpoint("SageMakerRuntime",

            service=ec2.InterfaceVpcEndpointAwsService.SAGEMAKER_RUNTIME

        )

        vpc.add_gateway_endpoint("S3Endpoint",

            service=ec2.GatewayVpcEndpointAwsService.S3

        )

        # Create KMS key for encryption

        kms_key = kms.Key(self, "MLDataKey",

            enable_key_rotation=True,

            description="KMS key for ML data encryption"

        )

        # Create the execution role for the notebook instance

        notebook_role = iam.Role(self, "NotebookRole",

            assumed_by=iam.ServicePrincipal("sagemaker.amazonaws.com")

        )

        # AmazonSageMakerFullAccess is broad; replace it with a scoped custom policy in production

        notebook_role.add_managed_policy(

            iam.ManagedPolicy.from_aws_managed_policy_name("AmazonSageMakerFullAccess")

        )

        # Create security group for the notebook instance

        sg = ec2.SecurityGroup(self, "NotebookSG",

            vpc=vpc,

            description="Security group for SageMaker notebook instance",

            allow_all_outbound=False

        )

        # With all outbound traffic denied, allow HTTPS to the interface endpoints inside the VPC

        sg.add_egress_rule(ec2.Peer.ipv4(vpc.vpc_cidr_block), ec2.Port.tcp(443), "HTTPS to VPC interface endpoints")

        # Create a SageMaker notebook instance

        notebook = sagemaker.CfnNotebookInstance(self, "SecureNotebook",

            instance_type="ml.t3.medium",

            role_arn=notebook_role.role_arn,

            root_access="Disabled",

            direct_internet_access="Disabled",

            # PRIVATE_ISOLATED subnets are exposed as vpc.isolated_subnets, not vpc.private_subnets

            subnet_id=vpc.isolated_subnets[0].subnet_id,

            security_group_ids=[sg.security_group_id],

            kms_key_id=kms_key.key_arn,

            volume_size_in_gb=50

        )

This CDK implementation creates a secure SageMaker development environment with several important security features:

Network Isolation: The SageMaker notebook instance is deployed in a private, isolated subnet without direct internet access. This prevents unauthorized data exfiltration and limits exposure to external threats.

VPC Endpoints: Interface endpoints for SageMaker API and Runtime, along with a gateway endpoint for S3, allow the notebook instance to communicate with AWS services without traversing the public internet. This enhances security while maintaining functionality.

Data Encryption: A dedicated KMS key is created for encrypting ML data, with automatic key rotation enabled. This encryption protects sensitive data at rest, addressing requirements in regulations like GDPR and industry standards like PCI-DSS.

Least Privilege Access: The notebook instance runs under its own dedicated IAM role. For brevity, this example attaches the broad managed policy AmazonSageMakerFullAccess, which does not itself satisfy the principle of least privilege. In a production environment, this would typically be replaced with a more restrictive custom policy.

Root Access Restriction: The root_access="Disabled" setting prevents users from gaining root access to the underlying instance, limiting the potential for system-level modifications that could compromise security.

This infrastructure definition can be version-controlled, reviewed through standard code review processes, and deployed consistently across multiple environments. It also serves as documentation of the infrastructure, making it easier to understand the system's architecture and security controls.
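
A restrictive custom policy of the kind recommended for production might look like the following sketch, which builds an IAM policy document limited to one S3 bucket and one KMS key. The bucket name and key ARN are placeholders, and the action list is an assumed minimum for reading and writing encrypted training data, not a complete policy for every SageMaker workflow.

```python
import json


def scoped_notebook_policy(bucket, kms_key_arn):
    """Build an IAM policy document limited to one bucket and one KMS key."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
                "Resource": kms_key_arn,
            },
        ],
    }


# Placeholder identifiers for illustration only
policy_json = json.dumps(
    scoped_notebook_policy("example-ml-data-bucket", "arn:aws:kms:region:account:key/example-key-id"),
    indent=2,
)
```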

For ML workloads, CDK's programming model provides advantages over raw CloudFormation:

- Logic for dynamic resource configuration (e.g., scaling instance types based on data size)

- Reusable components for common ML infrastructure patterns

- Integration with existing software development workflows

- Type safety and IDE support for catching errors early
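
The first advantage, dynamic resource configuration, can be as simple as an ordinary function called from the stack definition. In this sketch the size cut-offs and the instance types chosen for each tier are assumptions for illustration.

```python
def training_instance_for(dataset_gb):
    """Pick a SageMaker training instance type from the dataset size."""
    if dataset_gb < 0:
        raise ValueError("dataset_gb must be non-negative")
    if dataset_gb < 10:
        return "ml.m5.xlarge"       # small datasets: general-purpose CPU
    if dataset_gb < 100:
        return "ml.m5.4xlarge"      # medium datasets: more memory and cores
    return "ml.g5.12xlarge"         # large datasets: multi-GPU instance
```

The returned string can be passed straight to a construct property, so the same stack code provisions different hardware per environment.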

Terraform implementation

Terraform is a popular multi-cloud IaC tool that provides a consistent workflow across different cloud providers. For organizations using multiple clouds or migrating between providers, Terraform offers valuable flexibility.

Configure a secure SageMaker endpoint with Terraform:

provider "aws" {

region = "us-east-1"

}

resource "aws_iam_role" "sagemaker_role" {

name = "sagemaker-execution-role"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = "sts:AssumeRole"

Effect = "Allow"

Principal = {

Service = "sagemaker.amazonaws.com"

}

}

]

})

}

resource "aws_iam_role_policy_attachment" "sagemaker_policy" {

role = aws_iam_role.sagemaker_role.name

policy_arn = "arn:aws:iam::aws:policy/AmazonSageMakerFullAccess"

}

resource "aws_security_group" "training_sg" {

name = "sagemaker-training-sg"

description = "Security group for SageMaker training jobs"

vpc_id = var.vpc_id

egress {

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

description = "Allow HTTPS outbound traffic"

}

}

resource "aws_sagemaker_model" "ml_model" {

name = "secure-ml-model"

execution_role_arn = aws_iam_role.sagemaker_role.arn

primary_container {

image = "${var.account_id}.dkr.ecr.${var.region}.amazonaws.com/${var.model_image}:latest"

model_data_url = "s3://${var.model_bucket}/${var.model_key}"

}

vpc_config {

subnets = var.private_subnet_ids

security_group_ids = [aws_security_group.training_sg.id]

}

enable_network_isolation = true

}

resource "aws_sagemaker_endpoint_configuration" "endpoint_config" {

name = "secure-endpoint-config"

production_variants {

variant_name = "variant-1"

model_name = aws_sagemaker_model.ml_model.name

initial_instance_count = 1

instance_type = "ml.c5.large"

}

kms_key_arn = var.kms_key_arn

}

resource "aws_sagemaker_endpoint" "endpoint" {

name = "secure-ml-endpoint"

endpoint_config_name = aws_sagemaker_endpoint_configuration.endpoint_config.name

}

This Terraform configuration deploys a secure SageMaker endpoint for model inference, incorporating several security best practices:

Network Isolation: The enable_network_isolation = true setting ensures that the model container cannot make outbound network calls, preventing potential data exfiltration or unauthorized API access.

VPC Deployment: The model is deployed within a VPC, with security groups controlling network traffic. This provides network-level isolation and control over communication paths.

Restrictive Egress Rules: The security group allows only HTTPS outbound traffic (port 443), restricting the model container's ability to communicate with external services.

Encryption: The endpoint configuration references a KMS key (provided as a variable) for encrypting data at rest on the storage volumes attached to the endpoint's instances, which hold the downloaded model artifacts.

The configuration also demonstrates Terraform's use of variables (var.vpc_id, var.private_subnet_ids, etc.), which allow the same infrastructure definition to be deployed across different environments with environment-specific parameters.

For ML infrastructure, Terraform offers several advantages:

- State management for tracking infrastructure changes over time

- Modules for encapsulating and reusing infrastructure patterns

- Provider ecosystem for managing resources across multiple cloud providers

- Plan/apply workflow for reviewing changes before implementation

Terraform's declarative approach is particularly valuable for compliance-focused organizations, as it provides clear documentation of infrastructure state and changes over time. This audit trail is crucial for regulated industries like finance and healthcare, where ML model deployments must meet stringent governance requirements.

Pulumi implementation

Pulumi takes a different approach to IaC, allowing infrastructure to be defined using general-purpose programming languages like Python, TypeScript, Go, and others. This approach is particularly valuable for ML infrastructure, where complex logic may be needed to configure resources based on model characteristics or data properties.

Create a secure ML infrastructure using Pulumi:

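import pulumi

import pulumi_aws as aws

# Read deployment parameters from the Pulumi stack configuration

# (the configuration key names below are illustrative)

config = pulumi.Config()

account_id = config.require("accountId")

region = config.require("region")

model_image = config.require("modelImage")

model_bucket = config.require("modelBucket")

model_key = config.require("modelKey")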

# Create IAM role for SageMaker

sagemaker_role = aws.iam.Role("sagemaker-role",

assume_role_policy=aws.iam.get_policy_document(statements=[

aws.iam.GetPolicyDocumentStatementArgs(

actions=["sts:AssumeRole"],

principals=[aws.iam.GetPolicyDocumentStatementPrincipalArgs(

type="Service",

identifiers=["sagemaker.amazonaws.com"],

)]

)

]).json

)

# Attach the SageMaker policy

role_policy_attachment = aws.iam.RolePolicyAttachment("sagemaker-policy-attachment",

role=sagemaker_role.name,

policy_arn="arn:aws:iam::aws:policy/AmazonSageMakerFullAccess"

)

# Create a VPC for secure ML workloads

vpc = aws.ec2.Vpc("ml-vpc",

cidr_block="10.0.0.0/16",

enable_dns_hostnames=True,

enable_dns_support=True

)

# Create private subnets

private_subnet_1 = aws.ec2.Subnet("private-subnet-1",

vpc_id=vpc.id,

cidr_block="10.0.1.0/24",

availability_zone="us-east-1a",

map_public_ip_on_launch=False

)

private_subnet_2 = aws.ec2.Subnet("private-subnet-2",

vpc_id=vpc.id,

cidr_block="10.0.2.0/24",

availability_zone="us-east-1b",

map_public_ip_on_launch=False

)

# Create a security group for ML workloads

ml_security_group = aws.ec2.SecurityGroup("ml-security-group",

vpc_id=vpc.id,

description="Security group for ML workloads",

egress=[aws.ec2.SecurityGroupEgressArgs(

from_port=443,

to_port=443,

protocol="tcp",

cidr_blocks=["0.0.0.0/0"],

description="Allow outbound HTTPS only"

)]

)

# Create the SageMaker model

model = aws.sagemaker.Model("ml-model",

execution_role_arn=sagemaker_role.arn,

primary_container=aws.sagemaker.ModelPrimaryContainerArgs(

image=f"{account_id}.dkr.ecr.{region}.amazonaws.com/{model_image}:latest",

model_data_url=f"s3://{model_bucket}/{model_key}"

),

vpc_config=aws.sagemaker.ModelVpcConfigArgs(

security_group_ids=[ml_security_group.id],

subnets=[private_subnet_1.id, private_subnet_2.id]

),

enable_network_isolation=True

)

# Create an endpoint configuration

endpoint_config = aws.sagemaker.EndpointConfiguration("ml-endpoint-config",

production_variants=[aws.sagemaker.EndpointConfigurationProductionVariantArgs(

variant_name="variant-1",

model_name=model.name,

initial_instance_count=1,

instance_type="ml.c5.large",

initial_variant_weight=1.0

)]

)

# Create the endpoint

endpoint = aws.sagemaker.Endpoint("ml-endpoint",

endpoint_config_name=endpoint_config.name

)

# Export the endpoint name

pulumi.export("endpoint_name", endpoint.name)

This Pulumi program creates infrastructure similar to the Terraform example, but with the advantages of a general-purpose programming language:

Full Programming Capabilities: The code can include conditional logic, loops, functions, and other programming constructs to create more dynamic infrastructure definitions. For ML workloads, this might include scaling resources based on model size or complexity.

Object-Oriented Design: The infrastructure can be modeled using object-oriented principles, with classes representing reusable infrastructure patterns. This is particularly valuable for ML infrastructure, which often involves similar patterns across different models or applications.

Native Integration with Application Code: Pulumi allows infrastructure definitions to live alongside application code, facilitating DevOps practices and ensuring that infrastructure changes are coordinated with application changes.

Testing Framework Integration: Infrastructure code can be tested using standard programming language testing frameworks, enabling test-driven development for infrastructure.

For ML operations (MLOps), Pulumi's programming model can be particularly valuable when infrastructure needs to adapt to model characteristics. For example, instance types could be selected based on model size, memory requirements, or computational complexity, all calculated programmatically as part of the infrastructure definition.

The example creates a secure deployment environment with private networking, a dedicated security group, and network isolation for the SageMaker model. The subnets span two Availability Zones (us-east-1a and us-east-1b), which lets SageMaker distribute endpoint instances across zones. To benefit from this, raise initial_instance_count to at least 2; with a single instance, the endpoint still runs in only one zone and becomes unavailable if that zone experiences issues.
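
The programmatic instance selection mentioned above can be sketched as a plain Python function whose result is passed to the instance_type argument of the endpoint configuration. This is a minimal sketch, not a sizing recommendation: the candidate list, the memory figures, and the headroom factor are assumptions for illustration and should be checked against current AWS instance documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceOption:
    name: str
    memory_gib: int


# Illustrative candidates, ordered from smallest to largest (assumed values).
CANDIDATES = [
    InstanceOption("ml.c5.large", 4),
    InstanceOption("ml.c5.xlarge", 8),
    InstanceOption("ml.c5.2xlarge", 16),
    InstanceOption("ml.m5.4xlarge", 64),
]


def select_instance_type(model_size_gib: float, headroom: float = 2.0) -> str:
    """Return the smallest candidate whose memory covers model size times headroom."""
    required = model_size_gib * headroom
    for option in CANDIDATES:
        if option.memory_gib >= required:
            return option.name
    raise ValueError(f"No candidate instance offers {required:.1f} GiB of memory")


# A 1.5 GiB model needs 3 GiB with 2x headroom, so the smallest option fits.
print(select_instance_type(1.5))  # ml.c5.large
# A 6 GiB model needs 12 GiB, which first fits on the 16 GiB option.
print(select_instance_type(6.0))  # ml.c5.2xlarge
```

Because the function is ordinary Python, it can be unit tested with a standard test framework before any infrastructure is provisioned.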

5. AI/ML-specific security considerations

Machine learning systems introduce unique security challenges that go beyond traditional application security concerns. From protecting sensitive training data to preventing adversarial attacks on deployed models, ML security requires a comprehensive approach addressing the entire ML lifecycle.

For fraud detection systems, security is particularly critical given the financial impact of compromises and the sensitive nature of the data involved. The following sections outline key ML-specific security considerations and how to address them on AWS.

Model security and adversarial attack prevention

AI/ML models face unique security challenges that require specialized protection:

Model Poisoning Mitigation

- Implement rigorous data validation procedures before training

- Deploy anomaly detection to filter potentially adversarial data

- Track data origins using a Machine Learning Bill of Materials (ML-BOM), for example in the OWASP CycloneDX format

- Configure SageMaker training jobs with EnableNetworkIsolation set to true

Model poisoning represents a significant threat to ML systems, particularly those used for security-critical applications like fraud detection. In a poisoning attack, an adversary manipulates the training data to introduce vulnerabilities or backdoors that can be exploited later. For example, a fraudster might attempt to poison a fraud detection model's training data to create blind spots for specific fraud patterns.

Data validation procedures are the first line of defense against poisoning attacks. These procedures should check for statistical anomalies, inconsistencies, and patterns that might indicate tampering. For fraud detection models, this might include checking for unusual distributions of transaction amounts, suspicious patterns in IP addresses, or anomalous relationships between features.
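
One way to check a training batch for unusual transaction amounts is to score each value against a trusted baseline using a robust statistic. The sketch below uses the modified z-score based on the median absolute deviation; the function names, the 3.5 threshold, and the 5% tolerance are assumptions chosen for illustration, not values taken from this guide.

```python
import statistics


def outlier_fraction(values, baseline, threshold=3.5):
    """Fraction of values whose modified z-score against the baseline exceeds threshold."""
    if not values:
        raise ValueError("values must not be empty")
    median = statistics.median(baseline)
    # Median absolute deviation: a spread estimate that a few extreme points cannot skew.
    mad = statistics.median([abs(x - median) for x in baseline])
    if mad == 0:
        raise ValueError("baseline has zero spread; cannot score outliers")
    flagged = sum(1 for v in values if 0.6745 * abs(v - median) / mad > threshold)
    return flagged / len(values)


def batch_is_acceptable(values, baseline, max_outlier_fraction=0.05):
    """Reject a batch when too many amounts fall far outside the baseline distribution."""
    return outlier_fraction(values, baseline, threshold=3.5) <= max_outlier_fraction


baseline_amounts = [10, 12, 11, 13, 9, 10, 12, 11]
print(batch_is_acceptable([10, 11, 12, 13], baseline_amounts))   # True
print(batch_is_acceptable([10, 11, 500, 480], baseline_amounts))  # False
```

A production pipeline would apply comparable checks per feature and per segment, and would quarantine a rejected batch for review rather than silently dropping it.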

Tracking data provenance with a Machine Learning Bill of Materials (ML-BOM), for example in the OWASP CycloneDX format, provides accountability and traceability for all data used in model training. This audit trail is valuable not only for security but also for regulatory compliance, allowing organizations to demonstrate due diligence in data handling.
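
The core of a provenance trail is a content hash recorded when a dataset is ingested and checked again before training. The sketch below shows that idea with a simplified record; the field names are hypothetical and do not follow the CycloneDX schema, which defines its own structure for ML-BOM documents.

```python
import hashlib
from datetime import datetime, timezone


def provenance_record(name: str, source: str, content: bytes) -> dict:
    """Build a simplified provenance entry for one dataset (illustrative fields only)."""
    return {
        "name": name,
        "source": source,
        "sha256": hashlib.sha256(content).hexdigest(),
        "size_bytes": len(content),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def matches_record(record: dict, content: bytes) -> bool:
    """Return True when the content still hashes to the value stored at ingestion."""
    return hashlib.sha256(content).hexdigest() == record["sha256"]


original = b"transaction_id,amount\n1,25.00\n2,310.50\n"
record = provenance_record("transactions-sample", "s3://example-bucket/tx.csv", original)

print(matches_record(record, original))                    # True
print(matches_record(record, original + b"3,9999.99\n"))   # False
```

Running the check immediately before a training job starts makes silent modification of a stored dataset detectable, provided the records themselves are kept somewhere the data writers cannot alter.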

Network isolation during training prevents the training job from making unauthorized network calls, which could potentially exfiltrate sensitive data or import compromised code or data. This isolation is a simple but effective security control that should be standard practice for all production ML training jobs.
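
As a sketch of how this looks in practice, the function below assembles a request for the SageMaker CreateTrainingJob API with network isolation enabled. The instance type, volume size, runtime limit, and all identifiers passed in are placeholder assumptions; input channels (InputDataConfig) and hyperparameters are omitted for brevity.

```python
def isolated_training_job_params(job_name, image_uri, role_arn, output_s3_uri,
                                 subnet_ids, security_group_ids, kms_key_id):
    """Build CreateTrainingJob parameters with network isolation turned on."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "OutputDataConfig": {"S3OutputPath": output_s3_uri, "KmsKeyId": kms_key_id},
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",  # placeholder choice
            "InstanceCount": 1,
            "VolumeSizeInGB": 50,            # placeholder choice
            "VolumeKmsKeyId": kms_key_id,
        },
        "VpcConfig": {
            "Subnets": list(subnet_ids),
            "SecurityGroupIds": list(security_group_ids),
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # The training container gets no outbound network access.
        "EnableNetworkIsolation": True,
        # Encrypt traffic between instances in distributed training.
        "EnableInterContainerTrafficEncryption": True,
    }


params = isolated_training_job_params(
    job_name="fraud-train-example",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/example:latest",
    role_arn="arn:aws:iam::123456789012:role/example-sagemaker-role",
    output_s3_uri="s3://example-bucket/output/",
    subnet_ids=["subnet-aaaa1111"],
    security_group_ids=["sg-bbbb2222"],
    kms_key_id="arn:aws:kms:us-east-1:123456789012:key/example",
)
print(params["EnableNetworkIsolation"])  # True
```

The resulting dictionary can be passed to boto3 as boto3.client("sagemaker").create_training_job(**params) once input channels are added.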

Adversarial Attack Prevention

- Implement thorough validation pipelines for inference inputs

- Maintain model version control for quick recovery

- Use retrieval-augmented generation (RAG) to reduce hallucination risks

Adversarial attacks on deployed models represent another significant threat. In these attacks, an adversary crafts inputs specifically designed to confuse or mislead the model, causing it to produce incorrect outputs. For fraud detection, this might involve creating transaction patterns that appear legitimate to the model despite being fraudulent.

Input validation for inference requests is crucial for preventing adversarial attacks. This validation should check for input values outside expected ranges, unusual combinations of features, or patterns known to be associated with adversarial attempts. For example, a fraud detection system might flag transactions with abnormally precise amounts or suspiciously round timestamps.
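
A minimal sketch of such a validation step, run before a request reaches the model, is shown below. The field names, the allowed currencies, and the amount ceiling are hypothetical and would come from the real feature schema of the fraud detection model.

```python
from typing import List

# Hypothetical schema limits, for illustration only.
EXPECTED_FIELDS = {"amount", "currency", "merchant_id"}
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}
MAX_AMOUNT = 1_000_000.0


def validate_transaction(payload: dict) -> List[str]:
    """Return a list of validation errors; an empty list means the payload is accepted."""
    errors = []

    amount = payload.get("amount")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        errors.append("amount must be a number")
    elif not (0 < amount <= MAX_AMOUNT):
        # This comparison is also False for NaN and infinity, so both are rejected.
        errors.append("amount out of range")

    currency = payload.get("currency")
    if not isinstance(currency, str) or currency not in ALLOWED_CURRENCIES:
        errors.append("unsupported currency")

    merchant_id = payload.get("merchant_id")
    if not isinstance(merchant_id, str) or not merchant_id:
        errors.append("merchant_id must be a non-empty string")

    unexpected = sorted(set(payload) - EXPECTED_FIELDS)
    if unexpected:
        errors.append("unexpected fields: " + ", ".join(unexpected))

    return errors


print(validate_transaction({"amount": 25.0, "currency": "USD", "merchant_id": "m-1"}))
# []
print(validate_transaction({"amount": float("nan"), "currency": "USD", "merchant_id": "m-1"}))
# ['amount out of range']
```

Rejected requests are worth logging with their error lists, since a burst of near-boundary or malformed inputs can itself indicate probing.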

Model version control enables rapid response to detected vulnerabilities or attacks. If a model is found to be compromised or vulnerable to specific adversarial patterns, having a robust versioning system allows for quick rollback to a previous secure version while the issue is addressed.
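
The rollback decision itself can be kept very simple. The sketch below picks the most recent approved version older than the one currently deployed; the ModelVersion record and its fields are hypothetical stand-ins for whatever a model registry actually stores.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    endpoint_config_name: str
    approved: bool


def rollback_target(history: List[ModelVersion], current_version: int) -> Optional[ModelVersion]:
    """Return the newest approved version older than the current one, or None."""
    candidates = [v for v in history if v.approved and v.version < current_version]
    return max(candidates, key=lambda v: v.version, default=None)


history = [
    ModelVersion(1, "fraud-config-v1", approved=True),
    ModelVersion(2, "fraud-config-v2", approved=False),  # failed review, never a target
    ModelVersion(3, "fraud-config-v3", approved=True),   # currently deployed
]

target = rollback_target(history, current_version=3)
print(target.endpoint_config_name)  # fraud-config-v1
```

With SageMaker, the selected endpoint configuration name can then be applied through the UpdateEndpoint API, for example boto3.client("sagemaker").update_endpoint(EndpointName=..., EndpointConfigName=target.endpoint_config_name).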

For models that incorporate generative components, retrieval-augmented generation (RAG) can reduce the risk of "hallucinations" or fabricated outputs. By grounding generated content in retrieved information from trusted sources, RAG helps ensure that the model's outputs are based on factual information rather than confabulations that might be exploited by attackers.

Data encryption and protection

Implement comprehensive data security measures:

# KMS encryption configuration for ML data (Terraform)

resource "aws_kms_key" "ml_data_key" {

description = "KMS key for ML data encryption"

deletion_window_in_days = 30

enable_key_rotation = true

policy = data.aws_iam_policy_document.ml_kms_policy.json

}

resource "aws_s3_bucket" "ml_data_bucket" {

bucket = "secure-ml-data-${random_string.suffix.result}"

}

resource "aws_s3_bucket_server_side_encryption_configuration" "ml_bucket_encryption" {

bucket = aws_s3_bucket.ml_data_bucket.id

rule {

apply_server_side_encryption_by_default {

kms_master_key_id = aws_kms_key.ml_data_key.arn

sse_algorithm = "aws:kms"

}

}

}

Data protection is particularly critical for ML systems, which often process large volumes of sensitive information. The configuration above demonstrates several important data protection measures:

KMS Key Management: Creating a dedicated KMS key for ML data provides fine-grained control over encryption and access. The 30-day deletion window provides protection against accidental key deletion, while automatic key rotation enhances security by periodically changing the encryption material.

Bucket Encryption: Configuring server-side encryption using the KMS key ensures that all data stored in the bucket is automatically encrypted at rest. This addresses regulatory requirements for data protection and reduces the risk of data exposure if storage media are compromised.

Randomized Bucket Name: Including a random suffix in the bucket name (${random_string.suffix.result}) prevents predictable resource naming, making it harder for attackers to guess resource names.
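
Default bucket encryption can also be verified from the client side. The sketch below inspects the metadata that the S3 HeadObject API returns for an object and confirms that it was encrypted with the expected KMS key. The helper name is hypothetical, and the comparison assumes the response reports the key as a full ARN.

```python
def is_kms_encrypted(head_object_response: dict, expected_key_arn: str) -> bool:
    """Check HeadObject metadata for SSE-KMS encryption under the expected key."""
    return (
        head_object_response.get("ServerSideEncryption") == "aws:kms"
        and head_object_response.get("SSEKMSKeyId") == expected_key_arn
    )


key_arn = "arn:aws:kms:us-east-1:123456789012:key/example"

# Shapes mimic the relevant fields of a HeadObject response.
encrypted = {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": key_arn}
s3_managed = {"ServerSideEncryption": "AES256"}

print(is_kms_encrypted(encrypted, key_arn))   # True
print(is_kms_encrypted(s3_managed, key_arn))  # False
```

In a real check the dictionary would come from boto3.client("s3").head_object(Bucket=..., Key=...), and a failed check on training data would block the job from starting.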

For ML workloads, data protection should address the entire data lifecycle:

- Collection: Secure methods for gathering and importing data, including encrypted transit

- Storage: Encryption at rest for all data, with appropriate access controls

- Processing: Secure computing environments for feature engineering and training