How AI Content Is Flooding Social Media & How to Spot It

Article courtesy: SoftpageCMS.com

In recent months, social media platforms have experienced an unprecedented surge in AI-generated content, transforming how we consume information online. From seemingly perfect travel photos to politically charged commentary, artificial intelligence is increasingly shaping our digital landscape—often without our knowledge. This shift raises important questions about authenticity in digital media, trust, and the future of online communication.

The AI Content Explosion

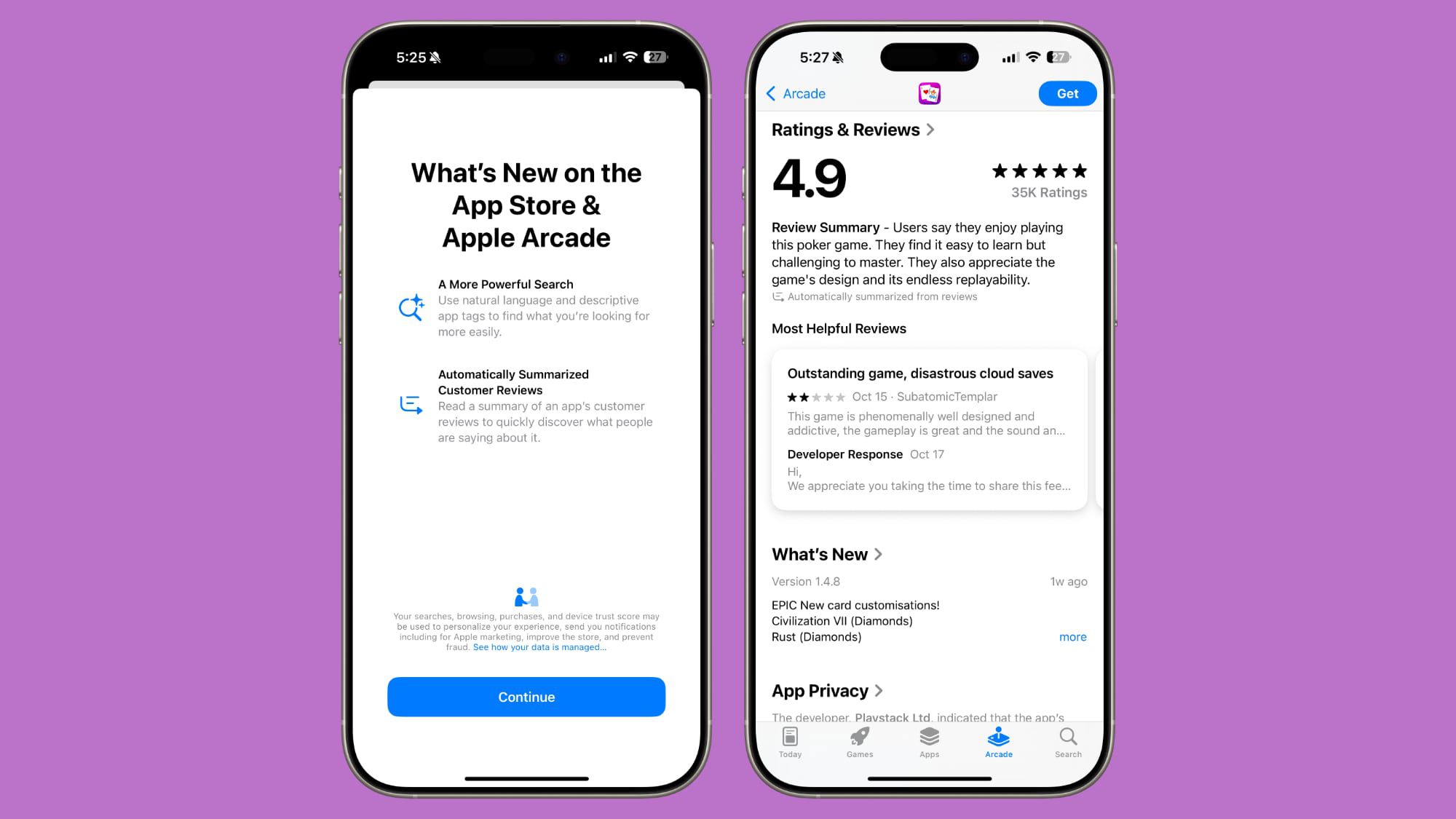

The rapid advancement of generative AI tools has democratised content creation. What once required professional skills in writing, photography, or graphic design can now be accomplished with a few prompts. The result? A flood of AI-generated content across platforms like Instagram, Twitter, TikTok, and Facebook.

According to recent analysis by the Reuters Institute for the Study of Journalism, approximately 18% of viral content on major social platforms now contains elements created or significantly enhanced by AI. This represents a fourfold increase from just one year ago.

“We’re witnessing a fundamental transformation in how content is produced and consumed online,” explains Dr Sarah Chen, digital media researcher at Oxford University. “The barriers to creating polished, professional-looking content have essentially disappeared.”

Why Is AI Content So Prevalent?

Several factors drive this surge in AI-generated social media content:

Cost and Efficiency

For businesses and content creators, AI tools significantly reduce production costs. What might have required a team of specialists can now be done by one person with access to the right AI systems. Marketing agencies report cutting content production times by up to 70% using AI assistants for first drafts and concept generation.

Algorithm Optimisation

AI-generated content often performs exceptionally well with engagement algorithms. By analysing vast datasets of successful posts, AI can create content specifically engineered to maximise likes, shares, and comments—a practice some call “algorithm hacking.”

Misinformation Campaigns

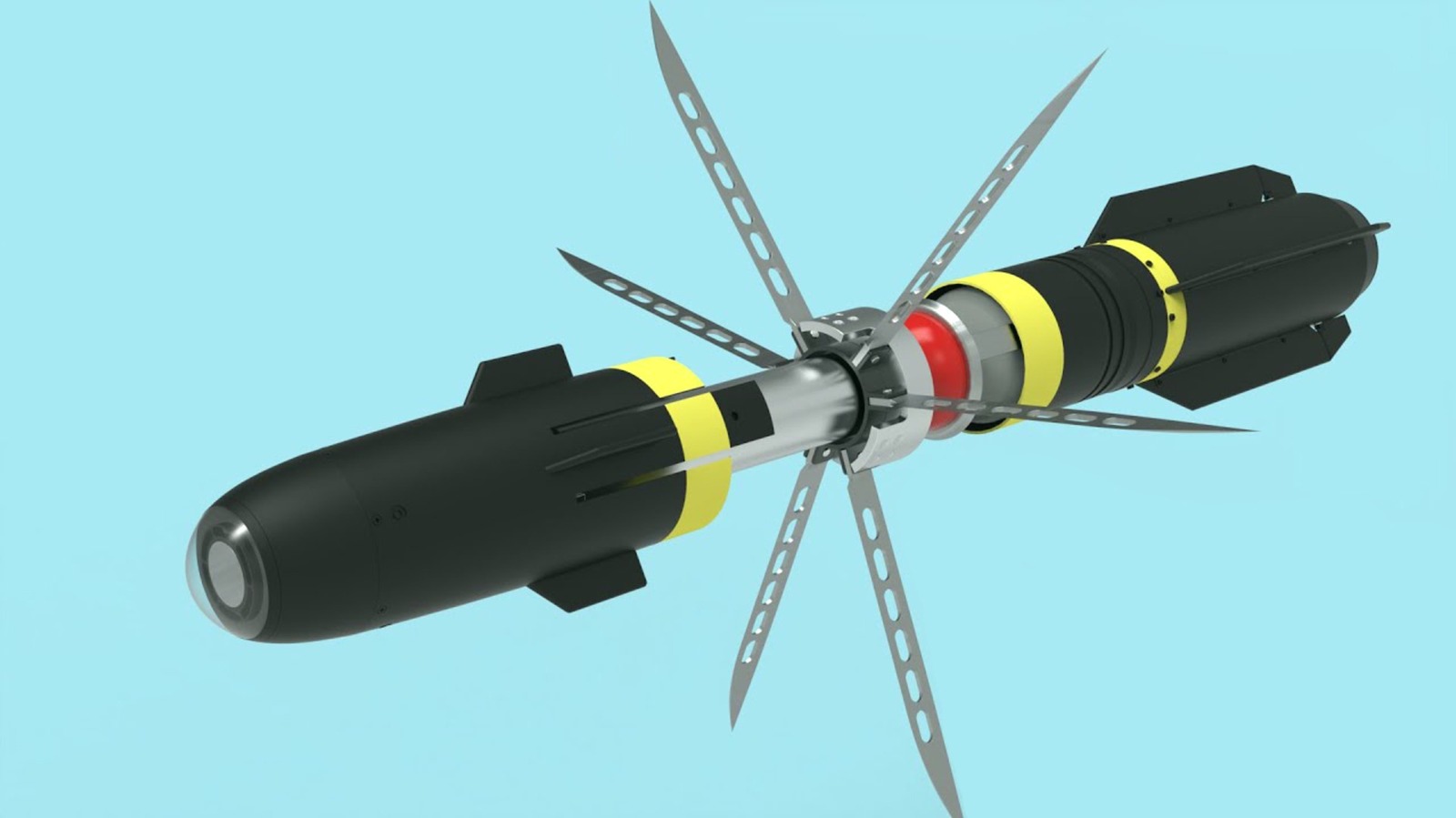

Perhaps most concerning is the use of AI to generate misleading or false content at scale. A study from the Computational Propaganda Research Project found that politically motivated actors can now produce hundreds of contextually relevant, persuasive comments across multiple platforms in minutes—a task that previously required extensive human resources.

How to Identify AI-Generated Content

As AI-generated content becomes increasingly sophisticated, spotting it requires careful attention to several key indicators:

Perfectionism with Odd Details

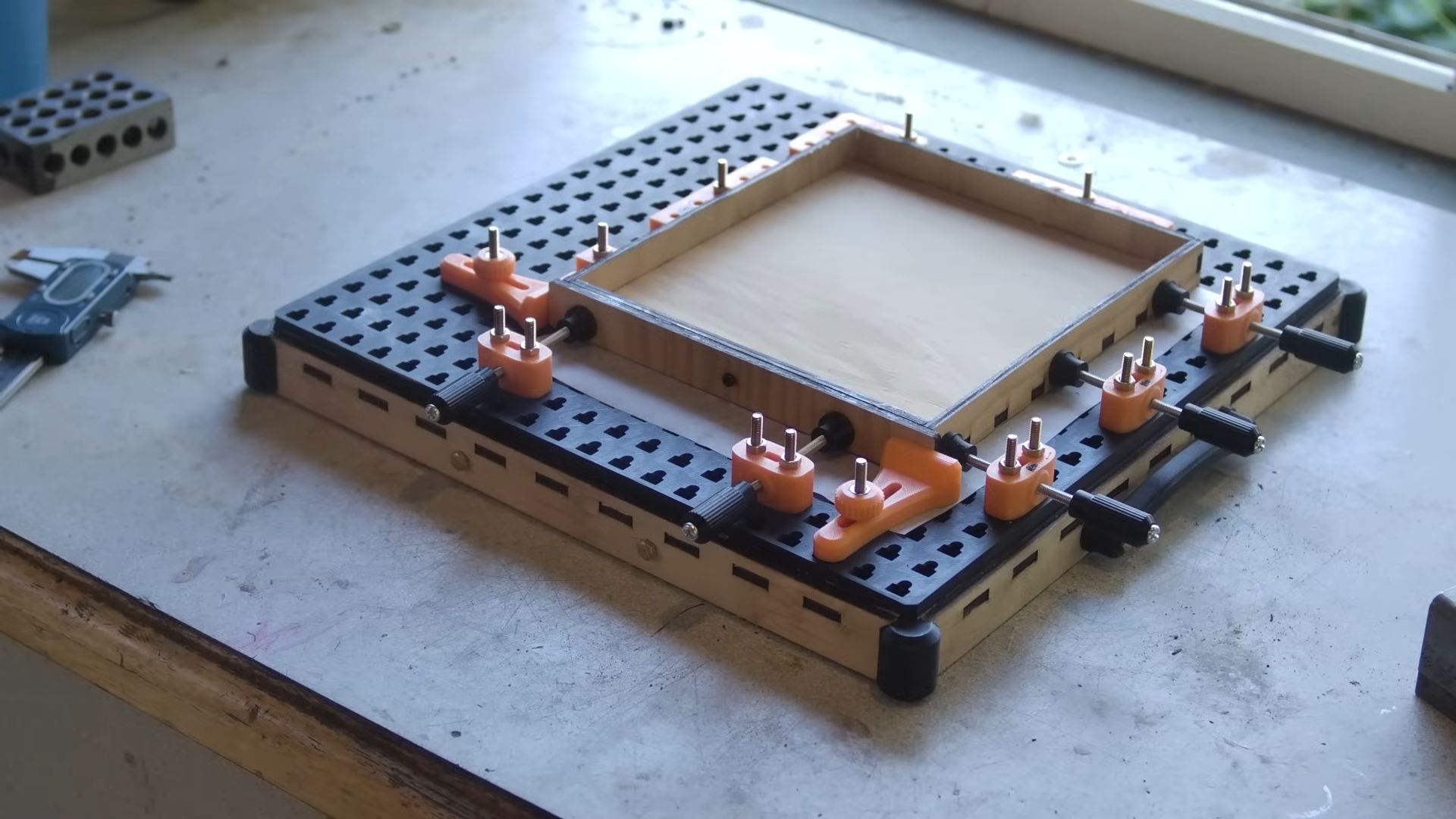

AI-generated images often appear flawless at first glance but contain subtle inconsistencies upon closer inspection. Watch for:

- Unnatural hand renderings (extra fingers or distorted proportions)
- Inconsistent lighting or shadows
- Peculiar background blurring or artefacts
- Symmetry that’s too perfect to be natural

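The Adobe-led Content Authenticity Initiative approaches the problem from a different angle: rather than looking for visual flaws, its Content Credentials tools attach and check provenance metadata that records how an image was created and edited.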

Generic Language Patterns

AI-written text often exhibits certain linguistic patterns:

- Overuse of specific transitional phrases (“furthermore,” “moreover”)
- Consistently perfect grammar without the natural irregularities of human writing
- Even tone throughout long pieces without the emotional fluctuations typical in human writing
- Generic perspectives that lack personal anecdotes or specific viewpoints

Tools like GPTZero can help detect these patterns in suspected AI-written content.
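To make the first of these patterns concrete, here is a minimal Python sketch that counts transitional phrases per sentence. It is a rough heuristic for illustration only, not how GPTZero or any other detector works; the phrase list and the function name `transition_rate` are assumptions, and a high score on its own proves nothing about authorship.

```python
import re

# Hypothetical phrase list for illustration; extend or replace as needed.
TRANSITIONS = ("furthermore", "moreover", "additionally", "in conclusion")


def transition_rate(text: str) -> float:
    """Return the number of transitional phrases per sentence (0.0 for empty text)."""
    # Naive sentence split on terminal punctuation; good enough for a sketch.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        return 0.0
    lowered = text.lower()
    hits = sum(
        len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
        for phrase in TRANSITIONS
    )
    return hits / len(sentences)


sample = "Furthermore, the results are clear. Moreover, they are robust. We agree."
print(round(transition_rate(sample), 2))
```

Comparing the score for a suspect post against a handful of posts you know were written by people gives a more useful signal than any fixed threshold.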

Contextual Inconsistencies

AI still struggles with certain contextual elements:

- Cultural references that seem slightly off or anachronistic
- Facts cited without specific sources
- Vague rather than specific examples
- Claims that seem plausible but lack verifiable details

Content at Scale’s AI detector specialises in identifying these contextual inconsistencies.

Response Patterns

When engaging with suspected AI accounts:

- Note robotic response times (too quick or mechanically regular)
- Watch for generic responses to specific questions
- Observe how accounts handle unexpected queries or challenges
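One way to put a number on "mechanically regular" reply timing is the coefficient of variation of the gaps between replies: values near zero mean the gaps are almost identical. The sketch below assumes you have reply timestamps in seconds; the function name `interval_variation` and the sample data are hypothetical, and scheduled posts from real people can also score low.

```python
from statistics import mean, pstdev
from typing import Optional, Sequence


def interval_variation(timestamps: Sequence[float]) -> Optional[float]:
    """Coefficient of variation of the gaps between consecutive replies.

    Returns None when there are fewer than three timestamps or the mean gap is zero.
    """
    if len(timestamps) < 3:
        return None
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        return None
    return pstdev(gaps) / average_gap


# Hypothetical reply times in seconds since the first message.
bot_like = [0, 30, 60, 90, 120]      # a reply exactly every 30 seconds
human_like = [0, 12, 95, 400, 460]   # irregular gaps

print(interval_variation(bot_like))    # identical gaps give 0.0
print(interval_variation(human_like))  # irregular gaps give a much larger value
```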

The Impact on Online Trust

The proliferation of AI-generated content fundamentally challenges how we establish truth and authenticity online. Traditional markers of credibility—well-written text, professional-looking images, consistent posting—can now be easily simulated.

“We’re entering an era where visual evidence, long considered the gold standard for verification, can no longer be trusted implicitly,” notes Professor Marcus Williams of the Oxford Internet Institute. “This requires a complete rethinking of digital literacy.”

The Center for Humane Technology has identified this trust crisis as one of the most significant challenges facing social media platforms today.

Developing Digital Self-Defence

As AI content becomes more prevalent, developing stronger critical thinking skills becomes essential:

Cross-Reference Information

Never rely on a single source. When encountering striking claims or images, seek verification from established, reputable sources before sharing.

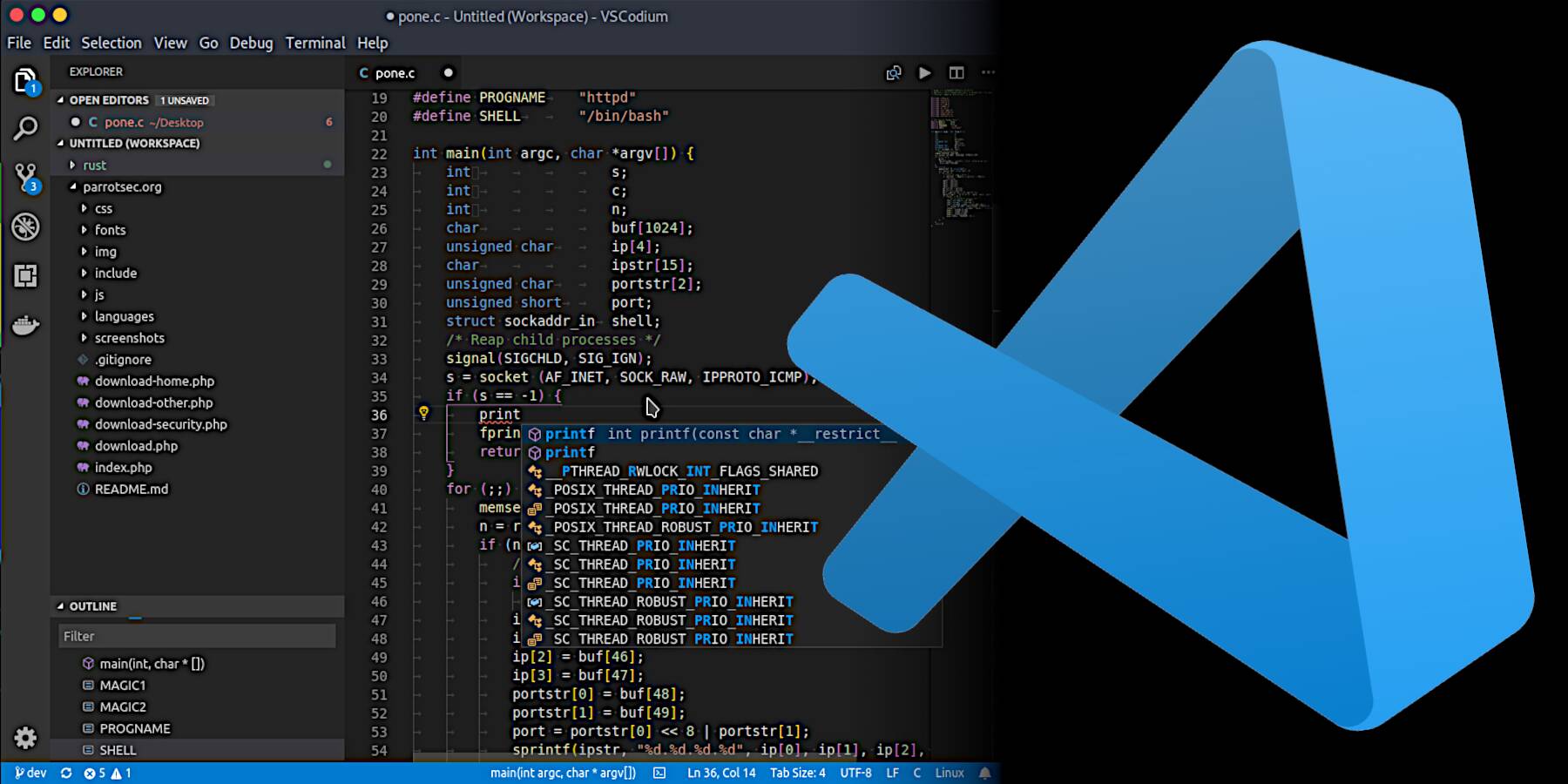

Use AI Detection Tools

Several AI content detection tools are now available to help identify computer-generated text and images. While not foolproof, they provide an additional layer of verification. Writer.com’s AI Content Detector and Originality.ai offer accessible options for general users.
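The "not foolproof" caveat can be made concrete with a little arithmetic. The sketch below applies Bayes' rule to estimate how likely a flagged item is to be AI-generated. The detector accuracy figures are invented for illustration and do not describe any real tool; the 18% prior simply borrows the share of viral content cited earlier in this article.

```python
def flagged_is_ai_probability(
    prior: float, true_positive_rate: float, false_positive_rate: float
) -> float:
    """Probability that a flagged item really is AI-generated (Bayes' rule)."""
    flagged_ai = true_positive_rate * prior
    flagged_human = false_positive_rate * (1 - prior)
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("the detector never flags anything with these rates")
    return flagged_ai / total_flagged


# Hypothetical detector: catches 90% of AI content, wrongly flags 10% of human content.
probability = flagged_is_ai_probability(
    prior=0.18, true_positive_rate=0.9, false_positive_rate=0.1
)
print(round(probability, 2))  # 0.66
```

Under these assumed numbers, roughly one flagged item in three would still be human-made, which is why a detector result works better as one input among several than as a verdict.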

Follow the Source Chain

Trace content back to its original source whenever possible. AI-generated content often lacks a clear provenance or appears simultaneously across multiple unrelated accounts.
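For anyone working with an export of posts, the "same text across multiple unrelated accounts" signal can be checked with a simple grouping step. This is a minimal sketch: the `(account, text)` input format, the function names, and the three-account threshold are assumptions, and it only catches near-verbatim copies, not paraphrases.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def normalise(text: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace."""
    without_punctuation = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(without_punctuation.split())


def shared_posts(
    posts: Iterable[Tuple[str, str]], min_accounts: int = 3
) -> Dict[str, List[str]]:
    """Map each normalised text to the accounts that posted it.

    Only texts posted by at least `min_accounts` distinct accounts are returned.
    """
    accounts_by_text = defaultdict(set)
    for account, text in posts:
        accounts_by_text[normalise(text)].add(account)
    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


# Hypothetical sample data.
posts = [
    ("@alpha", "Breaking: the bridge has collapsed!"),
    ("@bravo", "breaking the bridge has collapsed"),
    ("@charlie", "BREAKING: The bridge has collapsed."),
    ("@delta", "Lovely weather at the coast today."),
]
print(shared_posts(posts))
```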

Trust Verified Sources

Established news organisations and verified experts typically maintain rigorous fact-checking standards. The News Literacy Project provides excellent resources for evaluating source credibility in the age of AI.

The Path Forward

As AI content generation capabilities continue to advance, both technological and social responses will need to evolve:

Platform Responsibility

Major social media companies have begun implementing AI content labelling systems, though critics argue these efforts remain insufficient. Meta’s latest content policies require disclosure of digitally created or altered content, but enforcement remains challenging.

Regulatory Approaches

Governments worldwide are considering legislation requiring disclosure of AI-generated content. The European Union’s Digital Services Act now includes provisions specifically addressing synthetic media and its potential for manipulation.

Education and Awareness

Perhaps most important is widespread education about AI capabilities and limitations. Understanding how to critically evaluate digital content is becoming as essential as traditional literacy. UNESCO’s Media and Information Literacy curriculum offers valuable frameworks for developing these skills.

Conclusion

AI-generated content on social media represents both remarkable technological progress and significant social challenges. While the ability to create compelling media has never been more accessible, the same technologies enable manipulation at unprecedented scale.

By developing awareness of AI content patterns, maintaining healthy scepticism, and supporting transparency initiatives, users can navigate this new landscape more safely. The future of social media will likely involve a complex balance between leveraging AI’s creative potential and preserving authentic human connection and trustworthy information sharing.

As we move forward, the most valuable skill may be learning to ask not just whether content is engaging or persuasive, but whether it’s authentic—and increasingly, who or what created it.

For those looking to stay informed about these developments, the Poynter Institute’s MediaWise program provides regular updates and tools for identifying misleading content online.

We’d love your questions or comments on today’s topic!


Thought for the day:

“You will either step forward into growth, or you will step back into safety” Abraham Maslow

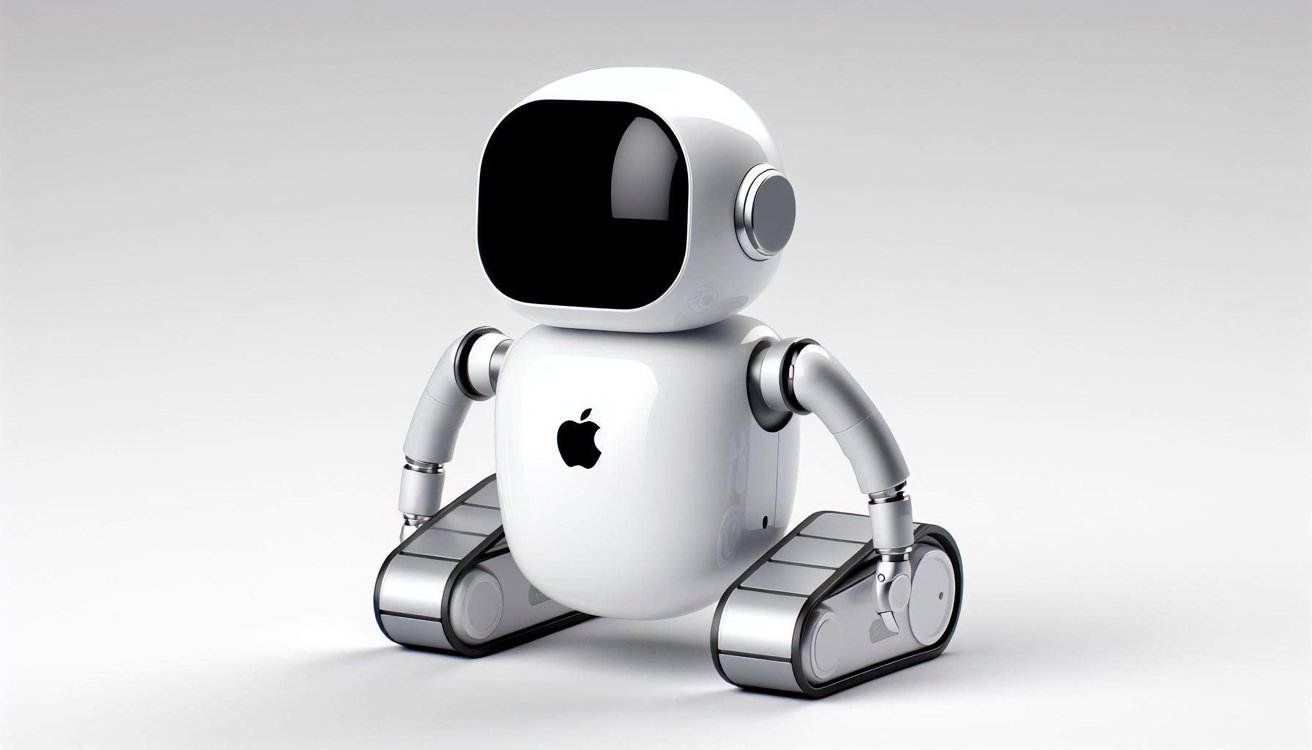
