How to Build an AI-Powered UI Components Generator in Minutes (LangGraph, GPT-4, & Stream Chat SDK)

This article will teach you how to build an AI-powered UI components generator. The generator enables you to generate UI components from a prompt.

Moreover, you will learn how to integrate an AI-powered chat interface using Stream Chat SDK that enables collaborative discussions about UI components and generates new UI component prompts.

In a nutshell, here is what the article will cover:

Understanding what AI agents are and the structure of a LangGraph AI agent in an application

Building the UI components generator backend using Python, LangGraph, and GPT-4

Building a Flask Web Server to handle Frontend requests and Backend responses in the UI components generator

Building the UI components generator frontend using Next.js, TypeScript, and TailwindCSS

Integrating AI-powered Stream Chat SDK to the UI Components Generator frontend

Here’s a preview of the application we’ll be building.

What are AI agents?

An AI agent is a system that uses artificial intelligence (AI) to perform defined tasks in an application.

In this case, an AI agent uses AI to generate clean, functional UI components using HTML and Tailwind CSS.

To build AI agents, you can use LangGraph, an open-source framework that enables developers to build, run, and manage AI agent workflows.

LangGraph is built on LangChain, a Python framework for building AI applications, and uses graph-based architecture to manage AI agent workflows.

Understanding the structure of LangGraph AI agents

Imagine a LangGraph AI agent as a car factory that builds cars based on customer-specific orders through an assembly line and delivers the orders as finished cars to the customer.

The assembly line comprises workstations with different tools, conveyor belts, a foreman, a mechanical engineer, and quality checkpoints.

Using the car factory analogy, here is how you can describe a LangGraph AI agent structure:

Customer Order (User Input): A customer order represents a user input, question, or task given to an AI agent. For example, a user input could be “Generate a contact form.”

Assembly Line (Graph): The assembly line represents the whole LangGraph setup, known as the Graph, where workstations are connected using conveyor belts.

In simple terms, a Graph can be described as a map or blueprint that defines how different parts of an AI agent talk to each other and work together.

Workstations (Nodes): The workstations represent Nodes. Each Node has a specific task, such as generating UI component code, and the tools it needs to carry that task out.

Conveyor belts (Edges): Conveyor belts represent Edges. Edges connect one Node to another where they move the user’s input between Nodes. In LangGraph, there are two types of edges: regular and conditional.

Regular edges connect one Node to another, while conditional edges redirect tasks based on the user’s input.

For example, the conditional edge redirects the order to the red seats workstation if the customer wants a car with red seats. Similarly, if the customer wants a car with blue seats, the order is redirected to the blue seats workstation.

Foreman (State): The foreman represents the AI agent state. The agent’s state follows the customer’s order to each workstation to ensure each workstation does its job and records changes made to the customer’s order in a notebook or clipboard.

Notebook (Memory): The notebook or clipboard represents the AI agent’s memory. The memory records tasks completed in each workstation and tasks yet to be completed.

Mechanical Engineer (Language Model): The mechanical engineer represents a language model (e.g., GPT-4). The language model acts as the brain of an AI agent.

Picture a language model as a highly skilled and creative expert who designs and builds the car part by part based on the customer’s order through each workstation.

Finished Car (Agent Output): The finished car represents the AI agent’s output, which is given to the user after completing tasks in all workstations.
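To make the analogy concrete, here is a minimal sketch of the same ideas in plain Python. It is a toy model, not LangGraph's API: the names NODES, EDGES, pick_seats, and run are hypothetical, and the state is just a dictionary that each workstation updates.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, List[str]]               # the foreman's clipboard
Node = Callable[[State], State]            # a workstation
Router = Callable[[State], Optional[str]]  # a conveyor belt; None means "finished"


def take_order(state: State) -> State:
    """Workstation that records the incoming order."""
    return {"log": state["log"] + ["order received"]}


def red_seats(state: State) -> State:
    return {"log": state["log"] + ["red seats fitted"]}


def blue_seats(state: State) -> State:
    return {"log": state["log"] + ["blue seats fitted"]}


def pick_seats(state: State) -> Optional[str]:
    """Conditional edge: inspect the order and choose the next workstation."""
    return "red_seats" if "red" in state["order"][0] else "blue_seats"


NODES: Dict[str, Node] = {
    "take_order": take_order,
    "red_seats": red_seats,
    "blue_seats": blue_seats,
}

EDGES: Dict[str, Router] = {
    "take_order": pick_seats,      # conditional edge
    "red_seats": lambda s: None,   # regular edge to the end
    "blue_seats": lambda s: None,  # regular edge to the end
}


def run(order: str) -> List[str]:
    """Move one order along the assembly line and return the work log."""
    state: State = {"order": [order], "log": []}
    current: Optional[str] = "take_order"
    while current is not None:
        update = NODES[current](state)    # the workstation does its job
        state = {**state, **update}       # the foreman records the change
        current = EDGES[current](state)   # the belt picks the next stop
    return state["log"]
```

Calling run("car with red seats") walks the order through take_order and then red_seats. LangGraph provides the same building blocks (nodes, edges, state) with persistence and tool support added on top.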

Prerequisites

To fully understand this tutorial, you need to have a basic understanding of React or Next.js.

We'll also make use of the following:

Python - a popular programming language for building AI agents with LangGraph; make sure it is installed on your computer.

LangGraph - a framework for creating and deploying AI agents. It also helps to define the control flows and actions to be performed by the agent.

OpenAI API Key - to enable us to perform various tasks using the GPT models; for this tutorial, ensure you have access to the GPT-4 model.

Tavily AI - a search engine that enables AI agents to conduct research and access real-time knowledge within the application.

Stream Chat SDK - Easy-to-use chat SDKs with the building blocks you need to create world-class in-app messaging experiences.

Ace Code Editor - an embeddable code editor written in JavaScript that matches the features and performance of native editors.

Note: The link to this project source code is provided at the end of this tutorial.

Building the UI components generator backend

In this section, you will learn how to build a UI components generator backend using Python, LangGraph, and GPT-4.

Using our car factory analogy, imagine this agent as a factory that builds UI components like forms, buttons, or navbars instead of cars. This factory takes user input like “Build me a contact form” and produces a custom UI component (the finished car).

To build the agent, follow the steps below.

Step 1: Setting up the Project

First, create a ui-components-generator folder. Then, create an agent folder in the ui-components-generator folder.

mkdir ui-components-generator

cd ui-components-generator

mkdir agent

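Then, navigate into the agent folder and use the commands below to create and activate the virtual environment. The activation command shown is for Windows; on macOS or Linux, run source venv/bin/activate instead.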

cd agent

python -m venv venv

.\venv\Scripts\activate

After that, install the required packages using pip. The python-dotenv package is included because agent.py loads the API keys from a .env file.

pip install -U langgraph langsmith tavily-python langchain_community langchain-openai python-dotenv

Before we continue, let’s discuss these packages. The LangGraph package provides a framework for building AI agents, while LangSmith provides development and debugging tools.

The Tavily Python package provides your AI agent with web search functionality, while the LangChain OpenAI package connects your AI agent to OpenAI’s language models.

The LangChain Community package provides pre-built tools to integrate with your AI agent to perform tasks.

Step 2: Importing the required Libraries

First, create a ui-components-generator/agent/agent.py file. Then, paste the code below into the file to import the necessary Python modules, libraries, and installed packages.

# Standard library imports for basic functionality

import getpass

import logging

import os

# Type hinting imports to improve code readability and catch errors

from typing import Annotated, Optional

from typing_extensions import TypedDict

# LangGraph and LangChain imports for building the AI workflow

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_openai import ChatOpenAI

from langchain_community.tools.tavily_search import TavilySearchResults

from langchain_core.messages import BaseMessage

from langgraph.prebuilt import ToolNode, tools_condition

from langgraph.checkpoint.memory import MemorySaver

# Environment variable management to load secrets from a .env file

from dotenv import load_dotenv

load_dotenv()

Step 3: Setting up the Environment Variables

Below the imports, define a function named _set_env that sets environment variables if missing.

# ...

def _set_env(var: str) -> None:
    """Set environment variable if not already set, prompting user for input."""
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")

Then, below the _set_env function, set the OpenAI API key (to use GPT language models) and Tavily API key (for search) using the _set_env function.

_set_env("OPENAI_API_KEY")

_set_env("TAVILY_API_KEY")

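After that, create a .env file in the agent folder and add your OpenAI and Tavily API keys to it. The load_dotenv() call at the top of agent.py reads this file, so _set_env only prompts for a key that is still missing.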

OPENAI_API_KEY=your_key

TAVILY_API_KEY=your_key
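Because _set_env prompts interactively, a missing key can leave a non-interactive process (such as a web server) waiting for input. As a sketch of an alternative, the hypothetical helper below only reports which keys are absent, so the caller can fail fast. The names REQUIRED_KEYS and missing_keys are assumptions for illustration and are not part of the tutorial's code.

```python
import os
from typing import Iterable, List, Mapping

# Keys the agent expects; adjust if your setup differs.
REQUIRED_KEYS = ("OPENAI_API_KEY", "TAVILY_API_KEY")


def missing_keys(
    required: Iterable[str], environ: Mapping[str, str] = os.environ
) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_keys(REQUIRED_KEYS)
    if missing:
        print("Missing environment variables:", ", ".join(missing))
```

Passing a plain dictionary as environ makes the check easy to try without touching your real environment.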

Step 4: Configure the Graph

Define a config dictionary that the graph's checkpointer uses to store and look up conversation state. The thread_id identifies the conversation thread, checkpoint_ns names the namespace the checkpoints are stored under, and checkpoint_id identifies a checkpoint within that thread. Reusing the same thread_id is what lets the AI agent remember the conversation across interactions.

CONFIG = {
    "configurable": {
        "thread_id": "1",
        "checkpoint_ns": "code_session",
        "checkpoint_id": "default"
    }
}
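To see why the thread_id matters, consider this toy stand-in for a checkpointer. It is only a sketch of the idea (history stored per thread), not how LangGraph's MemorySaver is implemented; the class name ToyCheckpointer and its append method are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List


class ToyCheckpointer:
    """Keeps one message history per thread_id (illustrative only)."""

    def __init__(self) -> None:
        self._threads: DefaultDict[str, List[str]] = defaultdict(list)

    def append(self, config: Dict[str, Dict[str, str]], message: str) -> List[str]:
        """Store a message under the config's thread and return that thread's history."""
        thread_id = config["configurable"]["thread_id"]
        self._threads[thread_id].append(message)
        return list(self._threads[thread_id])


if __name__ == "__main__":
    checkpointer = ToyCheckpointer()
    thread_one = {"configurable": {"thread_id": "1"}}
    thread_two = {"configurable": {"thread_id": "2"}}
    checkpointer.append(thread_one, "build a navbar")
    print(checkpointer.append(thread_one, "make it dark"))    # both messages
    print(checkpointer.append(thread_two, "build a footer"))  # separate history
```

Two requests that share a thread_id see the same history, while a different thread_id starts a fresh conversation.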

Step 5: Defining a System Prompt

Define a system prompt that determines the agent’s behavior and code generation rules. Think of the system prompt as a rulebook the AI agent follows.

SYSTEM_PROMPT = """

You are an expert coding assistant specializing in creating modern UI components. Your primary tasks are:

1. Generate clean, functional UI components using HTML and Tailwind CSS

2. Use best practices for responsive design and accessibility

3. Provide code as plain text without any markdown formatting or code blocks

When generating React UI components:

- IMPORTANT: ALWAYS use 'className' attribute instead of 'class' for CSS classes (React JSX syntax)

- Use Tailwind CSS classes for styling

- Ensure components are responsive across all device sizes (mobile, tablet, laptop, desktop)

- Include hover states, focus states, and smooth transitions where appropriate

- Use semantic HTML5 elements for better accessibility and SEO

- Add comprehensive accessibility attributes (ARIA labels, roles, etc.)

- Optimize for performance by keeping the DOM structure efficient

- Include comments to explain complex parts of the implementation

- Ensure all interactive elements are keyboard accessible

Example of CORRECT syntax:

<div className="flex items-center p-4 bg-blue-500">

<h1 className="text-2xl font-bold">Hello World</h1>

</div>

Example of INCORRECT syntax (do not use this):

<div class="flex items-center p-4 bg-blue-500">

<h1 class="text-2xl font-bold">Hello World</h1>

</div>

Provide only the raw code as plain text without any markdown formatting (no ``` marks), explanations, or additional commentary. Maintain a concise, professional approach focusing solely on delivering the component code.

If the user asks for a specific component type (navbar, card, form, etc.), tailor your response accordingly.

If the user mentions specific requirements for colors, spacing, or animations, incorporate those precisely.

"""

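Even with this instruction in the prompt, a model may occasionally wrap its answer in a markdown fence. As a defensive sketch, a small helper could strip a leading and trailing fence before the code reaches the frontend. The function name strip_code_fences is hypothetical and is not part of the tutorial's code.

```python
FENCE = "`" * 3  # three backticks, built this way to keep the listing readable


def strip_code_fences(text: str) -> str:
    """Remove one surrounding markdown fence, if present, and trim whitespace."""
    lines = text.strip().splitlines()
    if lines and lines[0].startswith(FENCE):  # opening fence, with or without a language tag
        lines = lines[1:]
    if lines and lines[-1].strip() == FENCE:  # closing fence
        lines = lines[:-1]
    return "\n".join(lines).strip()


if __name__ == "__main__":
    wrapped = FENCE + "html\n<div>Hi</div>\n" + FENCE
    print(strip_code_fences(wrapped))
```

Text that has no fence passes through unchanged apart from trimmed whitespace.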
Step 6: Defining the AI agent State

Define a State class that uses TypedDict to define the structure of the AI agent state. The class contains a messages field that uses the add_messages annotation, so new messages are appended to the existing list instead of overwriting it.

class State(TypedDict):
    """State definition for the conversation graph."""
    messages: Annotated[list, add_messages]

Think of the AI agent State as a container that holds everything the AI agent needs to know about the current conversation between it and the user.
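The effect of the add_messages annotation can be approximated in plain Python. The sketch below is a simplified stand-in, not LangGraph's implementation: it works on dictionaries, appends new messages, and replaces an existing message when the incoming one carries the same id. The name merge_messages is hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]


def merge_messages(existing: List[Message], new: List[Message]) -> List[Message]:
    """Append new messages; replace an existing one when the id matches."""
    merged = list(existing)  # copy so the caller's list is not mutated
    index_by_id = {m["id"]: i for i, m in enumerate(merged) if "id" in m}
    for message in new:
        message_id = message.get("id")
        if message_id is not None and message_id in index_by_id:
            merged[index_by_id[message_id]] = message  # same id: overwrite in place
        else:
            if message_id is not None:
                index_by_id[message_id] = len(merged)
            merged.append(message)                     # new id: append
    return merged


if __name__ == "__main__":
    history = [{"id": "1", "role": "user", "content": "Build a card"}]
    reply = [{"id": "2", "role": "assistant", "content": "<div>...</div>"}]
    print(merge_messages(history, reply))
```

This is why a node can return only its newest message: the reducer takes care of combining it with the history already held in the state.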

Step 7: Initializing the Graph

Initialize the state graph with the state structure defined above. The state graph defines how the AI agent processes inputs and generates outputs.

graph_builder = StateGraph(State)

Step 8: Setting up tools and the Language Model

Set up tools and language model for the AI agent using the code below.

tool = TavilySearchResults(max_results=2)

tools = [tool]

llm = ChatOpenAI(model="gpt-4-turbo")

llm_with_tools = llm.bind_tools(tools)

In the code above, a TavilySearchResults tool, which can search the web and return up to 2 results, is initialized and stored in a tools list.

After that, OpenAI’s GPT-4 Turbo language model is set up using the ChatOpenAI class. The language model is then connected to the search tool using the bind_tools() method.

Step 9: Creating the UI Components Agent Node

Define a UIComponentsAgent Node function that processes State and generates responses.

def UIComponentsAgent(state: State) -> dict:
    """Process the state and generate UI component response using LLM."""
    messages = state["messages"]
    # Messages in the state are message objects, so check .type rather than ["role"]
    if not messages or (len(messages) == 1 and messages[0].type == "human"):
        system_message = {"role": "system", "content": SYSTEM_PROMPT}
        messages = [system_message] + messages
    response = llm_with_tools.invoke(messages)
    return {"messages": [response]}

In the code above, the UIComponentsAgent function takes the current state (with its messages). If the state holds only the user's first message, the function prepends the SYSTEM_PROMPT to guide the AI agent.

After that, the function calls llm_with_tools.invoke(messages) to generate a response (e.g., the code for a UI component). Then, it returns a dictionary containing the new response, which is added to the state.

Think of the UIComponentsAgent function as the workstation in your AI agent where UI components are built.

Step 10: Adding Nodes and Edges to build the Graph

Build the graph using the code below, where the graph_builder.add_node() method adds the UIComponentsAgent node to the graph.

After that, ToolNode(tools=tools) creates a node that runs the search tool, and a second graph_builder.add_node() call adds that tool node to the graph.

graph_builder.add_node("UIComponentsAgent", UIComponentsAgent)

tool_node = ToolNode(tools=tools)

graph_builder.add_node("tools", tool_node)

Next, define edges to determine how the state graph flows between the nodes. Here, a conditional edge determines if the AI agent needs the search tool. If the agent needs the tool, it goes to the tools node. After using the tool, the AI agent returns to the UIComponentsAgent node.

graph_builder.add_conditional_edges(
    "UIComponentsAgent",
    tools_condition,
)

graph_builder.add_edge("tools", "UIComponentsAgent")

graph_builder.set_entry_point("UIComponentsAgent")

Finally, the set_entry_point() method sets the UIComponentsAgent node as the graph's entry point.
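The decision made by the conditional edge can be pictured as a small routing function. The sketch below is a simplified stand-in for tools_condition that works on plain dictionaries rather than LangChain message objects; the name route_after_agent is hypothetical.

```python
from typing import Any, Dict, List

END = "__end__"  # stand-in for the END marker imported from langgraph.graph


def route_after_agent(state: Dict[str, List[Dict[str, Any]]]) -> str:
    """Go to the tools node if the last message requests a tool, else finish."""
    last_message = state["messages"][-1]
    if last_message.get("tool_calls"):
        return "tools"
    return END


if __name__ == "__main__":
    wants_search = {
        "messages": [{"content": "", "tool_calls": [{"name": "tavily_search"}]}]
    }
    finished = {"messages": [{"content": "<form>...</form>", "tool_calls": []}]}
    print(route_after_agent(wants_search))  # routes to the tools node
    print(route_after_agent(finished))      # ends the run
```

When the model answers directly, the run ends; when it asks for a search, the graph visits the tools node and then loops back to the agent node through the regular edge.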

Step 11: Compiling the Graph

Initialize the agent’s memory and compile the state graph with memory enabled.

memory = MemorySaver()

graph = graph_builder.compile(checkpointer=memory)

Step 12: Generating a Visual Diagram of the Graph

Add the code below that generates a PNG using the graph.get_graph().draw_mermaid_png() method and saves the PNG as graph_visualization.png.

try:
    with open("graph_visualization.png", "wb") as f:  # Open file in binary write mode
        f.write(graph.get_graph().draw_mermaid_png())  # Save graph as Mermaid PNG
    print("Graph visualization saved as 'graph_visualization.png'")
except Exception as e:  # Handle missing dependencies
    print("Graph visualization skipped due to missing dependencies.")
    print(f"Error: {e}")

Step 13: Adding input to the AI agent and Streaming its responses

Define a stream_graph_updates function that takes user input, packages it with the system prompt, runs it through the graph, and returns a response.

def stream_graph_updates(user_input: str) -> Optional[str]:
    """Execute the graph: Stream updates based on user input."""
    input_data = {  # Package user input with the system prompt
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},  # Include system prompt
            {"role": "user", "content": user_input},  # Add user's request
        ]
    }
    response = None
    try:
        # CONFIG is passed as the second argument so the checkpointer knows the thread
        for event in graph.stream(input_data, CONFIG):  # Stream events from the graph
            for value in event.values():  # Process each event's values
                content = value["messages"][-1].content
                if content:  # Skip empty messages that only request a tool call
                    print("Agent:", content)  # Print latest message
                    response = content
        return response  # Return the last response once the graph has finished
    except Exception as e:  # Handle execution errors
        logging.error(f"Error in stream_graph_updates: {str(e)}")  # Log the error
        return "I apologize, but I encountered an error processing your request."
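Each event yielded by graph.stream() maps a node name to that node's state update. The sketch below imitates that shape with plain dictionaries to show how the final reply can be picked out when a tool call happens along the way. It is an illustration only: real events carry LangChain message objects with a .content attribute, and final_reply is a hypothetical helper.

```python
from typing import Any, Dict, Iterable, Optional

Event = Dict[str, Dict[str, Any]]  # {node_name: {"messages": [...]}}


def final_reply(events: Iterable[Event]) -> Optional[str]:
    """Return the last non-empty message content seen across all events."""
    reply = None
    for event in events:
        for update in event.values():
            content = update["messages"][-1]["content"]
            if content:  # tool-call requests have empty content
                reply = content
    return reply


if __name__ == "__main__":
    fake_events = [
        {"UIComponentsAgent": {"messages": [{"content": ""}]}},            # asks for a search
        {"tools": {"messages": [{"content": "search results"}]}},          # tool output
        {"UIComponentsAgent": {"messages": [{"content": "<nav>...</nav>"}]}},  # final code
    ]
    print(final_reply(fake_events))
```

Waiting for the stream to finish matters because the first event may only be a request to run the search tool, not the generated component.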

Step 14: Testing the AI Agent

For testing purposes, define an optional loop that reads user input, passes it to the stream_graph_updates function, and keeps running until the user types quit, exit, or q, or presses Ctrl+C.

Note: The loop sits under an if __name__ == "__main__": guard, so it runs only when you execute agent.py directly and is skipped when the Flask web server imports agent.py in the next section.

if __name__ == "__main__":
    while True:
        try:
            user_input = input("User: ")  # Get user input
            if user_input.lower() in ["quit", "exit", "q"]:  # Check for exit commands
                print("Goodbye!")
                break
            stream_graph_updates(user_input)  # Process input and print response
        except (KeyboardInterrupt, EOFError):  # Exit on Ctrl+C or end of input
            break

Then, run the command below on the command line to test the agent.

python agent.py

Once the command completes running, an image that visualizes the ui-components-generator LangGraph AI agent should be generated and saved in the agent folder, as shown below.

After that, type “build for me a contact form” at the User: prompt in the command line. The agent should respond with the code for a contact form, as shown below.

I hope you have seen how simple it is to build a LangGraph AI agent. Next, you will see how to build a Flask web server that handles frontend requests and the AI agent responses.

Building a Flask Web Server to handle Frontend requests and Backend responses

In this section, you will learn how to build a Flask web server that sets up a simple API to handle the UI components generator's frontend requests and backend responses.

Without wasting much time, let’s get started.

First, install the Flask and Flask-CORS packages using the command below.

pip install flask flask-cors

In the agent folder, create an app.py file and import the necessary packages into the file.

# Import Flask tools for building a web server

from flask import Flask, request, jsonify

# Import the AI agent's processing function from agent.py

from agent import stream_graph_updates

# Import logging to track errors and debug info

import logging

# Import CORS to allow cross-origin requests

from flask_cors import CORS

Then, set up the Flask web server by creating a Flask app instance and enabling CORS (Cross-Origin Resource Sharing) for the app. CORS lets the browser-based frontend, which is served from a different origin, call this API.

# Configure logging to show detailed messages

logging.basicConfig(level=logging.DEBUG)

# Create a Flask app instance

app = Flask(__name__)

# Enable CORS for the app

CORS(app)

Next, define a chat API endpoint that handles frontend requests and backend responses.

# Define a route for handling chat requests

@app.route('/chat', methods=['POST'])
def chat():
    try:
        # Extract the user's message from the request payload
        user_message = request.json['message']
        # Process the message using the stream_graph_updates function
        response = stream_graph_updates(user_message)
        # Check if the response is valid; return an error if not
        if response is None:
            return jsonify({
                'error': 'No response generated'
            }), 500
        # Return the generated response as a JSON object
        return jsonify({
            'response': response
        })
    except Exception as e:
        # Log any errors that occur during request processing
        logging.error(f"Error in chat endpoint: {str(e)}")
        # Return a generic error message to the client
        return jsonify({
            'error': 'An error occurred processing your request'
        }), 500

After that, add the code snippet below that starts the Flask web server and listens to requests on port 5000.

# Check if the script is run directly (not imported)
if __name__ == '__main__':
    # Start the Flask server
    app.run(host='127.0.0.1', port=5000, debug=True)

Finally, launch the Flask web server using the command below.

python app.py
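With the server running, you can exercise the /chat endpoint from a second terminal before building the frontend. The sketch below uses only Python's standard library and assumes the server is listening on 127.0.0.1:5000 as configured above. The helper names build_chat_request and ask_agent are hypothetical.

```python
import json
import urllib.request

CHAT_URL = "http://127.0.0.1:5000/chat"  # matches the host and port passed to app.run()


def build_chat_request(message: str, url: str = CHAT_URL) -> urllib.request.Request:
    """Build the JSON POST request that the /chat endpoint expects."""
    body = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_agent(message: str) -> str:
    """Send the request and return the 'response' field (requires a running server)."""
    with urllib.request.urlopen(build_chat_request(message)) as reply:
        return json.loads(reply.read())["response"]


if __name__ == "__main__":
    print(ask_agent("Build a contact form"))
```

The request body mirrors what the frontend sends later in this tutorial: a JSON object with a single message field.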

As simple as that, you have built a web server that connects the front end to the back end. Next, let us build the UI components generator frontend.

Building the UI Components Generator Frontend

This section will teach you how to build the UI components generator frontend using Next.js, TypeScript, and Tailwind CSS. This will enable you to preview the generated UI components and edit their code.

To get started, follow the steps below.

Step 1: Setting up the Frontend

In the ui-components-generator folder, create a Next.js app using the command below.

npx create-next-app@latest client

Then, select your preferred configuration settings. For this tutorial, we'll be using TypeScript and Next.js App Router.

Next, navigate to the ui-components-generator/client folder and use the command below to set up shadcn/ui components.

npx shadcn@latest init

Then install the required shadcn/ui components using the command below.

npx shadcn@latest add button input tabs scroll-area

After that, install other necessary UI dependencies such as React Ace and HTML React Parser. React Ace is an embeddable code editor, while HTML React Parser converts an HTML string to one or more React elements.

npm install react-ace ace-builds html-react-parser

Step 2: Creating the Components Preview component

In the components folder, create a ComponentPreview component that displays a preview of UI components code generated by the LangGraph AI agent. To create the component, create a src/app/components/component-preview.tsx file and paste the following code into the file:

"use client";

import { useEffect, useState } from "react";

import { Loader2 } from "lucide-react";

import parse from "html-react-parser";

interface ComponentPreviewProps {

code: string;

}

export default function ComponentPreview({ code }: ComponentPreviewProps) {

const [loading, setLoading] = useState(false);

const [error, setError] = useState<string | null>(null);

useEffect(() => {

if (!code) {

setError(null);

return;

}

setLoading(true);

setError(null);

// Simulate loading time

const timer = setTimeout(() => {

setLoading(false);

}, 1000);

return () => clearTimeout(timer);

}, [code]);

if (loading) {

return (

<div className="flex items-center justify-center h-full">

<Loader2 className="h-8 w-8 animate-spin text-primary" />

</div>

);

}

if (error) {

return (

<div className="flex items-center justify-center h-full text-destructive">

{error}

</div>

);

}

if (!code) {

return (

<div className="flex items-center justify-center h-full text-muted-foreground bg-gray-900">

Component preview will appear here

</div>

);

}

return <div className="p-4 h-[400px] overflow-auto">{parse(code)}</div>;

}

Step 3: Creating the Code Display component

Create a CodeDisplay component that displays and edits code generated by the LangGraph AI agent using the AceEditor library. To create the component, create a src/app/components/code-display.tsx file and paste the following code into the file:

"use client";

import { useEffect, useState } from "react";

import { Button } from "@/components/ui/button";

import { Clipboard, Check } from "lucide-react";

import dynamic from "next/dynamic";

// Dynamically import AceEditor with no SSR

const AceEditor = dynamic(

async () => {

const ace = await import("react-ace");

await import("ace-builds/src-noconflict/mode-javascript");

await import("ace-builds/src-noconflict/theme-monokai");

return ace;

},

{ ssr: false }

);

interface CodeDisplayProps {

code: string;

setGeneratedCode: (code: string) => void;

}

export default function CodeDisplay({

code,

setGeneratedCode,

}: CodeDisplayProps) {

const [copied, setCopied] = useState(false);

const [editorHeight, setEditorHeight] = useState("400px");

const [editorLoaded, setEditorLoaded] = useState(false);

useEffect(() => {

setEditorLoaded(true);

}, []);

useEffect(() => {

if (copied) {

const timeout = setTimeout(() => setCopied(false), 2000);

return () => clearTimeout(timeout);

}

}, [copied]);

const copyToClipboard = () => {

navigator.clipboard.writeText(code); // copy the raw code; JSON.stringify would add quotes and escape newlines

setCopied(true);

};

const handleResize = (newHeight: string) => {

setEditorHeight(newHeight);

};

const handleCodeChange = (newCode: string) => {

setGeneratedCode(newCode);

};

return (

<div className="relative border rounded-lg overflow-hidden">

<div className=" p-2 flex justify-between items-center">

<span className="text-lg font-medium mb-2 ">Generated Code</span>

<Button

variant="outline"

size="sm"

className="h-8 w-8 p-0 bg-gray-700 hover:bg-gray-600"

onClick={copyToClipboard}>

{copied ? (

<Check className="h-4 w-4 text-green-500" />

) : (

<Clipboard className="h-4 w-4 text-white" />

)}

</Button>

</div>

{editorLoaded && code ? (

<AceEditor

mode="javascript"

theme="monokai"

name="code-editor"

editorProps={{ $blockScrolling: true }}

value={code}

onChange={handleCodeChange}

width="100%"

height={editorHeight}

setOptions={{

showPrintMargin: false,

highlightActiveLine: false,

}}

style={{ resize: "vertical" }}

onLoad={(editor) => {

editor.renderer.setScrollMargin(10, 10, 0, 0);

editor.renderer.setPadding(10);

}}

/>

) : (

<div className="flex items-center justify-center h-[400px] bg-gray-900 text-gray-400">

{code ? "Loading editor..." : "Generated code will appear here"}

</div>

)}

<div

className="h-2 bg-gray-700 cursor-ns-resize"

onMouseDown={(e) => {

const startY = e.clientY;

const startHeight = Number.parseInt(editorHeight);

const onMouseMove = (e: MouseEvent) => {

const newHeight = startHeight + e.clientY - startY;

handleResize(`${newHeight}px`);

};

const onMouseUp = () => {

document.removeEventListener("mousemove", onMouseMove);

document.removeEventListener("mouseup", onMouseUp);

};

document.addEventListener("mousemove", onMouseMove);

document.addEventListener("mouseup", onMouseUp);

}}

/>

</div>

);

}

Step 4: Creating the Version Manager component

Create a VersionManager component that manages generated code versions, allowing users to save new versions and switch between existing ones. To create the component, create a src/app/components/version-manager.tsx file and paste the following code into the file:

"use client";

import { useState } from "react";

import { Button } from "@/components/ui/button";

import { Input } from "@/components/ui/input";

import { ScrollArea } from "@/components/ui/scroll-area";

interface Version {

id: string;

label: string;

code: string;

timestamp: Date;

}

interface VersionManagerProps {

versions: Version[];

currentVersionId: string | null;

onVersionChange: (versionId: string) => void;

onSaveVersion: (label: string) => void;

}

export default function VersionManager({

versions,

currentVersionId,

onVersionChange,

onSaveVersion,

}: VersionManagerProps) {

const [newVersionLabel, setNewVersionLabel] = useState("");

const handleSaveVersion = () => {

if (newVersionLabel.trim()) {

onSaveVersion(newVersionLabel.trim());

setNewVersionLabel("");

}

};

return (

<div className="h-full flex flex-col">

<div className="flex items-center space-x-2 mb-4">

<Input

placeholder="New version label"

value={newVersionLabel}

onChange={(e) => setNewVersionLabel(e.target.value)}

/>

<Button onClick={handleSaveVersion}>Save Version</Button>

</div>

<ScrollArea className="flex-grow">

{versions.map((version) => (

<div

key={version.id}

className={`p-4 border-b cursor-pointer ${

version.id === currentVersionId

? "bg-primary/10"

: "hover:bg-secondary/50"

}`}

onClick={() => onVersionChange(version.id)}>

<h3 className="font-medium">{version.label}</h3>

<p className="text-sm text-muted-foreground">

{version.timestamp.toLocaleString()}

</p>

</div>

))}

</ScrollArea>

</div>

);

}
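
The save-and-switch behavior of the version manager comes down to two small operations on an array of versions. As a minimal sketch, they can be written as pure functions outside React state, which makes them easy to unit test. The names `appendVersion` and `findVersion` are hypothetical and not part of the project; the `Version` shape mirrors the interface used in the component.

```typescript
// Mirrors the Version interface used by VersionManager.
interface Version {
  id: string;
  label: string;
  code: string;
  timestamp: Date;
}

// Hypothetical helper: returns a new array with one more version appended.
// The id is derived from the timestamp, as in the article's components.
function appendVersion(
  versions: Version[],
  label: string,
  code: string,
  now: Date = new Date()
): Version[] {
  const trimmed = label.trim();
  if (!trimmed) return versions; // ignore empty labels, as the Save Version button does
  const version: Version = {
    id: now.getTime().toString(),
    label: trimmed,
    code,
    timestamp: now,
  };
  return [...versions, version];
}

// Hypothetical helper: looks up the version a user clicked on.
function findVersion(versions: Version[], id: string): Version | undefined {
  return versions.find((v) => v.id === id);
}

// Usage
const versionHistory = appendVersion([], "  First draft ", "<button>Hi</button>", new Date(0));
const selectedVersion = findVersion(versionHistory, "0");
console.log(selectedVersion?.label); // First draft
```

Because the functions never mutate their input, they can be passed directly to a state updater such as `setHistory((prev) => appendVersion(prev, label, code))`.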

Step 5: Creating the Component Generator component

Create a ComponentGenerator component that generates UI component code based on user prompts via an API call to the backend. Then, import the ComponentPreview, CodeDisplay, and VersionManager components to preview, edit, and manage versions of the generated code. To create the component, create a src/app/components/component-generator.tsx file and paste the following code into the file:

"use client";

import { useState } from "react";

import { Button } from "@/components/ui/button";

import { Tabs, TabsContent, TabsList, TabsTrigger } from "@/components/ui/tabs";

import { Loader2 } from "lucide-react";

import CodeDisplay from "./code-display";

import ComponentPreview from "./component-preview";

import { Input } from "@/components/ui/input";

import VersionManager from "./version-manager";

interface Version {

id: string;

label: string;

code: string;

timestamp: Date;

}

export default function ComponentGenerator() {

const [prompt, setPrompt] = useState("");

const [generatedCode, setGeneratedCode] = useState(``);

const [isGenerating, setIsGenerating] = useState(false);

const [activeTab, setActiveTab] = useState("split");

const [history, setHistory] = useState<Version[]>([]);

const [currentVersionId, setCurrentVersionId] = useState<string | null>(null);

const handleGenerate = async () => {

if (!prompt.trim()) return;

setIsGenerating(true);

setTimeout(async () => {

const userMessage = prompt.trim();

const response = await fetch("http://localhost:5000/chat", {

method: "POST",

headers: {

"Content-Type": "application/json",

},

body: JSON.stringify({ message: userMessage }),

});

const data = await response.json();

const formattedResponse = data.response.replace(/\\n/g, "\n").trim();

const newVersion: Version = {

id: Date.now().toString(),

label: `Version ${history.length + 1}`,

code: formattedResponse,

timestamp: new Date(),

};

setGeneratedCode(formattedResponse);

setHistory((prevHistory) => [...prevHistory, newVersion]);

setCurrentVersionId(newVersion.id);

setIsGenerating(false);

}, 1500);

};

const handleKeyPress = (e: React.KeyboardEvent<HTMLInputElement>) => {

if (e.key === "Enter") {

handleGenerate();

}

};

const handleVersionChange = (versionId: string) => {

const version = history.find((v) => v.id === versionId);

if (version) {

setGeneratedCode(version.code);

setCurrentVersionId(version.id);

}

};

const handleSaveVersion = (label: string) => {

const newVersion: Version = {

id: Date.now().toString(),

label,

code: generatedCode,

timestamp: new Date(),

};

setHistory((prevHistory) => [...prevHistory, newVersion]);

setCurrentVersionId(newVersion.id);

};

return (

<div className="flex flex-col h-[calc(100vh-200px)] min-h-[600px]">

<div className="flex-1 mb-4">

<Tabs value={activeTab} onValueChange={setActiveTab} className="h-full">

<div className="flex justify-between items-center mb-4">

<TabsList>

<TabsTrigger value="split">Split View</TabsTrigger>

<TabsTrigger value="preview">Preview</TabsTrigger>

<TabsTrigger value="code">Code</TabsTrigger>

<TabsTrigger value="history">History</TabsTrigger>

</TabsList>

</div>

<div className="h-[calc(100%-40px)]">

<TabsContent value="split" className="h-full m-0">

<div className="grid grid-cols-1 md:grid-cols-2 gap-4 h-full">

<div className="border rounded-lg h-full overflow-hidden">

<h2 className="text-lg font-medium mb-2 p-2">Preview</h2>

<ComponentPreview code={generatedCode} />

</div>

<div className="h-full bg-background overflow-hidden">

<CodeDisplay

code={generatedCode}

setGeneratedCode={setGeneratedCode}

/>

</div>

</div>

</TabsContent>

<TabsContent value="preview" className="h-full m-0">

<div className="border rounded-lg p-4 h-full bg-background overflow-auto">

<ComponentPreview code={generatedCode} />

</div>

</TabsContent>

<TabsContent value="code" className="h-full m-0">

<div className="h-full bg-background overflow-hidden">

<CodeDisplay

code={generatedCode}

setGeneratedCode={setGeneratedCode}

/>

</div>

</TabsContent>

<TabsContent value="history" className="h-full m-0">

<VersionManager

versions={history}

currentVersionId={currentVersionId}

onVersionChange={handleVersionChange}

onSaveVersion={handleSaveVersion}

/>

</TabsContent>

</div>

</Tabs>

</div>

<div className="border rounded-lg p-4 bg-background">

<div className="flex space-x-2">

<Input

placeholder="Describe the UI component..."

value={prompt}

onChange={(e) => setPrompt(e.target.value)}

onKeyDown={handleKeyPress}

className="flex-grow"

/>

<Button

onClick={handleGenerate}

disabled={!prompt.trim() || isGenerating}

className="min-w-[120px] whitespace-nowrap">

{isGenerating ? (

<>

<Loader2 className="mr-2 h-4 w-4 animate-spin" />

Generating...

</>

) : (

"Generate"

)}

</Button>

</div>

</div>

</div>

);

}
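
The handleGenerate function posts the prompt to the Flask /chat endpoint and converts literal `\n` sequences in the reply into real newlines. The sketch below splits that flow into small functions and adds the error checks the component leaves out, so a failed request cannot leave the Generate button stuck in its loading state. The names `buildChatRequest`, `parseChatResponse`, `requestComponent`, and `FetchLike` are hypothetical, and the sketch assumes the backend replies with JSON of the form `{ response: string }`, which is what the component reads from `data.response`.

```typescript
// Minimal structural type for the parts of fetch this sketch uses,
// so the example does not depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Builds the same POST payload that handleGenerate sends.
function buildChatRequest(prompt: string) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ message: prompt.trim() }),
  };
}

// Validates the response body and turns literal "\n" sequences into newlines.
function parseChatResponse(data: unknown): string {
  const response = (data as { response?: unknown } | null)?.response;
  if (typeof response !== "string") {
    throw new Error("Unexpected response shape from /chat");
  }
  return response.replace(/\\n/g, "\n").trim();
}

// Hypothetical wrapper: throws on HTTP errors or malformed bodies so the
// caller can reset its loading state in a finally block.
async function requestComponent(
  prompt: string,
  fetchImpl: FetchLike,
  url = "http://localhost:5000/chat"
): Promise<string> {
  const res = await fetchImpl(url, buildChatRequest(prompt));
  if (!res.ok) throw new Error(`Request failed with status ${res.status}`);
  return parseChatResponse(await res.json());
}
```

Inside the component, the call could be wrapped as `try { ... await requestComponent(prompt, fetch) ... } finally { setIsGenerating(false); }`, which resets the spinner whether or not the request succeeds.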

Step 6: Rendering the Components Generator component

In the src/app/page.tsx file, add the following code that renders the ComponentGenerator component.

import ComponentGenerator from "./components/component-generator";

export default function Home() {

return (

<main className="min-h-screen p-4 md:p-8">

<div className="max-w-7xl mx-auto">

<h1 className="text-3xl font-bold mb-6">

AI-Powered UI Component Generator

</h1>

<p className="text-muted-foreground mb-8">

Enter a prompt to generate custom UI components with AI

</p>

<ComponentGenerator />

</div>

</main>

);

}

Step 7: Testing the Frontend

Start the development server using the command below, then navigate to http://localhost:3000/ or the URL displayed in your terminal.

npm run dev

Now, you should view the UI components generator frontend on your browser, as shown below.

To generate UI components, make sure the Flask web server is running. Then, type a prompt such as “Generate a contact form” into the generator input field and press Enter.

Once the UI component is generated, you should see a preview of the generated code, as shown below.

Now that we have a working UI components generator, let's integrate the Stream Chat SDK to enable collaboration and prompt generation.

Integrating Stream Chat SDK to the UI Components Generator Frontend

This section will teach you how to integrate the Stream Chat SDK into the UI components generator. This will enable you to generate prompts, collaborate, and discuss generated UI components.

To get started, follow the steps below.

Step 1: Setting up Stream Chat SDK

In the client folder, install Stream Chat SDK packages using the command below.

npm i stream-chat stream-chat-react

Then, go to the Stream signup page and create a new account.

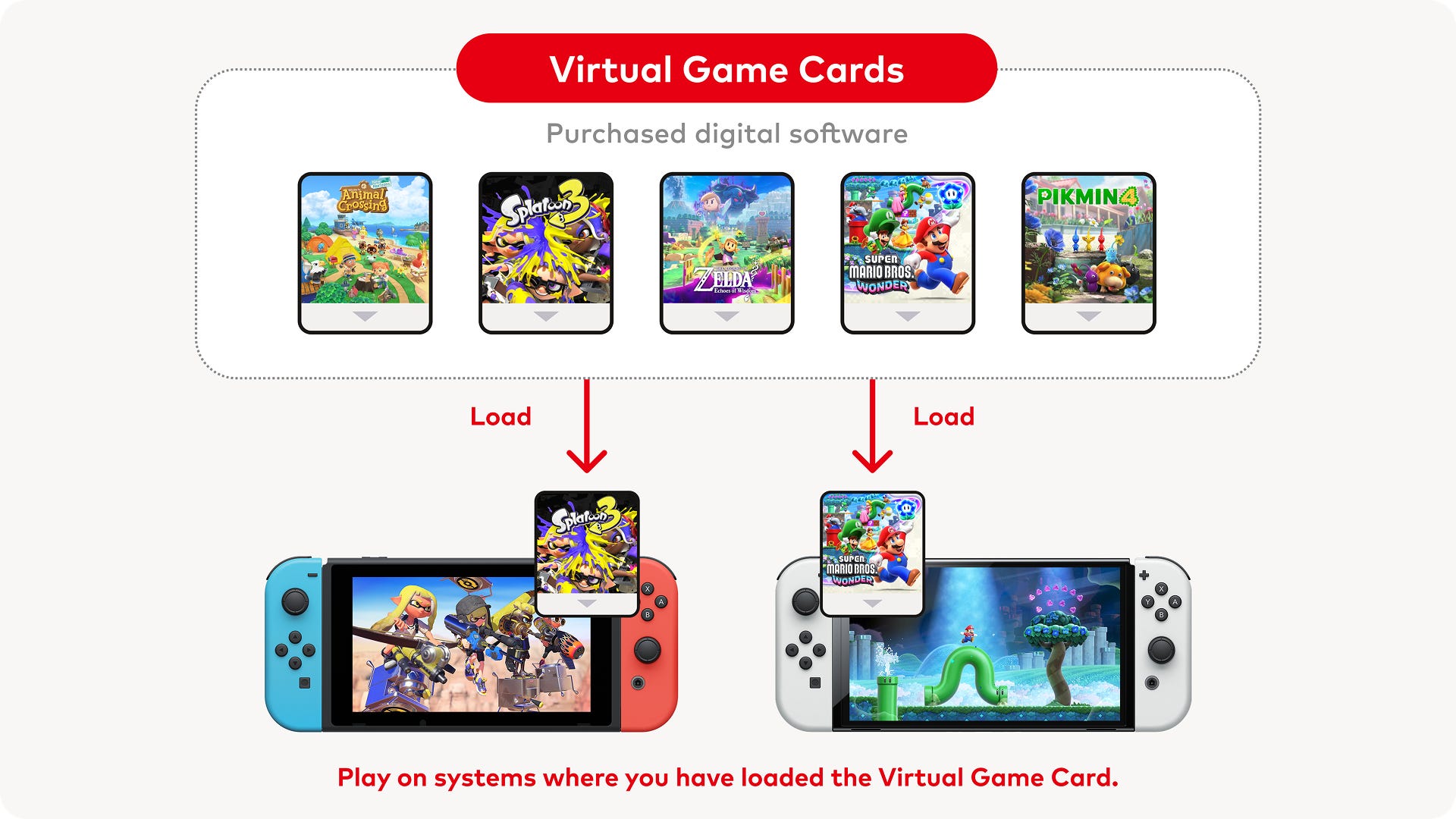

Next, create a new app in the Stream Dashboard after signing up, as shown below.

Step 2: Setting up the Environment Variables

Create a .env.local file and add your Stream credentials. Navigate to the Stream docs to learn how to get the API key and tokens.

NEXT_PUBLIC_STREAM_API_KEY=your-api-key

STREAM_APP_SECRET=your-app-secret

NEXT_PUBLIC_USER_ID=test-user

NEXT_PUBLIC_USER_TOKEN=your-user-token
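
The components in the following steps read these variables with non-null assertions (`!`), which satisfies TypeScript but gives a confusing runtime error if a value is missing. A minimal sketch of a guard is shown below; `requireEnvValue` is a hypothetical helper, not part of the project or of the Stream SDK. It takes the value rather than the variable name because Next.js only inlines NEXT_PUBLIC_ variables into the browser bundle when they are referenced as literal `process.env.NEXT_PUBLIC_...` expressions.

```typescript
// Hypothetical helper: returns the value or throws a descriptive error.
function requireEnvValue(value: string | undefined, name: string): string {
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// Usage inside a component file. Pass the literal process.env expression so
// Next.js can inline the value into the client bundle:
//
// const apiKey = requireEnvValue(
//   process.env.NEXT_PUBLIC_STREAM_API_KEY,
//   "NEXT_PUBLIC_STREAM_API_KEY"
// );
```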

Step 3: Creating the useWatchers custom hook

Create a custom React hook called useWatchers that manages a list of "watchers" (users who are actively watching a chat channel) using the Stream Chat API.

The hook fetches the initial list of watchers, tracks real-time updates when users start or stop watching, and manages loading and error states.

To create the hook, create a src/lib/useWatchers.ts file and paste the following code into the file:

import { useCallback, useEffect, useState } from 'react';

import { Channel } from 'stream-chat';

export const useWatchers = ({ channel }: { channel: Channel }) => {

const [watchers, setWatchers] = useState<string[] | undefined>(undefined);

const [loading, setLoading] = useState(false);

const [error, setError] = useState<Error | null>(null);

const queryWatchers = useCallback(async () => {

setLoading(true);

setError(null);

try {

const result = await channel.query({ watchers: { limit: 5, offset: 0 } });

setWatchers(result?.watchers?.map((watcher) => watcher.id));

} catch (err) {

console.error('An error has occurred while querying watchers: ', err);

setError(err as Error);

} finally {

// Reset the loading flag on both success and failure.

setLoading(false);

}

}, [channel]);

useEffect(() => {

queryWatchers();

}, [queryWatchers]);

useEffect(() => {

const watchingStartListener = channel.on('user.watching.start', (event) => {

const userId = event?.user?.id;

if (userId && userId.startsWith('ai-bot')) {

setWatchers((prevWatchers) => [

userId,

...(prevWatchers || []).filter((watcherId) => watcherId !== userId),

]);

}

});

const watchingStopListener = channel.on('user.watching.stop', (event) => {

const userId = event?.user?.id;

if (userId && userId.startsWith('ai-bot')) {

setWatchers((prevWatchers) =>

(prevWatchers || []).filter((watcherId) => watcherId !== userId)

);

}

});

return () => {

watchingStartListener.unsubscribe();

watchingStopListener.unsubscribe();

};

}, [channel]);

return { watchers, loading, error };

};
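
The two event handlers in the hook update the watcher list in a specific way: a start event moves the user id to the front of the list without duplicating it, and a stop event removes it. The sketch below isolates those updates as pure functions so the behavior can be checked without a Stream connection. The names `isAiBot`, `addWatcher`, and `removeWatcher` are hypothetical.

```typescript
// Only AI agents are tracked by the event handlers; the hook identifies
// them by an "ai-bot" id prefix.
const isAiBot = (userId: string | undefined): userId is string =>
  typeof userId === "string" && userId.startsWith("ai-bot");

// Mirrors the user.watching.start handler: id goes first, no duplicates.
function addWatcher(prev: string[] | undefined, userId: string): string[] {
  return [userId, ...(prev ?? []).filter((id) => id !== userId)];
}

// Mirrors the user.watching.stop handler: drop the id if present.
function removeWatcher(prev: string[] | undefined, userId: string): string[] {
  return (prev ?? []).filter((id) => id !== userId);
}

// Usage
let watcherIds: string[] | undefined = undefined;
watcherIds = addWatcher(watcherIds, "ai-bot-1");
watcherIds = addWatcher(watcherIds, "ai-bot-2");
watcherIds = addWatcher(watcherIds, "ai-bot-1"); // already present, moves to the front
console.log(watcherIds); // ["ai-bot-1", "ai-bot-2"]
watcherIds = removeWatcher(watcherIds, "ai-bot-1");
console.log(watcherIds); // ["ai-bot-2"]
```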

Step 4: Creating the MyChannelHeader Component

Create a MyChannelHeader component that renders a channel header for the chat interface using the Stream Chat library.

The component displays the channel name and a button to add or remove an AI agent from the channel based on whether an AI is currently watching the channel.

Moreover, the component uses the useWatchers custom hook to track watchers and makes API calls to control the AI agent's presence.

To create the component, create a src/app/components/MyChannelHeader.tsx file and paste the following code into the file:

import { useChannelStateContext } from "stream-chat-react";

import { useWatchers } from "@/lib/useWatchers";

export default function MyChannelHeader() {

const { channel } = useChannelStateContext();

const { watchers } = useWatchers({ channel });

const aiInChannel =

(watchers ?? []).filter((watcher) => watcher.includes("ai-bot")).length > 0;

return (

<div className="my-channel-header">

<h2>{channel?.data?.name ?? "Chat with an AI"}</h2>

<button onClick={addOrRemoveAgent}>

{aiInChannel ? "Remove AI" : "Add AI"}

</button>

</div>

);

async function addOrRemoveAgent() {

if (!channel) return;

const endpoint = aiInChannel ? "stop-ai-agent" : "start-ai-agent";

await fetch(`http://127.0.0.1:3000/${endpoint}`, {

method: "POST",

headers: { "Content-Type": "application/json" },

body: JSON.stringify({ channel_id: channel.id }),

});

}

}

After that, add styling to the MyChannelHeader component. To add the styling, go to src/app/globals.css file and paste the following CSS code into the file:

.my-channel-header {

display: flex;

align-items: center;

justify-content: space-between;

/* font: normal; */

padding: 1rem 1rem;

border-bottom: 1px solid lightgray;

}

.my-channel-header button {

background: #005fff;

color: white;

padding: 0.5rem 1rem;

border-radius: 0.5rem;

border: none;

cursor: pointer;

}
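
The button in MyChannelHeader chooses between the start-ai-agent and stop-ai-agent endpoints based on whether an AI agent is already watching the channel. As a rough sketch, that decision can be pulled into a small function with the base URL as a parameter, which is convenient because both the Next.js dev server and the AI backend in this tutorial default to port 3000. The name `buildAgentRequest` and the `baseUrl` parameter are hypothetical.

```typescript
// Hypothetical helper: picks the endpoint from the current AI presence
// and builds the request the header button sends.
function buildAgentRequest(
  aiInChannel: boolean,
  channelId: string,
  baseUrl = "http://127.0.0.1:3000"
) {
  const endpoint = aiInChannel ? "stop-ai-agent" : "start-ai-agent";
  return {
    url: `${baseUrl}/${endpoint}`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ channel_id: channelId }),
    },
  };
}

// Usage: no AI agent in the channel yet, so the start endpoint is chosen.
const startRequest = buildAgentRequest(false, "1743200000000");
console.log(startRequest.url); // http://127.0.0.1:3000/start-ai-agent
```

The result can be passed straight to fetch as `fetch(startRequest.url, startRequest.init)`.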

Step 5: Creating the MyAIStateIndicator Component

Create a MyAIStateIndicator component that displays a text message indicating the current state of an AI (e.g., thinking, generating an answer, etc.) in the chat interface.

The component uses the Stream Chat library’s hooks to access the channel and AI state and maps the AI state to human-readable text.

To create the component, create a src/app/components/MyAIStateIndicator.tsx file and paste the following code into the file:

import { AIState } from 'stream-chat';

import { useAIState, useChannelStateContext } from 'stream-chat-react';

export default function MyAIStateIndicator() {

const { channel } = useChannelStateContext();

const { aiState } = useAIState(channel);

const text = textForState(aiState);

return text && <p className='my-ai-state-indicator'>{text}</p>;

function textForState(aiState: AIState): string {

switch (aiState) {

case 'AI_STATE_ERROR':

return 'Something went wrong...';

case 'AI_STATE_CHECKING_SOURCES':

return 'Checking external resources...';

case 'AI_STATE_THINKING':

return "I'm currently thinking...";

case 'AI_STATE_GENERATING':

return 'Generating an answer for you...';

default:

return '';

}

}

}

After that, add styling to the MyAIStateIndicator component. To add the styling, go to the src/app/globals.css file and paste the following CSS code into the file:

.my-ai-state-indicator {

background: #005fff;

color: white;

padding: 0.5rem 1rem;

border-radius: 0.5rem;

border: 1px solid #003899;

margin: 1rem;

}
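
The state-to-text mapping in MyAIStateIndicator can also be expressed as a lookup table, which keeps the labels in one place if more states are added later. This is only an alternative sketch of the same mapping; the state names are copied from the switch statement above, and `AI_STATE_TEXT` is a hypothetical name.

```typescript
// State names copied from the switch statement in MyAIStateIndicator.
const AI_STATE_TEXT = new Map<string, string>([
  ["AI_STATE_ERROR", "Something went wrong..."],
  ["AI_STATE_CHECKING_SOURCES", "Checking external resources..."],
  ["AI_STATE_THINKING", "I'm currently thinking..."],
  ["AI_STATE_GENERATING", "Generating an answer for you..."],
]);

// Unknown or idle states map to an empty string, so nothing is rendered.
function textForState(aiState: string): string {
  return AI_STATE_TEXT.get(aiState) ?? "";
}

console.log(textForState("AI_STATE_THINKING")); // I'm currently thinking...
```

A Map is used instead of a plain object so that strings such as "constructor" cannot accidentally match inherited object properties.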

Step 6: Creating the Chat Interface Component

Create a ChatComponent that renders a chat interface using the Stream Chat library. It connects a user to a chat client, sets up a specific channel based on a provided channelId, and displays a chat UI with a custom header, AI state indicator, message list, and input field.

To create the component, create a src/app/components/chat-component.tsx file and paste the following code into the file:

"use client";

import { useEffect, useState } from "react";

import { StreamChat, Channel as StreamChannel } from "stream-chat";

import {

Chat,

Channel,

MessageInput,

MessageList,

Thread,

Window,

} from "stream-chat-react";

import "stream-chat-react/dist/css/v2/index.css";

import MyChannelHeader from "./MyChannelHeader";

import MyAIStateIndicator from "./MyAIStateIndicator";

import { Loader2 } from "lucide-react";

const chatClient = StreamChat.getInstance(

process.env.NEXT_PUBLIC_STREAM_API_KEY!

);

const user = {

id: process.env.NEXT_PUBLIC_USER_ID!,

name: "John Smith",

};

interface ChatComponentProps {

channelId: string;

}

export default function ChatComponent({ channelId }: ChatComponentProps) {

const [clientReady, setClientReady] = useState(false);

const [channel, setChannel] = useState<StreamChannel | null>(null);

useEffect(() => {

const setupClient = async () => {

try {

await chatClient.connectUser(user, process.env.NEXT_PUBLIC_USER_TOKEN!);

const channel = chatClient.channel("messaging", channelId, {

name: `Component Discussion - ${channelId}`,

members: [user.id],

});

await channel.watch();

setChannel(channel);

setClientReady(true);

} catch (err) {

console.error("Error connecting to Stream Chat:", err);

}

};

if (!clientReady) setupClient();

return () => {

if (clientReady) chatClient.disconnectUser();

};

}, [channelId, clientReady]);

if (!clientReady) {

return (

<div className="flex items-center justify-center h-full">

<Loader2 className="h-8 w-8 animate-spin text-primary" />

</div>

);

}

return (

<div className="h-[450px] overflow-auto">

<Chat client={chatClient} theme="messaging light">

{channel && (

<Channel channel={channel}>

<Window>

<MyChannelHeader />

<MyAIStateIndicator />

<MessageList />

<MessageInput />

</Window>

<Thread />

</Channel>

)}

</Chat>

</div>

);

}

Then, integrate the chat interface with the UI components generator frontend. To do that, open the src/app/components/component-generator.tsx file and update it with the following code.

// ...

import ChatComponent from "./chat-component";

// ...

export default function ComponentGenerator() {

// ...

const [chatChannelId, setChatChannelId] = useState<string | null>(null);

const handleGenerate = async () => {

// ...

setTimeout(async () => {

// ...

setChatChannelId(newVersion.id);

// ...

}, 1500);

};

const handleVersionChange = (versionId: string) => {

// ...

if (version) {

// ...

setChatChannelId(version.id);

}

};

const handleSaveVersion = (label: string) => {

// ...

setChatChannelId(newVersion.id);

};

return (

<div className="flex flex-col h-[calc(100vh-200px)] min-h-[600px]">

<div className="flex-1 mb-4">

<Tabs value={activeTab} onValueChange={setActiveTab} className="h-full">

<div className="flex justify-between items-center mb-4">

<TabsList>

{/* ... */}

<TabsTrigger value="chat">Chat</TabsTrigger>

</TabsList>

</div>

<div className="h-[calc(100%-40px)]">

{/* ... */}

<TabsContent value="chat" className="h-full m-0">

{chatChannelId ? (

<div className="border rounded-lg h-full overflow-hidden">

<ChatComponent channelId={chatChannelId} />

</div>

) : (

<div className="flex items-center justify-center border rounded-lg overflow-hidden h-full text-muted-foreground">

Generate a component to start a chat

</div>

)}

</TabsContent>

</div>

</Tabs>

</div>

{/* ... */}

</div>

);

}

If you view the UI components generator on a browser, you should see the chat interface tab, as shown below.

Step 7: Integrating AI to the Stream Chat Interface

To add AI features to the chat interface, set up a Node.js backend that exposes two endpoints for starting and stopping an AI agent for a particular channel.

To run the server locally, clone it using the command below.

git clone https://github.com/GetStream/ai-assistant-nodejs.git

Next, open the Node.js backend folder in a code editor. Then, create a .env file in the folder and set the environment variables using the following keys.

ANTHROPIC_API_KEY=insert_your_key

STREAM_API_KEY=insert_your_key

STREAM_API_SECRET=insert_your_secret

OPENAI_API_KEY=insert_your_key

You only need to provide a key for the provider you plan to use, either ANTHROPIC_API_KEY or OPENAI_API_KEY. To select the provider, go to the ai-assistant-nodejs/src/index.ts file and set the platform to either anthropic or openai. Next, install the project dependencies using the command below.

npm install

Then, run the command below to start the server.

npm start

The server should start running on localhost:3000.

Step 8: Testing the AI-powered Stream Chat SDK

Open the UI components generator on a browser and generate a UI component. If you open the chat tab, you should see the chat interface, as shown below.

In the chat interface, click the Add AI button. Then, ask the AI agent to write a prompt for generating a signup form, as shown below.

Then, copy the prompt generated and use it to create a signup form, as shown below.

You have now integrated an AI-powered chat interface using the Stream Chat SDK into the UI components generator.

Conclusion

In this tutorial, we have built an AI-powered UI components generator with the following features:

A backend powered by LangGraph that processes natural language requests and generates UI components using the GPT-4 model.

A frontend interface built with Next.js, TypeScript, and TailwindCSS that allows users to preview, edit, and manage versions of generated UI components.

An AI-powered chat interface using Stream Chat SDK that enables collaborative discussions about UI components and generates new UI component prompts.

In conclusion, the tutorial showcases how AI agents can be effectively structured to handle complex tasks through the LangGraph framework.

Project source code: AI-powered UI Components Generator
