And so it begins - Amazon Web Services is aggressively courting its own customers to use its Trainium tech rather than Nvidia's GPUs

AWS has reportedly offered customers a 25% saving to move from Nvidia hardware to its own Trainium chips.

- AWS urging customers to switch from Nvidia to its cheaper Trainium chip

- It says its hardware offers the same performance with a 25 percent cost saving

- Amazon's pitch came as Nvidia was showcasing its new hardware at GTC 2025

While Nvidia was hosting its annual GTC 2025 conference, showing off new products like the DGX Spark and DGX Station AI supercomputers, Amazon was trying to convince its cloud customers they could save money by moving away from pricey Nvidia hardware and embracing Amazon’s own AI chips.

The Information reports that AWS pitched at least one of its cloud customers on renting servers powered by Amazon’s Trainium chip, claiming they could get the same performance as Nvidia’s H100 at a 25 percent lower cost.

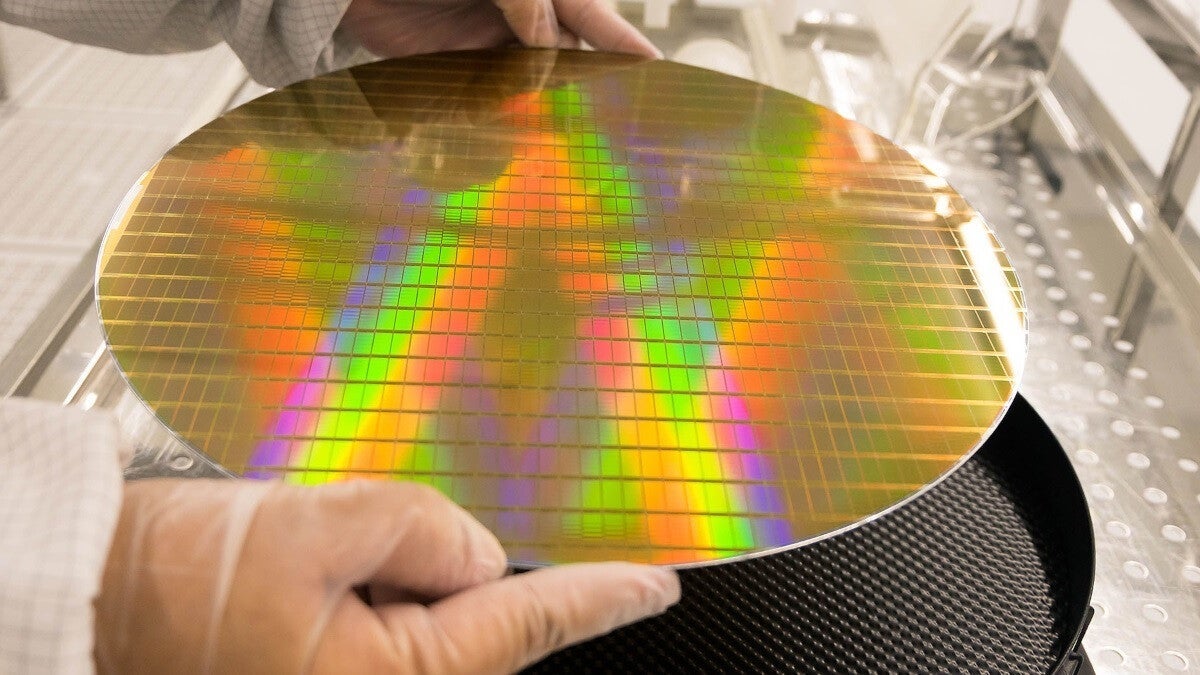

Trainium, one of several in-house chips Amazon has developed alongside Graviton and Inferentia, is built for training machine learning models in the AWS cloud and offers a lower-cost alternative to GPU-based systems. Amazon’s silicon is not intended as a like-for-like replacement for Nvidia’s more advanced products, but it doesn’t need to be.

Part of the AI conversation

Amazon’s offer looks to be part of a broader shift across the cloud market, where providers like AWS and Google are developing their own chips and offering them to customers as a way to avoid the cost - and scarcity - of Nvidia’s highly sought-after GPUs.

“What AWS is doing is smart,” Matt Kimball, VP and principal analyst for data compute and storage at Moor Insights & Strategy, told NetworkWorld. “It is telling the world that there is a cost-effective alternative that is also performant for AI training needs. It is inserting itself into the AI conversation.”

The pitch here, of course, is access. AWS is giving customers the opportunity to experiment with training and inferencing workloads without having to wait months for an Nvidia GPU or pay top dollar for it.

While a 25 percent saving is definitely not to be sniffed at, and something that will no doubt appeal to a number of AWS customers, there are obvious downsides for buyers to consider.

As NetworkWorld notes, “Enterprises used to working with Nvidia’s compute unified device architecture (CUDA) need to think about the cost of switching to a whole new platform like Trainium. Furthermore, Trainium is only available on AWS, so users can get locked in.”
