Introduction to Data Engineering Concepts |12| Scheduling and Workflow Orchestration

Free Resources Free Apache Iceberg Course Free Copy of “Apache Iceberg: The Definitive Guide” Free Copy of “Apache Polaris: The Definitive Guide” 2025 Apache Iceberg Architecture Guide How to Join the Iceberg Community Iceberg Lakehouse Engineering Video Playlist Ultimate Apache Iceberg Resource Guide As data pipelines grow in complexity, managing them manually becomes unsustainable. Whether you're running daily ETL jobs, refreshing dashboards, or processing streaming data in micro-batches, you need a way to coordinate and monitor these tasks reliably. That’s where workflow orchestration comes in. In this post, we'll explore what orchestration means in the context of data engineering, how it differs from simple job scheduling, and what tools and design patterns help keep data workflows organized, observable, and resilient. From Scheduling to Orchestration At the simplest level, scheduling is about running tasks at a certain time. A cron job that triggers a Python script every morning is a form of scheduling. For small pipelines with few dependencies, this can be enough. But modern data systems rarely involve just one job. Instead, they include chains of tasks—data extractions, file transformations, validation checks, and loads into various targets. These tasks have dependencies, need error handling, and often require conditional logic. This is where orchestration becomes essential. Workflow orchestration is the discipline of managing task execution across a defined sequence, ensuring that tasks run in the correct order, on time, and with awareness of success or failure. It's not just about launching scripts—it's about understanding how those scripts relate to one another, how they behave under different conditions, and how to recover when something goes wrong. Directed Acyclic Graphs (DAGs) Most orchestration systems use the concept of a Directed Acyclic Graph (DAG) to represent workflows. In a DAG, each node represents a task, and edges represent dependencies. The "acyclic" part means there are no loops—each task runs once, and the flow moves in one direction. This structure allows you to define complex workflows declaratively. For example, you might define a pipeline where data is first extracted from an API, then validated, transformed, and finally loaded into a data warehouse. If any step fails, the system can stop the pipeline, alert the team, or retry the task based on configuration. DAGs also make it easier to track the status of each component. You can visualize which tasks succeeded, which are still running, and where failures occurred. This visibility is crucial for maintaining trust in your data pipelines. Common Orchestration Tools Several orchestration frameworks have become standard in the data engineering ecosystem. Apache Airflow is one of the most widely adopted tools. It allows users to define DAGs using Python code, which makes it highly flexible and programmable. Airflow includes scheduling, retries, logging, and a web UI for monitoring workflows. Prefect takes a modern approach by separating the orchestration layer from execution, which makes it more cloud-native and resilient to task failures. Prefect’s focus on observability and developer experience has made it popular for teams managing dynamic workloads. Dagster emphasizes data assets and type safety. It treats data pipelines as modular, testable units and integrates tightly with modern tooling, including dbt and cloud environments. Each of these tools supports task dependencies, conditional logic, parallelism, and failure recovery. Choosing the right one often comes down to team preference, operational needs, and ecosystem compatibility. Best Practices in Workflow Design Designing orchestration workflows requires more than chaining tasks together. Robust pipelines include thoughtful handling of edge cases and clear observability. That means: Using retries and timeouts to deal with flaky services or transient failures. Logging meaningful output so that issues can be diagnosed quickly. Isolating tasks so that a failure in one part doesn’t compromise unrelated workflows. Tagging or labeling jobs by function or owner to improve maintainability. It also means thinking about idempotency. Tasks should be safe to rerun if needed. For example, a data load job that inserts duplicate rows each time it runs will cause problems if retried. Designing tasks to either overwrite cleanly or check for prior completion helps prevent these issues. Another key practice is modularity. Instead of building large monolithic DAGs, break workflows into reusable components. This makes it easier to test, maintain, and scale your pipelines as your data ecosystem evolves. Observability and Alerting A well-orchestrated pipeline doesn’t just run—it tells you how it’s running. Observability is about surfacing the right information at the right time so that engineers

Free Resources

- Free Apache Iceberg Course

- Free Copy of “Apache Iceberg: The Definitive Guide”

- Free Copy of “Apache Polaris: The Definitive Guide”

- 2025 Apache Iceberg Architecture Guide

- How to Join the Iceberg Community

- Iceberg Lakehouse Engineering Video Playlist

- Ultimate Apache Iceberg Resource Guide

As data pipelines grow in complexity, managing them manually becomes unsustainable. Whether you're running daily ETL jobs, refreshing dashboards, or processing streaming data in micro-batches, you need a way to coordinate and monitor these tasks reliably. That’s where workflow orchestration comes in.

In this post, we'll explore what orchestration means in the context of data engineering, how it differs from simple job scheduling, and what tools and design patterns help keep data workflows organized, observable, and resilient.

From Scheduling to Orchestration

At the simplest level, scheduling is about running tasks at a certain time. A cron job that triggers a Python script every morning is a form of scheduling. For small pipelines with few dependencies, this can be enough.

But modern data systems rarely involve just one job. Instead, they include chains of tasks—data extractions, file transformations, validation checks, and loads into various targets. These tasks have dependencies, need error handling, and often require conditional logic. This is where orchestration becomes essential.

Workflow orchestration is the discipline of managing task execution across a defined sequence, ensuring that tasks run in the correct order, on time, and with awareness of success or failure. It's not just about launching scripts—it's about understanding how those scripts relate to one another, how they behave under different conditions, and how to recover when something goes wrong.

Directed Acyclic Graphs (DAGs)

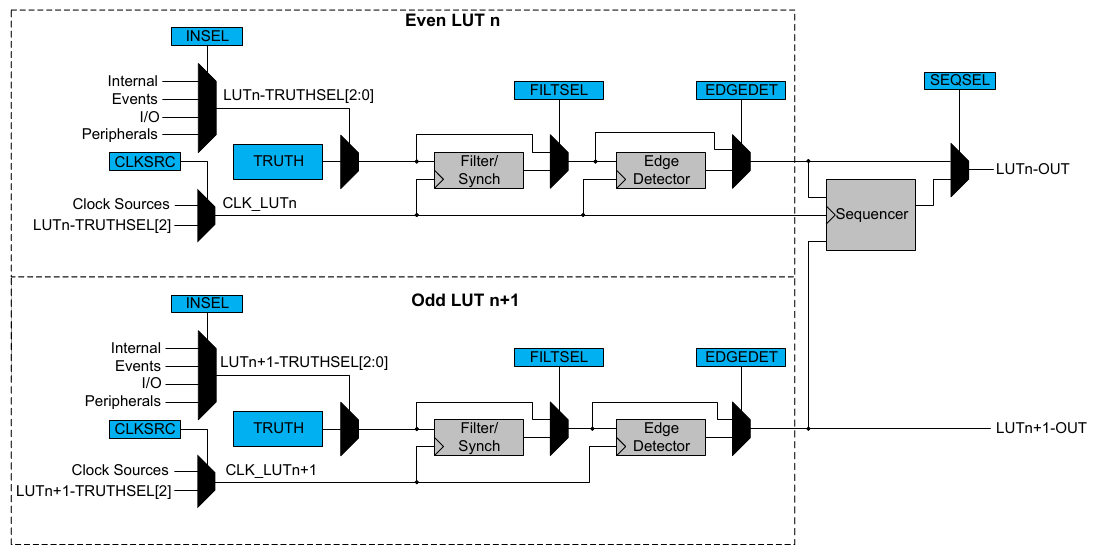

Most orchestration systems use the concept of a Directed Acyclic Graph (DAG) to represent workflows. In a DAG, each node represents a task, and edges represent dependencies. The "acyclic" part means there are no loops—each task runs once, and the flow moves in one direction.

This structure allows you to define complex workflows declaratively. For example, you might define a pipeline where data is first extracted from an API, then validated, transformed, and finally loaded into a data warehouse. If any step fails, the system can stop the pipeline, alert the team, or retry the task based on configuration.

DAGs also make it easier to track the status of each component. You can visualize which tasks succeeded, which are still running, and where failures occurred. This visibility is crucial for maintaining trust in your data pipelines.

Common Orchestration Tools

Several orchestration frameworks have become standard in the data engineering ecosystem.

Apache Airflow is one of the most widely adopted tools. It allows users to define DAGs using Python code, which makes it highly flexible and programmable. Airflow includes scheduling, retries, logging, and a web UI for monitoring workflows.

Prefect takes a modern approach by separating the orchestration layer from execution, which makes it more cloud-native and resilient to task failures. Prefect’s focus on observability and developer experience has made it popular for teams managing dynamic workloads.

Dagster emphasizes data assets and type safety. It treats data pipelines as modular, testable units and integrates tightly with modern tooling, including dbt and cloud environments.

Each of these tools supports task dependencies, conditional logic, parallelism, and failure recovery. Choosing the right one often comes down to team preference, operational needs, and ecosystem compatibility.

Best Practices in Workflow Design

Designing orchestration workflows requires more than chaining tasks together. Robust pipelines include thoughtful handling of edge cases and clear observability. That means:

- Using retries and timeouts to deal with flaky services or transient failures.

- Logging meaningful output so that issues can be diagnosed quickly.

- Isolating tasks so that a failure in one part doesn’t compromise unrelated workflows.

- Tagging or labeling jobs by function or owner to improve maintainability.

It also means thinking about idempotency. Tasks should be safe to rerun if needed. For example, a data load job that inserts duplicate rows each time it runs will cause problems if retried. Designing tasks to either overwrite cleanly or check for prior completion helps prevent these issues.

Another key practice is modularity. Instead of building large monolithic DAGs, break workflows into reusable components. This makes it easier to test, maintain, and scale your pipelines as your data ecosystem evolves.

Observability and Alerting

A well-orchestrated pipeline doesn’t just run—it tells you how it’s running. Observability is about surfacing the right information at the right time so that engineers can respond to issues quickly.

Good orchestration tools provide dashboards, logs, and metrics. But equally important are alerts that notify the right people when something goes wrong. Alerts should be actionable and avoid noise. A system that sends alerts on every minor warning will eventually be ignored.

Integrating with monitoring platforms like Prometheus, Grafana, or external alerting tools like PagerDuty or Slack helps ensure that teams can respond to problems before they affect end users.

Orchestration as the Backbone

Workflow orchestration isn’t just a technical layer—it’s the backbone of operational data systems. It connects ingestion, transformation, validation, and delivery in a reliable and auditable way. When done well, it turns complex processes into predictable, repeatable workflows that teams can build on confidently.

In the next post, we’ll explore how to build scalable pipelines, including how to think about performance, parallelism, and distribution when dealing with large or fast-growing datasets.

![Apple Developing Battery Case for iPhone 17 Air Amid Battery Life Concerns [Report]](https://www.iclarified.com/images/news/97208/97208/97208-640.jpg)

![Apple to Split iPhone Launches Across Fall and Spring in Major Shakeup [Report]](https://www.iclarified.com/images/news/97211/97211/97211-640.jpg)

![Apple to Move Camera to Top Left, Hide Face ID Under Display in iPhone 18 Pro Redesign [Report]](https://www.iclarified.com/images/news/97212/97212/97212-640.jpg)

![AirPods 4 On Sale for $99 [Lowest Price Ever]](https://www.iclarified.com/images/news/97206/97206/97206-640.jpg)

_Inge_Johnsson-Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

![From Art School Drop-out to Microsoft Engineer with Shashi Lo [Podcast #170]](https://cdn.hashnode.com/res/hashnode/image/upload/v1746203291209/439bf16b-c820-4fe8-b69e-94d80533b2df.png?#)

![[FREE EBOOKS] Learn Computer Forensics — 2nd edition, AI and Business Rule Engines for Excel Power Users & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)