Context Without Complexity: LangChain’s In-Memory Superpower

Context, Lost and Found Picture this: you've just built a slick little chatbot. You greet it, it greets back. You ask a follow-up—and it acts like it’s never met you. You double-check your code. Nothing’s broken… except the memory. LLMs are brilliant at language but terrible at continuity. Each prompt is a blank slate unless you explicitly tell it otherwise. For devs working on support agents, assistants, or multi-turn experiences, this becomes the first real hurdle. And this is where things get interesting. LangChain’s memory tools—specifically the in-memory store—let you prototype fast, stay stateless, and simulate context without spinning up Redis or hooking into Postgres. This came in especially handy during our recent hackathon, where speed and flexibility were key and spinning up infra just wasn’t an option. Lightweight, but flexible. Temporary, but powerful. In this post, I’ll walk you through how it works, where it fits, and why sometimes the simplest tool is all you need to move fast and stay sane. When Ephemeral Is Enough LangChain’s ChatMessageHistory isn’t built for permanence—and that’s the point. It shines in: Quick experiments where infrastructure is overkill Short sessions where you only need the last few messages Serverless or containerized apps where state lives ephemerally It’s the sticky note of memory tools. No setup, no commitment, but useful when you’re in the zone. Quickfire Example: Minimal Setup, Maximum Impact Say you want to build a multi-user chatbot that remembers just the last 4 user-AI exchanges. The setup? Barebones: conversation_id = "user-42" if conversation_id not in memory_store: memory_store[conversation_id] = ChatMessageHistory() history = memory_store[conversation_id] history.add_message(HumanMessage(content="Remind me about my 2 PM call.")) history.add_message(AIMessage(content="Noted. I'll remind you at 1:50 PM.")) # Keep the latest 4 exchanges if len(history.messages) > 8: history.messages = history.messages[-8:] Now you’ve got contextual memory that doesn’t outlive the session, and that’s often exactly what you need in dev and test environments. Code That Doesn't Get Clingy The trap with memory is overengineering. It’s tempting to reach for persistence, backups, and failover strategies—when all you really needed was 5 minutes of recall. Here’s how to keep your memory layer clean: Avoid hard dependencies. Inject the memory strategy. Use a wrapper class like ConversationManager. Add a formatter that compiles history into LLM-ready prompt chunks. This way, swapping in Redis or Pinecone later doesn’t require rewriting everything upstream. Why This Matters for Real Apps Even the most powerful LLM is only as useful as its context window. If you’re building: Slack bots with short conversations Internal tools that don’t store chat logs Testing frameworks that need to simulate prior messages ...then in-memory is gold. It’s fast, stateless, and won’t complain when you blow it away after a demo. When you need to persist, you will. But until then? Build fast, stay lean. Beyond the Sticky Note: Scaling Your Memory Architecture In-memory storage gets you far—but not forever. When your app starts getting real traffic, or your chatbot needs to persist context across devices or days, it’s time to evolve. Here’s how teams typically scale beyond ephemeral memory: 1. Redis (and friends) The natural upgrade. Drop-in fast key-value storage with support for TTLs, pub/sub, and multi-user memory. LangChain even supports it out of the box with RedisChatMessageHistory. Why Redis? Low latency, ideal for real-time apps Shared memory across servers Easy to expire memory after inactivity 2. SQL/NoSQL Backends If you’re already using Postgres or MongoDB for business logic, why not store memory there too? You get durability, queries, and versioned chat logs. Use it when you need: Auditable chat records Queryable sessions Memory tied to user accounts 3. Vector Memory (for long-term recall) This is where memory gets smart. Instead of recalling exact messages, you store semantic embeddings of past conversations. Tools like FAISS, Weaviate, or Pinecone let you retrieve similar interactions—not just recent ones. Great for: Semantic context recall Smart summarization Persistent user memory 4. Summarization Strategies Don’t underestimate the power of a summary. When chat history grows too large, summarize and replace it in the prompt. You’ll save tokens and keep context lean. Combine it with: Token budget constraints Sliding window approaches User-specific personalization Final Bits Choosing the right memory architecture isn’t about what’s popular—it’s about what’s appropriate. In-memory might look too simple, but it delivers speed, simplicity, and surprisingly good UX for a huge number of cases. When you’re re

Context, Lost and Found

Picture this: you've just built a slick little chatbot. You greet it, it greets back. You ask a follow-up—and it acts like it’s never met you. You double-check your code. Nothing’s broken… except the memory.

LLMs are brilliant at language but terrible at continuity. Each prompt is a blank slate unless you explicitly tell it otherwise. For devs working on support agents, assistants, or multi-turn experiences, this becomes the first real hurdle.

And this is where things get interesting.

LangChain’s memory tools—specifically the in-memory store—let you prototype fast, stay stateless, and simulate context without spinning up Redis or hooking into Postgres. This came in especially handy during our recent hackathon, where speed and flexibility were key and spinning up infra just wasn’t an option. Lightweight, but flexible. Temporary, but powerful.

In this post, I’ll walk you through how it works, where it fits, and why sometimes the simplest tool is all you need to move fast and stay sane.

When Ephemeral Is Enough

LangChain’s ChatMessageHistory isn’t built for permanence—and that’s the point.

It shines in:

- Quick experiments where infrastructure is overkill

- Short sessions where you only need the last few messages

- Serverless or containerized apps where state lives ephemerally

It’s the sticky note of memory tools. No setup, no commitment, but useful when you’re in the zone.

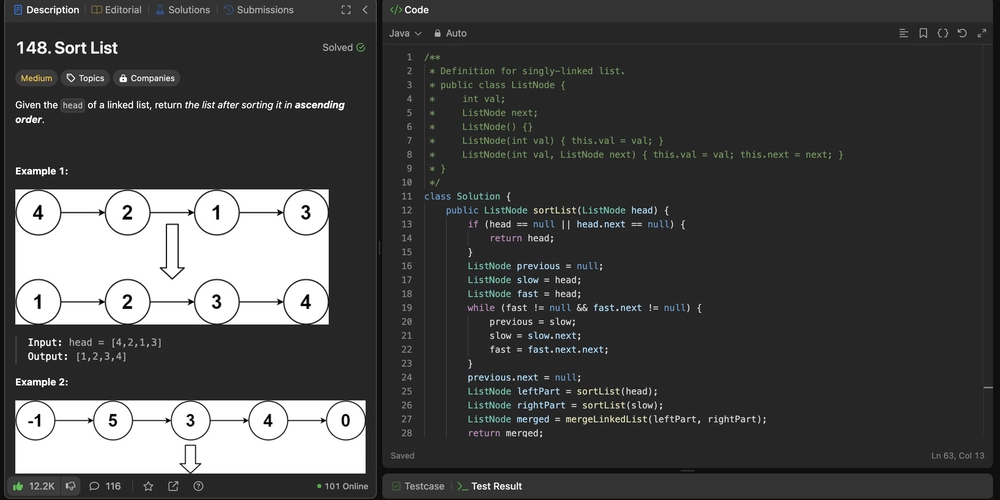

Quickfire Example: Minimal Setup, Maximum Impact

Say you want to build a multi-user chatbot that remembers just the last 4 user-AI exchanges. The setup? Barebones:

conversation_id = "user-42"

if conversation_id not in memory_store:

memory_store[conversation_id] = ChatMessageHistory()

history = memory_store[conversation_id]

history.add_message(HumanMessage(content="Remind me about my 2 PM call."))

history.add_message(AIMessage(content="Noted. I'll remind you at 1:50 PM."))

# Keep the latest 4 exchanges

if len(history.messages) > 8:

history.messages = history.messages[-8:]

Now you’ve got contextual memory that doesn’t outlive the session, and that’s often exactly what you need in dev and test environments.

Code That Doesn't Get Clingy

The trap with memory is overengineering. It’s tempting to reach for persistence, backups, and failover strategies—when all you really needed was 5 minutes of recall.

Here’s how to keep your memory layer clean:

- Avoid hard dependencies. Inject the memory strategy.

- Use a wrapper class like

ConversationManager. - Add a formatter that compiles history into LLM-ready prompt chunks.

This way, swapping in Redis or Pinecone later doesn’t require rewriting everything upstream.

Why This Matters for Real Apps

Even the most powerful LLM is only as useful as its context window. If you’re building:

- Slack bots with short conversations

- Internal tools that don’t store chat logs

- Testing frameworks that need to simulate prior messages

...then in-memory is gold. It’s fast, stateless, and won’t complain when you blow it away after a demo.

When you need to persist, you will. But until then? Build fast, stay lean.

Beyond the Sticky Note: Scaling Your Memory Architecture

In-memory storage gets you far—but not forever. When your app starts getting real traffic, or your chatbot needs to persist context across devices or days, it’s time to evolve.

Here’s how teams typically scale beyond ephemeral memory:

1. Redis (and friends)

The natural upgrade. Drop-in fast key-value storage with support for TTLs, pub/sub, and multi-user memory. LangChain even supports it out of the box with RedisChatMessageHistory.

Why Redis?

- Low latency, ideal for real-time apps

- Shared memory across servers

- Easy to expire memory after inactivity

2. SQL/NoSQL Backends

If you’re already using Postgres or MongoDB for business logic, why not store memory there too? You get durability, queries, and versioned chat logs.

Use it when you need:

- Auditable chat records

- Queryable sessions

- Memory tied to user accounts

3. Vector Memory (for long-term recall)

This is where memory gets smart. Instead of recalling exact messages, you store semantic embeddings of past conversations. Tools like FAISS, Weaviate, or Pinecone let you retrieve similar interactions—not just recent ones.

Great for:

- Semantic context recall

- Smart summarization

- Persistent user memory

4. Summarization Strategies

Don’t underestimate the power of a summary. When chat history grows too large, summarize and replace it in the prompt. You’ll save tokens and keep context lean.

Combine it with:

- Token budget constraints

- Sliding window approaches

- User-specific personalization

Final Bits

Choosing the right memory architecture isn’t about what’s popular—it’s about what’s appropriate. In-memory might look too simple, but it delivers speed, simplicity, and surprisingly good UX for a huge number of cases.

When you’re ready to scale, LangChain makes it easy to migrate—thanks to its consistent memory interfaces.

So start with the sticky note. And upgrade when the use case demands it.

Context is everything—and memory is how you earn it.

![Lowest Prices Ever: Apple Pencil Pro Just $79.99, USB-C Pencil Only $49.99 [Deal]](https://www.iclarified.com/images/news/96863/96863/96863-640.jpg)

![Apple Releases iOS 18.4 RC 2 and iPadOS 18.4 RC 2 to Developers [Download]](https://www.iclarified.com/images/news/96860/96860/96860-640.jpg)

![[The AI Show Episode 141]: Road to AGI (and Beyond) #1 — The AI Timeline is Accelerating](https://www.marketingaiinstitute.com/hubfs/ep%20141.1.png)

![[The AI Show Episode 140]: New AGI Warnings, OpenAI Suggests Government Policy, Sam Altman Teases Creative Writing Model, Claude Web Search & Apple’s AI Woes](https://www.marketingaiinstitute.com/hubfs/ep%20140%20cover.png)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![From broke musician to working dev. How college drop-out Ryan Furrer taught himself to code [Podcast #166]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743189826063/2080cde4-6fc0-46fb-b98d-b3d59841e8c4.png?#)

![[FREE EBOOKS] The Ultimate Linux Shell Scripting Guide, Artificial Intelligence for Cybersecurity & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.png?#)

![Mini Review: Rendering Ranger: R2 [Rewind] (Switch) - A Novel Run 'N' Gun/Shooter Hybrid That's Finally Affordable](https://images.nintendolife.com/0e9d68643dde0/large.jpg?#)