Measuring your program speed correctly

Hallo curious friend! Have you ever run a program and wondered how long it took? Let's say you had two programs and you were trying to figure out which one was faster. Maybe you used a tool and got a measurement for both. How sure are you that it took that long? Let's explore measuring our program speed and try to find out how to be as accurate as possible. MAYBE EVEN DOWN TO THE NANOSECOND! As Barney Stinson would say, CHALLENGE ACCEPTED! Okay there are several ways we could try to do this. Let's look at them one by one. Why would I care about nanosecond precision? CPUs these days run at 3 GHz. What does that mean? That means it runs 3 * 10e9 cpu clock cycles per second. 3 BILLION CYCLES PER SECOND. This also means 3 cpu clock cycles per nanosecond... (a nanosecond is 10e-9 seconds, super small....). If I can run 1 addition operation in 1 cpu clock cycle, in 1 second, I can add 3 BILLION things together! Wow computers are so powerful. But this also means to be precise about our performance, we should probably try to measure as close to nanoseconds as possible. The time command posix If you use the time command, which is implemented in POSIX systems like linux, you will get 3 types of times for your program. real: The real time is end to end time of your program from invocation to the end of the process. user: This is the time the cpu spent in user space (the logic of your program). system: This is the time the cpu spent in kernel space for either system calls or interrupts. (System calls are ways your program can make use of your operating system's resources. Interrupts are the way your operating system prevents you from hogging the cpu for yourself.) Great let's run a simple C or C++ program and see how things get measured. int main(int argc, char** argv){ int sum{0}; for(int i = 0; i < 20; i++){ sum += i; } return sum; } Let's make this fun. Give a guess how long you think this will run. If you're brave enough, lock in your answer in the comments lol. We can see who gets the closest. Okay, I'm going to run it now... time ./test Here is what we get: real 0m0.014s user 0m0.001s sys 0m0.000s Okay it seems like our process took 0.014s overall, but the logic of our program took 0.001s (1 millisecond) of it. Honestly, at this point I have no clue if this is right or not. But many people might stop here and believe this value. Let's see what is happening behind the scenes. It seems like time is a built in shell command, so let's look at the bash shell codebase. After some digging, we find the time_command C function here. Here is an important part of it. #if defined (HAVE_GETRUSAGE) && defined (HAVE_GETTIMEOFDAY) struct timeval real, user, sys; struct timeval before, after; # if defined (HAVE_STRUCT_TIMEZONE) struct timezone dtz; /* posix doesn't define this */ # endif struct rusage selfb, selfa, kidsb, kidsa; /* a = after, b = before */ #else # if defined (HAVE_TIMES) clock_t tbefore, tafter, real, user, sys; struct tms before, after; # endif #endif Let's dissect this. There are 2 important terms here to highlight timeval and rusage. timeval timeval holds two variables. struct timeval { time_t tv_sec; /* seconds */ susecond_t tv_usec; /* microseconds */ }; It is often used with the gettimeofday system call. This system call is defined here. It gives you microseconds since epoch (Jan 1, 1970). A system call is a call your program makes to the system to perform privileged tasks like asking for the time from hardware. Specifically, gettimeofday is a vsyscall and you can see how it works here. But long story short, it asks the system's real-time clock (RTC) for the time. RTC actually gives you the time in nanoseconds. But we actually lose this precision and it gets converted to microseconds/seconds when we get it! AHHHHHHH! This sucks... The idea is we use gettimeofday before the program starts and after it ends to measure the end to end program time. rusage It's actually short for resource usage. The linux kernel maintains statistics about your program like the amount of time spent in user space and time spent by the system on other tasks. It also contains other information relating to memory and input/output devices. To give you an idea, it looks like this: struct rusage { struct timeval ru_utime; /* user CPU time used */ struct timeval ru_stime; /* system CPU time used */ long ru_maxrss; /* maximum resident set size */ long ru_ixrss; /* integral shared memory size */ long ru_idrss; /* integral unshared data size */ long ru_isrss; /* integral unshared stack size */ long ru_minflt; /* page reclaims (soft page faults) */

Hallo curious friend! Have you ever run a program and wondered how long it took?

Let's say you had two programs and you were trying to figure out which one was faster. Maybe you used a tool and got a measurement for both. How sure are you that it took that long?

Let's explore measuring our program speed and try to find out how to be as accurate as possible. MAYBE EVEN DOWN TO THE NANOSECOND!

As Barney Stinson would say, CHALLENGE ACCEPTED!

Okay there are several ways we could try to do this. Let's look at them one by one.

Why would I care about nanosecond precision?

CPUs these days run at 3 GHz.

What does that mean?

That means it runs 3 * 10e9 cpu clock cycles per second.

3 BILLION CYCLES PER SECOND.

This also means 3 cpu clock cycles per nanosecond...

(a nanosecond is 10e-9 seconds, super small....).

If I can run 1 addition operation in 1 cpu clock cycle, in 1 second, I can add 3 BILLION things together!

Wow computers are so powerful. But this also means to be precise about our performance, we should probably try to measure as close to nanoseconds as possible.

The time command posix

If you use the time command, which is implemented in POSIX systems like linux, you will get 3 types of times for your program.

-

real: The real time is end to end time of your program from invocation to the end of the process. -

user: This is the time the cpu spent in user space (the logic of your program). -

system: This is the time the cpu spent in kernel space for either system calls or interrupts. (System calls are ways your program can make use of your operating system's resources. Interrupts are the way your operating system prevents you from hogging the cpu for yourself.)

Great let's run a simple C or C++ program and see how things get measured.

int main(int argc, char** argv){

int sum{0};

for(int i = 0; i < 20; i++){

sum += i;

}

return sum;

}

Let's make this fun. Give a guess how long you think this will run. If you're brave enough, lock in your answer in the comments lol. We can see who gets the closest.

Okay, I'm going to run it now...

time ./test

Here is what we get:

real 0m0.014s

user 0m0.001s

sys 0m0.000s

Okay it seems like our process took 0.014s overall, but the logic of our program took 0.001s (1 millisecond) of it.

Honestly, at this point I have no clue if this is right or not.

But many people might stop here and believe this value.

Let's see what is happening behind the scenes. It seems like time is a built in shell command, so let's look at the bash shell codebase.

After some digging, we find the time_command C function here. Here is an important part of it.

#if defined (HAVE_GETRUSAGE) && defined (HAVE_GETTIMEOFDAY)

struct timeval real, user, sys;

struct timeval before, after;

# if defined (HAVE_STRUCT_TIMEZONE)

struct timezone dtz; /* posix doesn't define this */

# endif

struct rusage selfb, selfa, kidsb, kidsa; /* a = after, b = before */

#else

# if defined (HAVE_TIMES)

clock_t tbefore, tafter, real, user, sys;

struct tms before, after;

# endif

#endif

Let's dissect this. There are 2 important terms here to highlight timeval and rusage.

timeval

timeval holds two variables.

struct timeval {

time_t tv_sec; /* seconds */

susecond_t tv_usec; /* microseconds */

};

It is often used with the gettimeofday system call. This system call is defined here. It gives you microseconds since epoch (Jan 1, 1970).

A system call is a call your program makes to the system to perform privileged tasks like asking for the time from hardware.

Specifically, gettimeofday is a vsyscall and you can see how it works here.

But long story short, it asks the system's real-time clock (RTC) for the time. RTC actually gives you the time in nanoseconds.

But we actually lose this precision and it gets converted to microseconds/seconds when we get it! AHHHHHHH! This sucks...

The idea is we use gettimeofday before the program starts and after it ends to measure the end to end program time.

rusage

It's actually short for resource usage. The linux kernel maintains statistics about your program like the amount of time spent in user space and time spent by the system on other tasks.

It also contains other information relating to memory and input/output devices. To give you an idea, it looks like this:

struct rusage {

struct timeval ru_utime; /* user CPU time used */

struct timeval ru_stime; /* system CPU time used */

long ru_maxrss; /* maximum resident set size */

long ru_ixrss; /* integral shared memory size */

long ru_idrss; /* integral unshared data size */

long ru_isrss; /* integral unshared stack size */

long ru_minflt; /* page reclaims (soft page faults) */

long ru_majflt; /* page faults (hard page faults) */

long ru_nswap; /* swaps */

long ru_inblock; /* block input operations */

long ru_oublock; /* block output operations */

long ru_msgsnd; /* IPC messages sent */

long ru_msgrcv; /* IPC messages received */

long ru_nsignals; /* signals received */

long ru_nvcsw; /* voluntary context switches */

long ru_nivcsw; /* involuntary context switches */

};

Yep, it's a lot of statistics.

To collect this data, your linux libc library supports the getrusage syscall (more info).

You can track the entire process (combines all threads), a specific thread, or even children processes!

As you can see once again, this once again utilizes the timeval struct which had microsecond precision.

While the microsecond precision sucks a little, it is pretty cool that the kernel tracks stuff for us (especially the user/system time).

Overall, here is what I've concluded from the time command.

- It has microsecond precision

- It maintains user/system time for a process

- It provides data from process startup to process destruction

Ok let's test it out. I was a bit lazy so I asked ChatGPT to help me implement the getrusage calls for this one. It looks like this:

#include

#include

int main(int argc, char** argv){

struct rusage usage;

struct timeval start_user, start_system, end_user, end_system;

long long start_user_us, start_system_us, end_user_us, end_system_us;

// Get starting resource usage

getrusage(RUSAGE_SELF, &usage);

start_user = usage.ru_utime;

start_system = usage.ru_stime;

// Convert timeval to microseconds for easier calculation

start_user_us = (start_user.tv_sec * 1000000LL) + start_user.tv_usec;

start_system_us = (start_system.tv_sec * 1000000LL) + start_system.tv_usec;

int sum{0};

for(int i = 0; i < 20; i++){

sum += i;

}

getrusage(RUSAGE_SELF, &usage);

end_user = usage.ru_utime;

end_system = usage.ru_stime;

// Convert to microseconds

end_user_us = (end_user.tv_sec * 1000000LL) + end_user.tv_usec;

end_system_us = (end_system.tv_sec * 1000000LL) + end_system.tv_usec;

// Calculate elapsed time

long long elapsed_user_us = end_user_us - start_user_us;

long long elapsed_system_us = end_system_us - start_system_us;

long long elapsed_total_us = elapsed_user_us + elapsed_system_us;

// Print results

std::cout << "User CPU time: " << elapsed_user_us / 1000000.0 << " seconds" << std::endl;

std::cout << "System CPU time: " << elapsed_system_us / 1000000.0 << " seconds" << std::endl;

}

And so how long did it take??? Here is the output:

User CPU time: 1e-06 seconds

System CPU time: 0 seconds

Total CPU time: 1e-06 seconds

1 MICROSECOND! whoa did we get faster??? Or are we wrong?

Remember the time command said we spent 1 millisecond in user space. And the whole program took 14 milliseconds process creation to destruction.

So who is more right??? One measurement is off from the other by 100x!!!

Well, the time command takes into account more than just the logic in the program. Process creation/destruction can be expensive.

At the same time, the time command tends to lose a lot of precision when outputting to users.

clock_t

This one is a cool one. Why?

It uses the hardware clock rather than the RTC.

So what exactly is clock_t?

It can be an int or a float or other type depending on your libc implementation as long as it is capable of keeping track of clock ticks.

Clock ticks are an arbitrary measurement of time determined by your hardware clock/timer. It is much more granular than milliseconds/seconds.

In fact, there is a CLOCKS_PER_SECOND macro/variable in C that converts clock ticks to seconds. This is sometimes set to values like 1,000,000.

This would mean you would get microsecond precision. If it was a larger value, you could get even more precision.

Note that this is not the same as clock cycles. Clock cycles are the CPU's internal processor frequency.

To use clock_t, you can get the current clock value from the clock() function.

#include

#include

int main(int argc, char** argv){

clock_t start = clock();

int sum{0};

for(int i = 0; i < 20; i++){

sum += i;

}

clock_t end = clock();

std::cout << (end - start) << " us"<< std::endl;

std::cout << "clocks per sec: " << CLOCKS_PER_SEC << std::endl;

return sum;

}

If we time, this program again and observe the results... here it is:

And tadaaa....

1 us

clocks per sec: 1000000

This for loop takes 1 microsecond according to our measurement. Similar to getrusage!

Is this the smallest granularity we can go???

Well, I feel like we can do better because even if a cycle were to take 1 nanosecond, that means our code would've took 1000 cycles.

1000 instructions to loop through 20 items is INSANE!!

But with only microsecond precision, we are definitely overshooting with a huge error from the actual program runtime.

Okay, let's try something else because ticks aren't frequent enough.

In C++, there is a chrono library and it seems like it can support nanosecond granularity for its system clock.

chrono

chrono has been around since C++11, which means its been there for a while now lol. It is a great time library. It can even do timezone conversions in C++20!! (ikr! why'd it take so long...)

Using chrono, I can use the high resolution clock to supposedly get nanosecond level precision. Here we go:

#include

#include

int main(int argc, char** argv){

auto start = std::chrono::high_resolution_clock::now();

int sum{0};

for(int i = 0; i < 20; i++){

sum += i;

}

auto end = std::chrono::high_resolution_clock::now();

std::chrono::duration duration = end - start;

std::cout << "Time taken: " << duration.count() << " nanoseconds" << std::endl;

return sum;

}

The code is short and simple. So what does it say about our loop?

Our program outputted:

Time taken: 254 nanoseconds

Much faster than 1 microsecond. In fact 4x better. And 4000x faster than time. CRAZY!

So now is it really 254 nanoseconds?? If we assumed a cycle took a nanosecond, this says that we went through 254 cycles for a 20 item for loop???

Let's see what this clock is really doing and maybe we can find out why...

After looking at the GNU/linux C++ source code (libstdc++), I found the implementation of now().

It seems like it is an alias to either a system clock or steady clock on different versions, but here is a the system clock implementation.

The system clock means a real time clock that users use to perceive time. It can be affected by the calendar and can jump forwards/backwards in time.

The steady clock is a monotonic clock kind of like what clock() used. It only increases at a certain frequency.

It seems like my system clock gives nanosecond precision.

When we look at the source code, we see the following system call:

#ifdef _GLIBCXX_USE_CLOCK_GETTIME_SYSCALL

syscall(SYS_clock_gettime, CLOCK_REALTIME, &tp);

#else

clock_gettime(CLOCK_REALTIME, &tp);

This means the kernel has to do some work and get us the time. tp is a timespec struct and it has nanosecond precision (defined in

struct timespec {

time_t tv_sec; // seconds

long tv_nsec; // nanoseconds

};

The kernel goes to the system's real time clock with CLOCK_REALTIME and fills in this struct. This process of switching from user space code to kernel space and executing kernel instructions takes time!

And in fact, it is affecting us by a lot of nanoseconds.

Not only, that we don't really know the frequency/precision of our system's real time clock with respect to nanoseconds. Does it update every couple nanoseconds? Or every nanosecond?

Lots of questions regarding the real time clock.

There is a lot of factors relating to the hardware and protocol that can impact the frequency, so it is hard to tell.

Clearly, the clock_gettime() syscall used by chrono's high_resolution_clock and system_clock aren't precise enough for this tiny program.

SO CAN WE GET MORE PRECISE???????

CAN WE????????

I can't hear you!!!

Ohhhhhhhhhh......

Just kidding. This isn't Spongebob. I'll stop with the cliff hangers.

TSC

Introducing the TSC, the Time Stamp Counter.

Silly enough, the answer has been in front of you all along since the beginning of this blog.

We can use the clock cycles to measure how long our program took. TSC is a monotonic counter that increments for each clock cycle.

How do we get access to the TSC?

Specifically, TSC is 64 bit register on x86 processors. So... I'm sorry if you are on a different processor (like ARM). You probably have something else.

But many of the world's standard machines run x86, so this is very relevant.

Okay, how do we get the 64 bits of data from the x86 register?

Luckily for us there is an assembly instruction that copies the TSC value to two 4 byte registers that we can access.

The instruction is rdtsc.

Also lucky for us, we can embed assembly into our C++. If we do this and then move the register value into our variable, we can use the value.

Here is what that looks like:

#include

int main(int argc, char** argv){

uint64_t start{0};

uint64_t end{0};

__asm__ volatile(

"rdtsc"

:"=A" (start)

);

int sum{0};

for(int i = 0; i < 20; i++){

sum += i;

}

__asm__ volatile(

"rdtsc"

:"=A" (end)

);

std::cout << "Result: " << (end-start) << " cycles" << std::endl;

return sum;

}

And now guess what you think the output is in cycles... How many cycles does this simple for loop of additions take?

And the answer issss

Result: 300 cycles

Alright alright, this isn't as useful unless we know the frequency of our processor cycles.

If I run lscpu in my terminal, it tells me a lot of cool things about my cpu/processor...

In fact it shows me this useful fact:

CPU MHz: 2495.998

2496 Megahertz! That's roughly 2.5 GHz!!!

If I do 1 / (2.5 * 10^9) to get the number of seconds per cycle, I get roughly 0.4 nanoseconds per cycle!

If I multiply 0.4 nanoseconds per cycle with 300 cycles, I get 120 nanoseconds!!!

We just halved our time from the previous method of using get_clocktime() with the system clock!

We were a lot more precise as we weren't accounting for any system calls and kernel instructions for the most part.

Wow! We have nanosecond level precision.

That's not all. What if we ran our program with O3 optimization on our compiler. Could we get a lower number?

In fact we can....... and get the following results......

Result: 35 cycles

BOOOOOOOOM!!! Faster than the FLASH.... (jk, i've never seen the Flash)

Okay calm down buddy. Turns out our program was so simple the compiler precomputed the sum (I think).

Let's see the assembly just to make sure.

#APP

# 8 "test.cpp" 1

rdtsc

# 0 "" 2

#NO_APP

movq %rax, %rbx

#APP

# 16 "test.cpp" 1

rdtsc

# 0 "" 2

#NO_APP

movl $8, %edx

movq %rax, %rbp

... more assembly ...

_ZSt4endlIcSt11char_traitsIcEERSt13basic_ostreamIT_T0_ES6_@PLT

addq $8, %rsp

.cfi_def_cfa_offset 24

movl $190, %eax

Okay, we see the two rdtsc commands. BUT THERE IS ONLY 1 INSTRUCTION BETWEEN!

And the compiler precomputed the sum and stores 190 into %eax and returns that.

LOL so that's why our -O3 optimization looks faster. Things got reordered and precomputed.

So our algorithm/for loop in fact no longer exists.

Beautiful.

As my boss says at work...

"The best kind of code is no code"

The Conclusion

-

timeis gross and too slow -

getrusageis somewhat better but not as precise but it can show you user vs system time -

chrono's system_clock is better but has some imprecision. It has nanoseconds! -

rdtscis the GOAT! And using it is simple. Clock cycles are the lowest form of precison, even better than nanoseconds.

We started at 1 millisecond and went to 100 nanos. That is a factor of around 10,000x for our precision! (10^-3 to 10^-7)

I hope you learned how to be insanely precise...

Now I must leave... See you next time

(or many clock cycles from now)

Peace <3

- absterdabster

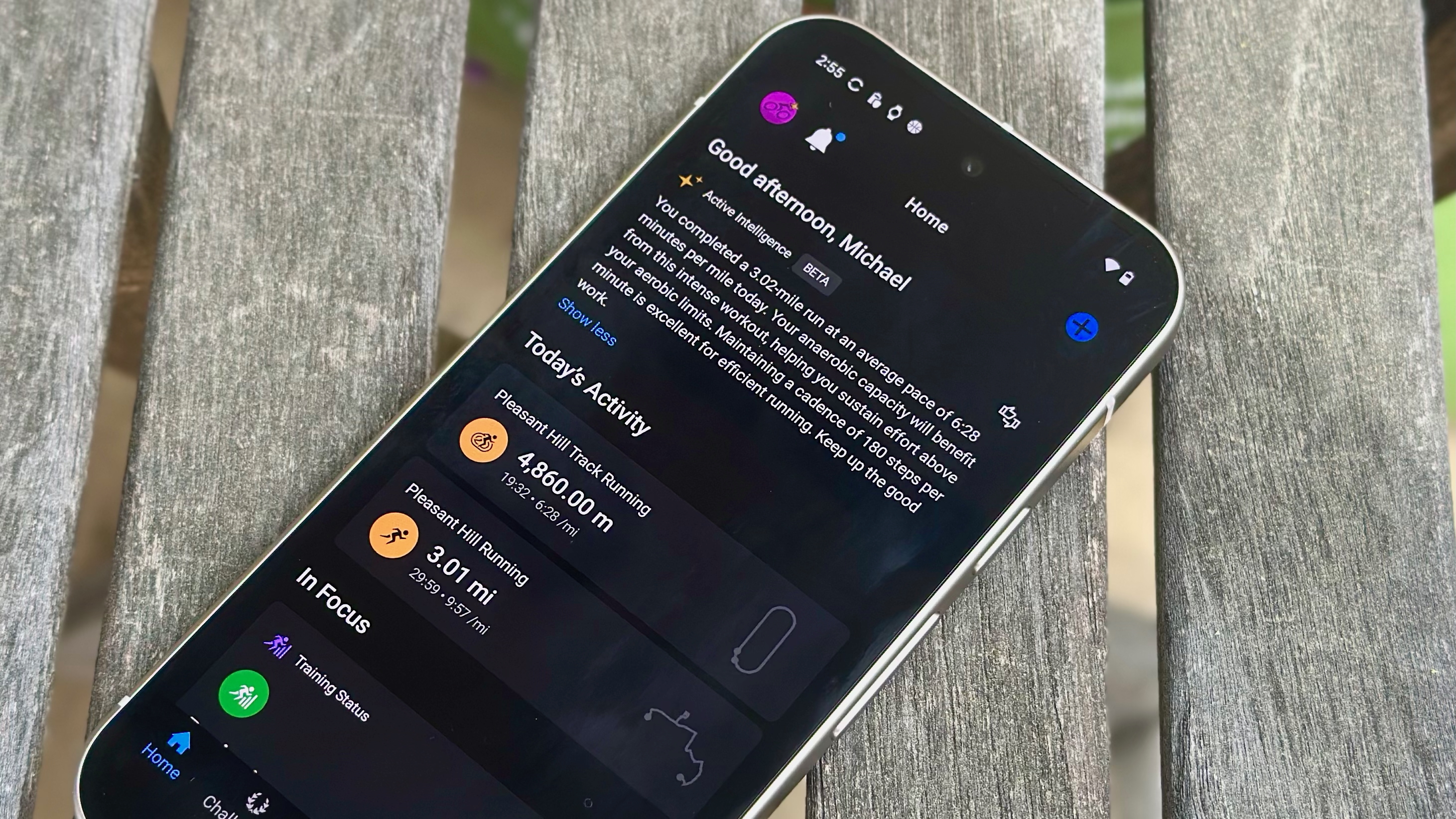

![Apple's M5 iPad Pro Enters Advanced Testing for 2025 Launch [Gurman]](https://www.iclarified.com/images/news/96865/96865/96865-640.jpg)

![M5 MacBook Pro Set for Late 2025, Major Redesign Waits Until 2026 [Gurman]](https://www.iclarified.com/images/news/96868/96868/96868-640.jpg)

![Apple to Revamp Health App with AI-Powered Doctor [Gurman]](https://www.iclarified.com/images/news/96870/96870/96870-640.jpg)

![Lowest Prices Ever: Apple Pencil Pro Just $79.99, USB-C Pencil Only $49.99 [Deal]](https://www.iclarified.com/images/news/96863/96863/96863-640.jpg)

![What Google Messages features are rolling out [March 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2023/12/google-messages-name-cover.png?resize=1200%2C628&quality=82&strip=all&ssl=1)

![[The AI Show Episode 141]: Road to AGI (and Beyond) #1 — The AI Timeline is Accelerating](https://www.marketingaiinstitute.com/hubfs/ep%20141.1.png)

![[The AI Show Episode 140]: New AGI Warnings, OpenAI Suggests Government Policy, Sam Altman Teases Creative Writing Model, Claude Web Search & Apple’s AI Woes](https://www.marketingaiinstitute.com/hubfs/ep%20140%20cover.png)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![From broke musician to working dev. How college drop-out Ryan Furrer taught himself to code [Podcast #166]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743189826063/2080cde4-6fc0-46fb-b98d-b3d59841e8c4.png?#)

![[FREE EBOOKS] The Ultimate Linux Shell Scripting Guide, Artificial Intelligence for Cybersecurity & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.png?#)