Multimodal Queries Require Multimodal RAG: Researchers from KAIST and DeepAuto.ai Propose UniversalRAG—A New Framework That Dynamically Routes Across Modalities and Granularities for Accurate and Efficient Retrieval-Augmented Generation

This post appeared first on MarkTechPost.

Retrieval-augmented generation (RAG) has proven effective in improving the factual accuracy of large language models (LLMs) by grounding their outputs in relevant external information. However, most existing RAG implementations are limited to text-based corpora. This restricts their applicability in real-world scenarios where queries may require diverse types of information, ranging from textual definitions to spatial understanding from images or temporal reasoning from videos. Some recent approaches have extended RAG to modalities such as images and videos, but these systems are often constrained to a single modality-specific corpus, which limits their ability to respond to the wide spectrum of user queries that demand multimodal reasoning. Moreover, current RAG methods usually retrieve from all modalities without determining which one is most relevant to a given query, making the process inefficient and poorly adapted to specific information needs.

To address this, recent research emphasizes the need for adaptive RAG systems that determine the appropriate modality and retrieval granularity based on the query context. Strategies include routing queries by complexity (for example, choosing between no retrieval, single-step retrieval, or multi-step retrieval) and using model confidence to trigger retrieval only when needed. The granularity of retrieval also plays a crucial role: studies have shown that indexing corpora at finer levels, such as propositions or specific video clips, can significantly improve retrieval relevance and system performance. Hence, for RAG to support complex, real-world information needs, it must handle multiple modalities and adapt its retrieval depth and scope to the demands of each query.
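As a rough illustration of the confidence-triggered and complexity-based strategies mentioned above, the sketch below decides whether to retrieve by checking the token probabilities of a draft answer, and then picks single-step or multi-step retrieval from a complexity estimate. It is a minimal sketch under stated assumptions, not the method of any particular paper: `token_logprobs` (per-token natural-log probabilities of a draft answer), `estimated_hops` (a stand-in for a query-complexity classifier), and the 0.5 threshold are all hypothetical.

```python
import math
from typing import Sequence


def should_retrieve(token_logprobs: Sequence[float], threshold: float = 0.5) -> bool:
    """Trigger retrieval if any draft-answer token has probability below `threshold`.

    `token_logprobs` are natural-log token probabilities (hypothetical input;
    how they are obtained depends on the model and serving API).
    """
    if not token_logprobs:
        return True  # no draft to judge, so fall back to retrieving
    min_prob = min(math.exp(lp) for lp in token_logprobs)
    return min_prob < threshold


def choose_strategy(token_logprobs: Sequence[float], estimated_hops: int,
                    threshold: float = 0.5) -> str:
    """Pick no retrieval, single-step, or multi-step retrieval.

    `estimated_hops` is a hypothetical stand-in for a query-complexity classifier.
    """
    if not should_retrieve(token_logprobs, threshold):
        return "no_retrieval"
    return "multi_step" if estimated_hops > 1 else "single_step"


confident_draft = [math.log(0.95), math.log(0.90), math.log(0.88)]
uncertain_draft = [math.log(0.95), math.log(0.30), math.log(0.90)]
print(choose_strategy(confident_draft, estimated_hops=2))
print(choose_strategy(uncertain_draft, estimated_hops=2))
```

Using the minimum token probability, rather than the mean, means a single uncertain token is enough to trigger retrieval; a mean-based score would tolerate isolated low-confidence tokens.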

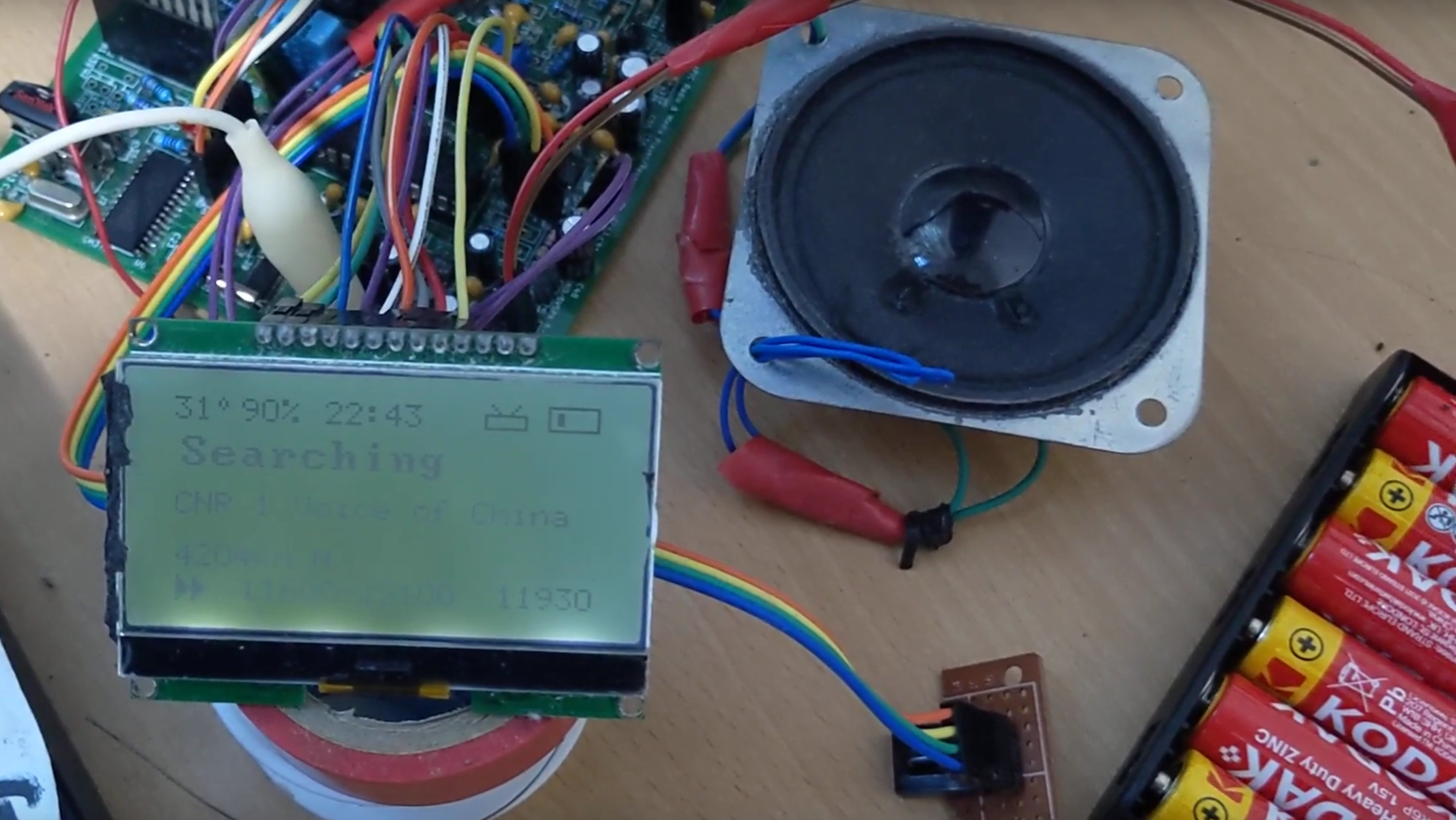

Researchers from KAIST and DeepAuto.ai introduce UniversalRAG, a RAG framework that retrieves and integrates knowledge from various modality-specific sources (text, image, video) and multiple granularity levels. Unlike traditional approaches that embed all modalities into a shared space, leading to modality bias, UniversalRAG uses a modality-aware routing mechanism to select the most relevant corpus dynamically based on the query. It further enhances retrieval precision by organizing each modality into granularity-specific corpora, such as paragraphs or video clips. Validated on eight multimodal benchmarks, UniversalRAG consistently outperforms unified and modality-specific baselines, demonstrating its adaptability to diverse query needs.
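To make granularity-specific corpora concrete, here is a minimal sketch that builds a paragraph-level and a document-level text corpus, plus a clip-level and a full-video corpus, from the same raw sources. The `Entry` type, splitting paragraphs on blank lines, and the fixed 30-second clip length are illustrative assumptions, not details taken from UniversalRAG.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    source_id: str    # document or video identifier
    content: str      # text for text corpora; a "start-end" span (seconds) for video
    granularity: str  # "paragraph", "document", "clip", or "video"


def build_text_corpora(docs: dict[str, str]) -> dict[str, list[Entry]]:
    """Index each document twice: once whole (coarse) and once per paragraph (fine)."""
    paragraphs, documents = [], []
    for doc_id, text in docs.items():
        documents.append(Entry(doc_id, text, "document"))
        for para in text.split("\n\n"):  # assumption: blank lines separate paragraphs
            if para.strip():
                paragraphs.append(Entry(doc_id, para.strip(), "paragraph"))
    return {"paragraph": paragraphs, "document": documents}


def build_video_corpora(durations: dict[str, float],
                        clip_len: float = 30.0) -> dict[str, list[Entry]]:
    """Index each video whole (coarse) and as fixed-length clips (fine)."""
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    clips, videos = [], []
    for vid, dur in durations.items():
        videos.append(Entry(vid, f"0.0-{dur:.1f}", "video"))
        start = 0.0
        while start < dur:
            end = min(start + clip_len, dur)  # last clip may be shorter
            clips.append(Entry(vid, f"{start:.1f}-{end:.1f}", "clip"))
            start = end
    return {"clip": clips, "video": videos}


text_corpora = build_text_corpora({"d1": "First paragraph.\n\nSecond paragraph."})
video_corpora = build_video_corpora({"v1": 75.0})
print(len(text_corpora["paragraph"]), len(video_corpora["clip"]))
```

For example, a 75-second video yields three clip entries (0-30, 30-60, and 60-75 seconds) plus one full-video entry, so a router can later choose between a short segment and the whole video.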

UniversalRAG is a retrieval-augmented generation framework that handles queries across different modalities and data granularities. Unlike standard RAG models limited to a single corpus, UniversalRAG separates knowledge into text, image, and video corpora, with text and video further divided into fine- and coarse-grained levels. A routing module first determines the best modality and granularity for a given query, choosing among options such as no retrieval, paragraphs, full documents, images, video clips, or full videos, and then retrieves relevant information from the selected corpus. The router can be either a training-free LLM-based classifier or a trained model supervised with heuristic labels derived from benchmark datasets. A large vision-language model (LVLM) then uses the retrieved content to generate the final response.
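The route-then-retrieve flow can be sketched as follows. This is a hedged illustration, not UniversalRAG's code: the label set mirrors the retrieval options named in the article, while `ROUTER_PROMPT`, the `llm` callable, the fallback to `paragraph`, the word-overlap retriever, and the `generate` stub standing in for the LVLM are all hypothetical.

```python
from __future__ import annotations

from typing import Callable, Sequence

# Route labels corresponding to the retrieval options named in the article.
ROUTES = ("none", "paragraph", "document", "image", "clip", "video")

# Hypothetical prompt for a training-free, LLM-based router.
ROUTER_PROMPT = (
    "Decide which knowledge source is needed to answer the query.\n"
    "Options: " + ", ".join(ROUTES) + "\n"
    "Query: {query}\n"
    "Reply with exactly one option."
)


def route(query: str, llm: Callable[[str], str]) -> str:
    """Ask an LLM for a route label; fall back to 'paragraph' on unknown replies."""
    reply = llm(ROUTER_PROMPT.format(query=query)).strip().lower()
    return reply if reply in ROUTES else "paragraph"  # fallback is an assumption


def overlap_retrieve(query: str, corpus: Sequence[str], k: int) -> list[str]:
    """Toy retriever: rank entries by how many query words they share."""
    q_words = set(query.lower().split())
    ranked = sorted(corpus, key=lambda e: len(q_words & set(e.lower().split())),
                    reverse=True)
    return ranked[:k]


def answer(query: str, llm: Callable[[str], str], corpora: dict[str, list[str]],
           generate: Callable[[str, list[str]], str], k: int = 2):
    """Route the query, retrieve only from the chosen corpus, then generate."""
    label = route(query, llm)
    context = [] if label == "none" else overlap_retrieve(query, corpora.get(label, []), k)
    return label, generate(query, context)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call (hypothetical): 'scene' questions go to clips."""
    return " Clip\n" if "scene" in prompt else "none"


corpora = {"clip": ["goal scene at minute 12", "crowd cheering", "halftime interview"]}
print(answer("Which scene shows the goal?", fake_llm, corpora, lambda q, ctx: ctx))
```

A trained router could be swapped in by replacing `route` with a classifier that maps the query directly to one of the same labels; the retrieval and generation steps would stay the same.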

The experimental setup evaluates UniversalRAG across six retrieval scenarios: no retrieval, paragraph, document, image, clip, and video. For the no-retrieval scenario, MMLU tests general knowledge. Paragraph-level tasks use SQuAD and Natural Questions, while HotpotQA covers multi-hop document retrieval. Image-based queries come from WebQA, and video queries are drawn from the LVBench and VideoRAG datasets, split into clip-level and full-video-level subsets. A corresponding retrieval corpus is curated for each modality: Wikipedia-based corpora for text, WebQA for images, and YouTube videos for video tasks. Together, these benchmarks allow the evaluation to span varied modalities and retrieval granularities.
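The benchmark-to-scenario assignment above can also serve as supervision for a trained router. The sketch below is a minimal illustration, assuming that a query's heuristic route label is simply the scenario of the dataset it came from; `DATASET_ROUTE`, `heuristic_labels`, and `routing_accuracy` are hypothetical names, and the per-split labels for LVBench and VideoRAG are deliberately left out rather than guessed.

```python
from __future__ import annotations

from typing import Callable, Iterable

# Route label per benchmark, following the scenario assignment described above.
# LVBench and VideoRAG are split into clip-level and full-video subsets; their
# per-split labels ("clip" or "video") are omitted here rather than guessed.
DATASET_ROUTE = {
    "MMLU": "none",
    "SQuAD": "paragraph",
    "Natural Questions": "paragraph",
    "HotpotQA": "document",
    "WebQA": "image",
}


def heuristic_labels(examples: Iterable[tuple[str, str]]) -> list[tuple[str, str]]:
    """Turn (dataset, query) pairs into (query, route) training pairs.

    Queries from datasets without a known route are skipped.
    """
    return [(query, DATASET_ROUTE[dataset])
            for dataset, query in examples if dataset in DATASET_ROUTE]


def routing_accuracy(predict: Callable[[str], str],
                     labeled: list[tuple[str, str]]) -> float:
    """Fraction of queries for which the router picks the dataset-derived route."""
    if not labeled:
        return 0.0
    hits = sum(predict(query) == gold for query, gold in labeled)
    return hits / len(labeled)


# Hypothetical example queries, for illustration only.
examples = [("MMLU", "Which gas do plants absorb for photosynthesis?"),
            ("WebQA", "What color is the roof of this building?")]
labeled = heuristic_labels(examples)
print(routing_accuracy(lambda q: "none", labeled))
```

A router that always answers `none` is right only on the MMLU-style query here, which is why per-route accuracy is a useful sanity check before measuring end-to-end answer quality.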

In conclusion, UniversalRAG is a retrieval-augmented generation framework that retrieves knowledge from multiple modalities and levels of granularity. Unlike existing RAG methods that rely on a single corpus, often text-only, or on a single-modality source, UniversalRAG dynamically routes each query to the most appropriate modality- and granularity-specific corpus. This design addresses problems such as the modality gap and rigid retrieval structures. Evaluated on eight multimodal benchmarks, UniversalRAG outperforms both unified and modality-specific baselines. The study also highlights the benefits of fine-grained retrieval and shows that both trained and training-free routing mechanisms contribute to robust, flexible multimodal reasoning.

