Python Graph Databases: Efficient Techniques for Connected Data Analysis

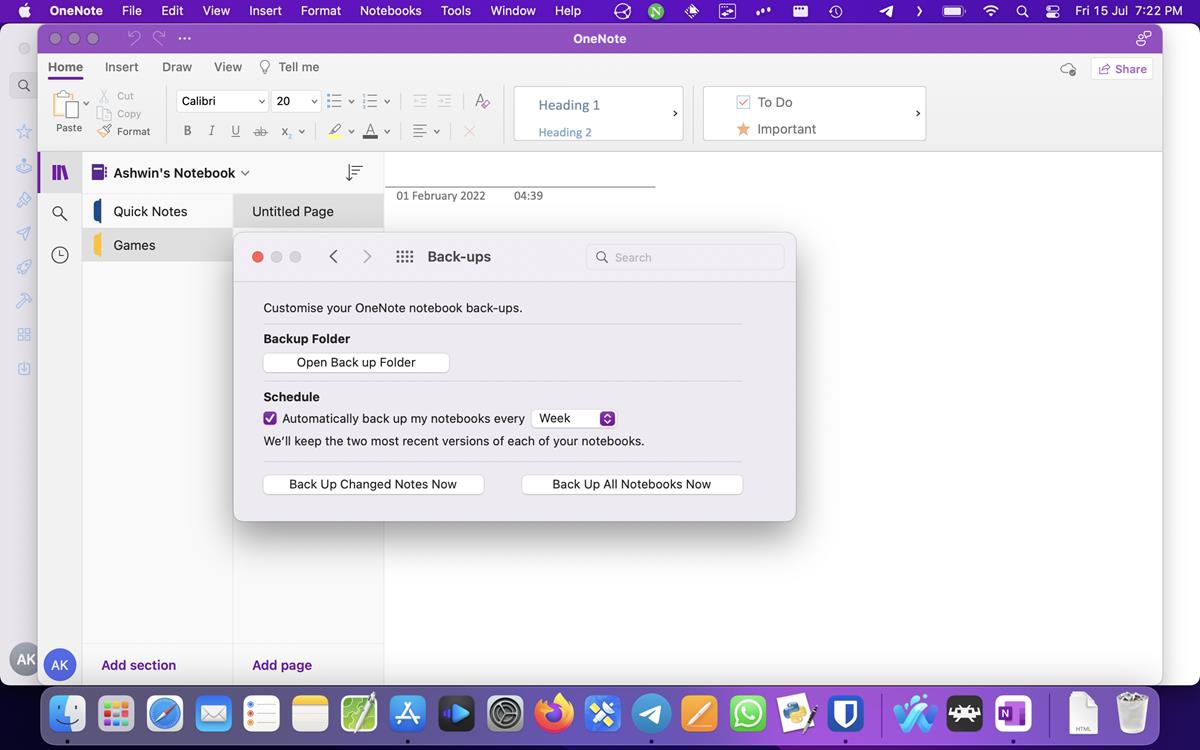



Graph databases are an essential tool in modern data analysis, offering a natural way to represent and query connected data. With Python's extensive ecosystem, we can harness the power of these databases efficiently. I've worked with graph databases for years and found certain techniques particularly valuable for optimizing performance and developer productivity.

Understanding Graph Database Fundamentals

Graph databases store data in nodes and edges (relationships), making them ideal for handling complex relationships. Unlike relational databases, they excel at traversing connections between entities without expensive join operations.

The property graph model used by most graph databases allows attributes on both nodes and relationships, creating rich data representations. This structure naturally fits many real-world scenarios from social networks to recommendation systems.
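To make the property graph model concrete, here is a minimal in-memory sketch in plain Python. The `PropertyGraph` class and its method names are my own illustration, not the API of any graph database; the point is only that both nodes and edges carry attributes and that a traversal follows adjacency lists instead of joining tables.

```python
from collections import defaultdict


class PropertyGraph:
    """Tiny in-memory property graph: attributes on nodes and on edges."""

    def __init__(self):
        self.nodes = {}                     # node id -> property dict
        self.out_edges = defaultdict(list)  # node id -> [(type, target, props)]

    def add_node(self, node_id, **props):
        self.nodes[node_id] = props

    def add_edge(self, source, rel_type, target, **props):
        self.out_edges[source].append((rel_type, target, props))

    def neighbors(self, node_id, rel_type):
        """Follow outgoing edges of one type from a single node."""
        return [t for r, t, _ in self.out_edges[node_id] if r == rel_type]


pg = PropertyGraph()
pg.add_node("alice", label="Person", age=30)
pg.add_node("bob", label="Person", age=32)
pg.add_node("carol", label="Person", age=27)
pg.add_edge("alice", "FRIENDS_WITH", "bob", since=2010)
pg.add_edge("bob", "FRIENDS_WITH", "carol", since=2015)

# Friends of friends: two hops along adjacency lists, no join required
friends_of_friends = [
    fof
    for friend in pg.neighbors("alice", "FRIENDS_WITH")
    for fof in pg.neighbors(friend, "FRIENDS_WITH")
]
print(friends_of_friends)
```

A real graph database adds persistence, indexes, and a query language on top, but the traversal idea is the same.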

Py2neo for Neo4j Integration

Py2neo provides an intuitive Python interface for Neo4j, one of the most widely used graph databases. It wraps connections, nodes, relationships, and Cypher execution in ordinary Python objects.

```python
from py2neo import Graph, Node, Relationship, NodeMatcher

# Connect to Neo4j
graph = Graph("bolt://localhost:7687", auth=("neo4j", "password"))

# Create nodes and relationships
alice = Node("Person", name="Alice", age=30)
bob = Node("Person", name="Bob", age=32)
friendship = Relationship(alice, "FRIENDS_WITH", bob, since=2010)

# Create in database
graph.create(alice)
graph.create(bob)
graph.create(friendship)

# Find nodes using NodeMatcher
matcher = NodeMatcher(graph)
alice_node = matcher.match("Person", name="Alice").first()
```

For better performance with large datasets, use transactions and batch operations:

```python
# Batch operations with transactions
def create_person_network(people_data, relationships_data):
    tx = graph.begin()
    nodes = {}

    # Create nodes
    for person in people_data:
        node = Node("Person", name=person["name"], age=person["age"])
        nodes[person["name"]] = node
        tx.create(node)

    # Create relationships
    for rel in relationships_data:
        source = nodes[rel["from"]]
        target = nodes[rel["to"]]
        relationship = Relationship(source, rel["type"], target)
        tx.create(relationship)

    tx.commit()
    return len(nodes), len(relationships_data)
```

Cypher Query Optimization

Cypher is Neo4j's query language. Writing efficient Cypher queries saves significant execution time:

```python
# Inefficient query
def find_mutual_friends_inefficient(person1, person2):
    query = """
        MATCH (p1:Person {name: $name1})-[:FRIENDS_WITH]->(friend)
        MATCH (p2:Person {name: $name2})-[:FRIENDS_WITH]->(friend)
        RETURN friend.name
    """
    return graph.run(query, name1=person1, name2=person2).data()


# Optimized query using pattern matching
def find_mutual_friends_efficient(person1, person2):
    query = """
        MATCH (p1:Person {name: $name1})-[:FRIENDS_WITH]->(friend)<-[:FRIENDS_WITH]-(p2:Person {name: $name2})
        RETURN friend.name
    """
    return graph.run(query, name1=person1, name2=person2).data()
```

Gremlin-Python for Multi-Database Compatibility

For projects requiring database flexibility, Apache TinkerPop's Gremlin query language works across compatible graph databases such as JanusGraph, Amazon Neptune, and Azure Cosmos DB.

```python
from gremlin_python.process.anonymous_traversal import traversal
from gremlin_python.process.graph_traversal import __
from gremlin_python.process.traversal import Column, Order, P, Scope
from gremlin_python.driver.driver_remote_connection import DriverRemoteConnection

# Connect to a Gremlin server
connection = DriverRemoteConnection('ws://localhost:8182/gremlin', 'g')
g = traversal().withRemote(connection)

# Create a vertex (node)
g.addV('person').property('name', 'Charlie').property('age', 35).next()

# Add an edge (relationship)
(g.V().has('person', 'name', 'Alice').as_('a')
  .V().has('person', 'name', 'Charlie').as_('c')
  .addE('knows').from_('a').to('c').property('since', 2022).next())


# Query with traversal optimization
def recommend_friends(person_name, max_recommendations=5):
    return (
        g.V().has('person', 'name', person_name).as_('p')
        .out('knows').aggregate('direct')
        # Keep friends-of-friends that are not already direct friends
        .out('knows').where(P.without('direct'))
        .where(__.not_(__.has('name', person_name)))
        .groupCount().by('name')
        # Sort the resulting map by count, highest first, and trim it
        .order(Scope.local).by(Column.values, Order.desc)
        .limit(Scope.local, max_recommendations)
        .toList()
    )
```

NetworkX Integration for Analysis

NetworkX provides powerful graph analysis capabilities that complement graph databases:

```python
import networkx as nx
from py2neo import Graph

# Connect to Neo4j
neo4j_graph = Graph("bolt://localhost:7687", auth=("neo4j", "password"))


# Convert Neo4j graph to NetworkX
def neo4j_to_networkx(query):
    results = neo4j_graph.run(query)
    G = nx.DiGraph()
    for record in results:
        # Assuming query returns rows with 'source', 'target', and 'properties'
        G.add_edge(
            record["source"]["name"],
            record["target"]["name"],
            **record["properties"]
        )
    return G


# Example: Get social network as NetworkX graph
social_query = """
    MATCH (p1:Person)-[r:FRIENDS_WITH]->(p2:Person)
    RETURN p1 as source, p2 as target, properties(r) as properties
"""
nx_graph = neo4j_to_networkx(social_query)

# Now use NetworkX for analysis
betweenness = nx.betweenness_centrality(nx_graph)
communities = nx.community.greedy_modularity_communities(nx_graph.to_undirected())
```

Efficient Data Modeling Techniques

Proper graph data modeling significantly impacts performance:

```python
import json


# Example of efficient data modeling with py2neo
def create_optimized_product_catalog(products, categories):
    # Create indexes before opening the data transaction.
    # This is the syntax of recent Neo4j versions; older servers used
    # CREATE INDEX ON :Label(prop). The index names are illustrative.
    graph.run("CREATE INDEX category_name IF NOT EXISTS FOR (c:Category) ON (c.name)")
    graph.run("CREATE INDEX product_sku IF NOT EXISTS FOR (p:Product) ON (p.sku)")

    tx = graph.begin()

    # Create category nodes
    category_nodes = {}
    for category in categories:
        cat_node = Node("Category", name=category["name"])
        category_nodes[category["id"]] = cat_node
        tx.create(cat_node)

    # Create product nodes with optimized properties
    for product in products:
        # Store frequently queried properties directly on node
        prod_node = Node(
            "Product",
            sku=product["sku"],
            name=product["name"],
            price=product["price"],
            # Store less-queried data as JSON
            details=json.dumps(product["details"])
        )
        tx.create(prod_node)

        # Connect to categories
        for cat_id in product["categories"]:
            rel = Relationship(
                prod_node,
                "BELONGS_TO",
                category_nodes[cat_id]
            )
            tx.create(rel)

    tx.commit()
```

Query Caching and Result Reuse

Implement caching for frequent queries:

```python
import functools
from datetime import datetime, timedelta


# Simple time-based cache for expensive graph queries
def timed_cache(max_age_seconds=300):
    def decorator(func):
        cache = {}

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            key = str(args) + str(kwargs)
            current_time = datetime.now()
            if key in cache:
                result, timestamp = cache[key]
                if current_time - timestamp < timedelta(seconds=max_age_seconds):
                    return result
            result = func(*args, **kwargs)
            cache[key] = (result, current_time)
            return result

        return wrapper
    return decorator


# Apply to expensive queries
@timed_cache(max_age_seconds=60)
def get_recommendation_graph(user_id):
    # u is carried through WITH so the final filter can still refer to it
    query = """
        MATCH (u:User {id: $user_id})-[:PURCHASED]->(p:Product)<-[:PURCHASED]-(other:User)
        WHERE u <> other
        WITH u, other, count(*) AS commonProducts
        ORDER BY commonProducts DESC
        LIMIT 100
        MATCH (other)-[:PURCHASED]->(rec:Product)
        WHERE NOT EXISTS((u)-[:PURCHASED]->(rec))
        RETURN rec.name, count(*) AS score
        ORDER BY score DESC
        LIMIT 10
    """
    return graph.run(query, user_id=user_id).data()
```
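To see how a time-based cache behaves without a running database, here is a small sketch that swaps the wall clock for a fake one. The `ttl_cache` decorator, the `clock` parameter, and `expensive_query` are hypothetical names introduced only for this demonstration; the idea mirrors the decorator above but is not identical to it.

```python
import functools
import time


def ttl_cache(max_age_seconds, clock=time.monotonic):
    """Cache results per positional-argument tuple for max_age_seconds."""
    def decorator(func):
        cache = {}

        @functools.wraps(func)
        def wrapper(*args):
            now = clock()
            if args in cache:
                result, stored_at = cache[args]
                if now - stored_at < max_age_seconds:
                    return result
            result = func(*args)
            cache[args] = (result, now)
            return result

        return wrapper
    return decorator


fake_now = [0.0]  # mutable fake clock, advanced by hand
calls = []        # records every real "query" execution


@ttl_cache(max_age_seconds=60, clock=lambda: fake_now[0])
def expensive_query(user_id):
    calls.append(user_id)  # stand-in for graph.run(...)
    return f"recommendations for {user_id}"


expensive_query(1)   # miss: runs the query
expensive_query(1)   # hit: served from the cache
fake_now[0] = 61.0
expensive_query(1)   # entry expired: runs the query again
print(len(calls))
```

Passing the clock in as a parameter is a design choice that makes expiry testable; in production the default monotonic clock would be used.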

Bulk Operations for Large Datasets

Efficiently handling large datasets requires specialized approaches:

```python
import csv

from py2neo import Graph, Node, Relationship

graph = Graph("bolt://localhost:7687", auth=("neo4j", "password"))


def bulk_import_from_csv(nodes_file, relationships_file, batch_size=5000):
    # Import nodes
    node_mapping = {}
    total_nodes = 0

    with open(nodes_file, 'r') as f:
        reader = csv.DictReader(f)
        batch = []
        for row in reader:
            node_id = row.pop('id')
            node_label = row.pop('label')

            # Convert data types
            for key, value in row.items():
                if value.isdigit():
                    row[key] = int(value)
                elif value.lower() in ('true', 'false'):
                    row[key] = value.lower() == 'true'

            node = Node(node_label, **row)
            batch.append((node_id, node))

            if len(batch) >= batch_size:
                tx = graph.begin()
                for node_id, node in batch:
                    tx.create(node)
                    node_mapping[node_id] = node
                tx.commit()
                total_nodes += len(batch)
                print(f"Imported {total_nodes} nodes")
                batch = []

        # Import remaining nodes
        if batch:
            tx = graph.begin()
            for node_id, node in batch:
                tx.create(node)
                node_mapping[node_id] = node
            tx.commit()
            total_nodes += len(batch)
            print(f"Imported {total_nodes} nodes (final batch)")

    # Import relationships
    total_rels = 0

    with open(relationships_file, 'r') as f:
        reader = csv.DictReader(f)
        batch = []
        for row in reader:
            start_id = row.pop('start_id')
            end_id = row.pop('end_id')
            rel_type = row.pop('type')

            # Convert data types for properties
            for key, value in row.items():
                if value.isdigit():
                    row[key] = int(value)
                elif value.lower() in ('true', 'false'):
                    row[key] = value.lower() == 'true'

            if start_id in node_mapping and end_id in node_mapping:
                rel = Relationship(
                    node_mapping[start_id],
                    rel_type,
                    node_mapping[end_id],
                    **row
                )
                batch.append(rel)

            if len(batch) >= batch_size:
                tx = graph.begin()
                for rel in batch:
                    tx.create(rel)
                tx.commit()
                total_rels += len(batch)
                print(f"Imported {total_rels} relationships")
                batch = []

        # Import remaining relationships
        if batch:
            tx = graph.begin()
            for rel in batch:
                tx.create(rel)
            tx.commit()
            total_rels += len(batch)
            print(f"Imported {total_rels} relationships (final batch)")

    return total_nodes, total_rels
```
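The two ideas doing the work in the importer, type coercion and fixed-size batching, can be tried in isolation. The sketch below uses an in-memory CSV and two helper names of my own (`coerce`, `batched`); it does not touch a database, and each yielded batch is where one transaction would go.

```python
import csv
import io
from itertools import islice


def coerce(value):
    """Turn CSV strings into int or bool where the text allows it."""
    if value.isdigit():
        return int(value)
    if value.lower() in ("true", "false"):
        return value.lower() == "true"
    return value


def batched(rows, batch_size):
    """Yield lists of at most batch_size rows from any iterable."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical sample data standing in for a nodes file
sample_csv = io.StringIO(
    "id,label,name,age,active\n"
    "1,Person,Alice,30,true\n"
    "2,Person,Bob,32,false\n"
    "3,Person,Carol,27,true\n"
)
reader = csv.DictReader(sample_csv)
rows = [{key: coerce(value) for key, value in row.items()} for row in reader]

# Each batch would be written inside one transaction
batches = list(batched(rows, batch_size=2))
print([len(batch) for batch in batches])
```

A generator like `batched` also removes the need for a separate "remaining rows" branch, since the last short batch is yielded like any other.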

Path Compression for Deep Traversals

For deep traversals, path compression improves performance:

```python
def build_hierarchical_index(graph):
    # Create shortcut relationships for faster traversal.
    # The path must be bound to a variable so length(path) can be used.
    query = """
        MATCH path = (parent:Category)<-[:SUBCATEGORY_OF*1..5]-(descendant:Category)
        WHERE NOT (descendant)-[:SUBCATEGORY_OF]->(parent)
        MERGE (descendant)-[:ANCESTOR_OF {distance: length(path)}]->(parent)
    """
    graph.run(query)


# Now queries can use these shortcuts
def get_all_products_in_category_tree(category_name):
    # This query uses the pre-computed paths. Direct subcategories have no
    # shortcut, so SUBCATEGORY_OF is matched alongside ANCESTOR_OF.
    query = """
        MATCH (c:Category {name: $category})<-[:ANCESTOR_OF|SUBCATEGORY_OF]-(subcat:Category)
        MATCH (subcat)<-[:BELONGS_TO]-(product:Product)
        RETURN product.name, product.price, subcat.name as subcategory
    """
    return graph.run(query, category=category_name).data()
```
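The shortcut idea is easier to reason about on a toy hierarchy. This sketch precomputes, for each category, every ancestor and its distance by walking parent links, capped at five levels like the Cypher above. The `parent_of` mapping and function names are hypothetical, and unlike the Cypher it records direct parents too.

```python
def build_ancestor_index(parent_of, max_depth=5):
    """Map each category to {ancestor: distance} by walking parent links."""
    index = {}
    for category in parent_of:
        ancestors = {}
        current = parent_of.get(category)
        distance = 1
        while current is not None and distance <= max_depth:
            ancestors[current] = distance
            current = parent_of.get(current)
            distance += 1
        index[category] = ancestors
    return index


# child -> parent; None marks the root
parent_of = {
    "Electronics": None,
    "Computers": "Electronics",
    "Laptops": "Computers",
    "Ultrabooks": "Laptops",
}
ancestor_index = build_ancestor_index(parent_of)


def descendants_of(category):
    """One lookup per category instead of a multi-hop traversal."""
    return sorted(c for c, anc in ancestor_index.items() if category in anc)


print(ancestor_index["Ultrabooks"])
print(descendants_of("Electronics"))
```

The trade-off is the usual one for precomputation: reads get cheaper, but the index has to be rebuilt or patched whenever the hierarchy changes.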

Application: Real-time Recommendation Engine

Here's how to implement a real-time recommendation engine:

```python
def get_personalized_recommendations(user_id, limit=10):
    # First approach: Collaborative filtering
    query1 = """
        MATCH (user:User {id: $user_id})-[:RATED]->(m:Movie)
        MATCH (m)<-[:RATED]-(similar:User)
        MATCH (similar)-[r:RATED]->(recommendation:Movie)
        WHERE NOT EXISTS((user)-[:RATED]->(recommendation))
        WITH recommendation, AVG(r.rating) AS score, COUNT(*) AS frequency
        WHERE frequency > 5
        RETURN recommendation.title, score
        ORDER BY score DESC, frequency DESC
        LIMIT $limit
    """

    # Second approach: Content-based filtering
    query2 = """
        MATCH (user:User {id: $user_id})-[:RATED]->(m:Movie)-[:HAS_GENRE]->(genre:Genre)
        MATCH (recommendation:Movie)-[:HAS_GENRE]->(genre)
        WHERE NOT EXISTS((user)-[:RATED]->(recommendation))
        WITH recommendation, COUNT(DISTINCT genre) AS genreOverlap
        MATCH (recommendation)-[:HAS_GENRE]->(genre:Genre)
        WITH recommendation, genreOverlap, COUNT(genre) AS totalGenres
        RETURN recommendation.title, genreOverlap * 1.0 / totalGenres AS score
        ORDER BY score DESC
        LIMIT $limit
    """

    # Run both approaches and combine results
    collaborative_results = graph.run(query1, user_id=user_id, limit=limit).data()
    content_results = graph.run(query2, user_id=user_id, limit=limit).data()

    # Simple hybrid approach: combine and re-rank
    combined = {}
    for item in collaborative_results + content_results:
        title = item["recommendation.title"]
        score = item["score"]
        combined[title] = combined.get(title, 0) + score

    # Return top recommendations
    sorted_recommendations = sorted(combined.items(), key=lambda x: x[1], reverse=True)
    return [{"title": title, "score": score} for title, score in sorted_recommendations[:limit]]
```
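One thing to watch in the simple hybrid step is that the two scores live on different scales: an average rating versus a genre-overlap fraction between 0 and 1. A possible variation, sketched here with made-up titles and scores, is to min-max normalize each result list before taking a weighted sum. The `normalize` and `combine` helpers and the 0.5 weight are my assumptions, not part of the function above.

```python
def normalize(results):
    """Min-max scale scores to [0, 1]; a single-valued list maps to 1.0."""
    if not results:
        return {}
    scores = [item["score"] for item in results]
    low, high = min(scores), max(scores)
    span = high - low
    return {
        item["title"]: (item["score"] - low) / span if span else 1.0
        for item in results
    }


def combine(collaborative, content, weight=0.5):
    """Weighted sum of normalized scores, highest first, ties by title."""
    collab = normalize(collaborative)
    cont = normalize(content)
    titles = set(collab) | set(cont)
    combined = {
        t: weight * collab.get(t, 0.0) + (1 - weight) * cont.get(t, 0.0)
        for t in titles
    }
    return sorted(combined.items(), key=lambda pair: (-pair[1], pair[0]))


# Hypothetical query results
collaborative = [
    {"title": "Alien", "score": 5.0},
    {"title": "Heat", "score": 4.0},
    {"title": "Up", "score": 3.0},
]
content = [
    {"title": "Heat", "score": 1.0},
    {"title": "Up", "score": 0.5},
]
ranked = combine(collaborative, content)
print(ranked)
```

Without normalization, the rating-based score would dominate the sum simply because its numbers are larger.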

Application: Social Network Analysis

Detecting communities and influencers in social networks:

```python
def identify_community_influencers(community_label):
    # Find influencers within a community.
    # Followers are the users who follow u, so the FOLLOWS edge points at u.
    query = """
        MATCH (u:User {community: $community})
        MATCH (u)<-[:FOLLOWS]-(follower)
        WITH u, COUNT(follower) AS followers
        MATCH (u)-[:POSTS]->(p:Post)<-[:LIKES]-(liker)
        WITH u, followers, COUNT(DISTINCT liker) AS engagement
        RETURN u.id, u.name, followers, engagement,
               followers * 0.6 + engagement * 0.4 AS influence_score
        ORDER BY influence_score DESC
        LIMIT 10
    """
    return graph.run(query, community=community_label).data()


def detect_communities(min_community_size=3):
    # Use graph algorithms for community detection.
    # First, create a projected graph. gds.graph.project is the GDS 2.x
    # name; GDS 1.x called this procedure gds.graph.create.
    graph.run("""
        CALL gds.graph.project(
            'socialGraph',
            'User',
            {
                FOLLOWS: {
                    orientation: 'UNDIRECTED'
                }
            }
        )
    """)

    try:
        # Run Louvain algorithm
        results = graph.run("""
            CALL gds.louvain.stream('socialGraph')
            YIELD nodeId, communityId
            WITH gds.util.asNode(nodeId) AS user, communityId
            WITH communityId, COLLECT(user) AS users
            WHERE size(users) >= $minSize
            RETURN communityId, size(users) AS communitySize,
                   [u IN users | u.name] AS members
            ORDER BY communitySize DESC
        """, minSize=min_community_size).data()
    finally:
        # Cleanup, even if the algorithm call fails
        graph.run("CALL gds.graph.drop('socialGraph')")

    return results
```
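The influence score in the first query is a plain weighted sum, so it is easy to check by hand. Here is the same arithmetic in Python on three made-up users; the names and counts are hypothetical, and the 0.6 and 0.4 weights are the ones from the query.

```python
def influence_score(followers, engagement):
    """Weighted sum used in the Cypher query: 60% reach, 40% engagement."""
    return followers * 0.6 + engagement * 0.4


# Hypothetical per-user counts
sample_users = [
    {"name": "Alice", "followers": 100, "engagement": 20},
    {"name": "Bob", "followers": 50, "engagement": 200},
    {"name": "Carol", "followers": 10, "engagement": 5},
]

ranked_users = sorted(
    sample_users,
    key=lambda u: influence_score(u["followers"], u["engagement"]),
    reverse=True,
)
for user in ranked_users:
    score = influence_score(user["followers"], user["engagement"])
    print(user["name"], round(score, 1))
```

Bob outranks Alice despite having half the followers, because raw like counts can be much larger than follower counts. If that is not the intent, the two inputs may need scaling before weighting.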

Application: Knowledge Graph Navigation

Exploring connected information in knowledge graphs:

```python
def explore_knowledge_graph(start_entity, max_depth=2):
    # Cypher does not accept a parameter as a variable-length bound, so the
    # depth is validated as an integer and written into the query text.
    depth = int(max_depth)
    if depth < 1:
        raise ValueError("max_depth must be at least 1")

    query = f"""
        MATCH path = (start {{name: $entity}})-[*1..{depth}]-(connected)
        WHERE start <> connected
        RETURN connected.name AS entity,
               [rel IN relationships(path) | type(rel)] AS relationship_types,
               length(path) AS distance
        ORDER BY distance, entity
    """
    return graph.run(query, entity=start_entity).data()


def answer_complex_query(question):
    # Example: "What medications treat conditions with symptoms like headache?"
    # The question argument is not parsed here; the query below is hard-coded
    # for the example question.
    query = """
        MATCH (symptom:Symptom {name: 'Headache'})
        MATCH (symptom)<-[:HAS_SYMPTOM]-(condition:Condition)
        MATCH (condition)<-[:TREATS]-(medication:Medication)
        RETURN medication.name AS medication,
               collect(DISTINCT condition.name) AS conditions,
               count(DISTINCT condition) AS relevance
        ORDER BY relevance DESC
    """
    return graph.run(query).data()
```
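For intuition about what a depth-limited exploration does, here is a breadth-first sketch over a small undirected adjacency map. The entity names and the `explore` function are hypothetical. Note one difference from the Cypher version: the variable-length pattern returns every path up to the depth limit, while this sketch keeps only the shortest distance to each entity.

```python
from collections import deque


def explore(adjacency, start, max_depth=2):
    """Return {entity: distance} for entities within max_depth hops of start."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if distances[current] == max_depth:
            continue  # do not expand past the depth limit
        for neighbor in adjacency.get(current, ()):
            if neighbor not in distances:
                distances[neighbor] = distances[current] + 1
                queue.append(neighbor)
    del distances[start]  # the start entity itself is not a result
    return distances


# Hypothetical knowledge graph edges, treated as undirected
edges = [
    ("Headache", "Migraine"),
    ("Migraine", "Sumatriptan"),
    ("Sumatriptan", "Triptans"),
]
adjacency = {}
for a, b in edges:
    adjacency.setdefault(a, set()).add(b)
    adjacency.setdefault(b, set()).add(a)

reachable = explore(adjacency, "Headache", max_depth=2)
print(reachable)
```

The number of reachable entities can grow very quickly with depth on a dense graph, which is why both this sketch and the query keep the bound small.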

Scaling Considerations

As your graph database grows, consider these scaling techniques:

- Index properties according to your query patterns

- Implement server-side procedures for complex algorithms

- Use connection pooling for multi-threaded applications

- Consider sharding for extremely large graphs

- Apply domain-driven partitioning where possible

```python
# Example connection pooling with py2neo
import threading
from concurrent.futures import ThreadPoolExecutor

from py2neo import Graph


class GraphDatabasePool:
    def __init__(self, uri, auth, max_connections=10):
        self.uri = uri
        self.auth = auth
        self.max_connections = max_connections
        self._pool = []
        self._lock = threading.Lock()  # guards _pool across worker threads

    def get_connection(self):
        with self._lock:
            if self._pool:
                return self._pool.pop()
        # Pool is empty: open a new connection outside the lock
        return Graph(self.uri, auth=self.auth)

    def release_connection(self, connection):
        with self._lock:
            if len(self._pool) < self.max_connections:
                self._pool.append(connection)

    def execute_query(self, query, params=None):
        conn = self.get_connection()
        try:
            result = conn.run(query, parameters=params)
            return result.data()
        finally:
            self.release_connection(conn)

    def parallel_query(self, query_params_list, max_workers=5):
        with ThreadPoolExecutor(max_workers=min(max_workers, self.max_connections)) as executor:
            futures = [
                executor.submit(self.execute_query, qp[0], qp[1])
                for qp in query_params_list
            ]
            return [future.result() for future in futures]
```
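If you want the pool to cap the number of live connections strictly, a blocking queue is one common alternative. The sketch below uses a fake connection class so it runs without a database; `BoundedPool`, `FakeConnection`, and `execute` are hypothetical names for illustration only.

```python
import queue
from concurrent.futures import ThreadPoolExecutor
from contextlib import contextmanager


class BoundedPool:
    """Fixed-size pool: callers block until a connection is free."""

    def __init__(self, factory, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self):
        conn = self._idle.get()  # blocks while all connections are in use
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always hand the connection back


class FakeConnection:
    """Hypothetical stand-in for a database connection."""

    def run(self, query):
        return f"ran: {query}"


pool = BoundedPool(FakeConnection, size=2)


def execute(query):
    with pool.connection() as conn:
        return conn.run(query)


# Four workers share two connections; executor.map keeps input order
with ThreadPoolExecutor(max_workers=4) as executor:
    results = list(executor.map(execute, ["q1", "q2", "q3", "q4"]))
print(results)
```

`queue.Queue` handles the locking internally, and the context manager guarantees a connection is returned even when a query raises.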

In my experience, graph databases truly shine when relationships are central to your data model. I've seen projects where switching from relational models to graph-based approaches led to 10-100x performance improvements for complex relationship queries. The techniques outlined above help ensure you get the most from your graph database implementation.

Working with graph databases in Python has transformed how I approach data modeling and analysis. These powerful tools let us represent the world's inherent connectedness in ways traditional databases simply can't match.

101 Books

101 Books is an AI-driven publishing company co-founded by author Aarav Joshi. By leveraging advanced AI technology, we keep our publishing costs incredibly low—some books are priced as low as $4—making quality knowledge accessible to everyone.

