How to Choose the Right LLM

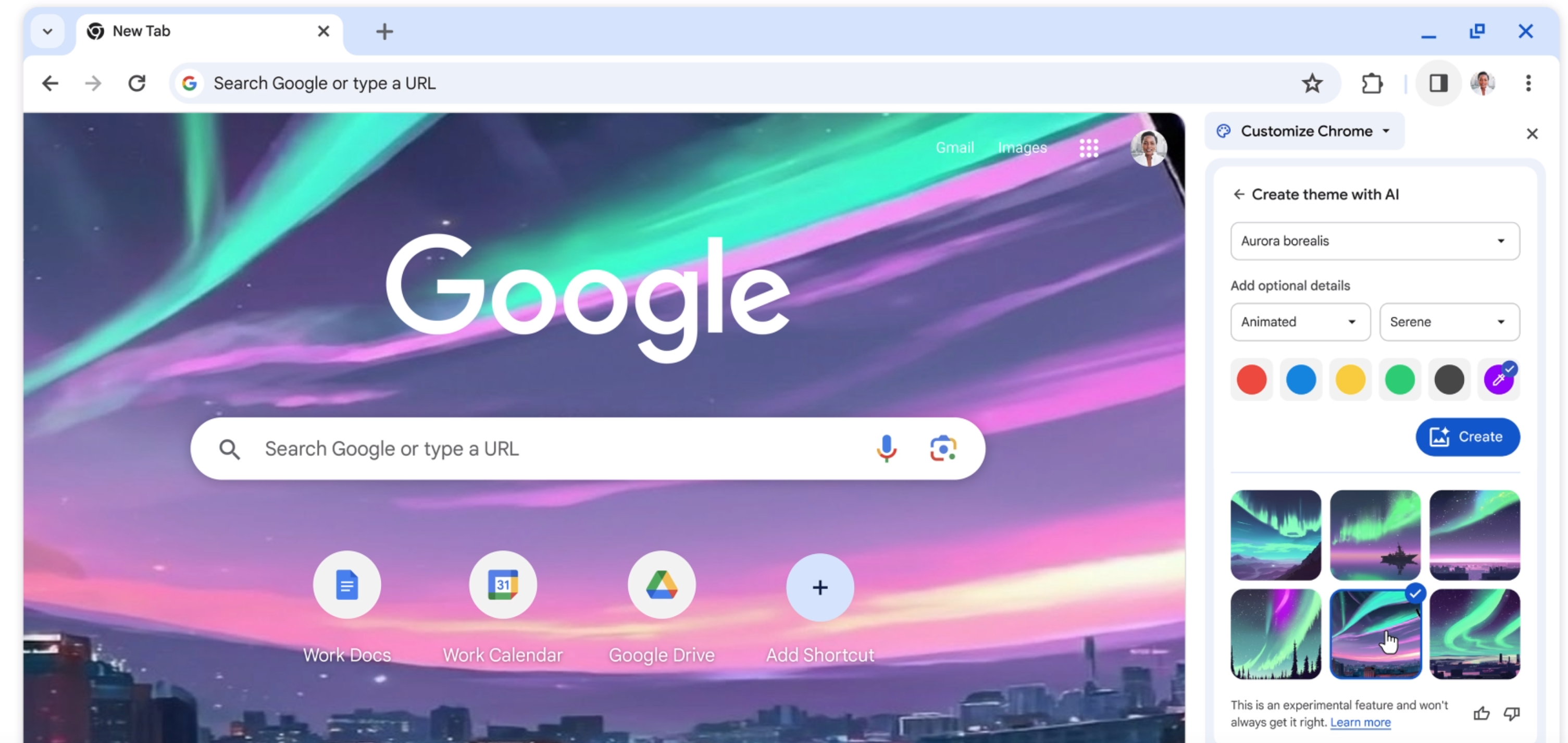

JetBrains AI Assistant is now multimodel! This is an exciting development, but what does it actually mean for you? It means AI Assistant is now more adaptable. It combines the strengths of different AI models, from the deep reasoning of large ones to the speed of compact ones, and even the privacy of local ones.

So, which model is best for you? Do you need just one, or should you mix and match for different jobs? The answer isn’t always obvious, but don’t worry – we’ve done the homework for you. In this post, we’ll break down the models that power AI Assistant, explaining what they’re best at and how you can make the most of them.

The metrics we consider important

First, let’s define the metrics that will help us compare the models.

Speed – How fast does the model generate responses? A slower model is not necessarily a worse one: some models take extra time because they use a reasoning-based approach, which can lead to more precise answers. Depending on your task, this metric can be crucial, for example when you need a quick response.

In this blog post, we share our in-house speed data, measured in tokens per second (TPS).
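As a rough illustration of how a TPS figure with a spread, like the ones in the table below, could be produced, here is a minimal sketch. It assumes you record, for each run, the number of generated tokens and the elapsed generation time, and that the ± value is one sample standard deviation across runs. The post does not describe exactly how the in-house numbers were measured, so the function names and the sample data are hypothetical.

```python
from statistics import mean, stdev


def tokens_per_second(num_tokens: int, seconds: float) -> float:
    """Throughput of a single generation run."""
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return num_tokens / seconds


def summarize_tps(runs: list[tuple[int, float]]) -> tuple[float, float]:
    """Return (mean TPS, sample standard deviation) across several runs.

    Each run is a (generated_tokens, elapsed_seconds) pair. At least two runs
    are needed, because the sample standard deviation requires two data points.
    """
    rates = [tokens_per_second(n, s) for n, s in runs]
    return mean(rates), stdev(rates)


# Hypothetical measurements: (tokens generated, seconds elapsed)
runs = [(500, 10.0), (600, 10.0), (400, 10.0)]
avg, spread = summarize_tps(runs)
print(f"{avg:.2f} ±{spread:.2f} TPS")  # 50.00 ±10.00 TPS
```

A large spread relative to the mean, as with several models in the table, suggests that speed varies noticeably from request to request.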

Hallucination rate – AI is powerful, but not perfect. Some models have a higher tendency to generate incorrect or misleading answers. The lower the hallucination rate, the better. In this blog post, we rely on GitHub’s data for hallucination rates.

Context window size – This defines how much text, including code, the model can process at once, measured in tokens. The larger the context window, the more the AI can “remember” in a single go, which can be crucial for working on complex projects.
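To get a feel for whether a set of files fits in a given context window, you can estimate token counts. The sketch below uses the common rule of thumb of about four characters per token. That ratio is an assumption: real tokenizers vary by model and by language, and source code often tokenizes differently from prose, so treat the result as a ballpark only. The file names and the `reserve_for_answer` margin are hypothetical.

```python
import math

# Rough heuristic (assumption): about 4 characters per token.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate the number of tokens in a piece of text."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(files: dict[str, str], context_window: int,
                    reserve_for_answer: int = 4_000) -> bool:
    """Check whether all files, plus an assumed margin for the reply, fit in the window."""
    total = sum(estimate_tokens(src) for src in files.values())
    return total + reserve_for_answer <= context_window


# Hypothetical project: two files of 200K and 300K characters.
project = {"main.py": "x" * 200_000, "utils.py": "y" * 300_000}
print(fits_in_context(project, context_window=128_000))    # False: ~125K tokens + 4K margin
print(fits_in_context(project, context_window=1_000_000))  # True
```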

Coding performance – This metric highlights how well the model can handle coding tasks. Several reliable benchmarks help us rate the LLMs:

- HumanEval+ measures how well an LLM can solve Python coding problems within a certain number of attempts. With 100 as the maximum, a high score means that the model is reliable and can generate correct code in one go.

- ChatBot Arena ranks LLMs based on real user feedback, making it one of the most dynamic and practical AI benchmarks today. If you see a higher number here, the model consistently outperforms others in vote-based, head-to-head comparisons.

- Aider’s polyglot benchmark evaluates how well LLMs write and fix code in multiple programming languages by checking whether their solutions run correctly. A high score indicates that the LLM is highly accurate and reliable at coding in multiple programming languages, meaning it’s a strong choice for a variety of development tasks.
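To make the first two benchmarks more concrete, here is a minimal sketch of the arithmetic behind them. `pass_at_k` is the unbiased pass@k estimator commonly used with HumanEval-style benchmarks: given `n` generated samples for a problem, of which `c` pass the tests, it estimates the chance that at least one of `k` attempts is correct. `elo_update` is a simplified online Elo update for a single head-to-head vote. ChatBot Arena’s published scores come from a statistical fit over all votes rather than this exact update, so treat it only as an illustration of how pairwise wins move ratings. The sample numbers are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed all tests, k: attempts allowed.
    Returns the probability that k samples drawn without replacement include
    at least one correct sample.
    """
    if n - c < k:
        return 1.0  # every possible draw of k samples contains a correct one
    return 1.0 - comb(n - c, k) / comb(n, k)


def elo_update(r_a: float, r_b: float, a_won: bool,
               k_factor: float = 32.0) -> tuple[float, float]:
    """Simplified Elo update after one vote between models A and B."""
    expected_a = 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))
    score_a = 1.0 if a_won else 0.0
    delta = k_factor * (score_a - expected_a)
    return r_a + delta, r_b - delta


# Hypothetical problem: 10 samples generated, 3 of them correct.
print(round(pass_at_k(10, 3, 1), 3))  # 0.3
print(round(pass_at_k(10, 3, 5), 3))  # 0.917
# Two equally rated models; A wins one vote.
print(elo_update(1300.0, 1300.0, a_won=True))  # (1316.0, 1284.0)
```

A benchmark score like HumanEval+ averages the per-problem pass@1 estimate over all problems, which is why a high score suggests the model tends to get code right on the first try.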

Which LLM to use for your coding tasks

Now that we’ve defined the metrics, let’s see how the LLMs supported by AI Assistant compare.

| LLM | Coding Performance | Speed (TPS) | Hallucination Rate | Context Window |
|---|---|---|---|---|
| **OpenAI** | | | | |
| **GPT-4o**<br>OpenAI’s most advanced and reliable GPT model, GPT-4o offers deep understanding and lightning-fast responses. | HumanEval+: 87.2<br>ChatBot Arena: 1,377<br>Aider: 27.1% | 53.20 ±15.57 | 1.5% | 128K tokens |
| **GPT-4o mini**<br>This smaller model distills GPT-4o’s power into a compact, low-latency package. | HumanEval+: 83.5<br>ChatBot Arena: 1,283<br>Aider: 55.6% | 62.78 ±19.72 | 1.7% | 128K tokens |
| **o1**<br>The o1 series models are trained with reinforcement learning to handle complex reasoning. They think before they respond, generating a detailed internal chain of thought to provide more accurate, logical, and well-structured answers. | HumanEval+: 89<br>ChatBot Arena: 1,358<br>Aider: 61.7% | 134.96 ±35.58 | 2.4% | 100K tokens |
| **o1-mini**<br>This smaller, cost-effective reasoning model nearly matches the coding performance of the full o1 model despite its size, as their comparable ChatBot Arena scores show. | HumanEval+: 89<br>ChatBot Arena: 1,353<br>Aider: 32.9% | 186.98 ±47.55 | 1.4% | 100K tokens |
| **o3-mini**<br>The latest small reasoning model, o3-mini delivers exceptional STEM capabilities, with particular strength in coding. It keeps the low cost and speed of o1-mini while matching the coding performance of the larger o1 model with faster responses. This makes it a very effective choice for coding and logical problem-solving tasks. | HumanEval+: –<br>ChatBot Arena: 1,353<br>Aider: 60.4% | 155.01 ±45.11 | 0.8% | 100K tokens |
| **Google** | | | | |
| **Gemini 2.0 Flash**<br>This high-speed, low-latency model is optimized for efficiency and performance. It is ideal for powering dynamic, agent-driven experiences. | HumanEval+: –<br>ChatBot Arena: 1,356<br>Aider: 22.2% | 103.89 ±23.60 | 0.7% | 1M tokens |
| **Gemini 1.5 Flash**<br>Google’s lightweight AI model is optimized for tasks where speed and efficiency matter most. It delivers high-quality performance on most tasks, rivaling larger models while being significantly more cost-efficient and responsive. | HumanEval+: 75.6<br>ChatBot Arena: 1,254<br>Aider: – | 112.57 ±24.03 | 0.7% | 1M tokens |
| **Gemini 1.5 Pro**<br>A powerful AI model built for deep reasoning across large-scale data, Gemini 1.5 Pro excels at analyzing, classifying, and summarizing vast amounts of content. It can handle over 100,000 lines of code with advanced comprehension, making it ideal for complex, multimodal tasks. | HumanEval+: 79.3<br>ChatBot Arena: 1,291<br>Aider: – | 45.47 ±7.78 | 0.8% | 1–2M tokens |
| **Anthropic** | | | | |
| **Claude 3.7 Sonnet**<br>Anthropic’s most advanced coding model balances speed and quality. It excels at full-cycle software development with agentic coding, deep problem-solving, and intelligent automation. | HumanEval+: –<br>ChatBot Arena: 1,364<br>Aider: 64.9% | 46.43 ±7.35 | – | 200K tokens |
| **Claude 3.5 Sonnet**<br>Until recently Anthropic’s most intelligent model, Claude 3.5 Sonnet is a versatile LLM for coding, code migration, bug fixing, refactoring, and translation. It supports agentic workflows and offers deep code understanding and strong problem-solving skills. | HumanEval+: –<br>ChatBot Arena: 1,327<br>Aider: 51.6% | 43.07 ±7.03 | 4.6% | 200K tokens |
| **Claude 3.5 Haiku**<br>This fast, cost-effective LLM excels at real-time coding, chatbot development, data extraction, and content moderation. | HumanEval+: –<br>ChatBot Arena: 1,263<br>Aider: 28.0% | 42.90 ±6.83 | 4.9% | 200K tokens |

We work hard to connect you to the best available LLMs as soon as they’re released. The world of LLMs is vast and evolving rapidly, and no single model excels in every aspect. Based on our benchmarks, here are the leaders in various key categories:

- Hallucination rate: Gemini 2.0 Flash

- Speed: GPT-4o mini, Gemini 1.5 Flash, and Gemini 2.0 Flash

- General intelligence (non-reasoning models): GPT-4o, Claude 3.5 Sonnet, Claude 3.5 Haiku, and Gemini 1.5 Pro

- General intelligence with reasoning: Claude 3.7 Sonnet, o1, o1-mini, and o3-mini
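These leaders reflect one way of weighing the numbers. If your priorities differ, a small script over the comparison table can help you shortlist models against your own constraints. The sketch below is a hypothetical helper, not part of AI Assistant: it encodes a few rows from the table, filters by minimum context window and maximum hallucination rate, and ranks the remaining models by Aider polyglot score. Ranking by Aider is an arbitrary choice for illustration, and benchmark figures change as models are updated.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Model:
    name: str
    aider: Optional[float]          # Aider polyglot score, % (None if not reported)
    tps: float                      # mean speed, tokens per second
    hallucination: Optional[float]  # hallucination rate, % (None if not reported)
    context_k: int                  # context window, thousands of tokens


# A few rows taken from the comparison table.
MODELS = [
    Model("GPT-4o", 27.1, 53.20, 1.5, 128),
    Model("o1", 61.7, 134.96, 2.4, 100),
    Model("o3-mini", 60.4, 155.01, 0.8, 100),
    Model("Gemini 2.0 Flash", 22.2, 103.89, 0.7, 1000),
    Model("Claude 3.7 Sonnet", 64.9, 46.43, None, 200),
    Model("Claude 3.5 Sonnet", 51.6, 43.07, 4.6, 200),
]


def shortlist(models: list[Model], min_context_k: int = 0,
              max_hallucination: Optional[float] = None) -> list[Model]:
    """Keep models that meet the constraints, best Aider score first."""
    picked = []
    for m in models:
        if m.context_k < min_context_k:
            continue
        if max_hallucination is not None:
            # Skip models whose rate is unknown or above the limit.
            if m.hallucination is None or m.hallucination > max_hallucination:
                continue
        picked.append(m)
    return sorted(picked, key=lambda m: m.aider if m.aider is not None else -1.0,
                  reverse=True)


print([m.name for m in shortlist(MODELS, min_context_k=128)])
# ['Claude 3.7 Sonnet', 'Claude 3.5 Sonnet', 'GPT-4o', 'Gemini 2.0 Flash']
print([m.name for m in shortlist(MODELS, max_hallucination=1.0)])
# ['o3-mini', 'Gemini 2.0 Flash']
```

Models with an unreported metric are excluded when you filter on that metric, which is the conservative choice when you care about a hard limit.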

Local models

If you need AI Assistant to work offline, or you would rather not share your code with LLM API providers, you have that option too! AI Assistant supports local models provided through Ollama and LM Studio. Currently, the most powerful options are Qwen2.5-Coder and DeepSeek-R1, but you can use any model from the Ollama library that is small enough to run on your hardware.
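AI Assistant handles the connection to your local provider for you, but it can be useful to confirm that a local model responds before selecting it in the IDE. The sketch below calls Ollama’s local REST API (`/api/generate` on Ollama’s default port, 11434) using only the Python standard library. It assumes Ollama is installed and running and that the `qwen2.5-coder` model has already been pulled (for example with `ollama pull qwen2.5-coder`). It illustrates Ollama’s API, not AI Assistant’s internal integration, and the helper names are hypothetical.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes a standard installation).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text.

    Requires the Ollama server to be running locally; nothing leaves your machine.
    """
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]  # with stream=False, the full reply is in "response"
```

Calling `ask_local_model("qwen2.5-coder", "Write a Python function that reverses a string.")` returns the model’s reply as a string. If the call fails with a connection error, the Ollama server is probably not running.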

Summary

With JetBrains AI Assistant, you’re in control. Because you have access to multiple LLMs, the right model for your context is always available, right inside your JetBrains IDE.

There’s no one-size-fits-all model, but with JetBrains AI Assistant, you always have the best one for the job at your fingertips.
