Caching Servers: Speeding Up Performance Like a Pro

Caching is the art of storing frequently accessed data in a temporary storage layer to reduce latency, minimize network traffic, and improve response times.

Whether it's web pages, database queries, or API responses, caching ensures that repeated requests are served faster by avoiding redundant computations or network trips.

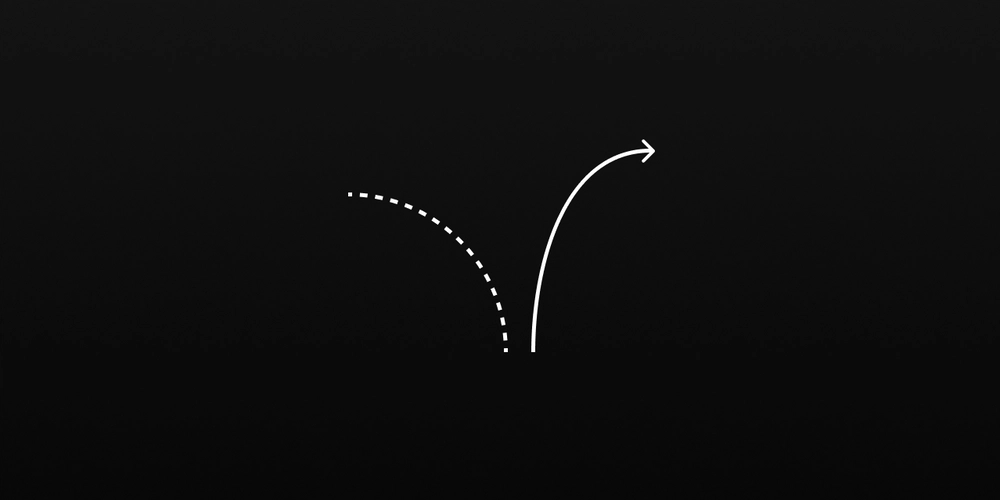

Think of caching like a well-stocked fridge.

If you frequently grab a snack, it's much quicker to get it from your kitchen than to go to the grocery store each time.

Similarly, caching servers store copies of requested data close to the user, reducing the time it takes to fetch it from the origin.

What is a Caching Server?

A caching server (also called a cache server or, when it sits between clients and the origin, a proxy cache) is a dedicated system that stores frequently accessed data and delivers it to users, reducing the load on backend systems.

By intercepting requests, it can answer repeat requests from its cache without contacting the original source, which cuts response times and backend load.

Why Use a Caching Server?

- Reduced Latency – Cached responses are served directly from the cache, skipping backend processing and the round trip to the origin.

- Lower Bandwidth Consumption – Cached content reduces the need to fetch data from remote sources repeatedly.

- Scalability – Reduces the load on application servers, allowing them to handle more concurrent users.

- Better User Experience – Faster response times translate to smoother interactions for users.

- Fault Tolerance – If the origin server is temporarily down, a cache configured to serve stale content can keep answering requests with the copies it already holds.
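
The fault-tolerance point depends on the cache being allowed to fall back to expired entries. The Python sketch below is a minimal illustration of that idea, assuming a hypothetical `StaleOnErrorCache` class and a hypothetical `fetch_from_origin` function that raises `ConnectionError` during an outage; the clock is injectable so expiry can be shown without waiting.

```python
import time


class StaleOnErrorCache:
    """Toy cache that falls back to an expired copy when the origin fails."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch            # callable(key) -> value; raises ConnectionError on outage
        self._ttl = ttl_seconds
        self._clock = clock            # injectable so the example can fake time
        self._store = {}               # key -> (value, expires_at)

    def get(self, key):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[1]:
            return entry[0]                        # fresh hit
        try:
            value = self._fetch(key)               # miss or expired: ask the origin
        except ConnectionError:
            if entry is not None:
                return entry[0]                    # origin down: serve the stale copy
            raise                                  # nothing cached to fall back on
        self._store[key] = (value, now + self._ttl)
        return value


fake_now = [0.0]
origin_up = [True]


def fetch_from_origin(key):
    """Hypothetical origin that fails while origin_up[0] is False."""
    if not origin_up[0]:
        raise ConnectionError("origin unavailable")
    return f"page for {key}"


cache = StaleOnErrorCache(fetch_from_origin, ttl_seconds=60, clock=lambda: fake_now[0])
print(cache.get("/home"))   # page for /home  (fetched from the origin)
fake_now[0] = 120.0         # the cached entry has expired...
origin_up[0] = False        # ...and the origin goes down
print(cache.get("/home"))   # page for /home  (stale copy served)
```

A key that was never cached still fails during the outage, which is the practical limit of this kind of fault tolerance.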

Types of Caching Servers

Caching servers come in different flavors, each serving a distinct purpose depending on the type of content being cached.

1. Web Caching

A web caching server stores copies of web pages, images, CSS, and JavaScript files, reducing load times and bandwidth consumption.

Browsers, ISPs, and enterprise networks often use web caches to serve repeated requests more efficiently.

- Example: Squid Proxy acts as a caching proxy for web traffic.

- Use Case: If multiple users visit the same website, the cache server can serve the page without making redundant requests to the website’s origin.
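
A shared web cache such as a proxy decides whether it may reuse a stored page mostly from the response's `Cache-Control` header. The Python sketch below covers only a simplified subset of the HTTP caching rules (RFC 9111), assuming hypothetical helper names; it ignores the `Expires` and `Age` headers, heuristic freshness, and quoted directive values that contain commas.

```python
def parse_cache_control(header):
    """Split a Cache-Control header into {directive: value-or-None} (simplified)."""
    directives = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives


def is_storable(cache_control, shared=True):
    """A shared cache must not store 'no-store' or 'private' responses."""
    d = parse_cache_control(cache_control)
    return "no-store" not in d and not (shared and "private" in d)


def is_fresh(cache_control, age_seconds, shared=True):
    """True if a stored response may be reused without contacting the origin."""
    d = parse_cache_control(cache_control)
    if "no-cache" in d:
        return False                      # must revalidate with the origin first
    # Shared caches prefer s-maxage over max-age when both are present.
    lifetime = d.get("s-maxage") if shared and "s-maxage" in d else d.get("max-age")
    try:
        return age_seconds < int(lifetime)
    except (TypeError, ValueError):
        return False                      # no usable lifetime: treat as stale


print(is_fresh("public, max-age=300", age_seconds=120))        # True
print(is_fresh("public, max-age=300", age_seconds=400))        # False
print(is_fresh("max-age=600, s-maxage=60", age_seconds=120))   # False
print(is_storable("private, max-age=60"))                      # False
```

As long as a page stays fresh by these rules, every repeat visitor can be served from the cache with no request to the origin at all.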

2. Content Delivery Network (CDN) Caching

CDNs distribute cached content across globally dispersed servers so that users are served from a nearby edge node instead of a distant origin.

- Example: Cloudflare, AWS CloudFront, Akamai

- Use Case: A video streaming platform caches videos at edge servers, reducing buffering time for users in different locations.
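
The edge-caching idea can be illustrated with a toy Python model: each edge keeps its own cache and only contacts the origin on a miss, and the viewer is sent to the edge with the lowest round-trip time. The class, function names, and RTT figures are hypothetical. Real CDNs usually steer users through DNS or anycast routing, which this sketch does not model.

```python
class EdgeNode:
    """Hypothetical CDN edge with its own local cache."""

    def __init__(self, name, origin):
        self.name = name
        self._origin = origin          # callable(path) -> content
        self._cache = {}
        self.origin_fetches = 0

    def serve(self, path):
        if path not in self._cache:
            self._cache[path] = self._origin(path)   # miss: pull from the origin once
            self.origin_fetches += 1
        return self._cache[path]


def nearest_edge(edges, rtt_ms):
    """Pick the edge with the lowest measured round-trip time in milliseconds."""
    return min(edges, key=lambda edge: rtt_ms[edge.name])


def origin(path):
    return f"video chunk {path}"


edges = [EdgeNode("tokyo", origin), EdgeNode("frankfurt", origin), EdgeNode("virginia", origin)]
rtts_for_viewer_in_europe = {"tokyo": 230, "frankfurt": 12, "virginia": 90}  # made-up values

edge = nearest_edge(edges, rtts_for_viewer_in_europe)
for _ in range(3):                       # three viewers request the same segment
    edge.serve("/movie/seg-001.ts")
print(edge.name, edge.origin_fetches)    # frankfurt 1
```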

3. Database Caching

Storing the results of frequently executed queries in an in-memory store reduces load on the database and speeds up repeated reads.

- Example: Redis, Memcached

- Use Case: An e-commerce website caches product listings to reduce load on the primary database.

4. DNS Caching

DNS cache servers store the results of domain name lookups, each for as long as its record's TTL allows, to speed up subsequent requests for the same domain.

- Example: BIND, Unbound

- Use Case: Instead of repeatedly querying DNS servers for an IP address, cached records serve future requests instantly.
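
A caching resolver keeps each answer only for the TTL that came with the record. The Python sketch below shows just that behavior; `DnsCache` and `fake_upstream` are hypothetical, and real resolvers such as BIND or Unbound also handle negative caching, multiple record types, and much more. The address 192.0.2.10 comes from a range reserved for documentation.

```python
import time


class DnsCache:
    """Toy resolver cache: remembers answers until each record's TTL runs out."""

    def __init__(self, upstream, clock=time.monotonic):
        self._upstream = upstream      # callable(name) -> (ip, ttl_seconds)
        self._clock = clock
        self._records = {}             # name -> (ip, expires_at)
        self.upstream_queries = 0

    def resolve(self, name):
        name = name.lower().rstrip(".")          # DNS names are case-insensitive
        now = self._clock()
        cached = self._records.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]                     # answered from the cache
        ip, ttl = self._upstream(name)           # expired or unknown: ask upstream
        self.upstream_queries += 1
        self._records[name] = (ip, now + ttl)
        return ip


now = [0.0]


def fake_upstream(name):
    return ("192.0.2.10", 300)        # documentation address, 5-minute TTL


dns = DnsCache(fake_upstream, clock=lambda: now[0])
dns.resolve("Example.com")
dns.resolve("example.com.")           # same name, served from the cache
now[0] = 301.0
dns.resolve("example.com")            # TTL expired, asks upstream again
print(dns.upstream_queries)           # 2
```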

5. Application-Level Caching

Caching at the application layer optimizes API responses, page rendering, and computational results.

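- Example: Varnish Cache (an HTTP reverse-proxy cache placed in front of the application), Fastly (an edge platform built on Varnish)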

- Use Case: A news website caches popular articles to reduce backend load during peak traffic hours.

How Caching Servers Work

A caching server follows a simple mechanism:

- A user requests data (e.g., a webpage, an image, or a database query result).

- The caching server checks whether the requested data is already in its cache.

- If it is (a cache hit), the cached copy is served immediately.

- If it is not (a cache miss), the request is forwarded to the origin server, and the response is stored in the cache for future use.

- Cached content remains available until its Time-To-Live (TTL) expires, it is evicted to free space, or the cache is manually cleared.
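
The request flow above can be sketched as a small read-through cache in Python. This is a minimal illustration, assuming an in-memory dict as the store and a hypothetical `origin` callable standing in for the backend; `ReadThroughCache` is an illustrative name, and the clock is injectable so expiry can be shown without sleeping.

```python
import time


class ReadThroughCache:
    """Serves from the cache on a hit; fetches from the origin and stores on a miss."""

    def __init__(self, origin, ttl_seconds, clock=time.monotonic):
        self._origin = origin          # callable(key) -> value
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}             # key -> (value, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[1]:
            self.hits += 1             # cache hit: serve the stored copy
            return entry[0]
        self.misses += 1               # cache miss (or expired): forward to origin
        value = self._origin(key)
        self._entries[key] = (value, now + self._ttl)
        return value

    def clear(self):
        """Manual clear: every key misses on its next request."""
        self._entries.clear()


clock = [0.0]
cache = ReadThroughCache(lambda key: f"origin data for {key}", ttl_seconds=30,
                         clock=lambda: clock[0])
cache.get("/logo.png")     # miss: forwarded to the origin, then stored
cache.get("/logo.png")     # hit
clock[0] = 31.0
cache.get("/logo.png")     # TTL expired: miss again
cache.clear()
cache.get("/logo.png")     # manually cleared: miss
print(cache.hits, cache.misses)   # 1 3
```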

Cache Expiration and Invalidation Strategies

Cached data cannot live forever; it needs to be refreshed periodically.

Common expiration, eviction, and validation strategies include:

- Time-Based Expiry (TTL) – Data is cached for a fixed duration before being invalidated.

- Least Recently Used (LRU) Eviction – When the cache is full, the entries that have gone longest without being accessed are removed first.

- Cache Purging – Manual removal of stale data, often triggered by updates in the backend.

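- ETag & Last-Modified Headers – Used in HTTP caching to revalidate a stored copy: the cache sends a conditional request (If-None-Match or If-Modified-Since), and if the content is unchanged the origin replies 304 Not Modified, so the cached version can be served without downloading it again.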
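
LRU eviction and manual purging can be illustrated together with Python's `collections.OrderedDict`, which keeps keys in access order when entries are moved to the end on each read. `LRUCache` and its method names are hypothetical; this is a sketch of the policy, not the implementation used by any particular cache server.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key first."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items = OrderedDict()    # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)   # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)   # evict the least recently used entry

    def purge(self, key):
        """Manual invalidation, e.g. after the backend updates this record."""
        self._items.pop(key, None)

    def keys(self):
        return list(self._items)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")          # "a" becomes the most recently used key
cache.put("c", 3)       # cache is full, so "b" is evicted
print(cache.keys())     # ['a', 'c']
cache.purge("a")
print(cache.keys())     # ['c']
```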

Optimizing Cache Performance

To maximize the benefits of caching servers, consider these best practices:

- Define Appropriate Cache Policies – Set TTL values based on data volatility.

- Use Cache Invalidation Mechanisms – Avoid serving stale data by purging or updating cached entries when the underlying data changes.

- Leverage Distributed Caching – Spread the cache across multiple nodes to add capacity and avoid a single point of failure.

- Monitor Cache Metrics – Track cache hit/miss ratios to identify performance bottlenecks.

- Compress Cached Content – Reduce storage requirements and speed up content delivery.
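
Two of these practices, tracking the hit ratio and compressing stored values, can be shown in one small Python sketch. `InstrumentedCache` is a hypothetical class that stores values as zlib-compressed JSON and counts hits and misses; a production cache would export these counters to a monitoring system rather than keep them in memory.

```python
import json
import zlib


class InstrumentedCache:
    """Stores zlib-compressed JSON values and counts hits and misses."""

    def __init__(self):
        self._data = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        blob = self._data.get(key)
        if blob is None:
            self.misses += 1
            return None
        self.hits += 1
        return json.loads(zlib.decompress(blob))

    def set(self, key, value):
        self._data[key] = zlib.compress(json.dumps(value).encode("utf-8"))

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    def stored_bytes(self):
        return sum(len(blob) for blob in self._data.values())


cache = InstrumentedCache()
page = {"title": "Top stories", "body": "lorem ipsum " * 200}
for _ in range(10):
    if cache.get("home") is None:     # only the first request misses
        cache.set("home", page)

print(cache.hit_ratio())                          # 0.9
raw_size = len(json.dumps(page).encode("utf-8"))
print(cache.stored_bytes() < raw_size)            # True
```

A falling hit ratio usually points to TTLs that are too short, a cache that is too small, or keys that vary more than they need to.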

Popular Caching Server Implementations

Here are some widely used caching solutions for different needs:

- Web & Reverse Proxy Caching: Nginx, Varnish, Squid, Apache Traffic Server

- Database Caching: Redis, Memcached

- CDN Services: Cloudflare, AWS CloudFront, Akamai, Fastly

Conclusion

Caching servers are an indispensable part of modern web infrastructure, enabling fast, scalable, and cost-efficient data delivery.

Whether you're optimizing database queries, speeding up web pages, or reducing latency via a CDN, implementing caching effectively can supercharge your application's performance and user experience.

If you're not caching yet, you're missing out on some serious speed boosts!

![Apple's M5 iPad Pro Enters Advanced Testing for 2025 Launch [Gurman]](https://www.iclarified.com/images/news/96865/96865/96865-640.jpg)

![M5 MacBook Pro Set for Late 2025, Major Redesign Waits Until 2026 [Gurman]](https://www.iclarified.com/images/news/96868/96868/96868-640.jpg)

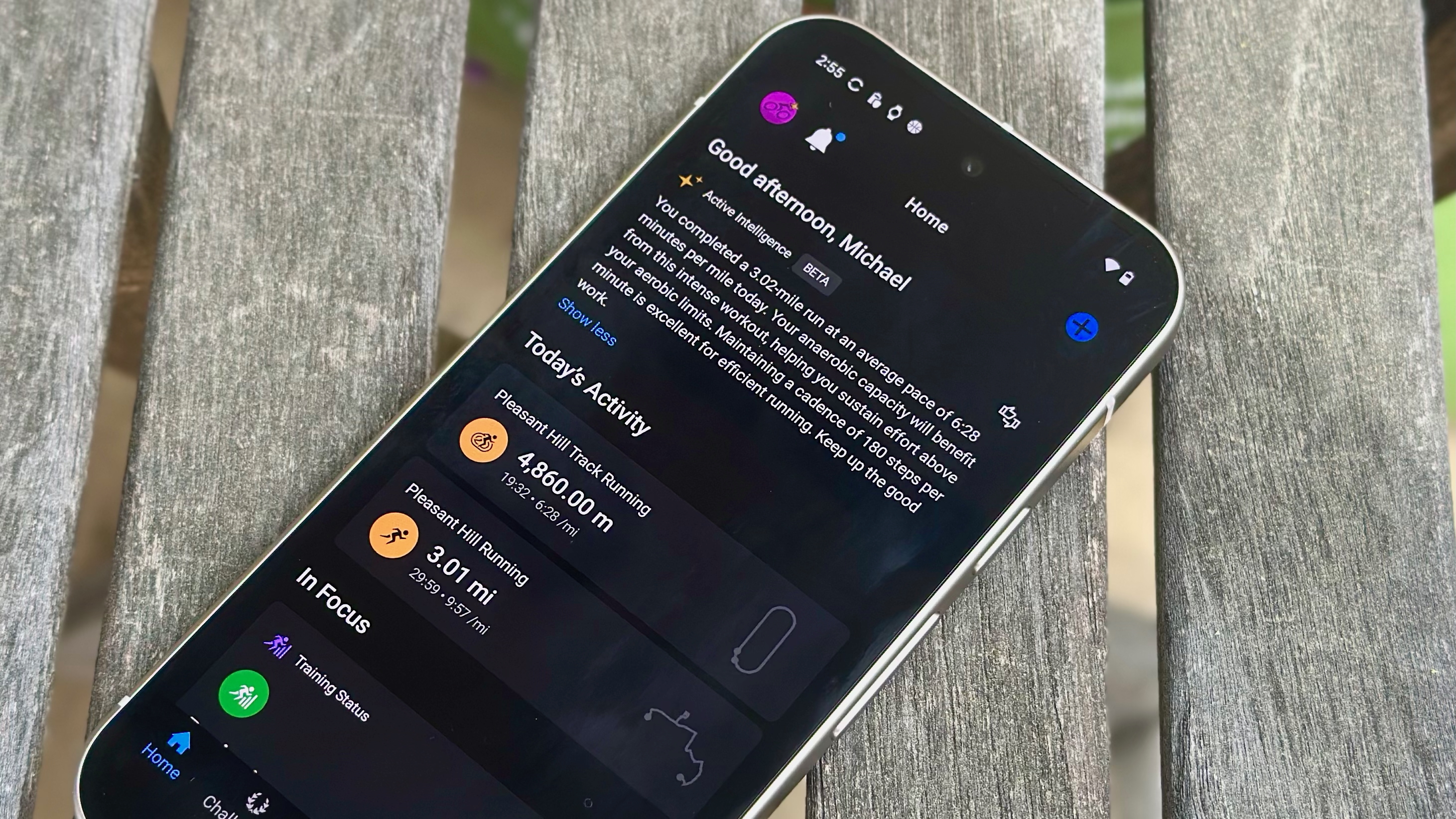

![Apple to Revamp Health App with AI-Powered Doctor [Gurman]](https://www.iclarified.com/images/news/96870/96870/96870-640.jpg)

![What Google Messages features are rolling out [March 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2023/12/google-messages-name-cover.png?resize=1200%2C628&quality=82&strip=all&ssl=1)

![[The AI Show Episode 141]: Road to AGI (and Beyond) #1 — The AI Timeline is Accelerating](https://www.marketingaiinstitute.com/hubfs/ep%20141.1.png)

![[The AI Show Episode 140]: New AGI Warnings, OpenAI Suggests Government Policy, Sam Altman Teases Creative Writing Model, Claude Web Search & Apple’s AI Woes](https://www.marketingaiinstitute.com/hubfs/ep%20140%20cover.png)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![From broke musician to working dev. How college drop-out Ryan Furrer taught himself to code [Podcast #166]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743189826063/2080cde4-6fc0-46fb-b98d-b3d59841e8c4.png?#)

![[FREE EBOOKS] The Ultimate Linux Shell Scripting Guide, Artificial Intelligence for Cybersecurity & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

OSAMU-NAKAMURA.jpg?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)

.png?#)