Inside a romance scam compound—and how people get tricked into being there

Heading north in the dark, the only way Gavesh could try to track his progress through the Thai countryside was by watching the road signs zip by. The Jeep’s three occupants—Gavesh, a driver, and a young Chinese woman—had no languages in common, so they drove for hours in nervous silence as they wove their way out of Bangkok and toward Mae Sot, a city on Thailand’s western border with Myanmar.

When they reached the city, the driver pulled off the road toward a small hotel, where another car was waiting. “I had some suspicions—like, why are we changing vehicles?” Gavesh remembers. “But it happened so fast.”

They left the highway and drove on until, in total darkness, they parked at what looked like a private house. “We stopped the vehicle. There were people gathered. Maybe 10 of them. They took the luggage and they asked us to come,” Gavesh says. “One was going in front, there was another one behind, and everyone said: ‘Go, go, go.’”

Gavesh and the Chinese woman were marched through the pitch-black fields by flashlight to a riverside where a boat was moored. By then, it was far too late to back out.

Gavesh’s journey had started, seemingly innocently, with a job ad on Facebook promising work he desperately needed.

Instead, he found himself trafficked into a business commonly known as “pig butchering”—a form of fraud in which scammers form romantic or other close relationships with targets online and extract money from them. The Chinese crime syndicates behind the scams have netted billions of dollars, and they have used violence and coercion to force their workers, many of them people trafficked like Gavesh, to carry out the frauds from large compounds, several of which operate openly in the quasi-lawless borderlands of Myanmar.

We spoke to Gavesh and five other workers from inside the scam industry, as well as anti-trafficking experts and technology specialists. Their testimony reveals how global companies, including American social media and dating apps and international cryptocurrency and messaging platforms, have given the fraud business the means to become industrialized. By the same token, it is Big Tech that may hold the key to breaking up the scam syndicates—if only these companies can be persuaded or compelled to act.

We’re identifying Gavesh using a pseudonym to protect his identity. He is from a country in South Asia, one he asked us not to name. He hasn’t shared his story much, and he still hasn’t told his family. He worries about how they’d handle it.

Until the pandemic, he had held down a job in the tourism industry. But lockdowns had gutted the sector, and two years later he was working as a day laborer to support himself and his father and sister. “I was fed up with my life,” he says. “I was trying so hard to find a way to get out.”

When he saw the Facebook post in mid-2022, it seemed like a godsend. A company in Thailand was looking for English-speaking customer service and data entry specialists. The monthly salary was $1,500—far more than he could earn at home—with meals, travel costs, a visa, and accommodation included. “I knew if I got this job, my life would turn around. I would be able to give my family a good life,” Gavesh says.

What came next was life-changing, but not in the way Gavesh had hoped. The advert was a fraud—and a classic tactic syndicates use to force workers like Gavesh into an economy that operates as something like a dark mirror of the global outsourcing industry.

The true scale of this type of fraud is hard to estimate, but the United Nations reported in 2023 that hundreds of thousands of people had been trafficked to work as online scammers in Southeast Asia. One 2024 study, from the University of Texas, estimates that the criminal syndicates that run these businesses have stolen at least $75 billion since 2020.

These schemes have been going on for more than two decades, but they’ve started to capture global attention only recently, as the syndicates running them increasingly shift from Chinese targets toward the West. And even as investigators, international organizations, and journalists gradually pull back the curtain on the brutal conditions inside scamming compounds and document their vast scale, what is far less exposed is the pivotal role platforms owned by Big Tech play throughout the industry—from initially coercing individuals to become scammers to, finally, duping scam targets out of their life savings.

As losses mount, governments and law enforcement agencies have looked for ways to disrupt the syndicates, which have become adept at using ungoverned spaces in lawless borderlands and partnering with corrupt regimes. But on the whole, the syndicates have managed to stay a step ahead of law enforcement—in part by relying on services from the world’s tech giants. Apple iPhones are their preferred scamming tools. Meta-owned Facebook and WhatsApp are used to recruit people into forced labor, as is Telegram. Social media and messaging platforms, including Facebook, Instagram, WhatsApp, WeChat, and X, provide spaces for scammers to find and lure targets. So do dating apps, including Tinder. Some of the scam compounds have their own Starlink terminals. And cryptocurrencies like tether and global crypto platforms like Binance have allowed the criminal operations to move money with little or no oversight.

“Private-sector corporations are, unfortunately, inadvertently enabling this criminal industry,” says Andrew Wasuwongse, the Thailand country director at the anti-trafficking nonprofit International Justice Mission (IJM). “The private sector holds significant tools and responsibility to disrupt and prevent its further growth.”

Yet while the tech sector has, slowly, begun to roll out anti-scam tools and policies, experts in human trafficking, platform integrity, and cybercrime tell us that these measures largely focus on the downstream problem: the losses suffered by the victims of the scams. That approach overlooks the other set of victims, often from lower-income countries, at the far end of a fraud “supply chain” that is built on human misery—and on Big Tech. Meanwhile, the scams continue on a mass scale.

Tech companies could certainly be doing more to crack down, the experts say. Even relatively small interventions, they argue, could start to erode the business model of the scam syndicates; with enough of these, the whole business could start to founder.

“The trick is: How do you make it unprofitable?” says Eric Davis, a platform integrity expert and senior vice president of special projects at the Institute for Security and Technology (IST), a think tank in California. “How do you create enough friction?”

That question is only becoming more urgent as many tech companies pull back on efforts to moderate their platforms, artificial intelligence supercharges scam operations, and the Trump administration signals broad support for deregulation of the tech sector while withdrawing support from organizations that study the scams and support the victims. All these trends may further embolden the syndicates. And even as the human costs keep building, global governments exert ineffectual pressure—if any at all—on the tech sector to turn its vast financial and technical resources against a criminal economy that has thrived in the spaces Silicon Valley built.

Capturing a vulnerable workforce

The roots of “pig butchering” scams reach back to the offshore gambling industry that emerged from China in the early 2000s. Online casinos had become hugely popular in China, but the government cracked down, forcing the operators to relocate to Cambodia, the Philippines, Laos, and Myanmar. There, they could continue to target Chinese gamblers with relative impunity. Over time, the casinos began to use social media to entice people back home, deploying scam-like tactics that frequently centered on attractive and even nude dealers.

“Often the romance scam was a part of that—building romantic relationships with people that you eventually would aim to hook,” says Jason Tower, Myanmar country director at the United States Institute of Peace (USIP), a research and diplomacy organization funded by the US government, who researches the cyber scam industry. (USIP’s leadership was recently targeted by the Trump administration and Elon Musk’s Department of Government Efficiency task force, leaving the organization’s future uncertain; its website, which previously housed its research, is also currently offline.)

By the late 2010s, many of the casinos were big, professional operations. Gradually, says Tower, the business model turned more sinister, with a tactic called sha zhu pan in Chinese emerging as a core strategy. Scamming operatives work to “fatten up” or cultivate a target by building a relationship before going in for the “slaughter”—persuading them to invest in a supposedly once-in-a-lifetime scheme and then absconding with the money. “That actually ended up being much, much more lucrative than online gambling,” Tower says. (The international law enforcement organization Interpol no longer uses the graphic term “pig butchering,” citing concerns that it dehumanizes and stigmatizes victims.)

Like other online industries, the romance scamming business was supercharged by the pandemic. There were simply more isolated people to defraud, and more people out of work who might be persuaded to try scamming others—or who were vulnerable to being trafficked into the industry.

Initially, most of the workers carrying out the frauds were Chinese, as were the fraud victims. But after the government in Beijing tightened travel restrictions, making it hard to recruit Chinese laborers, the syndicates went global. They started targeting more Western markets and turning, Tower says, to “much more malign types of approaches to tricking people into scam centers.”

Getting recruited

Gavesh was scrolling through Facebook when he saw the ad. He sent his résumé to a Telegram contact number. A human resources representative replied and had him demonstrate his English and typing skills over video. It all felt very professional. “I didn’t have any reason to suspect,” he says.

The doubts didn’t really start until after he reached Bangkok’s Suvarnabhumi Airport. After being met at arrivals by a man who spoke no English, he was left to wait. As time ticked by, it began to occur to Gavesh that he was alone, with no money, no return ticket, and no working SIM card. Finally, the Jeep arrived to pick him up.

Hours later, exhausted, he was on a boat crossing the Moei River from Thailand into Myanmar. On the far bank, a group was waiting. One man was in military uniform and carried a gun. “In my country, if we see an army guy when we are in trouble, we feel safe,” Gavesh says. “So my initial thoughts were: Okay, there’s nothing to be worried about.”

They hiked a kilometer across a sodden paddy field and emerged at the other side caked in mud. There a van was parked, and the driver took them to what he called, in broken English, “the office.” They arrived at the gate of a huge compound, surrounded by high walls topped with barbed wire.

While some people are drawn into online scamming directly by friends and relatives, Facebook is, according to IJM’s Wasuwongse, the most common entry point for people recruited on social media.

Meta has known for years that its platforms host this kind of content. Back in 2019, the BBC exposed “slave markets” that were running on Instagram; in 2021, the Wall Street Journal reported, drawing on documents leaked by a whistleblower, that Meta had long struggled to rein in the problem but took meaningful action only after Apple threatened to pull Instagram from its app store.

Today, years on, ads like the one that Gavesh responded to are still easy to find on Facebook if you know what to look for.

They are typically posted in job seekers’ groups and usually seem to be advertising legitimate jobs in areas like customer service. They offer attractive wages, especially for people with language skills—usually English or Chinese.

The traffickers tend to finish the recruitment process on encrypted or private messaging apps. In our research, many experts said that Telegram, which is notorious for hosting terrorist content, child sexual abuse material, and other communication related to criminal activity, was particularly problematic. Many spoke with a combination of anger and resignation about its apparent lack of interest in working with them to address the problem; Mina Chiang, founder of Humanity Research Consultancy, an anti-trafficking organization, accuses the app of being “very much complicit” in human trafficking and “proactively facilitating” these scams. (Telegram did not respond to a request for comment.)

But while Telegram users have the option of encrypting their messages end to end, making them almost impossible to monitor, social media companies are of course able to access users’ posts. And it’s here, at the beginning of the romance scam supply chain, where Big Tech could arguably make its most consequential intervention.

Social media is monitored by a combination of human moderators and AI systems, which help flag users and content—ads, posts, pages—that break the law or violate the companies’ own policies. Dangerous content is easiest to police when it follows predictable patterns or is posted by users acting in distinctive and suspicious ways.

Anti-trafficking experts say the scam advertising tends to follow formulaic templates and use common language, and that they routinely report the ads to Meta and point out the markers they have identified. Their hope is that this information will be fed into the data sets that train the content moderation models.

While individual ads may be taken down, even in big waves—last November, Meta said it had purged 2 million accounts connected to scamming syndicates over the previous year—experts say that Facebook still continues to be used in recruiting. And new ads keep appearing.

(In response to a request for comment, a Meta spokesperson shared links to policies about bans on content or advertisements that facilitate human trafficking, as well as company blog posts telling users how to protect themselves from romance scams and sharing details about the company’s efforts to disrupt fraud on its platforms, one stating that it is “constantly rolling out new product features to help protect people on [its] apps from known scam tactics at scale.” The spokesperson also said that WhatsApp has spam detection technology, and millions of accounts are banned per month.)

Anti-trafficking experts we spoke with say that as recently as last fall, Meta was engaging with them and had told them it was ramping up its capabilities. But Chiang says there still isn’t enough urgency from tech companies. “There’s a question about speed. They might be able to say ‘That’s the goal for the next two years.’ No. But that’s not fast enough. We need it now,” she says. “They have financial resources. You can hire the most talented coding engineers in the world. Why can’t you just find people who understand the issue properly?”

Part of the answer comes down to money, according to experts we spoke with. Scaling up content moderation and other processes that could cause users to be kicked off a platform requires not only technological staff but also legal and policy experts—which not everyone sees as worth the cost.

“The vast majority of these companies are doing the minimum or less,” says Tower of USIP. “If not properly incentivized, either through regulatory action or through exposure by media or other forms of pressure … often, these companies will underinvest in keeping their platforms safe.”

Getting set up

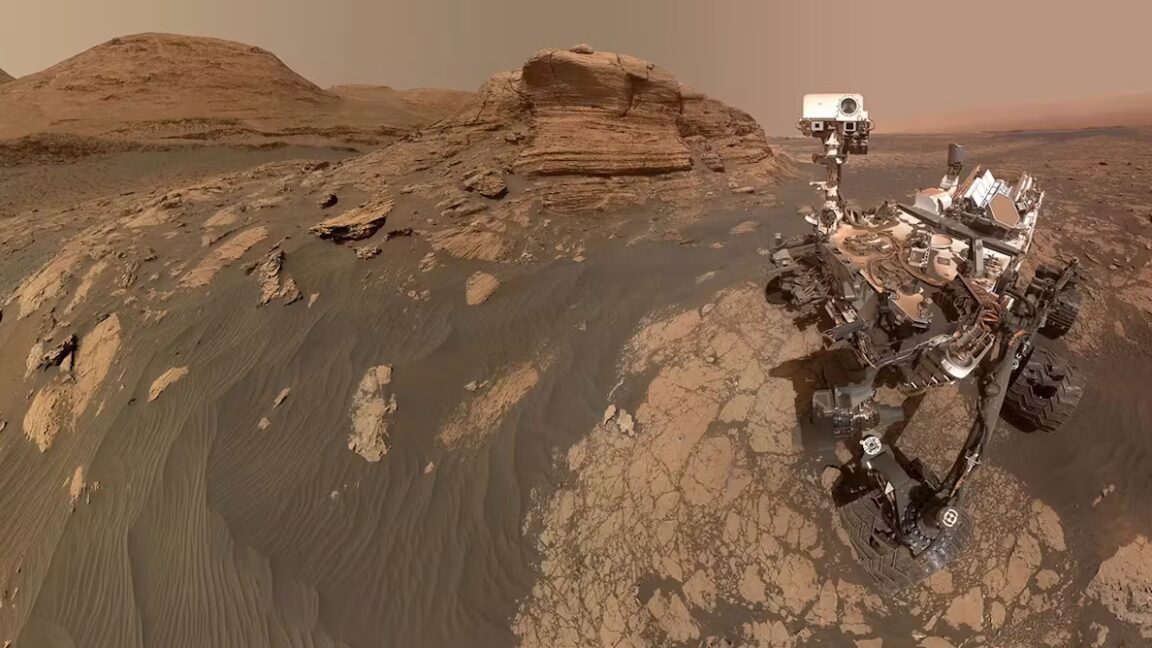

Gavesh’s new “office” turned out to be one of the most infamous scamming hubs in Southeast Asia: KK Park in Myanmar’s Myawaddy region. Satellite imagery shows it as a densely packed cluster of buildings, surrounded by fields. Most of it has been built since late 2019.

Inside, it runs like a hybrid of a company campus and a prison.

When Gavesh arrived, he handed over his phone and passport and was assigned to a dormitory and an employer. He was allowed his own phone back only for short periods, and his calls were monitored. Security was tight. He had to pass through airport-style metal detectors when he went in or out of the office. Black-uniformed personnel patrolled the buildings, while armed men in combat fatigues watched the perimeter fences from guard posts.

On his first full day, he was put in front of a computer with just four documents on it, which he had to read over and over—guides on how to approach strangers. On his second day, he learned to build fake profiles on social media and dating apps. The trick was to find real people on Instagram or Facebook who were physically attractive, posted often, and appeared to be wealthy and living “a luxurious life,” he says, and use their photos to build a new account: “There are so many Instagram models that pretend they have a lot of money.”

Next, he was given a batch of iPhone 8s—most people on his team used between eight and 10 devices each—loaded with local SIM cards and apps that spoofed their location so that they appeared to be in the US. Using male and female aliases, he set up dozens of accounts on Facebook, WhatsApp, Telegram, Instagram, and X and profiles on several dating platforms, though he can’t remember exactly which ones.

Different scamming operations teach different techniques for finding and reaching out to potential victims, several people who worked in the compounds tell us. Some people used direct approaches on dating apps, Facebook, Instagram, or—for those targeting Chinese victims—WeChat. One worker from Myanmar sent out mass messages on WhatsApp, pretending to have accidentally messaged a wrong number, in the hope of striking up a conversation. (Tencent, which owns WeChat, declined to comment.)

Some scamming workers we spoke to were told to target white, middle-aged or older men in Western countries who seemed to be well off. Gavesh says he would pretend to be white men and women, using information found on Google to add verisimilitude to his claims of living in, say, Miami Beach. He would chat with the targets, trying to figure out from their jobs, spending habits, and ambitions whether they’d be worth investing time in.

One South African woman, trafficked to Myanmar in 2022, says she was given a script and told to pose as an Asian woman living in Chicago. She was instructed to study her assigned city and learn quotidian details about life there. “They kept on punishing people all the time for not knowing or for forgetting that they’re staying in Chicago,” she says, “or for forgetting what’s Starbucks or what’s [a] latte.”

Fake users have, of course, been a problem on social media platforms and dating sites for years. Some platforms, such as X, allow practically anyone to create accounts and even to have them verified for a fee. Others, including Facebook, have periodically conducted sweeps to get rid of fake accounts engaged in what Meta calls “coordinated inauthentic behavior.” (X did not respond to requests for comment.)

But scam workers tell us they were advised on simple ways to circumvent detection mechanisms on social media. They were given basic training in how to avoid suspicious behavior such as adding too many contacts too quickly, which might trigger the company to review whether someone’s profile is authentic. The South African woman says she was shown how to manipulate the dates on a Facebook account “to seem as if you opened the account in 2019 or whatever,” making it easier to add friends. (Meta’s spam filters—meant to reduce the spread of unwanted content—include limits on friend requests and bulk messaging.)

Dating apps, whose users generally hope to meet other users in real life, have a particular need to make sure that people are who they say they are. But Match Group, the parent company of Tinder, ended its partnership with a company doing background checks in 2023. It now encourages users to verify their profile with a selfie and further ID checks, though insiders say these systems are often rudimentary. “They just check a box and [do] what is legally required or what will make the media get off of [their] case,” says one tech executive who has worked with multiple dating apps on safety systems, speaking on the condition of anonymity because they were not permitted to speak about their work with certain companies.

Fangzhou Wang, an assistant professor at the University of Texas at Arlington who studies romance scams, ran a test: She set up a Tinder profile with a picture of a dog and a bio that read, “I am a dog.” It passed through the platform’s verification system without a hitch. “They are not providing enough security measures to filter out fraudulent profiles,” Wang says. “Everybody can create anything.”

Like recruitment ads, the scam profiles tend to follow patterns that should raise red flags. They use photos copied from existing users or made by artificial intelligence, and the accounts are sometimes set up using phone numbers generated by voice-over-internet-protocol services. Then there’s the scammers’ behavior: They swipe too fast, or spend too much time logged in. “A normal human doesn’t spend … eight hours on a dating app a day,” the tech executive says.

What’s more, scammers use the same language over and over again as they reach out to potential targets. “The majority of them are using predesigned scripts,” says Wang.

It would be fairly easy for platforms to detect these signs and either stop accounts from being created or make the users go through further checks, experts tell us. Signals of some of these behaviors “can potentially be embedded into a type of machine-learning algorithm,” Wang says. She approached Tinder a few years ago with her research into the language that scammers use on the platforms, and offered to help build data sets for its moderation models. She says the company didn’t reply.
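
To make the experts' point concrete, here is a minimal sketch of how the red flags described above could be combined into a simple rule-based check. It is an illustration only, not any platform's actual system: the `AccountActivity` fields, the function names, and every threshold are hypothetical, and only the eight-hour figure echoes the tech executive's remark.

```python
from dataclasses import dataclass


@dataclass
class AccountActivity:
    """Hypothetical per-account signals, named after the red flags in the text."""
    uses_voip_number: bool          # sign-up number came from a VoIP service
    photo_matches_other_user: bool  # profile photo copied from an existing user
    swipes_per_minute: float        # average swipe rate while active
    hours_online_per_day: float     # average daily time logged in
    scripted_message_ratio: float   # share of messages matching known scripts (0 to 1)


def risk_flags(activity, max_swipes_per_minute=30.0,
               max_hours_online=8.0, max_scripted_ratio=0.5):
    """Return the names of the red flags this account trips.

    All thresholds are illustrative assumptions, not values from any platform.
    """
    flags = []
    if activity.uses_voip_number:
        flags.append("voip_number")
    if activity.photo_matches_other_user:
        flags.append("copied_photo")
    if activity.swipes_per_minute > max_swipes_per_minute:
        flags.append("swiping_too_fast")
    if activity.hours_online_per_day >= max_hours_online:
        flags.append("online_too_long")
    if activity.scripted_message_ratio > max_scripted_ratio:
        flags.append("scripted_messages")
    return flags


def needs_extra_verification(activity, min_flags=2):
    """Route an account to further checks once it trips several flags at once."""
    return len(risk_flags(activity)) >= min_flags
```

Requiring two or more flags before acting is one way to limit the friction for genuine users that platforms worry about; a real system would more likely feed such signals into a trained model, as Wang suggests.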

(In a statement, Yoel Roth, vice president of trust and safety at Match Group, said that the company invests in “proactive tools, advanced detection systems and user education to help prevent harm.” He wrote, “We use proprietary AI-powered tools to help identify scammer messaging, and unlike many platforms, we moderate messages, which allows us to detect suspicious patterns early and act quickly,” adding that the company has recently worked with Reality Defender, a provider of deepfake detection tools, to strengthen its ability to detect AI-generated content. A company spokesperson reported having no record of Wang’s outreach but said that the company “welcome[s] collaboration and [is] always open to reviewing research that can help strengthen user safety.”)

A recent investigation published in The Markup found that Match Group has long possessed the tools and resources to track sex offenders and other bad actors but has resisted efforts to roll out safety protocols for fear they might slow growth.

This tension, between the desire to keep increasing the number of users and the need to ensure that these users and their online activity are authentic, is often behind safety issues on platforms. While no platform wants to be a haven for fraudsters, identity verification creates friction for users, which stops real people as well as impostors from signing up. And again, cracking down on platform violations costs money.

According to Josh Kim, an economist who works in Big Tech, it would be costly for tech companies to build out the legal, policy, and operational teams for content moderation tools that could get users kicked off a platform—and the expense is one companies may find hard to justify in the current business climate. “The shift toward profitability means that you have to be very selective in … where you invest the resources that you have,” he says.

“My intuition here is that unless there are fines or pressure from governments or regulatory agencies or the public themselves,” he adds, “the current atmosphere in the tech ecosystem is to focus on building a product that is profitable and grows fast, and things that don’t contribute to those two points are probably being deprioritized.”

Getting online—and staying in line

At work, Gavesh wore a blue tag, marking him as belonging to the lowest rank of workers. “On top of us are the ones who are wearing the yellow tags—they call themselves HR or translators, or office guys,” he says. “Red tags are team leaders, managers … And then moving from that, they have black and ash tags. Those are the ones running the office.” Most of the latter were Chinese, Gavesh says, as were the really “big bosses,” who didn’t wear tags at all.

Within this hierarchy operated a system of incentives and punishments. Workers who followed orders and proved successful at scamming could rise through the ranks to training or supervisory positions, and gain access to perks like restaurants and nightclubs. Those who failed to meet the targets or broke the rules faced violence and humiliation.

Gavesh says he was once beaten because he broke an unwritten rule that it was forbidden to cross your legs at work. Yawning was banned, and bathroom breaks were limited to two minutes at a time.

Beatings were usually conducted in the open, though the most severe punishments at Gavesh’s company happened in a room called the “water jail.” One day a coworker was there alongside the others, “and the next day he was not,” Gavesh recalls. When the colleague was brought back to the office, he had been so badly beaten he couldn’t walk or speak. “They took him to the front, and they said: ‘If you do not listen to us, this is what will happen to you.’”

Gavesh was desperate to leave but felt there was no chance of escaping. The armed guards seemed ready to shoot, and there were rumors in the compound that some people who jumped the fence had been found drowned in the river.

This kind of physical and psychological abuse is routine across the industry. Gavesh and others we spoke to describe working 12 hours or more a day, without days off. They faced strict quotas for the number of scam targets they had to have on the hook. If they failed to reach them, they were punished. The UN has documented cases of torture, arbitrary detention, and sexual violence in the compounds. We heard accounts of people being made to perform calisthenics and thrashed on the backside in front of other workers.

Even if someone could escape, there is often no authority to appeal to on the outside. KK Park and other scam factories in Myanmar are situated in a geopolitical gray zone—borderlands where criminal enterprises have based themselves for decades, trading in narcotics and other unlawful industries. Armed groups, some of them operating under the command of the military, are credibly believed to profit directly from the trade in people and contraband in these areas, in some cases facing international sanctions as a result. Illicit industries in Myanmar have only expanded since a military coup in 2021. By August 2023, according to UN estimates, more than 120,000 people were being held in the country for the purposes of forced scamming, making it the largest hub for the frauds in Southeast Asia.

In at least some attempt to get a handle on this lawlessness, Thailand tried to cut off internet services for some compounds across its western border starting last May. Syndicates adapted by running fiber-optic cables across the river. When some of those were discovered, they were severed by Thai authorities. Thailand again ramped up its crackdowns on the industry earlier this year, with tactics that included cutting off internet, gas, and electricity to known scamming enclaves, following the trafficking of a Chinese celebrity through Thailand into Myanmar.

Still, the scammers keep adapting—again, using Western technology. “We’ve started to see and hear of Starlink systems being used by these compounds,” says Eric Heintz, a global analyst at IJM.

While the military junta has criminalized the use of unauthorized satellite internet service, intercepted shipments and raids on scamming centers over the past year indicate that syndicates smuggle in equipment. The crackdowns seem to have had a limited impact—a Wired investigation published in February found that scamming networks appeared to be “widely using” Starlink in Myanmar. The journalist, using mobile-phone connection data collected by an online advertising industry tool, identified eight known scam compounds on the Myanmar-Thailand border where hundreds of phones had used Starlink more than 40,000 times since November 2024. He also identified photos that appeared to show dozens of Starlink satellite dishes on a scamming compound rooftop.

Starlink could provide another prime opportunity for systematic efforts to interrupt the scams, particularly since it requires a subscription and is able to geofence its services. “I could give you coordinates of where some of these [scamming operations] are, like IP addresses that are connecting to them,” Heintz says. “That should make a huge paper trail.”

Starlink’s parent company, SpaceX, has previously limited access in areas of Ukraine under Russian occupation, after all. Its policies also state that SpaceX may terminate Starlink services to users who participate in “fraudulent” activities. (SpaceX did not respond to a request for comment.)

Knowing the locations of scam compounds could also allow Apple to step in: Workers rely on iPhones to make contact with victims, and these have to be associated with an Apple ID, even if the workers use apps to spoof their addresses.

As Heintz puts it, “[If] you have an iCloud account with five phones, and you know that those phones’ GPS antenna locates those phones inside a known scam compound, then all of those phones should be bricked. The account should be locked.”

(Apple did not provide a response to a request for comment.)

“This isn’t like the other trafficking cases that we’ve worked on, where we’re trying to find a boat in the middle of the ocean,” Heintz adds. “These are city-size compounds. We all know where they are, and we’ve watched them being built via satellite imagery. We should be able to do something location-based to take these accounts offline.”
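
The location-based rule Heintz describes can be sketched in a few lines. This is an assumption-laden illustration rather than a description of how Apple or anyone else handles location data: the zone coordinates below are made up, the tuple layouts and function names are hypothetical, and the five-device threshold simply mirrors his example. The sketch also only flags accounts for review instead of locking anything.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def accounts_to_review(device_fixes, zones, min_devices=5):
    """Return account IDs with at least `min_devices` distinct devices in a zone.

    device_fixes: iterable of (account_id, device_id, lat, lon) tuples.
    zones: iterable of (center_lat, center_lon, radius_km) tuples.
    Both layouts are hypothetical, chosen only for this sketch.
    """
    zones = list(zones)  # allow any iterable, since it is scanned once per fix
    devices_inside = {}
    for account_id, device_id, lat, lon in device_fixes:
        inside = any(haversine_km(lat, lon, z_lat, z_lon) <= radius
                     for z_lat, z_lon, radius in zones)
        if inside:
            devices_inside.setdefault(account_id, set()).add(device_id)
    return {account for account, devices in devices_inside.items()
            if len(devices) >= min_devices}


# Made-up zone: a 1 km circle around placeholder coordinates, not a real site.
EXAMPLE_ZONES = [(10.0, 20.0, 1.0)]
```

Counting distinct devices per account, rather than reacting to a single phone, reflects the pattern workers describe of one person operating eight to 10 handsets.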

Getting paid

Once Gavesh developed a relationship on social media or a dating site, he was supposed to move the conversation to WhatsApp. That platform is end-to-end encrypted, meaning even Meta can’t read the content of messages—although it should be possible for the company to spot a user’s unusual patterns of behavior, like opening large numbers of WhatsApp accounts or sending numerous messages in a short span of time.

“If you have an account that is suddenly adding people in large quantities all over the world, should you immediately flag it and freeze that account or require that that individual verify his or her information?” USIP’s Tower says.
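Flagging of this kind can work on metadata alone, without reading any message content. As a rough illustration, the Python sketch below checks whether an account's events (messages sent, contacts added) exceed a threshold within any sliding time window. The function name and thresholds are assumptions made for this example, not a description of WhatsApp's actual systems.

```python
from collections import deque


def exceeds_rate(timestamps, max_events, window_seconds):
    """Return True if more than `max_events` events fall within any
    span of `window_seconds`. Timestamps are seconds; order is irrelevant."""
    window = deque()
    for t in sorted(timestamps):
        window.append(t)
        # Drop events that are too old to share a window with the current one.
        while t - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_events:
            return True
    return False
```

For instance, `exceeds_rate(contact_added_times, max_events=50, window_seconds=3600)` would flag an account that added more than 50 contacts in any one-hour span. What to do with a flag (freeze the account, require verification) is the policy question Tower raises.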

After cultivating targets’ trust, scammers would inevitably shift the conversation to the subject of money. Having made themselves out to be living a life of luxury, they would offer a chance to share in the secrets of their wealth. Gavesh was taught to make the approach as if it were an extension of an existing intimacy. “I would not show this platform to anyone else,” he says he was supposed to say. “But since I feel like you are my life partner, I feel like you are my future.”

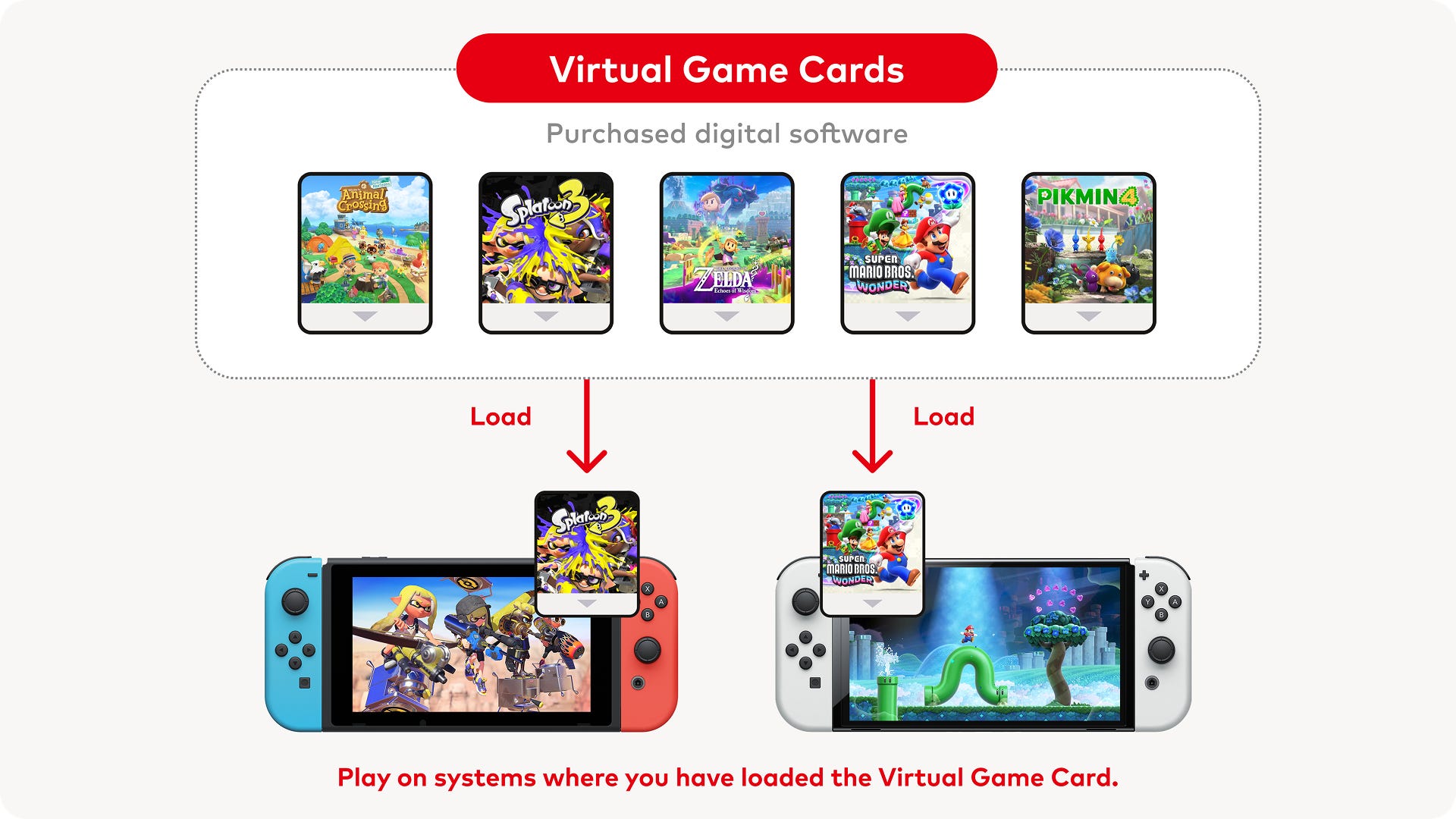

Lower-level workers like Gavesh were only expected to get scamming targets on the hook; then they’d pass off the relationship to a manager. From there, there is some variation in the approach, but the target is sometimes encouraged to set up an account with a mainstream crypto exchange and buy some tokens. Then the scammer sends the victim—or “customer,” as some workers say they called these targets—a link to a convincing, but fake, crypto investment platform.

After the target invests an initial amount of money, the scammer typically sends fake investment return charts that seem to show the value of that stake rising and rising. To demonstrate good faith, the scammer sends a few hundred dollars back to the victim’s crypto wallet, all the while working to convince the mark to keep investing. Then, once the customer is all in, the scammer goes in for the kill, using every means possible to take more money. “We [would] pull out bigger amounts from the customers and squeeze them out of their possessions,” one worker tells us.

The design of cryptocurrency allows some degree of anonymity, but with enough time, persistence, and luck, it’s possible to figure out where tokens are flowing. It’s also possible, though even more difficult, to discover who owns the crypto wallets.

In early 2024, University of Texas researchers John M. Griffin and Kevin Mei published a paper that followed money from crypto wallets associated with scammers. They tracked hundreds of thousands of transactions, collectively worth billions of dollars—money that was transferred in and out of mainstream exchanges, including Binance, Coinbase, and Crypto.com.

Some scam syndicates would move crypto off these big exchanges, launder it through anonymous platforms known as mixers (which can be used to obscure crypto transactions), and then come back to the exchanges to cash out into fiat currency such as dollars.

Griffin and Mei were able to identify deposit addresses on Binance and smaller platforms, including Hong Kong–based Huobi and Seychelles-based OKX, that were collectively receiving billions of dollars from suspected scams. These addresses were being used over and over again to send and receive money, “suggesting limited monitoring by crypto exchanges,” the authors wrote.
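The reuse pattern the authors describe is, in principle, straightforward to detect once a set of suspect wallets is known. The Python sketch below is not Griffin and Mei's method; it is a simplified illustration that assumes transfers are available as hypothetical `(sender, deposit_address, amount)` tuples and counts how often each deposit address receives funds from flagged senders.

```python
from collections import defaultdict


def reused_deposit_addresses(transfers, flagged_senders, min_transfers=2):
    """Map each deposit address to (count, total amount) of transfers it
    received from flagged senders, keeping only addresses hit at least
    `min_transfers` times."""
    counts = defaultdict(int)
    totals = defaultdict(float)
    for sender, deposit_address, amount in transfers:
        if sender in flagged_senders:
            counts[deposit_address] += 1
            totals[deposit_address] += amount
    return {
        addr: (counts[addr], totals[addr])
        for addr in counts
        if counts[addr] >= min_transfers
    }
```

The hard part in practice is the input, not the counting: deciding which wallets belong in `flagged_senders` is where the tracing work lies.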

(We were unable to reach OKX for comment; Coinbase and Huobi did not respond to requests for comment. A Binance spokesperson said that the company disputes the findings of the University of Texas study, alleging that they are “misleading at best and, at worst, wildly inaccurate.” The spokesperson also said that the company has extensive know-your-customer requirements, uses internal and third-party tools to spot illicit activity, freezes funds, and works with law enforcement to help reclaim stolen assets, claiming to have “proactively prevented $4.2 billion in potential losses for 2.8 million users from scams and frauds” and “recovered $88 million in stolen or misplaced funds” last year. A Crypto.com spokesperson said that the company is “committed to security, compliance and consumer protection” and that it uses “robust” transaction monitoring and fraud detection controls, “rigorously investigates accounts flagged for potential fraudulent activity or victimization,” and has internal blacklisting processes for wallet addresses known to be linked to scams.)

But while tracking illicit payments through the crypto ecosystem is possible, it’s “messy” and “complicated” to actually pin down who owns a scam wallet, according to Griffin Hotchkiss, a writer and use-case researcher at the Ethereum Foundation who has worked on crypto projects in Myanmar and who spoke in his personal capacity. Investigators have to build models that connect users to accounts by the flows of money going through them, which involves a degree of “guesswork” and “red string and sticky notes on the board trying to trace the flow of funds,” he says.

There are, however, certain actors within the crypto ecosystem who should have a good vantage point for observing how money moves through it. The most significant of these is Tether Holdings, a company formerly based in the British Virgin Islands (it has since relocated to El Salvador) that issues tether or USDT, a so-called stablecoin whose value is nominally pegged to the US dollar. Tether is widely used by crypto traders to park their money in dollar-denominated assets without having to convert cryptocurrencies into fiat currency. It is also widely used in criminal activity.

There is more than $140 billion worth of USDT in circulation; in 2023, TRM Labs, a firm that traces crypto fraud, estimated that $19.3 billion worth of tether transactions was associated with illicit activity. In January 2024, the UN’s Office on Drugs and Crime said that tether was a leading means of exchange for fraudsters and money launderers operating in Southeast Asia. In October, US federal investigators reportedly opened an investigation into Tether Holdings over possible sanctions violations and complicity in money laundering (though at the time, the company’s CEO said there was “no indication” it was under investigation).

Tech experts tell us that USDT is ever-present in the scam business, used to move money and as the main medium of exchange on anonymous marketplaces such as Cambodia-based Huione Guarantee, which has been accused of allowing romance scammers to launder the proceeds of their crimes. (Cambodia revoked the banking license of Huione Pay in March of this year. Huione, which did not respond to a request for comment, has previously denied engaging in criminal activity.)

While much of the crypto ecosystem is decentralized, USDT “does have a central authority” that could intervene, Hotchkiss says. Tether’s code has functions that allow the company to blacklist users, freeze accounts, and even destroy tokens, he adds. (Tether Holdings did not respond to requests for comment.)

In practice, Hotchkiss says, the company has frozen very few accounts—and, like other experts we spoke to, he thinks it’s unlikely to happen at scale. If it were to start acting like a regulator or a bank, the currency would lose a fundamental part of its appeal: its anonymity and independence from the mainstream of finance. The more you intervene, “the less trust people have in your coin,” he says. “The incentives are kind of misaligned.”

Getting out

Gavesh really wasn’t very good at scamming. The knowledge that the person on the other side of the conversation was working hard for money that he was trying to steal weighed heavily on him. “There was this one guy I was chatting with, [using] a girl’s profile,” he says. “He was trying to make a living. He was working in a cafe. He had a daughter who was living with [her] mother. That story was really touching. And, like, you don’t want to get these people [involved].”

The nature of the work left him racked with guilt. “I believe in karma,” he says. “What goes around comes around.”

Twice during Gavesh’s incarceration, he was sold on from one “employer” to another, but he still struggled with scamming. In February 2023, he was put up for sale a third time, along with some other workers.

“We went to the boss and begged him not to sell [us] and to please let us go home,” Gavesh says. The boss eventually agreed but told them it would cost them. As well as forgoing their salaries, they had to pay a ransom—Gavesh’s was set at 72,000 Thai baht, more than $2,000.

Gavesh managed to scrape the money together, and he and around a dozen others were driven to the river in a military vehicle. “We had to be very silent,” he says. They were told “not to make any sounds or anything—just to get on the boat.” They slipped back into Thailand the way they had come.

To avoid checkpoints on the way to Bangkok, the smugglers took paths through the jungle and changed vehicles around 10 times.

The group barely had enough money to survive a couple of days in the city, so they stuck together, staying in a cheap hotel while figuring out what to do next. With the help of a compatriot, Gavesh got in touch with IJM, which offered to help him navigate the legal bureaucracy ahead.

The traffickers hadn’t given him back his passport, and he was in Thailand without authorization. It was April before he was finally able to board a flight home, where he faced yet more questioning from police and immigration officials. He told his family he had “a small visa issue” and that he had lost his passport in Bangkok. He has never told them about his ordeal. “It would be very hard for them to process,” he says.

Recent history shows it’s very unlikely Gavesh will get any justice. That’s part of the reason why disrupting scams’ technology supply chain is so important: It’s incredibly challenging to hold the people operating the syndicates accountable. They straddle borders and jurisdictions. They have trafficked people from more than 60 countries, according to research from USIP, and scam targets come from all over the world. Much of the stolen money is moved through crypto wallets based in secrecy jurisdictions. “This thing is really like an onion. You’ve got layer after layer after layer of it, and it’s just really difficult to see where jurisdiction starts and where jurisdiction ends,” Tower says.

Chinese authorities are often more willing to cooperate with the military junta and armed groups in Myanmar that Western governments will not deal with, and they have cracked down where they can on operations involving their nationals. Thailand has also stepped up its efforts to address the human trafficking crisis and shut down scamming operations across its border in recent months. But when it comes to regulating tech platforms, the reaction from governments has been slower.

The few legislative efforts in the US, which are still in the earliest stages, focus on supporting law enforcement and financial institutions, not directly on ways to address the abuse of American tech platforms for scamming. And they probably won’t take that on anytime soon. Trump, who has been boosted and courted by several high-profile tech executives, has indicated that his administration opposes heavier online moderation. One executive order, signed in February, vows to impose tariffs on foreign governments if they introduce measures that could “inhibit the growth” of US companies—particularly those in tech—or compel them to moderate online content.

The Trump White House also supports reducing regulation in the crypto industry; it has halted major investigations into crypto companies and just this month removed sanctions on the crypto mixer Tornado Cash. In what was widely seen as a nod to libertarian-leaning crypto-enthusiasts, Trump pardoned Ross Ulbricht, the founder of the dark web marketplace Silk Road and one of the earlier adopters of crypto for large-scale criminal activity. The administration’s embrace of crypto could indeed have implications for the scamming industry, notes Kim, the economist: “It makes it much easier for crypto services to proliferate and have wider-spread adoption, and that might make it easier for criminal enterprises to tap into that and exploit that for their own means.”

What’s more, the new US administration has overseen the rollback of funding for myriad international aid programs, primarily programs run through the US Agency for International Development and including those working to help the people who’ve been trafficked into scam compounds. In late February, CNN reports, every one of the agency’s anti-trafficking projects was halted.

This all means it’s up to the tech companies themselves to act on their own initiative. And Big Tech has rarely acted without legislative threats or significant social or financial pressure. Companies won’t do anything if “it’s not mandatory, it’s not enforced by the government,” and most important, if companies don’t profit from it, says Wang, from the University of Texas. While a group of tech companies, including Meta, Match, and Coinbase, last year announced the formation of Tech Against Scams, a collaboration to share tips and best practices, experts tell us there are no concrete actions to point to yet.

And at a time when more resources are desperately needed to address the growing problems on their platforms, social media companies like X, Meta, and others have laid off hundreds of people from their trust and safety departments in recent years, reducing their capacity to tackle even the most pressing issues. Since the reelection of Trump, Meta has signaled an even greater rollback of its moderation and fact checking, a decision that earned praise from the president.

Still, companies may feel pressure given that a handful of entities and executives have in recent years been held legally responsible for criminal activity on their platforms. Changpeng Zhao, who founded Binance, the world’s largest cryptocurrency exchange, was sentenced to four months in jail last April after pleading guilty to breaking US money-laundering laws, and the company had to forfeit some $4 billion for offenses that included allowing users to bypass sanctions. Then last May, Alexey Pertsev, a Tornado Cash cofounder, was sentenced to more than five years in a Dutch prison for facilitating the laundering of money stolen by, among others, the Lazarus Group, North Korea’s infamous state-backed hacking team. And in August last year, French authorities arrested Pavel Durov, the CEO of Telegram, and charged him with complicity in drug trafficking and distribution of child sexual abuse material.

“I think all social media [companies] should really be looking at the case of Telegram right now,” USIP’s Tower says. “At that CEO level, you’re starting to see states try to hold a company accountable for its role in enabling major transnational criminal activity on a global scale.”

Compounding all the challenges, however, is the integration of cheap and easy-to-use artificial intelligence into scamming operations. The trafficked individuals we spoke to, who had mostly left the compounds before the widespread adoption of generative AI, said that if targets suggested a video call they would deflect or, as a last resort, play prerecorded video clips. Only one described the use of AI by his company; he says he was paid to record himself saying various sentences in ways that reflected different emotions, for the purposes of feeding the audio into an AI model. Recently, reports have emerged of scammers who have used AI-powered “face swap” and voice-altering products so that they can impersonate their characters more convincingly. “Malicious actors can exploit these models, especially open-source models, to produce content at an unprecedented scale,” says Gabrielle Tran, senior analyst for technology and society at IST. “These models are purposefully being fine-tuned … to serve as convincing humans.”

Experts we spoke with warn that if platforms don’t pick up the pace on enforcement now, they’re likely to fall even further behind.

Every now and again, Gavesh still goes on Facebook to report pages he thinks are scams. He never hears back.

But he is working again in the tourism industry and on the path to recovering from his ordeal. “I can’t say that I’m 100% out of the trauma, but I’m trying to survive because I have responsibilities,” he says.

He chose to speak out because he doesn’t want anyone else to be tricked—into a scamming compound, or into giving up their life savings to a stranger. He’s seen behind the scenes into a brutal industry that exploits people’s real needs for work, connection, and human contact, and he wants to make sure no one else ends up where he did.

“There’s a very scary world,” he says. “A world beyond what we have seen.”

Peter Guest is a journalist based in London. Emily Fishbein is a freelance journalist focusing on Myanmar.

Additional reporting by Nu Nu Lusan.
