Is AI like ChatGPT, Grok, and Gemini ruining social media? Because it sure feels like it

AI is taking over social media, one sloppy post at a time – but the consequences go way beyond cringe.

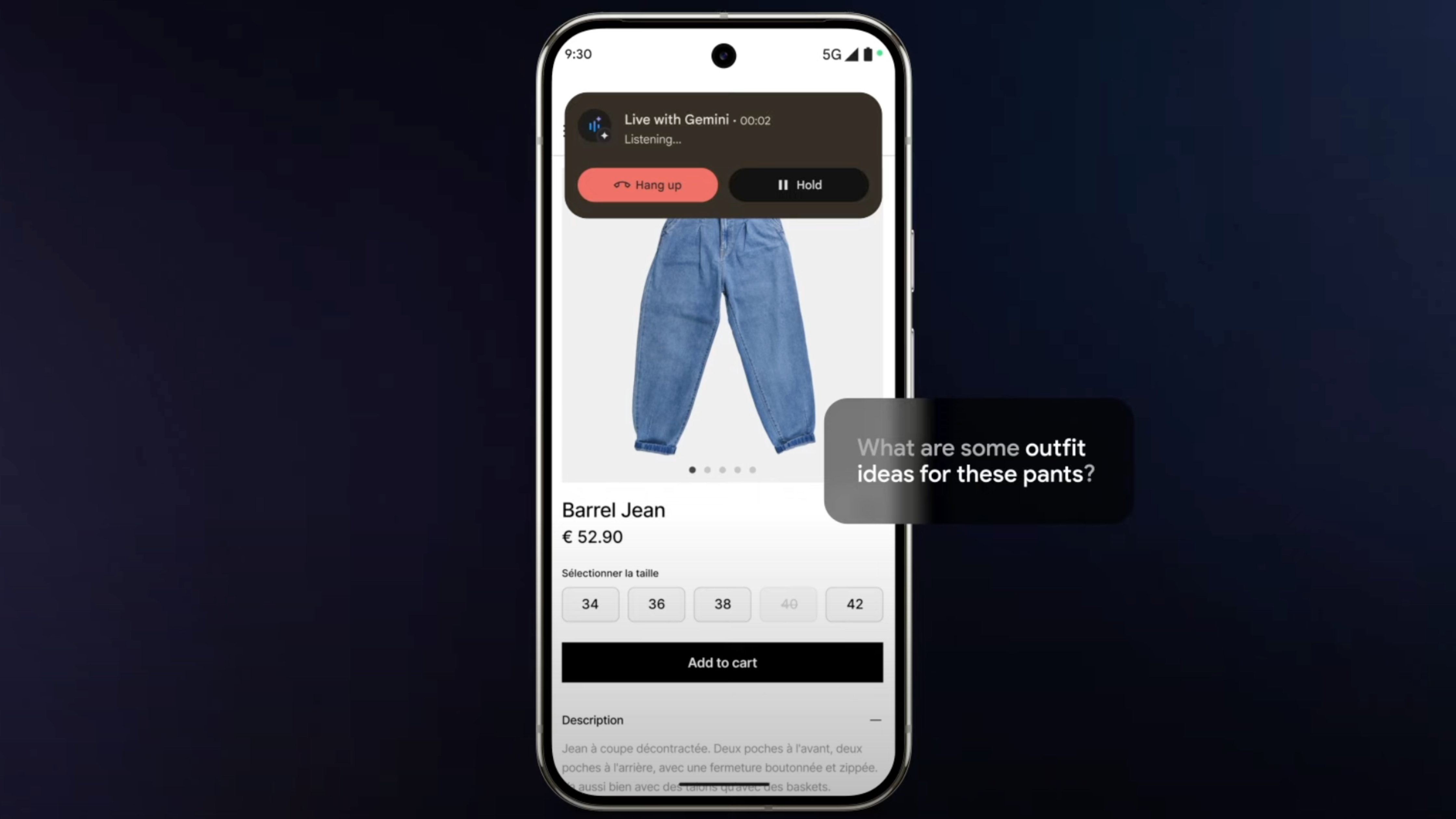

Yesterday morning, I logged into Facebook and saw an image of the Colosseum turned into a water park. On LinkedIn, everyone was busy transforming their headshots into Studio Ghibli characters, courtesy of ChatGPT’s latest update. Threads showed me a video reimagining the cast of Severance as babies crawling around Lumon. And X kindly served up a Grok-generated image of Elon Musk and Donald Trump in a pose I wish I could unsee.

It’s not just me, right? Social media has become a swirling mess of synthetic content. We’ve written about the rise of AI slop before – part cringe-inducing art gallery, part uncanny valley fever dream. And sure, the algorithm is feeding me stuff it thinks I care about – Severance babies, travel inspo, a healthy dose of Musk mockery. That checks out.

But I keep thinking: has AI officially ruined social media? And is this just harmless chaos or is it quietly rewiring how we see truth, trust, and reality?

The four horsemen of the AI slopocalypse

One of the biggest problems with AI content is the sheer volume – and it’s coming from all sides. AI evangelists. Your coworkers. Your friends. Your gran (who probably doesn’t even realize what she’s reposting). And brands that absolutely should know better.

To make sense of the mess, I spoke to Joe Goulcher, a creative director and social media expert. He works with brands on this stuff daily and has a front-row seat to the AI slop flood. According to Joe, AI content tends to fall into four distinct “strains” – “like a virus,” he tells me.

Functional AI slop

“Crudely, badly made stuff that is basically stock imagery used to fill a hole where an image should be,” Goulcher explains. “It's bottom of the barrel, and barely any conscious thought has gone into why or what it is.” This is the lowest-effort tier. Bland visuals slapped onto posts just to have something there. Placeholder content that somehow became the content.

Clickbait slop

“Stuff that makes us stop scrolling and think ‘god this is disgusting and bad,’ but it’s by design to generate conversation,” Goulcher says. This one’s the most insidious. It’s not trying to be good, it’s trying to be just bad enough to go viral.

The ‘look what I made!’ post

“This is where people use AI to create something that looks like LOTR, Star Wars or another behemoth IP because it’s trained on it,” Goulcher tells me. “They say things like ‘look what I did in ten mins!! this is going to change the industry and you should be scared and using it now instead of spending thousands with artists.’ It usually goes down like a sack of bricks.” This is the hype-fuelled, tech-bro theatre of generative content. The tone is always breathless, and the results are always underwhelming.

The genuinely good stuff

“There are actually good AI campaigns, with purpose, permission, and laced with incredible VFX, handcrafted where AI couldn't do the job,” Goulcher says. Yes, some brands are doing it well, with thought, care, and actual artistry. But it’s rare. And usually buried under a steaming pile of junk.

Breaking it down like this might feel bleak – like we’ve gone full epidemiology on the most cursed content – but it’s actually useful. Categorizing the chaos helps explain why AI content feels inescapable, and why it hits so many different shades of dystopian.

AI for the sake of AI

We’re in an era of AI content being made simply because it can be. People, brands, entire businesses are churning it out. Not necessarily because they have something to say, and not because it’s better than the alternatives, but because the tools exist, they're easy to use, and the pressure to use them is enormous.

This speaks to a deeper issue in tech. Just look at Apple’s recent AI missteps. Despite all the hype, AI isn’t delivering the magic we were promised. It’s being shoved into products not because users need it, but because shareholders want to hear “AI” on earnings calls.

And right now? It’s not revolutionizing much of anything. In fact, in most applications, it’s starting to look like a very expensive gimmick.

“It reminds me of the early days of torrenting or music streaming – a bit of a Wild West,” Goulcher explains. “Even though brands whacked a logo on them, it didn't make it ethically good in any way. They were just trying to ride the waves of legality and dosh until legislation kicked in far too late.”

As with crypto or NFTs, the pattern is familiar: innovation, hype, overuse, and then fatigue. “I think we can apply the same tech hype graph to AI content,” Goulcher says. “There's always something in my gut that’s like, ‘this bubble will burst.’ And I still think that will happen. When AI data sets start eating themselves, and the innovative wow factor wears off – what’s left?”

And he’s asking the question more of us probably should be: “How are any of these billion-dollar tools actually making our lives better? Because the novelty is wearing off, the ethics (or lack of them) are becoming clearer, and the shock value is beginning to wane.”

AI hasn’t just broken social media, it’s broken the truth

It’s not like social media was perfect before this. AI didn’t start the rot; things were already slipping. But this latest wave of generative content has pushed it straight into uncanny, derivative, brain-melting chaos.

And if AI is flooding our feeds with pointless slop, it’s also doing something more dangerous: weaponizing it. It’s easy to laugh at AI-generated celeb babies or cringe at a brand ad that crawled out of the uncanny valley. But that reaction misses the bigger, scarier picture.

Because AI can fuel misinformation at scale. For example, across Europe, far-right groups are using AI-generated images to provoke outrage, spread conspiracy theories, and stoke division. And these aren’t just fringe trolls – they’re coordinated campaigns, designed to manipulate public opinion.

That’s just one example. Election misinformation. Deepfake porn. Fake war footage. Yes, it's the kind of content that has always existed online. Only now, the rules have changed. You don’t need skills. You don’t need a team. You don’t even need a budget. Just a narrative and a willingness to abandon reality. And on social media, the rest takes care of itself.

That’s the real horror story. Not just that we’re drowning in junk, or that brands think we want AI-generated ads, but that we’re slipping into a world where what’s fake moves faster than what’s true. And the algorithm doesn’t care if it’s real – just that it’s getting your clicks, your likes, your attention.
