Desire for Structure (read “SQL”)

Or obsession for control? Let’s admit it: we love the idea of running SQL queries on ALL our data! Not just a preference — it feels like an obsession. No matter how old or how rarely accessed, we cling to the idea that everything must remain instantly queryable. It feels so simple, until it’s not. And then bad things happen: The database grows too large, and queries slow down to death. Indexes sizes explode, eating up CPU and memory just to keep up. Schema changes become a nightmare, locking tables and causing downtime. The cost of scaling up SQL infrastructure skyrockets. Suddenly, our beautiful, structured world starts crumbling under its own weight. What seemed like an easy decision — “let’s just store everything in SQL” — becomes a scaling bottleneck that forces us to rethink our approach. But why does this happen? Relational Databases are powerful because they provide structure, indexing, and queryability — elements that make it easy for users to analyze and manipulate data. For engineers, analysts, and business users, SQL offers: A universal way to query data — The language is standardized and widely understood. Ad-hoc queryability — You can ask complex questions without predefining reports. Data consistency — Enforced schemas and constraints prevent data corruption. Indexing for performance — The ability to speed up searches through optimized indexes. Because of these benefits, many organizations enforce SQL as the default, even when other approaches may be more suitable. But what happens when this need for structure becomes a liability rather than an asset? We will look a bit into the problem space, and then we will explore (not exhaustively!) the solution space. 2. The Problem space: When Structure Becomes a Limitation 2.1. The cost of our need for Structure While SQL provides clarity, it also introduces constraints. Let’s look at the most important ones. Schema A schema demands data to be organized in a set of tables. And for each table it needs a set of columns (names and types) and primary key column. You can also introduce relationships between tables, but we are not going to talk about that here (that’s why, incidentally, they are called “relational databases”). Schema changes are delicate operations. They must be deployed before application changes to ensure compatibility. But even when done correctly, they introduce risks — what if your production data has unexpected edge cases your test environment didn’t? What if rollback becomes a nightmare? Oh wait! Do we push the DB schema changes first, or the application changes first? schema changes first of course! Then just pray that the application you are pushing has the exact same schema. Yes you tested in the test environment… which is always perfectly identical to the production environment… right? Well… not always. Indices A dear friend of mine and accomplished engineer used to say that “writing Databases is the art of writing indices”. What is an index? An index is a type of data structure that helps finding the data when you do a search. You put the data into a table as it comes. Now you want to search for all the orders for Mr Smith. If you indexed the LastName column…. The index has an acceleration data structure (probably a kind of tree) where it quickly can find all the rows where the last name is equal to “Smith”. Nice right? Yah. You know what it takes? Let’s try to list of what it takes: · Fast memory (RAM) where to keep the fast data structure. · Time: it needs to lock the index while it is searching it, so that mutations on the index do not make it crash. Yes these locks can be avoided or reduced most of the times but… you get the gist. Let’s stop it here for now. Now what happens if you need more data? You need more memory? Sure but there’s a limit to physical memory on a single machine. Let’s go distributed, let’s use a cluster. Now I ask you to do the mental exercise to imagine how to lock a distributed index data structure across a cluster of 4 nodes. Well… difficult but possible. But what if your data is really, REALLY huge? You might need 400 nodes. Or 4,000. Or 40,000. It’s impossible. Why? Because indexes improve query speed — but they also introduce contention. Updating an index requires locking portions of it, slowing down concurrent writes. In a single-node setup, this is manageable. But in a distributed database spanning hundreds of nodes, keeping indexes in sync becomes a nightmare. This is why most distributed databases avoid global indexes or use eventually consistent indexing approaches. Without careful design, a single overloaded index update can throttle an entire system, creating cascading slowdowns. And probably spending so much time and engineering effort in calibrating indexes does not align with your core business. Conclusions so far That’s why you need to give up and think about other ways of storing your data. The

Or obsession for control?

Let’s admit it: we love the idea of running SQL queries on ALL our data! Not just a preference — it feels like an obsession. No matter how old or how rarely accessed, we cling to the idea that everything must remain instantly queryable.

It feels so simple, until it’s not. And then bad things happen:

The database grows too large, and queries slow down to death.

Indexes sizes explode, eating up CPU and memory just to keep up.

Schema changes become a nightmare, locking tables and causing downtime.

The cost of scaling up SQL infrastructure skyrockets.

Suddenly, our beautiful, structured world starts crumbling under its own weight. What seemed like an easy decision — “let’s just store everything in SQL” — becomes a scaling bottleneck that forces us to rethink our approach.

But why does this happen?

Relational Databases are powerful because they provide structure, indexing, and queryability — elements that make it easy for users to analyze and manipulate data.

For engineers, analysts, and business users, SQL offers:

A universal way to query data — The language is standardized and widely understood.

Ad-hoc queryability — You can ask complex questions without predefining reports.

Data consistency — Enforced schemas and constraints prevent data corruption.

Indexing for performance — The ability to speed up searches through optimized indexes.

Because of these benefits, many organizations enforce SQL as the default, even when other approaches may be more suitable.

But what happens when this need for structure becomes a liability rather than an asset?

We will look a bit into the problem space, and then we will explore (not exhaustively!) the solution space.

2. The Problem space: When Structure Becomes a Limitation

2.1. The cost of our need for Structure

While SQL provides clarity, it also introduces constraints. Let’s look at the most important ones.

Schema

A schema demands data to be organized in a set of tables. And for each table it needs a set of columns (names and types) and primary key column. You can also introduce relationships between tables, but we are not going to talk about that here (that’s why, incidentally, they are called “relational databases”).

Schema changes are delicate operations. They must be deployed before application changes to ensure compatibility. But even when done correctly, they introduce risks — what if your production data has unexpected edge cases your test environment didn’t? What if rollback becomes a nightmare?

Oh wait! Do we push the DB schema changes first, or the application changes first? schema changes first of course! Then just pray that the application you are pushing has the exact same schema. Yes you tested in the test environment… which is always perfectly identical to the production environment… right?

Well… not always.

Indices

A dear friend of mine and accomplished engineer used to say that “writing Databases is the art of writing indices”.

What is an index? An index is a type of data structure that helps finding the data when you do a search.

You put the data into a table as it comes. Now you want to search for all the orders for Mr Smith. If you indexed the LastName column…. The index has an acceleration data structure (probably a kind of tree) where it quickly can find all the rows where the last name is equal to “Smith”.

Nice right? Yah.

You know what it takes? Let’s try to list of what it takes:

· Fast memory (RAM) where to keep the fast data structure.

· Time: it needs to lock the index while it is searching it, so that mutations on the index do not make it crash. Yes these locks can be avoided or reduced most of the times but… you get the gist. Let’s stop it here for now.

Now what happens if you need more data?

You need more memory? Sure but there’s a limit to physical memory on a single machine.

Let’s go distributed, let’s use a cluster. Now I ask you to do the mental exercise to imagine how to lock a distributed index data structure across a cluster of 4 nodes. Well… difficult but possible.

But what if your data is really, REALLY huge? You might need 400 nodes. Or 4,000. Or 40,000. It’s impossible. Why?

Because indexes improve query speed — but they also introduce contention. Updating an index requires locking portions of it, slowing down concurrent writes. In a single-node setup, this is manageable. But in a distributed database spanning hundreds of nodes, keeping indexes in sync becomes a nightmare. This is why most distributed databases avoid global indexes or use eventually consistent indexing approaches. Without careful design, a single overloaded index update can throttle an entire system, creating cascading slowdowns.

And probably spending so much time and engineering effort in calibrating indexes does not align with your core business.

Conclusions so far

That’s why you need to give up and think about other ways of storing your data.

The first place you store the data “in general” should not go into a relational SQL database (RDBMs if we want to use a formal language). That might not be the case for specific cases, for example where credit cards or money is involved.

If you are convinced about that, please continue reading. Otherwise please stop reading. There is no value for you to continue.

3. Exploring the solution space

3.1. Hot/Cold

First of all, not all data needs to be hot. Or indexed.

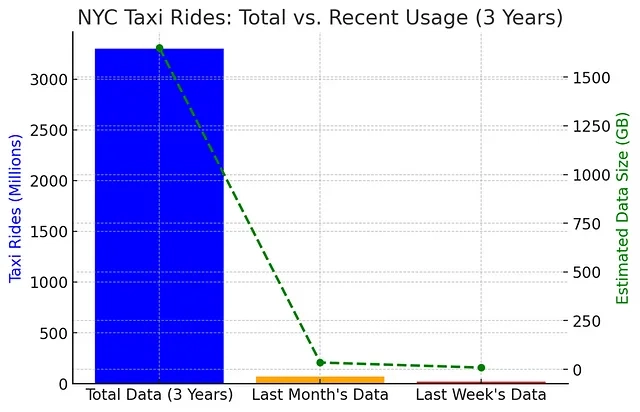

In most of the use cases queries need last week’s data, or last month’s data. Why then are you keeping 3 years’ worth of data in expensive indexed RDBMs?

1 week out of 3 years is less than one percent of the entire data! (0.6% to be precise).

1 month out of 3 years is about 2.5% of the entire data.

Maybe, it makes sense to place “hot” data in a fast (and costly) database (or even a cache), and the cold data in a cheaper (and slower) data storage. It will cost a bit in terms of latency to access such data but… you will save a ton on money.

So why do companies still keep years of data in expensive RDBMS instances? Often, it’s habit — ‘we might need it someday’ — or a lack of proper data lifecycle planning. But in reality, only a fraction of the data needs to remain hot.

A real-world Example

A real-world example of this concept is NYC Taxi ride data:

· Total Dataset: ~1.1 billion rides per year (~550GB of storage).

· Last 3 years of data: ~3.3 billion rides (~1.6 TB of storage).

· Last Month’s Data: ~70 million rides (~35GB of storage).

· Last Week’s Data: ~18 million rides (~9GB of storage).

3.2. Columns

I have often seen relational databases with hundreds — sometimes even thousands — of columns, storing an incredible amount of data. Yet, most of these columns are rarely queried. They can’t be discarded because they hold valuable information, but they still come at a cost.

Every column, whether used frequently or not, adds overhead in disk storage, CPU, and memory — even when it’s not indexed. And that brings us to another issue: we can’t index all hundreds of columns for obvious reasons. As a result, we end up in a paradoxical situation:

We pay for an expensive and often slow relational database system.

Yet, hundreds of columns remain unindexed, making queries inefficient.

This raises an important question: Are we truly benefiting from keeping all columns “hot” and queryable, or are we just paying for an illusion of accessibility?

4. Big Data: an introduction

The term Big Data is thrown around a lot, but how big is “big”?

Here’s my personal definition: Big Data is an amount of data that cannot fit in a small cluster — but it still needs to be queryable.

A modern approach to Big Data consists of several components, and the key principle is separating compute from storage.

4.1. Storage: The Data Lake

Big Data is typically stored in a distributed object storage system, which I loosely call a Data Lake.

Here’s my informal definition of a Data Lake:

Virtually infinite capacity — There is no practical storage limit.

Massively parallel — It can handle many simultaneous operations.

High throughput — Huge input/output bandwidth.

High latency — It is not optimized for low-latency access.

Simple REST API — Accessible through standard cloud APIs.

4.2. Compute: Processing Data from the Data Lake

From the Data Lake, data branches into separate workflows:

Analytics workloads → Data warehouses, batch processing, OLAP queries.

Online applications → Real-time transactional workloads (OLTP), NoSQL solutions.

But before going further, let me clarify something crucial:

4.3. Analytics & Online Processing Should Be Completely Separate

They should not share the same datastore, cluster, or even availability zone.

Why?

Analytics deals with historical data — end-of-day sales reports, customer behavior insights, trend analysis, etc.

Online processing serves live production workloads — API responses, real-time transactions, and user interactions.

Mixing the two is a huge risk. Allowing analytical queries to run on your live production system is an enterprise disaster waiting to happen. You risk:

❌ Slow application performance — Customers may experience delays.

❌ Production downtime — A heavy analytical query could lock tables or exhaust resources.

❌ Jeopardizing business operations — A reporting query should never interfere with live transactions.

![YouTube Announces New Creation Tools for Shorts [Video]](https://www.iclarified.com/images/news/96923/96923/96923-640.jpg)

![Apple Faces New Tariffs but Has Options to Soften the Blow [Kuo]](https://www.iclarified.com/images/news/96921/96921/96921-640.jpg)

.webp?#)

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![Is this a suitable approach to architect a flutter app? [closed]](https://i.sstatic.net/4hMHGb1L.png)

![[DEALS] Microsoft Office Professional 2021 for Windows: Lifetime License (75% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)