Load Balancer vs. Reverse Proxy vs. API Gateway: Understanding the Differences


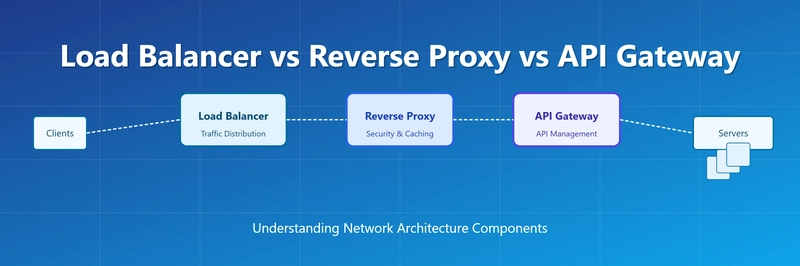

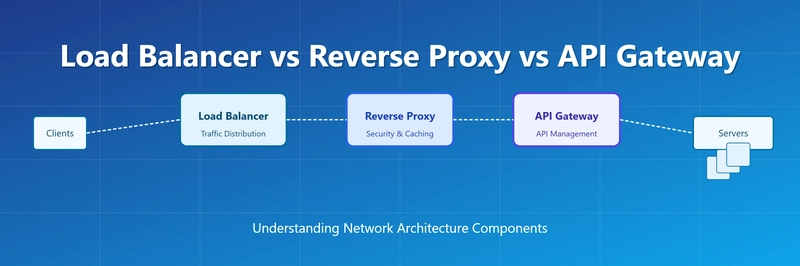

In modern web architecture, three components often cause confusion due to their overlapping functionalities: load balancers, reverse proxies, and API gateways. While they all sit between clients and servers, they serve different primary purposes and offer unique capabilities. This blog post breaks down each technology and highlights their key differences with practical examples.

Load Balancer: The Traffic Director

What Is a Load Balancer?

A load balancer distributes incoming network traffic across multiple backend servers to ensure no single server becomes overwhelmed. Its primary purpose is to improve application availability and scalability.

Key Features

- Traffic Distribution: Routes requests using algorithms like round-robin, least connections, or IP hash

- Health Checking: Monitors server health and avoids routing to failed servers

- Session Persistence: Can keep a user's session on the same server

- SSL Termination: Can handle encryption/decryption to offload this work from application servers
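Here is a minimal Python sketch of how a balancer might choose a backend under each of the three distribution algorithms above. It is an illustration, not how HAProxy or any other product implements them. The `Backend` class and its `active_connections` counter are hypothetical. A real balancer updates that counter as connections open and close, and usually supports per-server weights.

```python
import hashlib
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    active_connections: int = 0  # hypothetical counter maintained by the balancer


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Pick the backend currently serving the fewest connections."""

    def __init__(self, backends):
        self.backends = backends

    def pick(self, client_ip=None):
        # min() returns the first backend on ties, so list order breaks ties
        return min(self.backends, key=lambda b: b.active_connections)


class IpHash:
    """Map each client IP to a stable backend."""

    def __init__(self, backends):
        self.backends = backends

    def pick(self, client_ip):
        # Use a stable digest; Python's built-in hash() is randomized per process
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.backends)
        return self.backends[index]


if __name__ == "__main__":
    pool = [Backend("web1"), Backend("web2"), Backend("web3")]
    rr = RoundRobin(pool)
    print([rr.pick().name for _ in range(4)])  # ['web1', 'web2', 'web3', 'web1']
```

IP hash also provides a simple form of session persistence. The same client address always maps to the same backend, as long as the server list does not change.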

When To Use a Load Balancer

- When you need to scale horizontally by adding more servers

- When you need high availability with automatic failover

- When your application receives variable or high traffic volumes

Example: Simple Load Balancing Setup

```haproxy
# HAProxy configuration example
global
    log 127.0.0.1 local0
    maxconn 4096
    user haproxy
    group haproxy

defaults
    log global
    mode http
    option httplog
    option dontlognull
    # Timeouts are in milliseconds
    timeout connect 5000
    timeout client 50000
    timeout server 50000

frontend http_front
    bind *:80
    # Built-in statistics page
    stats uri /haproxy?stats
    default_backend http_back

backend http_back
    balance roundrobin
    # "check" enables periodic health checks on each server
    server web1 192.168.1.101:80 check
    server web2 192.168.1.102:80 check
    server web3 192.168.1.103:80 check
```

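In this HAProxy example, requests arriving on port 80 are distributed round-robin across three web servers. The `check` keyword on each `server` line enables periodic health checks. These are TCP connection checks by default, and `option httpchk` switches them to HTTP checks. HAProxy routes traffic only to servers that are passing these checks.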
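The health-checking behavior can be modeled as a small state machine. The sketch below assumes HAProxy's documented defaults of `rise 2` and `fall 3`. Under these defaults, a server is marked down after three consecutive failed checks and back up after two consecutive successes. The `HealthTracker` and `HealthAwareRoundRobin` names are hypothetical. The actual probing is left out.

```python
import itertools


class HealthTracker:
    """Track one server's up/down state using rise/fall thresholds.

    Defaults (rise=2, fall=3) follow HAProxy's documented defaults;
    the class itself is a hypothetical illustration.
    """

    def __init__(self, name, rise=2, fall=3):
        self.name = name
        self.rise = rise
        self.fall = fall
        self.up = True
        self._streak = 0  # consecutive results that contradict the current state

    def record(self, check_passed):
        if check_passed == self.up:
            self._streak = 0  # result agrees with the current state
            return
        self._streak += 1
        threshold = self.fall if self.up else self.rise
        if self._streak >= threshold:
            self.up = not self.up
            self._streak = 0


class HealthAwareRoundRobin:
    """Round-robin over servers, skipping any that are marked down."""

    def __init__(self, servers):
        self.servers = servers
        self._positions = itertools.cycle(range(len(servers)))

    def pick(self):
        # Try each server at most once per call
        for _ in range(len(self.servers)):
            server = self.servers[next(self._positions)]
            if server.up:
                return server
        raise RuntimeError("no healthy backend available")
```

Requiring several consecutive results before changing state keeps a single dropped probe from flapping a server in and out of rotation.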

Reverse Proxy: The Protective Intermediary

What Is a Reverse Proxy?

A reverse proxy sits between clients and web servers, forwarding client requests to the appropriate backend server. Unlike load balancers, reverse proxies primarily focus on security, caching, and request manipulation rather than just distributing load.

Key Features

- Request/Response Modification: Can modify headers, URLs, and content

- Caching: Stores frequently accessed content to reduce backend load

- Security: Hides backend server details and can implement web application firewall functionality

- Compression: Can compress responses before sending to clients

- URL Rewriting: Can transform URLs between external and internal formats
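URL rewriting and header modification are essentially string transformations applied before a request is forwarded. The Python sketch below is illustrative only. The `/blog/` to `/wordpress/` rule mirrors the rewrite in the Nginx example. `forwarded_headers` approximates how Nginx's `$proxy_add_x_forwarded_for` appends the client address to an existing `X-Forwarded-For` header. Both function names are hypothetical.

```python
import re

# Hypothetical rule equivalent to: rewrite ^/blog/(.*)$ /wordpress/$1 break;
BLOG_RULE = re.compile(r"^/blog/(.*)$")


def rewrite_path(path):
    """Translate an external URL path into the backend's internal path."""
    return BLOG_RULE.sub(r"/wordpress/\1", path)


def forwarded_headers(incoming, client_ip, scheme):
    """Build the headers sent upstream.

    Appends the client address to any existing X-Forwarded-For value,
    similar to nginx's $proxy_add_x_forwarded_for variable.
    """
    headers = dict(incoming)
    previous = incoming.get("X-Forwarded-For")
    headers["X-Forwarded-For"] = f"{previous}, {client_ip}" if previous else client_ip
    headers["X-Real-IP"] = client_ip
    headers["X-Forwarded-Proto"] = scheme
    return headers


if __name__ == "__main__":
    print(rewrite_path("/blog/2024/hello"))  # /wordpress/2024/hello
```

Appending to `X-Forwarded-For` rather than overwriting it preserves the chain of addresses when several proxies sit in front of the application.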

When To Use a Reverse Proxy

- When you want to add security layers to your application

- When you need to cache static content

- When you want to hide your backend architecture from clients

- When you need centralized SSL management

Example: Nginx Reverse Proxy Configuration

```nginx
# nginx.conf - Reverse Proxy Example
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    # Upstream groups referenced by proxy_pass below.
    # The addresses are hypothetical placeholders. Without these blocks,
    # nginx tries to resolve the names via DNS and refuses to start if they don't resolve.
    upstream backend_server {
        server 192.168.1.101:8080;
    }

    upstream blog_server {
        server 192.168.1.110:8080;
    }

    # Cache definition
    proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my_cache:10m max_size=10g inactive=60m;

    server {
        listen 80;
        server_name example.com;

        # Security headers
        add_header X-XSS-Protection "1; mode=block";
        add_header X-Content-Type-Options "nosniff";
        add_header X-Frame-Options "DENY";

        # Cache static files
        location ~* \.(jpg|jpeg|png|gif|ico|css|js)$ {
            proxy_pass http://backend_server;
            proxy_cache my_cache;
            proxy_cache_valid 200 302 10m;
            proxy_cache_valid 404 1m;
            # Let browsers cache these assets for 30 days
            expires 30d;
        }

        # URL rewriting example: /blog/... is served from /wordpress/... on the blog server
        location /blog/ {
            rewrite ^/blog/(.*)$ /wordpress/$1 break;
            proxy_pass http://blog_server;
        }

        # Main application
        location / {
            proxy_pass http://backend_server;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }
    }
}
```

This Nginx configuration demonstrates how a reverse proxy can provide caching for static files, security headers, and URL rewriting while forwarding requests to backend servers.
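The caching rules in this configuration reduce to one idea: store a response and serve it from the cache until a status-dependent validity period expires. Below is a minimal Python sketch of that idea. It assumes validity periods copied from the `proxy_cache_valid` lines: 10 minutes for 200 and 302, and 1 minute for 404. It keys entries on the URI alone and ignores `max_size` and `inactive`, so it simplifies what Nginx actually does. `ResponseCache` and the injectable `clock` are hypothetical names used for illustration.

```python
import time

# Validity periods in seconds, copied from the example's proxy_cache_valid lines
VALID_FOR = {200: 600, 302: 600, 404: 60}


class ResponseCache:
    """Minimal in-memory response cache keyed by request URI."""

    def __init__(self, fetch, clock=time.monotonic):
        self.fetch = fetch    # callable uri -> (status, body); stands in for the backend
        self.clock = clock
        self._entries = {}    # uri -> (expires_at, status, body)

    def get(self, uri):
        now = self.clock()
        entry = self._entries.get(uri)
        if entry and entry[0] > now:
            return entry[1], entry[2], "HIT"
        status, body = self.fetch(uri)
        ttl = VALID_FOR.get(status)
        if ttl:  # statuses without a validity period are not cached
            self._entries[uri] = (now + ttl, status, body)
        return status, body, "MISS"
```

Making the clock injectable lets the expiry logic be exercised without waiting ten real minutes.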

API Gateway: The Sophisticated Service Manager

What Is an API Gateway?

An API gateway is a specialized reverse proxy built for managing APIs. It acts as the single entry point for all API requests and adds API-specific functionality such as authentication, rate limiting, and request transformation.

Key Features

- API Management: Centralized control of API endpoints and versions

- Authentication & Authorization: Secures APIs with various auth methods

- Rate Limiting & Throttling: Prevents abuse and ensures fair usage

- Request/Response Transformation: Converts between protocols and formats

- Analytics & Monitoring: Provides insights into API usage

- Service Discovery: Can dynamically locate and route to microservices

- API Documentation: Often integrates with tools like Swagger/OpenAPI
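Conceptually, an API gateway runs every request through a fixed pipeline before it reaches a service. The sketch below shows three of those stages in plain Python: authentication, routing, and transformation. The API key, the `ROUTES` table, and the service names are all hypothetical. Rate limiting and analytics are left out to keep it short.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


# Hypothetical credentials and routing table; a real gateway loads these from config
VALID_KEYS = {"demo-key-123"}
ROUTES = {
    "/users": "users-service",
    "/orders": "orders-service",
}


def handle(request):
    """Single entry point: authenticate, route, transform.

    Returns (status, target_service, internal_path).
    """
    # 1. Authentication: reject requests without a known API key
    if request.headers.get("x-api-key") not in VALID_KEYS:
        return 403, None, None
    # 2. Routing: the longest matching path prefix wins
    matches = [p for p in ROUTES if request.path == p or request.path.startswith(p + "/")]
    if not matches:
        return 404, None, None
    prefix = max(matches, key=len)
    # 3. Transformation: strip the public prefix before forwarding
    internal_path = request.path[len(prefix):] or "/"
    return 200, ROUTES[prefix], internal_path
```

Because every request passes through `handle`, a policy change such as a new auth rule applies to all services at once. This is the consistency argument for a gateway in a microservice architecture.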

When To Use an API Gateway

- In microservice architectures to simplify client-service communication

- When you need to implement consistent security policies across APIs

- When you need detailed analytics on API usage

- When you want to expose legacy systems through modern APIs

Example: AWS API Gateway with Lambda Integration

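```yaml
# AWS CloudFormation template for API Gateway
# Assumes the Cognito user pool and the Lambda function already exist;
# their ARNs are passed in as (hypothetical) parameters.
Parameters:
  UserPoolArn:
    Type: String
  GetUsersFunctionArn:
    Type: String

Resources:
  MyApiGateway:
    Type: AWS::ApiGateway::RestApi
    Properties:
      Name: MyAPI
      Description: API Gateway for microservices
      EndpointConfiguration:
        Types:
          - REGIONAL

  # Cognito authorizer used by the GET /users method
  ApiAuthorizer:
    Type: AWS::ApiGateway::Authorizer
    Properties:
      Name: CognitoAuthorizer
      RestApiId: !Ref MyApiGateway
      Type: COGNITO_USER_POOLS
      IdentitySource: method.request.header.Authorization
      ProviderARNs:
        - !Ref UserPoolArn

  # User API resource and methods
  UsersResource:
    Type: AWS::ApiGateway::Resource
    Properties:
      RestApiId: !Ref MyApiGateway
      ParentId: !GetAtt MyApiGateway.RootResourceId
      PathPart: users

  GetUsersMethod:
    Type: AWS::ApiGateway::Method
    Properties:
      RestApiId: !Ref MyApiGateway
      ResourceId: !Ref UsersResource
      HttpMethod: GET
      AuthorizationType: COGNITO_USER_POOLS
      AuthorizerId: !Ref ApiAuthorizer
      # Usage-plan throttling and quotas apply only to requests carrying an API key
      ApiKeyRequired: true
      Integration:
        Type: AWS_PROXY
        IntegrationHttpMethod: POST
        Uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${GetUsersFunctionArn}/invocations

  # Allow API Gateway to invoke the Lambda function
  GetUsersInvokePermission:
    Type: AWS::Lambda::Permission
    Properties:
      Action: lambda:InvokeFunction
      FunctionName: !Ref GetUsersFunctionArn
      Principal: apigateway.amazonaws.com
      SourceArn: !Sub arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${MyApiGateway}/*/GET/users

  # API key and usage plan for rate limiting
  ApiKey:
    Type: AWS::ApiGateway::ApiKey
    Properties:
      Name: MyApiKey
      Description: API Key for rate limiting
      Enabled: true

  UsagePlan:
    Type: AWS::ApiGateway::UsagePlan
    # The prod stage is created by the deployment, so it must exist first
    DependsOn: ApiDeployment
    Properties:
      ApiStages:
        - ApiId: !Ref MyApiGateway
          Stage: prod
      Quota:
        Limit: 1000
        Period: DAY
      Throttle:
        BurstLimit: 20
        RateLimit: 10

  # Attach the API key to the usage plan
  UsagePlanKey:
    Type: AWS::ApiGateway::UsagePlanKey
    Properties:
      KeyId: !Ref ApiKey
      KeyType: API_KEY
      UsagePlanId: !Ref UsagePlan

  # API Gateway deployment
  ApiDeployment:
    Type: AWS::ApiGateway::Deployment
    DependsOn:
      - GetUsersMethod
    Properties:
      RestApiId: !Ref MyApiGateway
      StageName: prod
```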

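This AWS CloudFormation template configures an API Gateway REST API with three pieces: Amazon Cognito authentication, a Lambda proxy integration that handles the actual business logic, and rate limiting through a usage plan. The usage plan throttles requests and enforces a daily quota on those that present the associated API key.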
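AWS documents that API Gateway throttles requests with a token bucket algorithm. Roughly, `RateLimit` corresponds to the refill rate and `BurstLimit` to the bucket capacity. The following Python sketch models the usage plan above (10 requests per second, bursts of 20, 1000 requests per day) to show how those numbers interact. It illustrates the algorithm and is not AWS's implementation. The class names are hypothetical, and quota resets at the period boundary are omitted.

```python
import time


class TokenBucket:
    """Token-bucket throttle: refills `rate` tokens/second up to `burst` tokens."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = float(burst)  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket size
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class UsagePlanLimiter:
    """Combine a per-second throttle with a simple request quota (hypothetical)."""

    def __init__(self, rate=10, burst=20, daily_quota=1000, clock=time.monotonic):
        self.bucket = TokenBucket(rate, burst, clock)
        self.daily_quota = daily_quota
        self.used_today = 0  # a real system resets this at the start of each period

    def allow(self):
        if self.used_today >= self.daily_quota:
            return False  # quota exhausted
        if not self.bucket.allow():
            return False  # throttled
        self.used_today += 1
        return True
```

With these numbers, a client can fire 20 requests at once. After that it is held to about 10 per second until the bucket refills.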

Comparing the Three Technologies

| Feature | Load Balancer | Reverse Proxy | API Gateway |
|---|---|---|---|
| Primary Purpose | Distribute traffic | Security & routing | API management |
| Load Distribution | Strong | Basic | Basic to Strong |
| Caching | Limited | Strong | Moderate |
| Security Features | Basic | Strong | Very Strong |
| Protocol Transformation | Limited | Moderate | Strong |
| Content Modification | Limited | Strong | Very Strong |
| Monitoring & Analytics | Basic | Moderate | Comprehensive |
| Typical Use Case | Scaling web apps | Securing web servers | Managing microservices |

When to Use Which Technology

Use a Load Balancer When:

- You simply need to distribute traffic across identical servers

- High availability is your primary concern

- Your application scales horizontally with identical instances

Use a Reverse Proxy When:

- You need enhanced security features like WAF

- Content caching is important

- You want to hide your backend infrastructure

- You need to route to different backend systems based on URL patterns

Use an API Gateway When:

- You're working with microservices

- You need advanced API management features

- Authentication and authorization are critical

- You need to transform API requests between different formats

- You want detailed API analytics and monitoring

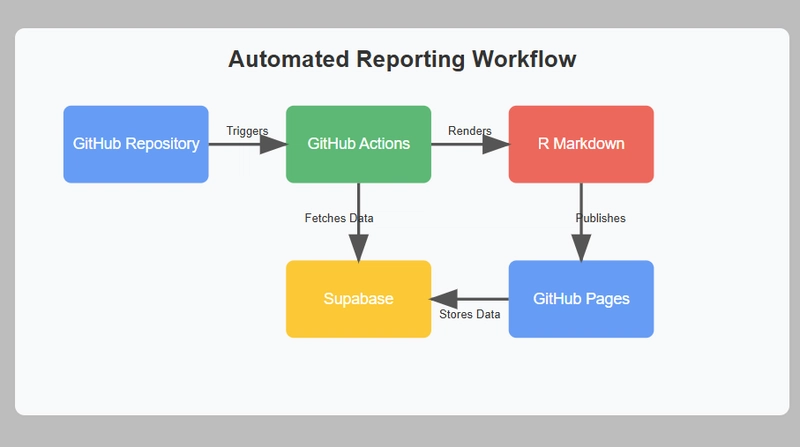

Combining Technologies for Maximum Benefit

In many real-world scenarios, these technologies work together rather than being used exclusively:

- Load Balancer → Reverse Proxy → Application Servers
  - The load balancer distributes traffic across multiple reverse proxies
  - Each reverse proxy handles security and caching before forwarding to application servers

- API Gateway → Load Balancer → Microservices
  - The API gateway handles authentication and request transformations
  - The load balancer distributes the authenticated requests across microservice instances
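The API Gateway → Load Balancer → Microservices chain can be illustrated by composing the two roles. The gateway decides whether a request is allowed and which service owns it, and a per-service balancer picks the instance. In this minimal Python sketch, the credential, service name, and instance addresses are hypothetical. Round-robin stands in for whatever algorithm the real load balancer uses.

```python
import itertools

# Hypothetical microservice instances behind an internal load balancer
INSTANCES = {
    "users-service": ["users-1:8080", "users-2:8080"],
}

# One round-robin iterator per service plays the load balancer's role
_balancers = {name: itertools.cycle(addrs) for name, addrs in INSTANCES.items()}


def balance(service):
    """Load balancer layer: choose the next instance of a service."""
    return next(_balancers[service])


def gateway(path, api_key):
    """Gateway layer: authenticate, map the path to a service, then delegate."""
    if api_key != "demo-key-123":  # hypothetical credential check
        return None
    if not path.startswith("/users"):
        return None  # no service owns this path
    instance = balance("users-service")
    return f"http://{instance}{path}"
```

Rejected requests return before `balance` is called, so unauthenticated traffic never reaches, or even counts against, the microservice instances.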

Conclusion

While load balancers, reverse proxies, and API gateways share some overlapping functionality, they each excel at different aspects of network architecture. Understanding their strengths helps you choose the right tool for your specific needs, or determine how to combine them effectively in complex systems.

By carefully selecting and configuring these components, you can build scalable, secure, and high-performance web applications that meet modern demands.
