Why the Market Is Flooded with New Databases and Why It Shouldn’t Be


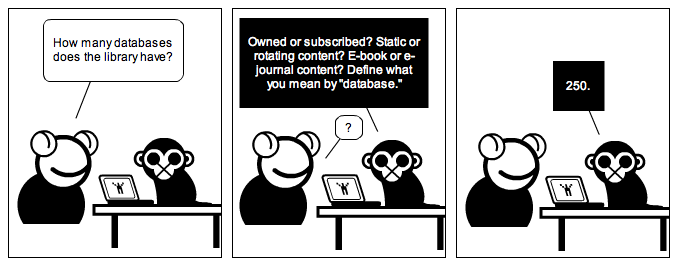

Do we really need another database, or is it time to make PostgreSQL, MySQL, and MongoDB truly effortless to use?

The database industry has seen an explosion of new players over the past decade. Every year, new databases emerge, each promising better scalability, performance, or developer experience. However, many of these databases fail to deliver long-term value, often introducing new complexities rather than solving fundamental issues.

This cycle points to the real need: not more databases, but existing, battle-tested databases modernized for today’s infrastructure challenges.

At Guepard, we believe that rather than reinventing the wheel, the key to unlocking a better developer experience lies in making databases like PostgreSQL, MySQL, and MongoDB more accessible, instantly provisioned, and seamlessly integrated into modern development workflows. Here’s why.


The Problem: Too Many Databases, Not Enough Adoption

The Rise of Feature-Driven Databases

The influx of new databases often stems from frustrations with existing ones: complex setup, high operational overhead, and difficulty scaling in cloud-native environments. For example, traditional relational databases often require extensive tuning to handle high throughput, while NoSQL databases, despite their scalability, can introduce consistency trade-offs that create new operational challenges.

Many companies struggle with managing database migrations, ensuring high availability, and optimizing performance across distributed environments, leading them to consider new solutions that may not necessarily be more efficient. But these issues don’t mean traditional databases are obsolete; they mean the way we deploy and manage them needs to evolve.

Many new databases are created just to introduce a single feature, whether it's better scalability, a new indexing mechanism, or improved query optimization. For example, FoundationDB introduced a multi-model approach with transactional NoSQL capabilities, but despite its innovative design, it struggled with widespread adoption due to its complexity and lack of ecosystem support. Similarly, FaunaDB aimed to simplify global data consistency but faced hurdles in gaining traction as enterprises preferred well-established databases with proven scalability and operational maturity.

Why Enterprises Resist Change

Enterprises are often reluctant to overhaul their entire infrastructure for the sake of a single feature because of the high costs, potential risks, and need for specialized expertise. Large-scale migrations can disrupt operations, require extensive retraining, and introduce unforeseen technical challenges, making businesses cautious about adopting entirely new database solutions. Large companies prioritize stability, expertise, and long-term support, which is why they stick with well-established databases.

For example, Dolt introduced time-traveling capabilities for databases, allowing users to track changes as they would in Git. While this is innovative, most enterprises won't adopt it at scale because they need mature ecosystems, enterprise support, and well-established best practices. Similarly, CockroachDB offers high resilience and global distribution, but enterprises often prefer PostgreSQL with extensions like Citus for distributed workloads, because this lets them keep their existing PostgreSQL expertise, tools, and ecosystem while gaining similar distributed capabilities without a full migration. The cost and complexity of migration often outweigh the benefits of a single feature, making modernization of existing databases a far more practical solution.

However, these specialized databases often fall short in real-world use cases, lacking the robustness, ecosystem support, and decades of optimizations that established databases provide. For instance, Google’s Spanner introduced strong consistency across distributed environments, but its high operational complexity and vendor lock-in made it impractical for many businesses outside of Google’s ecosystem. Similarly, ArangoDB attempted to merge graph, document, and key-value storage into a single engine, but struggled with widespread adoption due to its steep learning curve and lack of a mature support network. Instead of constantly switching to new, unproven solutions, modernizing and extending existing databases offers a far more efficient and sustainable path forward.

The Strength of Established Databases


In reality, PostgreSQL, MySQL, and MongoDB have been refined over many years, in some cases decades, and are backed by massive ecosystems, battle-tested reliability, and proven performance. PostgreSQL, for instance, has been in development since the 1980s and is now used by major organizations like Apple and Netflix, which rely on its robustness, extensibility, and strong support for ACID compliance to manage large-scale, high-performance applications. Apple reportedly uses PostgreSQL for data integrity and scalability across various internal services, while Netflix reportedly leverages it for analytics and real-time data processing to support its recommendation engine and content management systems.

What Modern Infrastructure Needs

1. Instant Provisioning

Developers shouldn’t have to wait hours—or even minutes—to spin up a new database environment. In modern infrastructure, database provisioning should be as simple as deploying an application container. Whether it’s for development, testing, or production, provisioning should happen instantly, without manual setup.

2. Database-per-Tenant Model

Multi-tenancy is the backbone of SaaS, yet many database architectures still rely on shared schemas or complex partitioning techniques. Instead, the future lies in database-per-tenant architectures that provide isolation, security, and scalability while avoiding noisy neighbors and limiting the reach of a bad schema migration. A poorly planned schema migration can lead to downtime, data inconsistencies, or even data loss, especially in large-scale applications where multiple services rely on the same database structure. For example, adding a new column with a default value to a large table in a high-traffic system can, depending on the database engine and version, lock or rewrite the table and degrade performance unless the change is planned carefully or run during off-peak hours.
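To make the isolation argument concrete, here is a minimal sketch of a database-per-tenant layout. It is an illustration only, not Guepard's implementation: the `TenantRegistry` class, the `orders` table, and the tenant names are hypothetical, and in-memory SQLite databases stand in for separate PostgreSQL, MySQL, or MongoDB instances so the example runs with nothing but the Python standard library.

```python
import sqlite3


class TenantRegistry:
    """Hypothetical registry that gives every tenant its own database.

    In-memory SQLite databases are used as stand-ins for dedicated
    PostgreSQL/MySQL/MongoDB databases (an assumption for this sketch).
    """

    def __init__(self):
        self._connections = {}

    def provision(self, tenant_id):
        """Create a fresh, isolated database for a new tenant."""
        if tenant_id in self._connections:
            raise ValueError(f"tenant {tenant_id!r} is already provisioned")
        conn = sqlite3.connect(":memory:")
        conn.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT NOT NULL)"
        )
        conn.commit()
        self._connections[tenant_id] = conn
        return conn

    def connection_for(self, tenant_id):
        """Route a request to the database owned by this tenant."""
        return self._connections[tenant_id]

    def migrate(self, tenant_id, statement):
        """Apply a schema change to one tenant's database only."""
        conn = self.connection_for(tenant_id)
        conn.execute(statement)
        conn.commit()


def column_names(conn, table):
    # PRAGMA table_info returns one row per column; index 1 is the name.
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


registry = TenantRegistry()
acme = registry.provision("acme")
globex = registry.provision("globex")

# Data written for one tenant lives only in that tenant's database.
acme.execute("INSERT INTO orders (item) VALUES (?)", ("laptop",))
acme.commit()

# A schema migration can be rolled out tenant by tenant.
registry.migrate("acme", "ALTER TABLE orders ADD COLUMN status TEXT DEFAULT 'new'")

globex_rows = globex.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(globex_rows)
print(column_names(acme, "orders"))
print(column_names(globex, "orders"))
```

In this sketch the row inserted for one tenant is never visible to the other, and the migration touches a single tenant's schema, so a failed change would affect one customer rather than all of them. The trade-off is that every schema change has to be rolled out once per tenant, which is where provisioning and automation tooling matter.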

3. Shift-Left for Databases

Just as DevOps shifted infrastructure concerns left into the development cycle, databases need the same treatment. Developers should be able to branch, test, and roll back database changes as easily as they do with code. Infrastructure should empower teams to experiment without fear of breaking production.

For example, shift-left database practices can reduce deployment failures by catching issues earlier in the development cycle. Git-style data branching lets developers test schema changes in isolated environments before merging them into production, which helps prevent downtime and keeps teams consistent.
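The branch, test, and promote workflow described above can be sketched as follows. This is a simplified illustration under stated assumptions, not how Guepard or any particular product implements branching: the helper names `create_branch` and `try_migration` are hypothetical, the "branch" is a full copy made with SQLite's backup API, and "merging" simply re-applies the validated statements to the main database.

```python
import sqlite3


def create_branch(source):
    """Copy the source database into a new in-memory database (the branch)."""
    branch = sqlite3.connect(":memory:")
    source.backup(branch)  # sqlite3.Connection.backup, available since Python 3.7
    return branch


def try_migration(main, statements, check):
    """Run statements on a branch first; touch main only if they succeed.

    `check` is a caller-supplied function that inspects the migrated branch
    and returns True when the result looks correct.
    """
    branch = create_branch(main)
    try:
        for statement in statements:
            branch.execute(statement)
        branch.commit()
        if not check(branch):
            return False
    except sqlite3.Error:
        # The failure happened on the throwaway copy; main is untouched.
        return False
    finally:
        branch.close()

    # The change was validated on the branch, so apply it to main.
    for statement in statements:
        main.execute(statement)
    main.commit()
    return True


main = sqlite3.connect(":memory:")
main.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
main.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [("a@example.com",), ("a@example.com",)],  # duplicate on purpose
)
main.commit()

# This migration fails on the branch because of the duplicate email.
bad = try_migration(
    main,
    ["CREATE UNIQUE INDEX idx_users_email ON users (email)"],
    lambda db: True,
)

# This migration passes its check on the branch and is then applied to main.
good = try_migration(
    main,
    ["ALTER TABLE users ADD COLUMN plan TEXT DEFAULT 'free'"],
    lambda db: db.execute(
        "SELECT COUNT(*) FROM users WHERE plan = 'free'"
    ).fetchone()[0] == 2,
)

main_columns = [row[1] for row in main.execute("PRAGMA table_info(users)")]
main_indexes = main.execute(
    "SELECT name FROM sqlite_master WHERE type = 'index'"
).fetchall()
print(bad, good, main_columns, main_indexes)
```

Copying the whole database is only practical for small datasets; the point of the sketch is the control flow, in which a failing change is discovered on a disposable copy instead of in production.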

How Guepard Bridges the Gap

At Guepard, we don’t believe in reinventing databases. Instead, we enhance the databases you already trust, like PostgreSQL, MySQL, and MongoDB, by modernizing the way they’re deployed, managed, and integrated into your workflows:

Instant provisioning: Spin up a fully isolated database in seconds.

Database-per-tenant model: Each customer or team gets their own dedicated database, ensuring security and performance.

Data branching: Create and merge database branches as easily as Git, enabling true shift-left development for data.

With instant provisioning, a dedicated database per tenant, and seamless data branching, Guepard simplifies database operations, enhances security, and accelerates development cycles, so teams can focus on building great applications instead of wrestling with database management.
