Agentic AI: Single vs Multi-Agent Systems

Demonstrated by building a tech news agent in LangGraph. The post Agentic AI: Single vs Multi-Agent Systems appeared first on Towards Data Science.

One of the interesting aspects of agentic AI systems is the difference between building a single-agent and a multi-agent workflow, or, put differently, between working with more flexible and more controlled systems.

This article will help you understand what agentic AI is, how to build simple workflows with LangGraph, and the differences in results you can achieve with the different architectures. I’ll demonstrate this by building a tech news agent with various data sources.

As for the use case, I’m a bit obsessed with getting automatic news updates tailored to my preferences, without drowning in information overload every day.

Gathering and summarizing research is one of those areas where agentic AI can really shine.

So follow along while I keep trying to make AI do the grunt work for me, and we’ll see how a single-agent setup compares to a multi-agent one.

I always keep my work jargon-free, so if you’re new to agentic AI, this piece should help you understand what it is and how to work with it. If you’re not new to it, you can scroll past some of the sections.

Agentic AI (& LLMs)

Agentic AI is about programming with natural language. Instead of writing rigid, explicit code, you instruct large language models (LLMs) in plain language to route data and perform actions that automate tasks.

Using natural language in workflows isn’t new; we’ve used NLP for years to extract and process data. What’s new is how much freedom we can now give language models, allowing them to handle ambiguity and make decisions dynamically.

But just because LLMs can understand nuanced language doesn’t mean they inherently validate facts or maintain data integrity. I see them primarily as a communication layer that sits on top of structured systems and existing data sources.

I usually explain it like this to non-technical people: they work a bit like we do. If we don’t have access to clean, structured data, we start making things up. Same with LLMs. They generate responses based on patterns, not truth-checking.

So, just like us, they do their best with what they’ve got. If we want better output, we need to build systems that give them reliable data to work with. That’s why, in agentic systems, we integrate ways for them to interact with different data sources, tools, and systems.

Now, just because we can use these larger models in more places doesn’t mean we should. LLMs shine at interpreting nuanced natural language: think customer service, research, or human-in-the-loop collaboration.

But for structured tasks, like extracting numbers and sending them somewhere, you should use traditional approaches. LLMs aren’t inherently better at math than a calculator, so instead of having an LLM do calculations, you give it access to a calculator.
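To make the calculator idea concrete, here is a minimal, framework-free sketch of tool calling: the model only decides which tool to call and with what arguments (represented here as a JSON string a model might emit), and ordinary code does the arithmetic. The `TOOLS` registry and `run_tool_call` helper are hypothetical names for illustration, not LangGraph or LangChain APIs.

```python
import ast
import json
import operator

# Only plain numbers and these operators are allowed in expressions.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def _eval(node: ast.AST) -> float:
    """Recursively evaluate a restricted arithmetic syntax tree."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError(f"Unsupported expression: {ast.dump(node)}")


def calculator(expression: str) -> float:
    """Deterministic tool: the LLM never does the math itself."""
    return _eval(ast.parse(expression, mode="eval").body)


# Hypothetical registry of tools the agent is allowed to call.
TOOLS = {"calculator": calculator}


def run_tool_call(raw_call: str):
    """Execute a tool call emitted as JSON, e.g.
    {"name": "calculator", "args": {"expression": "2 + 2"}}."""
    call = json.loads(raw_call)
    tool = TOOLS[call["name"]]
    return tool(**call["args"])
```

Parsing with `ast` and whitelisting operators, rather than calling `eval`, keeps the tool from executing arbitrary code the model might produce.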

So whenever you can build parts of a workflow programmatically, that will still be the better option.

Nevertheless, LLMs are great at adapting to messy real-world input and interpreting vague instructions, so combining the two can be a great way to build systems.

Agentic Frameworks

I know a lot of people jump straight to CrewAI or AutoGen here, but I’d recommend checking out LangGraph, Agno, Mastra, and Smolagents. Based on my research, these frameworks have received some of the strongest feedback so far.

LangGraph is more technical and can be complex, but it’s the preferred choice for many developers. Agno is easier to get started with but less technical. Mastra is a solid option for JavaScript developers, and Smolagents shows a lot of promise as a lightweight alternative.

In this case, I’ve gone with LangGraph — built on top of LangChain — not because it’s my favorite, but because it’s becoming a go-to framework that more devs are adopting.

So, it’s worth being familiar with.

It does have a lot of abstractions, though, and you may want to rebuild some of them just to control and understand them better.

I won’t go into detail on LangGraph here, but I’ve built a quick guide for anyone who needs a refresher.

As for this use case, you’ll be able to run the workflow without coding anything, but if you’re here to learn, you may also want to understand how it works.

Choosing an LLM

Now, you might jump into this and wonder why I’m choosing certain LLMs as the base for the agents.

You can’t just pick any model, especially when working within a framework. They need to be compatible. Key things to look for are tool calling support and the ability to generate structured outputs.
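Structured output matters because downstream code has to parse whatever the model returns. As a minimal sketch, assume the model was asked to reply with JSON containing `category` and `keywords` (hypothetical field names): the reply is validated before it’s passed on, and anything malformed is rejected rather than guessed at. For models that support it, LangChain chat models offer `with_structured_output` for this; the plain-Python version below only shows what the feature buys you.

```python
import json
from dataclasses import dataclass

# Hypothetical set of categories a news-query request may target.
ALLOWED_CATEGORIES = {"companies", "people", "websites", "subjects"}


@dataclass
class NewsRequest:
    category: str
    keywords: list[str]


def parse_model_output(raw: str) -> NewsRequest:
    """Parse and validate a JSON reply; raise ValueError if it doesn't fit."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Model did not return valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    if data.get("category") not in ALLOWED_CATEGORIES:
        raise ValueError(f"Unknown category: {data.get('category')!r}")
    keywords = data.get("keywords")
    if not isinstance(keywords, list) or not all(isinstance(k, str) for k in keywords):
        raise ValueError("'keywords' must be a list of strings")
    return NewsRequest(category=data["category"], keywords=keywords)
```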

I’d recommend checking Hugging Face’s Agent Leaderboard to see which models actually perform well in real-world agentic systems.

For this workflow, you should be fine using models from Anthropic, OpenAI, or Google. If you’re considering another one, just make sure it’s compatible with LangChain.

Single vs. Multi-Agent Systems

If you build a system around one LLM and give it a bunch of tools you want it to use, you’re working with a single-agent workflow. It’s fast, and if you’re new to agentic AI, it might seem like the model should just figure things out on its own.

But the thing is, these workflows are just another form of system design. Like any software project, you need to plan the process, define the steps, structure the logic, and decide how each part should behave.

This is where multi-agent workflows come in.

Not all of them are hierarchical or linear, though; some are collaborative. Collaborative workflows fall on the more flexible side, which I find harder to work with, at least with the capabilities models have today.

That said, collaborative workflows do still break different functions out into their own modules.

Single-agent and collaborative workflows are great to start with when you’re just playing around, but they don’t always give you the precision needed for actual tasks.

For the workflow I’ll build here, I already know how the APIs should be used, so it’s my job to guide the system to use them the right way.

We’ll compare a single-agent setup with a hierarchical multi-agent system, in which a lead agent delegates tasks across a small team, so you can see how each behaves in practice.

Building a Single Agent Workflow

With a single thread — i.e., one agent — we give an LLM access to several tools. It’s up to the agent to decide which tool to use and when, based on the user’s question.
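Conceptually, a single-agent workflow is a loop: the model looks at the conversation so far, either requests a tool or answers, and the loop executes whichever tool it picked. The sketch below substitutes a scripted fake model (`scripted_model`, hypothetical) for a real LLM so it runs offline; the tool names and data are placeholders, not the article’s actual API endpoints.

```python
from typing import Callable


# Placeholder tools standing in for the news API endpoints.
def trending_keywords(category: str) -> list[str]:
    data = {"companies": ["OpenAI", "Nvidia"], "subjects": ["LLMs"]}
    return data.get(category, [])


def keyword_sources(keyword: str) -> list[str]:
    return [f"https://example.com/{keyword.lower()}"]


TOOLS: dict[str, Callable] = {
    "trending_keywords": trending_keywords,
    "keyword_sources": keyword_sources,
}


def scripted_model(messages: list[dict]) -> dict:
    """Fake LLM that picks the next step from what it has seen so far.
    A real model makes this choice itself, which is where control is lost."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "trending_keywords", "args": {"category": "companies"}}
    if len(tool_results) == 1:
        first = tool_results[0]["content"][0]
        return {"tool": "keyword_sources", "args": {"keyword": first}}
    # Deliberately answers from the latest result only, mimicking the
    # kind of shortcut a real agent can take.
    return {"answer": f"Summary based on: {tool_results[-1]['content']}"}


def run_single_agent(question: str, model=scripted_model, max_steps: int = 5) -> str:
    """Loop: ask the model, run the tool it chose, feed the result back."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = model(messages)
        if "answer" in decision:
            return decision["answer"]
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "name": decision["tool"], "content": result})
    return "Stopped: step limit reached"
```

Everything about which tools run, and how many, lives inside the model’s decision; the surrounding code can only cap the number of steps.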

The challenge with a single agent is control.

No matter how detailed the system prompt is, the model may not follow our requests (this can happen in more controlled environments too). If we give it too many tools or options, there’s a good chance it won’t use all of them or even use the right ones.

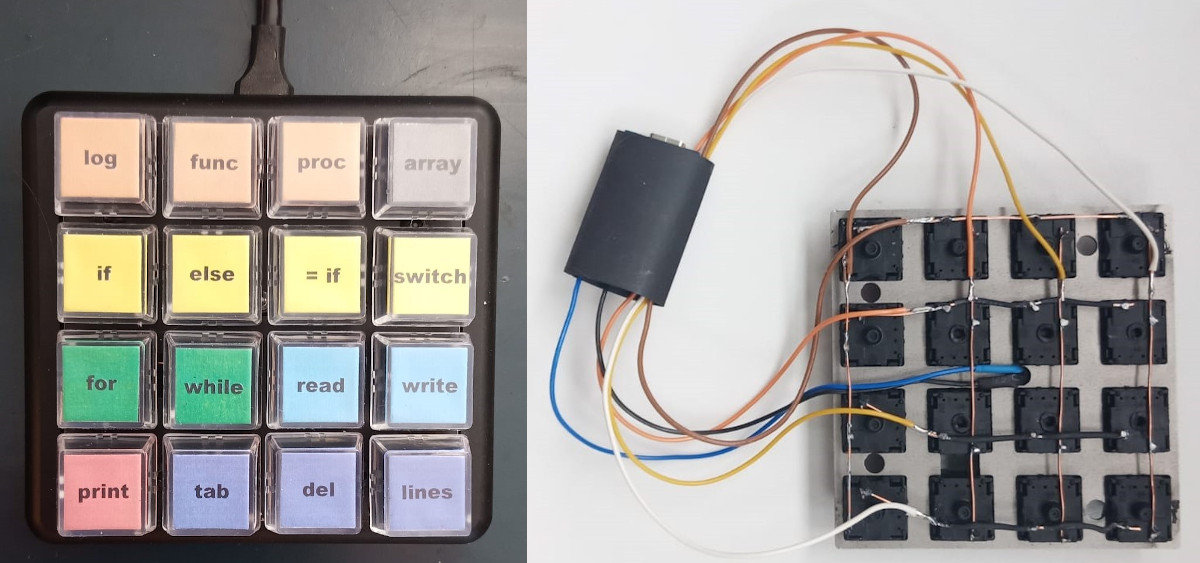

To illustrate this, we’ll build a tech news agent with access to several API endpoints that serve custom data, with several options exposed as parameters on the tools. It’s up to the agent to decide how many to use and how to set up the final summary.

Remember, I build these workflows using LangGraph. I won’t go into LangGraph in depth here, so if you want to learn the basics to be able to tweak the code, go here.

You can find the single-agent workflow here. To run it, you’ll need LangGraph Studio and the latest version of Docker installed.

Once you’re set up, open the project folder on your computer, add your GOOGLE_API_KEY in a .env file, and save. You can get a key from Google here.
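If you want to confirm the key is actually being picked up before booting the graph, here is a small stdlib-only sketch that reads simple `KEY=value` lines from a `.env` file into the environment and fails early if a required key is missing. Many projects use the `python-dotenv` package for this instead; `load_env_file` and `require_keys` are hypothetical helper names.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip().strip('"').strip("'")
    # Don't overwrite variables already set in the real environment.
    for key, value in values.items():
        os.environ.setdefault(key, value)
    return values


def require_keys(*names: str) -> None:
    """Raise a clear error instead of failing deep inside a model call."""
    missing = [n for n in names if not os.environ.get(n)]
    if missing:
        raise RuntimeError(f"Missing API keys: {', '.join(missing)}")
```

For this workflow you would call `load_env_file()` and then `require_keys("GOOGLE_API_KEY")`; the multi-agent version also needs `OPENAI_API_KEY`.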

Gemini 2.0 Flash has a generous free tier, so running this shouldn’t cost anything (though you may run into errors if you use it too much).

If you want to switch to another LLM or tools, you can tweak the code directly. But, again, remember the LLM needs to be compatible.

After setup, launch LangGraph Studio and select the correct folder.

This will boot up our workflow so we can test it.

If you run into issues booting this up, double-check that you’re using the latest version of Docker.

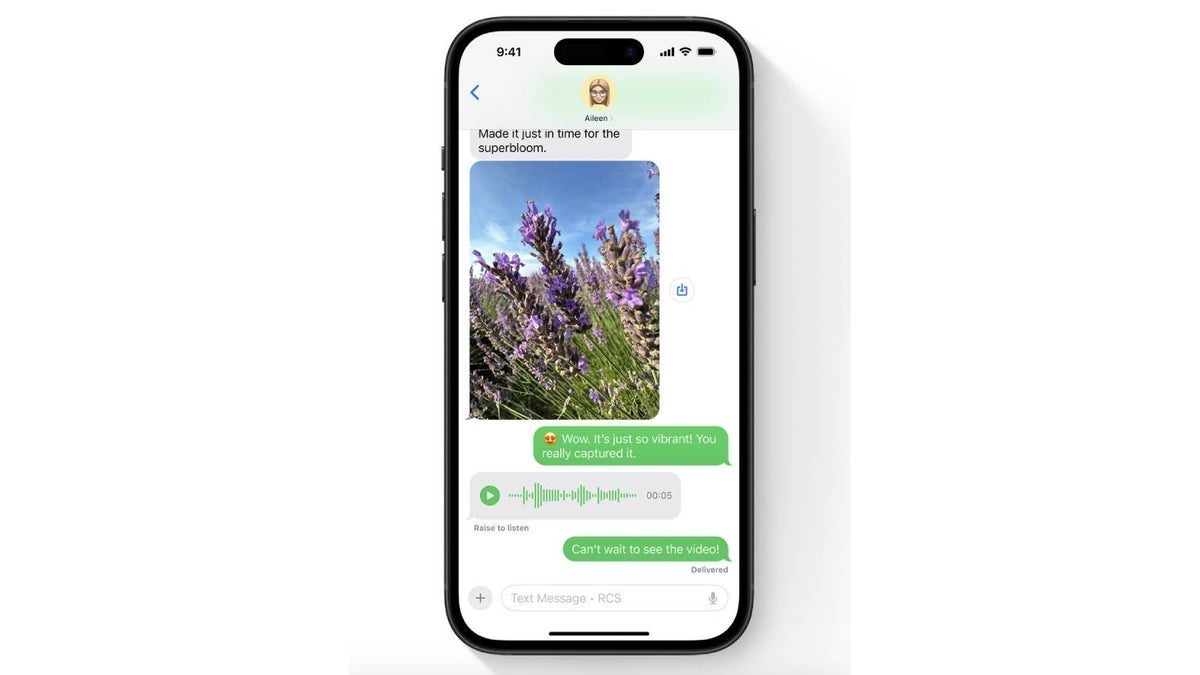

Once it’s loaded, you can test the workflow by entering a human message and hitting submit.

You can see me run the workflow below.

You can see the final response below.

For this prompt, it decided to check weekly trending keywords filtered by the ‘companies’ category only, then fetched the sources for those keywords and summarized them for us.

It struggled to give us a unified summary: it simply used the last information it retrieved and failed to use the rest of the research.

In reality we want it to fetch both trending and top keywords within several categories (not just companies), check sources, track specific keywords, and reason and summarize it all nicely before returning a response.

We can, of course, probe it and keep asking questions, but as you can imagine, once we need something more complex, it will start taking shortcuts in the workflow.

The key thing is, an agent system isn’t going to think the way we expect on its own; we have to actually orchestrate it to do what we want.

So a single agent is great for something simple, but it may not think or behave the way we expect.

This is why going for a more complex system where each agent is responsible for one thing can be really useful.

Testing a Multi-Agent Workflow

Building multi-agent workflows is a lot more difficult than building a single agent with access to some tools. You need to think carefully about the architecture beforehand and about how data should flow between the agents.

The multi-agent workflow I’ll set up here uses two different teams — a research team and an editing team — with several agents under each.

Every agent has access to a specific set of tools.

We’re introducing some new tools, like a research pad that acts as a shared space — one team writes their findings, the other reads from it. The last LLM will read everything that has been researched and edited to make a summary.
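One way to picture the research pad is as a shared, append-only document: research agents write findings to it, and the editing team and final summarizer read from it. A minimal in-memory sketch follows; `ResearchPad` is a hypothetical name, not the workflow’s actual implementation.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResearchPad:
    """Shared space: one team writes findings, the other reads them."""
    entries: list[tuple[str, str]] = field(default_factory=list)

    def write(self, author: str, finding: str) -> None:
        """Append a finding, tagged with the agent that produced it."""
        self.entries.append((author, finding))

    def read(self, exclude_author: Optional[str] = None) -> str:
        """Return all findings as text, optionally skipping one author."""
        lines = [
            f"[{author}] {finding}"
            for author, finding in self.entries
            if author != exclude_author
        ]
        return "\n".join(lines)
```

Tagging each entry with its author lets the editors and the summarizer see where a finding came from without needing the full message history.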

An alternative to using a research pad is to store data in a scratchpad in state, isolating short-term memory for each team or agent. But that also means thinking carefully about what each agent’s memory should include.

I also decided to build out the tools a bit more to provide richer data upfront, so the agents don’t have to fetch sources for each keyword individually. Here I’m using normal programmatic logic because I can.

A key thing to remember: if you can use normal programming logic, do it.
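Doing the looping in code rather than in the agent can be sketched like this, assuming two hypothetical fetch functions that stand in for the news API’s keyword and source endpoints: a single deterministic tool call returns every keyword already paired with its sources.

```python
from typing import Callable


def enriched_trending(
    category: str,
    fetch_keywords: Callable[[str], list[str]],
    fetch_sources: Callable[[str], list[str]],
    max_sources: int = 3,
) -> list[dict]:
    """One tool call returns keywords *with* their sources, so the agent
    doesn't have to decide to loop over keywords itself."""
    results = []
    for keyword in fetch_keywords(category):
        # Cap sources per keyword to keep the agent's context small.
        sources = fetch_sources(keyword)[:max_sources]
        results.append({"keyword": keyword, "category": category, "sources": sources})
    return results
```

The agent still chooses whether to call the tool, but it can no longer forget to fetch sources for some of the keywords.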

Since we’re using multiple agents, you can lower costs by using cheaper models for most agents and reserving the more expensive ones for the important stuff.

Here, I’m using Gemini 2.0 Flash for all agents except the summarizer, which runs on OpenAI’s GPT-4o. If you want higher-quality summaries, you can use an even more advanced LLM with a larger context window.
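One simple way to keep that split explicit is a per-agent model table that every node looks up, so swapping a model is a one-line change. This is a minimal sketch: the agent names are hypothetical, and the model ID strings are assumptions you should check against each provider’s current model list.

```python
# Hypothetical agent names; verify model IDs against provider docs.
DEFAULT_MODEL = "gemini-2.0-flash"

MODEL_FOR_AGENT = {
    "supervisor": DEFAULT_MODEL,
    "trending_researcher": DEFAULT_MODEL,
    "keyword_tracker": DEFAULT_MODEL,
    "editor": DEFAULT_MODEL,
    # The summarizer reads everything, so it gets the stronger model.
    "summarizer": "gpt-4o",
}


def model_for(agent: str) -> str:
    """Fall back to the cheaper default for any agent not listed."""
    return MODEL_FOR_AGENT.get(agent, DEFAULT_MODEL)
```

In a LangChain setup, the returned ID would then be passed to the matching chat model class, for example `ChatGoogleGenerativeAI(model=...)` from `langchain_google_genai` or `ChatOpenAI(model=...)` from `langchain_openai`.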

The workflow is set up for you here. Before loading it, make sure to add both your OpenAI and Google API keys in a .env file.

In this workflow, the routes (edges) are set up dynamically instead of manually as we did with the single agent, so the code will look more complex if you peek into it.
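Dynamic routing usually means a supervisor node writes the name of the next worker into state, and the runtime follows that value instead of a hard-coded edge. The framework-free sketch below shows the control flow with plain functions. In LangGraph, the same idea is typically expressed with `add_conditional_edges`, but the node names and the rule-based supervisor here are hypothetical stand-ins for an LLM decision.

```python
from typing import Callable

State = dict  # shared state passed between nodes
WORKERS = ("research_team", "editing_team", "summarizer")


def supervisor(state: State) -> State:
    """Stand-in for an LLM supervisor: pick the next worker or FINISH."""
    done = state.get("done", [])
    for worker in WORKERS:
        if worker not in done:
            return {**state, "done": done, "next": worker}
    return {**state, "done": done, "next": "FINISH"}


def make_worker(name: str) -> Callable[[State], State]:
    """Build a placeholder node that just records that it ran."""
    def worker(state: State) -> State:
        log = state.get("log", []) + [f"{name} ran"]
        return {**state, "log": log, "done": state["done"] + [name]}
    return worker


NODES = {name: make_worker(name) for name in WORKERS}


def run_graph(state: State, max_steps: int = 10) -> State:
    for _ in range(max_steps):
        state = supervisor(state)
        if state["next"] == "FINISH":
            return state
        # The edge is chosen at runtime from state["next"].
        state = NODES[state["next"]](state)
    raise RuntimeError("Step limit reached without FINISH")
```

Because the route lives in state, adding a worker means registering a node and letting the supervisor name it, rather than wiring new edges by hand.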

Once you boot up the workflow in LangGraph Studio — same process as before — you’ll see the graph with all these nodes ready.

LangGraph Studio lets us visualize how the system delegates work between agents when we run it—just like we saw in the simpler workflow above.

Since I understand the tools each agent is using, I can prompt the system in the right way. But regular users won’t know how to do this properly. So if you’re building something similar, I’d suggest introducing an agent that transforms the user’s query into something the other agents can actually work with.
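Such a query-rewriting step can be as simple as turning free text into a structured brief the research agents can act on. The sketch below does this with keyword matching so it runs offline; in a real system it would be an LLM call with structured output. The `ResearchBrief` fields (`timeframe`, `categories`, `tracked_keywords`) are hypothetical.

```python
import re
from dataclasses import dataclass, field

KNOWN_CATEGORIES = ("companies", "people", "websites", "subjects")


@dataclass
class ResearchBrief:
    timeframe: str = "week"
    categories: list[str] = field(default_factory=list)
    tracked_keywords: list[str] = field(default_factory=list)


def to_brief(user_query: str) -> ResearchBrief:
    """Rule-based stand-in for an LLM that normalizes the user's request."""
    text = user_query.lower()
    brief = ResearchBrief()
    if "today" in text or "daily" in text:
        brief.timeframe = "day"
    # Use the categories the user mentions, or all of them if none are named.
    brief.categories = [c for c in KNOWN_CATEGORIES if c in text] or list(KNOWN_CATEGORIES)
    # Assumes tracked keywords follow "keywords:" as a comma/"and" list.
    match = re.search(r"keywords?:\s*(.+)$", user_query, flags=re.IGNORECASE)
    if match:
        parts = re.split(r",|\band\b", match.group(1))
        brief.tracked_keywords = [p.strip(" .\"'") for p in parts if p.strip(" .\"'")]
    return brief
```

Applied to the test prompt used below, this yields all four categories, a weekly timeframe, and the keywords AI, Google, Microsoft, and Large Language Models.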

We can test it by entering a message:

“I’m an investor and I’m interested in getting an update for what has happened within the week in tech, and what people are talking about (this means categories like companies, people, websites and subjects are interesting). Please also track these specific keywords: AI, Google, Microsoft, and Large Language Models”

Then choose “supervisor” as the Next parameter (we’d normally do this programmatically).

This workflow will take several minutes to run, unlike the single-agent workflow we ran earlier which finished in under a minute.

So be patient while the tools are running.

In general, these systems take time to gather and process information and that’s just something we need to get used to.

The final summary will look something like this:

You can read the whole thing here instead if you want to check it out.

The news will obviously vary depending on when you run the workflow. I ran it on the 28th of March, so the example report reflects that date.

It should save the summary to a text document, but if you’re running this inside a container, you likely won’t be able to access that file easily. It’s better to send the output somewhere else — like Google Docs or via email.

As for the results, I’ll let you judge for yourself the difference between the more complex system and the simple one, and how much more control the complex one gives us over the process.

Finishing Notes

I’m working with a good data source here. Without that, you’d need to add a lot more error handling, which would slow everything down even more.

Clean and structured data is key. Without it, the LLM won’t perform at its best.

Even with solid data, it’s not perfect. You still need to work on the agents to make sure they do what they’re supposed to.

You’ve probably already noticed the system works — but it’s not quite there yet.

There are still several things that need improvement: parsing the user’s query into a more structured format, adding guardrails so agents always use their tools, summarizing more effectively to keep the research doc concise, improving error handling, and introducing long-term memory to better understand what the user actually needs.
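As an example of one of those improvements, a guardrail that forces tool use can be a thin wrapper: if an agent answers without calling any tool, it is sent back with a reminder, up to a retry limit. This is a minimal sketch; `agent_step` is a hypothetical callable returning a message dict with optional `tool_calls`, not a LangGraph API.

```python
from typing import Callable

AgentStep = Callable[[list[dict]], dict]

REMINDER = "You must call at least one of your tools before answering."


def with_tool_guardrail(agent_step: AgentStep, max_retries: int = 2) -> AgentStep:
    """Wrap an agent so replies without tool calls are pushed back."""
    def guarded(messages: list[dict]) -> dict:
        history = list(messages)
        for _ in range(max_retries + 1):
            reply = agent_step(history)
            if reply.get("tool_calls"):
                return reply
            # No tool used: push back instead of accepting an unsupported answer.
            history = history + [reply, {"role": "user", "content": REMINDER}]
        raise RuntimeError("Agent did not use its tools within the retry limit")
    return guarded
```

Raising after the retry limit, instead of silently accepting the answer, makes it visible when an agent keeps skipping its tools.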

State (short-term memory) is especially important if you want to optimize for performance and cost.

Right now, we’re just pushing every message into state and giving all agents access to it, which isn’t ideal. We really want to separate state between the teams. I haven’t done that here, but you can try it by introducing a scratchpad in the state schema to isolate what each team knows.
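Here is a minimal sketch of that idea, assuming a state schema with the full message history plus a private scratchpad per team (`GraphState` and the team keys are hypothetical). Each team’s agents receive only the task and their own notes rather than every message in the run. In LangGraph, a schema like this would typically be a `TypedDict`, optionally with reducers via `Annotated`; here it is plain Python so it runs on its own.

```python
from typing import TypedDict


class GraphState(TypedDict):
    task: str
    messages: list[str]                # full history, e.g. for the supervisor
    scratchpads: dict[str, list[str]]  # private notes per team


def view_for(state: GraphState, team: str) -> dict:
    """What a team's agents actually see: the task plus their own notes."""
    return {"task": state["task"], "notes": list(state["scratchpads"].get(team, []))}


def add_note(state: GraphState, team: str, note: str) -> GraphState:
    """Return a new state with the note appended to one team's scratchpad."""
    pads = {k: list(v) for k, v in state["scratchpads"].items()}
    pads.setdefault(team, []).append(note)
    return {**state, "scratchpads": pads}
```

Keeping each team’s context small is what makes this pay off for cost and performance: fewer tokens per call, and less irrelevant material for each agent to get distracted by.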

Regardless, I hope this was a fun way to see the different results we can get by building different agentic workflows.

If you want to see more of what I’m working on, you can follow me here but also on Medium, GitHub, or LinkedIn (though I’m hoping to move over to X soon). I also have a Substack, where I hope to publish shorter pieces.
