The Case for Centralized AI Model Inference Serving

Optimizing highly parallel AI algorithm execution

The post The Case for Centralized AI Model Inference Serving appeared first on Towards Data Science.

In this post, we address a common challenge: efficiently processing large-scale inputs through algorithmic pipelines that include deep learning models. A typical solution is to run multiple independent jobs, each responsible for processing a single input. This setup is often managed with job orchestration frameworks (e.g., Kubernetes). However, when deep learning models are involved, this approach can become inefficient as loading and executing the same model in each individual process can lead to resource contention and scaling limitations. As AI models become increasingly prevalent in algorithmic pipelines, it is crucial that we revisit the design of such solutions.

In this post we evaluate the benefits of centralized inference serving, where a dedicated inference server handles prediction requests from multiple parallel jobs. We define a toy experiment in which we run an image-processing pipeline based on a ResNet-152 image classifier on 1,000 individual images. We compare the runtime performance and resource utilization of the following two implementations:

- Decentralized inference — each job loads and runs the model independently.

- Centralized inference — all jobs send inference requests to a dedicated inference server.

To keep the experiment focused, we make several simplifying assumptions:

- Instead of using a full-fledged job orchestrator (like Kubernetes), we implement parallel process execution using Python’s multiprocessing module.

- While real-world workloads often span multiple nodes, we run everything on a single node.

- Real-world workloads typically include multiple algorithmic components. We limit our experiment to a single component — a ResNet-152 classifier running on a single input image.

- In a real-world use case, each job would process a unique input image. To simplify our experiment setup, each job will process the same kitten.jpg image.

- We will use a minimal deployment of a TorchServe inference server, relying mostly on its default settings. Similar results are expected with alternative inference server solutions such as NVIDIA Triton Inference Server or LitServe.

The code is shared for demonstrative purposes only. Please do not interpret our choice of TorchServe — or any other component of our demonstration — as an endorsement of its use.

Toy Experiment

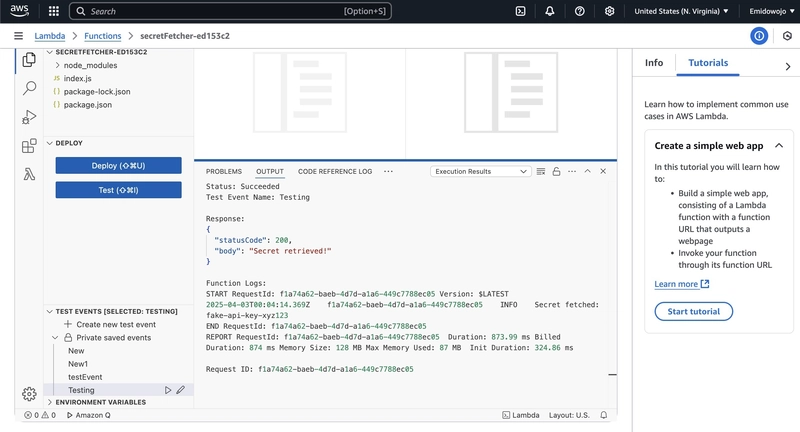

We conduct our experiments on an Amazon EC2 c5.2xlarge instance, with 8 vCPUs and 16 GiB of memory, running a PyTorch Deep Learning AMI (DLAMI). We activate the PyTorch environment using the following command:

source /opt/pytorch/bin/activate

Step 1: Creating a TorchScript Model Checkpoint

We begin by creating a ResNet-152 model checkpoint. Using TorchScript, we serialize both the model definition and its weights into a single file:

import torch
from torchvision.models import resnet152, ResNet152_Weights

model = resnet152(weights=ResNet152_Weights.DEFAULT)
model = torch.jit.script(model)
model.save("resnet-152.pt")

Step 2: Model Inference Function

Our inference function performs the following steps:

- Load the ResNet-152 model.

- Load an input image.

- Preprocess the image to match the input format expected by the model, following the implementation defined here.

- Run inference to classify the image.

- Post-process the model output to return the top five label predictions, following the implementation defined here.

We define a constant MAX_THREADS hyperparameter that we use to restrict the number of threads used for model inference in each process. This is to prevent resource contention between the multiple jobs.

import os, time, psutil
import multiprocessing as mp
import torch
import torch.nn.functional as F
import torchvision.transforms as transforms
from PIL import Image

def predict(image_id):
    # image_id is unused here: every job processes the same kitten.jpg

    # Limit each process to 1 thread
    MAX_THREADS = 1
    os.environ["OMP_NUM_THREADS"] = str(MAX_THREADS)
    os.environ["MKL_NUM_THREADS"] = str(MAX_THREADS)
    torch.set_num_threads(MAX_THREADS)

    # load the model
    model = torch.jit.load('resnet-152.pt').eval()

    # Define image preprocessing steps
    transform = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406],
                             std=[0.229, 0.224, 0.225])
    ])

    # load the image
    image = Image.open('kitten.jpg').convert("RGB")

    # preprocess: apply the transforms and add a batch dimension
    image = transform(image).unsqueeze(0)

    # perform inference
    with torch.no_grad():
        output = model(image)

    # postprocess: keep the five most probable classes
    probabilities = F.softmax(output[0], dim=0)
    probs, classes = torch.topk(probabilities, 5, dim=0)
    probs = probs.tolist()
    classes = classes.tolist()

    return dict(zip(classes, probs))

Step 3: Running Parallel Inference Jobs

We define a function that spawns parallel processes, each processing a single image input. This function:

- Accepts the total number of images to process and the maximum number of concurrent jobs.

- Dynamically launches new processes when slots become available.

- Monitors CPU and memory usage throughout execution.

def process_image(image_id):
    print(f"Processing image {image_id} (PID: {os.getpid()})")
    predict(image_id)

def spawn_jobs(total_images, max_concurrent):
    start_time = time.time()
    max_mem_utilization = 0.
    max_utilization = 0.
    processes = []
    index = 0

    while index < total_images or processes:
        while len(processes) < max_concurrent and index < total_images:
            # Start a new process
            p = mp.Process(target=process_image, args=(index,))
            index += 1
            p.start()
            processes.append(p)

        # sample memory utilization
        mem_usage = psutil.virtual_memory().percent
        max_mem_utilization = max(max_mem_utilization, mem_usage)

        # sample CPU utilization; the 0.1 s interval also paces this polling loop
        cpu_util = psutil.cpu_percent(interval=0.1)
        max_utilization = max(max_utilization, cpu_util)

        # Remove completed processes from list
        processes = [p for p in processes if p.is_alive()]

    total_time = time.time() - start_time
    print(f"\nTotal Processing Time: {total_time:.2f} seconds")
    print(f"Max CPU Utilization: {max_utilization:.2f}%")
    print(f"Max Memory Utilization: {max_mem_utilization:.2f}%")

# The guard keeps child processes from re-running the launcher
# on platforms that start processes with "spawn"
if __name__ == "__main__":
    spawn_jobs(total_images=1000, max_concurrent=32)

Estimating the Maximum Number of Processes

While the optimal number of maximum concurrent processes is best determined empirically, we can estimate an upper bound based on the 16 GiB of system memory and the size of the resnet-152.pt file, 231 MB.
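As a back-of-the-envelope check, the upper bound can be computed directly. The sketch below assumes that each process holds exactly one copy of the 231 MB checkpoint and nothing else, and that "MB" means decimal megabytes. The function name and both assumptions are ours, not part of the experiment code.

```python
def max_model_copies(system_mem_gib: float, model_size_mb: float) -> int:
    """Upper bound on model copies that fit in memory, ignoring all other usage."""
    system_mem_bytes = system_mem_gib * 1024**3  # GiB -> bytes
    model_bytes = model_size_mb * 1000**2        # MB -> bytes (decimal MB assumed)
    return int(system_mem_bytes // model_bytes)


upper_bound = max_model_copies(system_mem_gib=16, model_size_mb=231)
print(f"At most {upper_bound} model copies fit in memory")  # -> 74
```

This is an optimistic ceiling. Each process also carries the Python interpreter, the PyTorch runtime, and intermediate activations, so memory can be expected to saturate at a noticeably lower process count.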

The table below summarizes the runtime results for several configurations:

Although memory becomes fully saturated at 50 concurrent processes, we observe that maximum throughput is achieved at 8 concurrent jobs — one per vCPU. This indicates that beyond this point, resource contention outweighs any potential gains from additional parallelism.

The Inefficiencies of Independent Model Execution

Running parallel jobs that each load and execute the model independently introduces significant inefficiencies and waste:

- Each process needs to allocate the appropriate memory resources for storing its own copy of the AI model.

- AI models are compute-intensive. Executing them in many processes in parallel can lead to resource contention and reduced throughput.

- Loading the model checkpoint file and initializing the model in each process adds overhead and can further increase latency. In the case of our toy experiment, model initialization accounts for roughly 30% (!!) of the overall inference processing time.

A more efficient alternative is to centralize inference execution using a dedicated model inference server. This approach would eliminate redundant model loading and reduce overall system resource utilization.
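To make the contrast concrete, here is a minimal, standard-library-only sketch of the two patterns. It uses threads and an in-process queue as stand-ins for separate jobs and an HTTP inference server, and ToyModel, inference_server, run_centralized, and run_decentralized are hypothetical names introduced purely for illustration. It is not how TorchServe is implemented.

```python
import queue
import threading
from concurrent.futures import Future, ThreadPoolExecutor


class ToyModel:
    """Stand-in for an expensive model; counts how many times it is loaded."""
    load_count = 0

    def __init__(self):
        ToyModel.load_count += 1  # a real model would read its checkpoint here

    def __call__(self, x):
        return x * 2  # placeholder for real inference


def run_decentralized(inputs):
    """Every job builds its own model instance before predicting."""
    return [ToyModel()(x) for x in inputs]


def inference_server(request_queue):
    """Load the model once, then serve requests until a None sentinel arrives."""
    model = ToyModel()
    while True:
        item = request_queue.get()
        if item is None:
            break
        payload, reply = item
        reply.set_result(model(payload))


def submit_job(x, request_queue):
    """A client job: send the input to the server and wait for the answer."""
    reply = Future()
    request_queue.put((x, reply))
    return reply.result()


def run_centralized(inputs, max_concurrent=4):
    request_queue = queue.Queue()
    server = threading.Thread(target=inference_server, args=(request_queue,))
    server.start()
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        results = list(pool.map(lambda x: submit_job(x, request_queue), inputs))
    request_queue.put(None)  # tell the server to shut down
    server.join()
    return results
```

In this toy, running `run_decentralized` on N inputs loads the model N times, while `run_centralized` loads it once regardless of how many client jobs are submitted.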

In the next section we will set up an AI model inference server and assess its impact on resource utilization and runtime performance.

Note: We could have modified our multiprocessing-based approach to share a single model across processes (e.g., using torch.multiprocessing or another solution based on shared memory). However, the inference server demonstration better aligns with real-world production environments, where jobs often run in isolated containers.

TorchServe Setup

The TorchServe setup described in this section loosely follows the resnet tutorial. Please refer to the official TorchServe documentation for more in-depth guidelines.

Installation

The PyTorch environment of our DLAMI comes preinstalled with TorchServe executables. If you are running in a different environment, run the following installation command:

pip install torchserve torch-model-archiver

Creating a Model Archive

The TorchServe Model Archiver packages the model and its associated files into a “.mar” file archive, the format required for deployment on TorchServe. We create a TorchServe model archive file based on our model checkpoint file and using the default image_classifier handler:

mkdir model_store

torch-model-archiver \
    --model-name resnet-152 \
    --serialized-file resnet-152.pt \
    --handler image_classifier \
    --version 1.0 \
    --export-path model_store

TorchServe Configuration

We create a TorchServe config.properties file to define how TorchServe should operate:

model_store=model_store
load_models=resnet-152.mar
models={\
  "resnet-152": {\
    "1.0": {\
      "marName": "resnet-152.mar"\
    }\
  }\
}

# Number of workers per model
default_workers_per_model=1

# Job queue size (default is 100)
job_queue_size=100

After completing these steps, our working directory should look like this:

├── config.properties
├── kitten.jpg
├── model_store
│   └── resnet-152.mar
└── multi_job.py

Starting TorchServe

In a separate shell we start our TorchServe inference server:

source /opt/pytorch/bin/activate

torchserve \
    --start \
    --disable-token-auth \
    --enable-model-api \
    --ts-config config.properties

Inference Request Implementation

We define an alternative prediction function that calls our inference service:

import requests

def predict_client(image_id):
    # Read the raw image bytes; preprocessing happens on the server
    with open('kitten.jpg', 'rb') as f:
        image = f.read()

    response = requests.post(
        "http://127.0.0.1:8080/predictions/resnet-152",
        data=image,
        headers={'Content-Type': 'application/octet-stream'}
    )

    if response.status_code == 200:
        return response.json()
    else:
        print(f"Error from inference server: {response.text}")

Scaling Up the Number of Concurrent Jobs

Now that inference requests are being processed by a central server, we can scale up parallel processing. Because the jobs no longer load and execute the model themselves, we have sufficient CPU resources to run many more of them concurrently. Here we choose 100 processes in accordance with the default job_queue_size capacity of the inference server:

spawn_jobs(total_images=1000, max_concurrent=100)

Results

The performance results are captured in the table below. Keep in mind that the comparative results can vary greatly based on the details of the AI model and the runtime environment.

By using a centralized inference server, we have not only increased overall throughput by more than 2X but also freed significant CPU resources for other computation tasks.

Next Steps

Now that we have effectively demonstrated the benefits of a centralized inference serving solution, we can explore several ways to enhance and optimize the setup. Recall that our experiment was intentionally simplified to focus on demonstrating the utility of inference serving. In real-world deployments, additional enhancements may be required to tailor the solution to your specific needs.

- Custom Inference Handlers: While we used TorchServe’s built-in image_classifier handler, defining a custom handler provides much greater control over the details of the inference implementation.

- Advanced Inference Server Configuration: Inference server solutions will typically include many features for tuning the service behavior according to the workload requirements. In the next sections we will explore some of the features supported by TorchServe.

- Expanding the Pipeline: Real-world pipelines will typically include more algorithmic blocks and more sophisticated AI models than we used in our experiment.

- Multi-Node Deployment: While we ran our experiments on a single compute instance, production setups will typically include multiple nodes.

- Alternative Inference Servers: While TorchServe is a popular choice and relatively easy to set up, there are many alternative inference server solutions that may provide additional benefits and may better suit your needs. Importantly, it was recently announced that TorchServe would no longer be actively maintained. See the documentation for details.

- Alternative Orchestration Frameworks: In our experiment we use Python multiprocessing. Real-world workloads will typically use more advanced orchestration solutions.

- Utilizing Inference Accelerators: While we executed our model on a CPU, using an AI accelerator (e.g., an NVIDIA GPU, a Google Cloud TPU, or an AWS Inferentia) can drastically improve throughput.

- Model Optimization: Optimizing your AI models can greatly increase efficiency and throughput.

- Auto-Scaling for Inference Load: In some use cases inference traffic will fluctuate, requiring an inference server solution that can scale its capacity accordingly.

In the next sections we explore two simple ways to enhance our TorchServe-based inference server implementation. We leave the discussion on other enhancements to future posts.

Batch Inference with TorchServe

Many model inference service solutions support the option of grouping inference requests into batches. This usually results in increased throughput, especially when the model is running on a GPU.
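The grouping logic can be illustrated with a small standard-library sketch: wait for a first request, then keep collecting until either the batch is full or a delay budget runs out. This is a generic illustration of dynamic batching, not TorchServe's actual implementation, and the names collect_batch, batch_size, and max_batch_delay_ms are our own.

```python
import queue
import time


def collect_batch(request_queue, batch_size, max_batch_delay_ms):
    """Block for the first request, then gather more until the batch is full
    or the delay budget has elapsed."""
    batch = [request_queue.get()]  # wait for at least one request
    deadline = time.monotonic() + max_batch_delay_ms / 1000.0
    while len(batch) < batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(request_queue.get(timeout=remaining))
        except queue.Empty:
            break  # delay budget spent while waiting
    return batch


# Ten pending requests with a batch size of 8: one full batch, one partial batch
pending = queue.Queue()
for request_id in range(10):
    pending.put(request_id)

print(len(collect_batch(pending, batch_size=8, max_batch_delay_ms=100)))  # -> 8
print(len(collect_batch(pending, batch_size=8, max_batch_delay_ms=100)))  # -> 2
```

The delay setting is a latency/throughput trade-off: a longer wait makes full batches more likely but adds to the response time of the first request in each batch.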

We extend our TorchServe config.properties file to support batch inference with a batch size of up to 8 samples. Please see the official documentation for details on batch inference with TorchServe.

model_store=model_store
load_models=resnet-152.mar
models={\
  "resnet-152": {\
    "1.0": {\
      "marName": "resnet-152.mar",\
      "batchSize": 8,\
      "maxBatchDelay": 100,\
      "responseTimeout": 200\
    }\
  }\
}

# Number of workers per model
default_workers_per_model=1

# Job queue size (default is 100)
job_queue_size=100

Results

We append the results in the table below:

Enabling batched inference increases the throughput by an additional 26.5%.

Multi-Worker Inference with TorchServe

Many model inference service solutions will support creating multiple inference workers for each AI model. This enables fine-tuning the number of inference workers based on expected load. Some solutions support auto-scaling of the number of inference workers.

We extend our own TorchServe setup by increasing the default_workers_per_model setting that controls the number of inference workers assigned to our image classification model.

Importantly, we must limit the number of threads allocated to each worker to prevent resource contention. This is controlled by the number_of_netty_threads setting and by the OMP_NUM_THREADS and MKL_NUM_THREADS environment variables. Here we have set the number of threads to equal the number of vCPUs (8) divided by the number of workers.
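The division rule is simple enough to state in a few lines of Python. The helper name threads_per_worker is ours, and flooring at one thread is an assumption we add for the case of more workers than vCPUs.

```python
def threads_per_worker(num_vcpus: int, num_workers: int) -> int:
    """Split the available vCPUs evenly across workers, with at least one thread each."""
    return max(1, num_vcpus // num_workers)


for workers in (2, 4, 8):
    print(f"{workers} workers -> {threads_per_worker(8, workers)} threads each")
# 2 workers -> 4 threads each
# 4 workers -> 2 threads each
# 8 workers -> 1 threads each
```

With 2 workers on our 8-vCPU instance, this gives the value of 4 used in the configuration that follows.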

model_store=model_store
load_models=resnet-152.mar
models={\
  "resnet-152": {\
    "1.0": {\
      "marName": "resnet-152.mar",\
      "batchSize": 8,\
      "maxBatchDelay": 100,\
      "responseTimeout": 200\
    }\
  }\
}

# Number of workers per model
default_workers_per_model=2

# Job queue size (default is 100)
job_queue_size=100

# Number of threads per worker
number_of_netty_threads=4

The modified TorchServe startup sequence appears below:

export OMP_NUM_THREADS=4
export MKL_NUM_THREADS=4

torchserve \
    --start \
    --disable-token-auth \
    --enable-model-api \
    --ts-config config.properties

Results

In the table below we append the results of running with 2, 4, and 8 inference workers:

By configuring TorchServe to use multiple inference workers, we are able to increase the throughput by an additional 36%. This amounts to a 3.75X improvement over the baseline experiment.
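As a quick sanity check on how these figures fit together, the sketch below assumes that each reported gain is measured relative to the previous configuration, so the gains compound multiplicatively. Under that assumption (ours, not a statement from the measurements), the quoted numbers imply the size of the first step, which the text describes only as "more than 2X".

```python
batching_gain = 1.265      # "additional 26.5%" from batched inference
multi_worker_gain = 1.36   # "additional 36%" from multiple workers
total_speedup = 3.75       # overall improvement over the baseline

# Speedup of the basic inference server over the baseline that is implied
# by the three figures above, assuming the gains compound
implied_server_speedup = total_speedup / (batching_gain * multi_worker_gain)
print(f"Implied speedup from centralizing alone: {implied_server_speedup:.2f}X")  # -> 2.18X
```

The implied value is consistent with the "more than 2X" reported for the basic inference server setup.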

Summary

This experiment highlights the potential impact of inference server deployment on multi-job deep learning workloads. Our findings suggest that using an inference server can improve system resource utilization, enable higher concurrency, and significantly increase overall throughput. Keep in mind that the precise benefits will greatly depend on the details of the workload and the runtime environment.

Designing the inference serving architecture is just one part of optimizing AI model execution. Please see some of our many posts covering a wide range of AI model optimization techniques.
