AI Algorithm Makes Smarter Text Generation Decisions by Looking Ahead


This is a Plain English Papers summary of a research paper called AI Algorithm Makes Smarter Text Generation Decisions by Looking Ahead. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

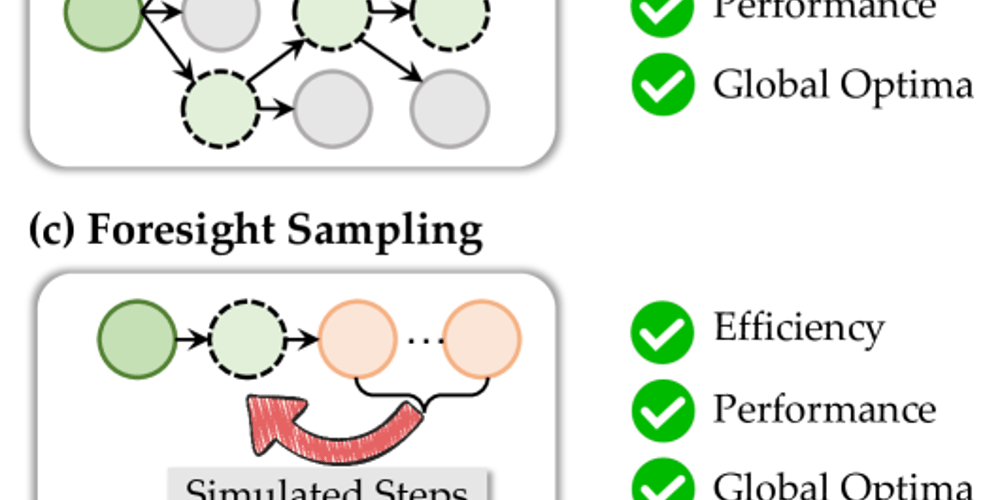

- ϕ-Decoding is a new method that enhances large language model (LLM) text generation

- Balances exploration (trying diverse options) and exploitation (choosing likely outcomes)

- Uses adaptive "foresight sampling" to look ahead in the decision tree

- Achieves higher-quality outputs than existing methods like beam search

- Reduces computational costs while maintaining or improving text quality

- Works across different LLM architectures (encoder-decoder and decoder-only)
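The overview describes foresight sampling only at a high level. The following is a minimal Python sketch of the general idea, not the paper's algorithm, built on hypothetical assumptions: a toy bigram table `TOY_LM` stands in for an LLM, each candidate next token is scored by its own log-probability plus the log-probability of a short greedy rollout after it, and the next token is then sampled from a softmax over those scores, with `temperature` trading exploitation against exploration. The paper's own scoring and pruning rules are not reproduced here.

```python
import math
import random

# Hypothetical toy "language model" (an assumption, not from the paper):
# each token maps to a dict of next-token probabilities; "</s>" ends a sequence.
TOY_LM = {
    "<s>": {"the": 0.5, "a": 0.3, "my": 0.2},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.9, "dog": 0.1},
    "my": {"dog": 1.0},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.5, "</s>": 0.5},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
    "</s>": {},
}


def greedy_rollout_logprob(token, depth):
    """Foresight: follow the most likely continuation for up to `depth`
    steps and return the summed log-probability of that simulated future."""
    total = 0.0
    for _ in range(depth):
        successors = TOY_LM[token]
        if not successors:
            break  # reached end of sequence
        token, p = max(successors.items(), key=lambda kv: kv[1])
        total += math.log(p)
    return total


def foresight_scores(prefix_token, depth=2):
    """Score each candidate next token by its own log-probability plus the
    log-probability of a short greedy rollout that starts from it."""
    return {
        tok: math.log(p) + greedy_rollout_logprob(tok, depth)
        for tok, p in TOY_LM[prefix_token].items()
    }


def choose_next(prefix_token, temperature=1.0, depth=2, rng=random):
    """Sample the next token from a softmax over foresight scores.
    Low temperature -> exploitation (near-argmax); high -> exploration."""
    scores = foresight_scores(prefix_token, depth)
    tokens = list(scores)
    best = max(scores.values())  # subtract the max for numerical stability
    weights = [math.exp((scores[t] - best) / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    print(foresight_scores("<s>"))
    print([choose_next("<s>", temperature=1.0, rng=rng) for _ in range(5)])
```

Pushing `temperature` toward zero makes the choice effectively greedy over foresight scores; raising it spreads probability across candidates, so less likely branches still get explored.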

Plain English Explanation

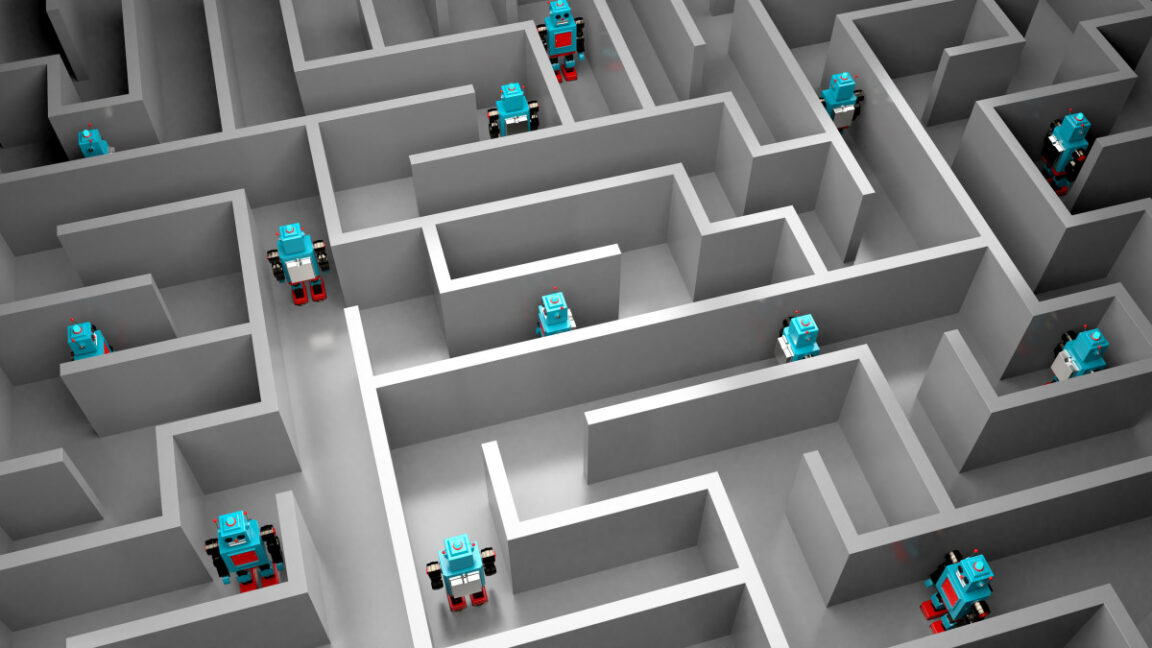

Think of a chess player planning their moves. A novice might think only one move ahead, while a grandmaster considers several possible futures before deciding. ϕ-Decoding applies the same idea to language models: before committing to the next step, it looks ahead at how candidate continuations might unfold.
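To make the chess analogy concrete, here is a hypothetical Python sketch comparing one-move-ahead (greedy) decoding with a simple exhaustive two-step lookahead on a made-up next-token table `TOY_LM`. It only illustrates why looking ahead can help; it is not ϕ-Decoding itself, which relies on foresight sampling rather than enumerating every path.

```python
import math

# Hypothetical toy next-token table (not from the paper).
# Tokens with an empty dict end the sequence.
TOY_LM = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"x": 0.3, "y": 0.3, "z": 0.4},
    "B": {"w": 0.9, "v": 0.1},
    "x": {}, "y": {}, "z": {}, "w": {}, "v": {},
}


def greedy_decode(start="<s>"):
    """'Novice': always take the single most likely next token."""
    seq, prob, tok = [], 1.0, start
    while TOY_LM[tok]:
        tok, p = max(TOY_LM[tok].items(), key=lambda kv: kv[1])
        seq.append(tok)
        prob *= p
    return seq, prob


def best_continuation(tok, depth):
    """Exhaustively search up to `depth` steps ahead and return
    (probability, path) of the most likely continuation."""
    if depth == 0 or not TOY_LM[tok]:
        return 1.0, []
    best = (0.0, [])
    for nxt, p in TOY_LM[tok].items():
        sub_p, sub_path = best_continuation(nxt, depth - 1)
        if p * sub_p > best[0]:
            best = (p * sub_p, [nxt] + sub_path)
    return best


def lookahead_decode(start="<s>", depth=2):
    """'Grandmaster': at each step, commit to the first token of the
    best `depth`-step plan, then re-plan from the new position."""
    seq, prob, tok = [], 1.0, start
    while TOY_LM[tok]:
        _, path = best_continuation(tok, depth)
        nxt = path[0]
        prob *= TOY_LM[tok][nxt]
        seq.append(nxt)
        tok = nxt
    return seq, prob


if __name__ == "__main__":
    print(greedy_decode()[0])     # ['A', 'z']
    print(lookahead_decode()[0])  # ['B', 'w']
```

Greedy picks `A` because it is the likeliest first token, but every continuation of `A` is weak, so the whole sequence has probability 0.6 × 0.4 = 0.24. The lookahead sees that `B` followed by `w` reaches 0.4 × 0.9 = 0.36. Exhaustive search grows exponentially with depth, which is one reason practical methods sample and prune candidates instead.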

Traditional text generation methods like [beam search](https://aimo...
