Building my first AI agent with NeuronAI and Ollama

I’ve always been fascinated by AI and its potential to enhance productivity. As a PHP developer, I wanted to explore how I could integrate AI capabilities into my applications. That led me to NeuronAI, a powerful PHP framework for building AI-driven agents.

Artificial Intelligence (AI) is transforming how developers create intelligent applications. AI agents can perform various tasks, from automating workflows to providing insights based on text analysis.

I recently built my first AI agent using NeuronAI and ran it locally with Ollama. It was an opportunity to learn how to set up and interact with a local AI model, while also solving a real-world problem: improving technical content through AI analysis.

In this article, I’ll walk you through how I built it.

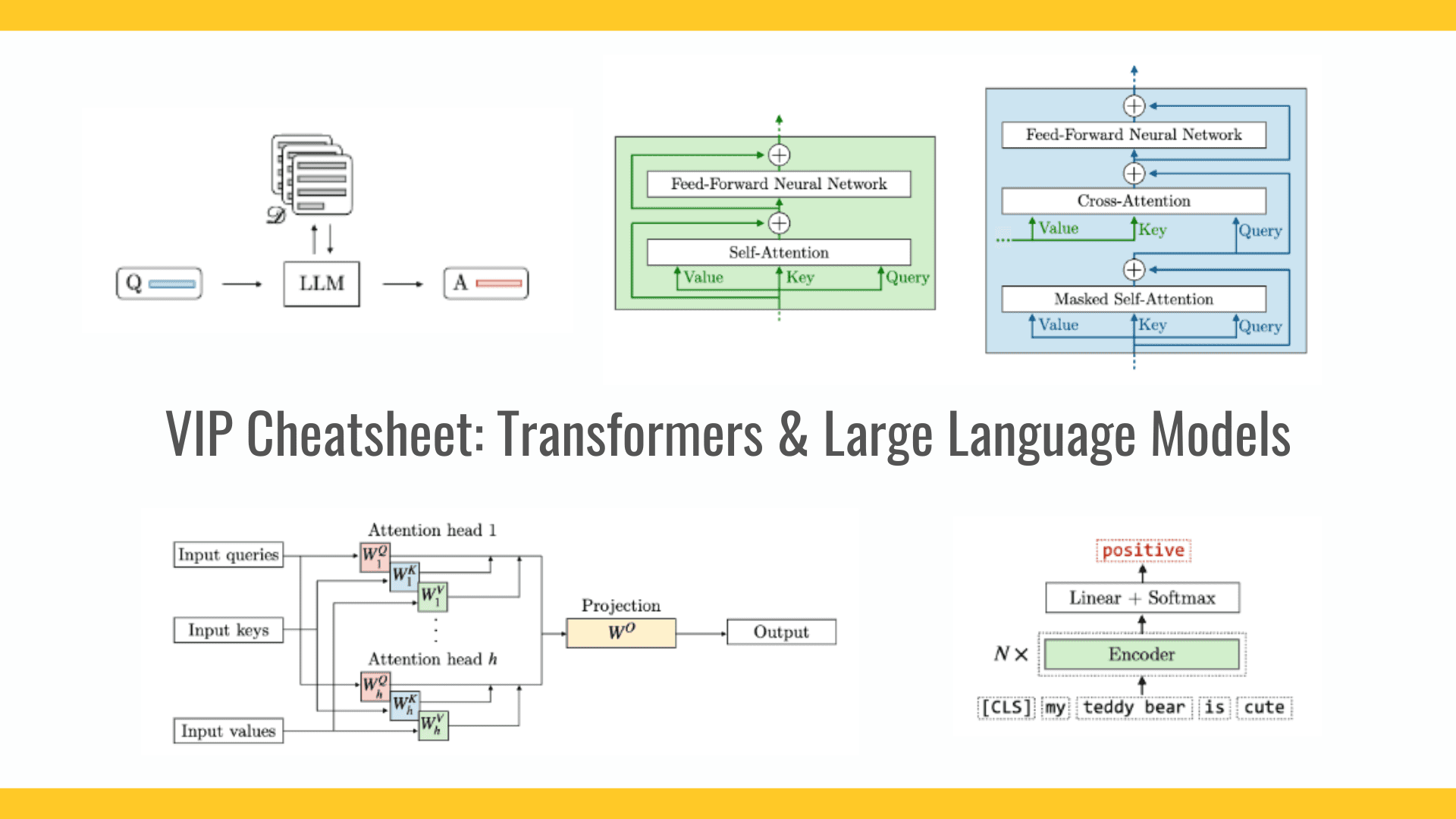

Understanding NeuronAI and Ollama

Before diving into the implementation, let's briefly explore the technologies used in this project:

- NeuronAI: A PHP framework that simplifies AI integration, allowing developers to create AI-powered applications without dealing with complex APIs.

- Ollama: A lightweight AI model runner that enables local execution of machine learning models, ensuring privacy and control over AI interactions.

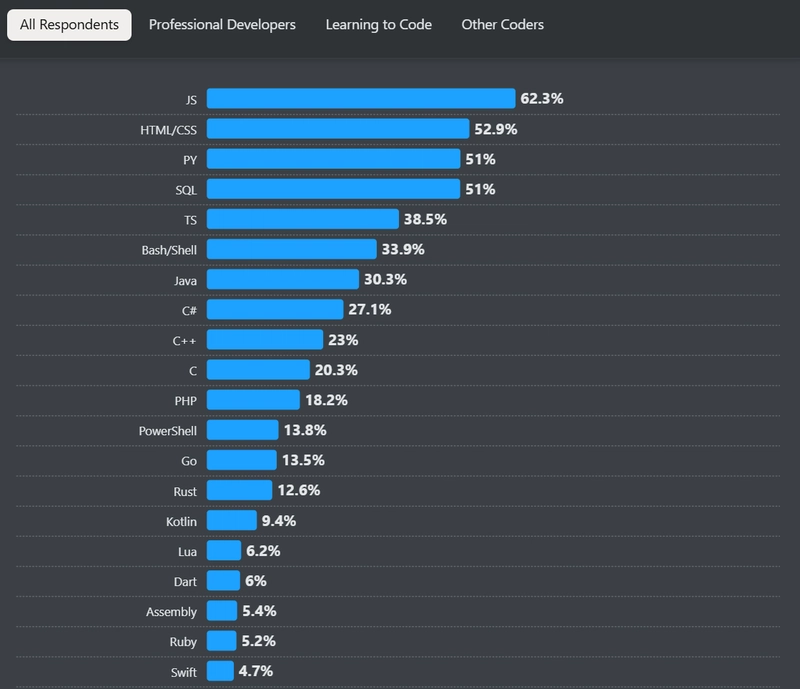

- PHP (Hypertext Preprocessor): a popular open-source scripting language primarily used for web development. It runs on the server side and enables developers to create dynamic and interactive websites by embedding code within HTML. In addition to web applications, PHP is also great for writing scripts and command-line interface (CLI) tools, making it a versatile choice for automation, data processing, and system administration tasks.

Why NeuronAI?

NeuronAI is a powerful and developer-friendly PHP framework for integrating AI capabilities into applications. Here’s why I chose it for my project:

- Simplicity: NeuronAI abstracts complex AI interactions, making it easy to integrate and use.

- Flexibility: it supports multiple AI providers, allowing seamless switching between different models and backends.

- Extensibility: the framework allows developers to define custom AI agents tailored to specific use cases.

- Local execution: with support for local models like Ollama, NeuronAI provides a privacy-friendly AI solution without relying on cloud services.

Setting up NeuronAI

NeuronAI is distributed as an open-source PHP package.

Installing it is straightforward using Composer:

    composer require inspector-apm/neuron-ai

This sets up all the necessary dependencies, allowing you to start building AI-powered agents immediately.

Creating an AI Agent

The goal: as a PHP developer, I wanted to create an AI agent to review technical articles and provide suggestions for improvement.

Below is the PHP code that defines my AI agent, saved in a file named my-agent.php:

    <?php

    namespace App\Agents;

    require './vendor/autoload.php';

    use NeuronAI\Agent;
    use NeuronAI\Chat\Messages\UserMessage;
    use NeuronAI\Providers\AIProviderInterface;
    use NeuronAI\Providers\Ollama\Ollama;

    class MyAgent extends Agent
    {
        // Selects the backend: a model served by the local Ollama instance.
        public function provider(): AIProviderInterface
        {
            return new Ollama(
                url: 'http://localhost:11434/api/generate',
                // Using 'llama3.2:latest', chosen for its contextual understanding
                // and response quality on technical content analysis.
                model: 'llama3.2:latest',
            );
        }

        // Defines the role the agent plays in every conversation.
        public function instructions(): ?string
        {
            return 'You are a technical article content reviewer. '.
                'Your role is to analyze a text of an article and provide suggestions '.
                'on how the content can be improved to be clearer and more effective.';
        }
    }

    $seoAgent = MyAgent::make();
    $articleMarkdownFile = './article-1.md';

    // First message: a quick check that the agent answers in its role.
    $response = $seoAgent->chat(new UserMessage('Who are you?'));
    echo $response->getContent();

    echo PHP_EOL.PHP_EOL.'---------------'.PHP_EOL.PHP_EOL;

    // Second message: send the article text for review.
    $response = $seoAgent->chat(
        new UserMessage('What do you think about the following article? --- '.file_get_contents($articleMarkdownFile))
    );
    echo $response->getContent();

How It Works

I created the MyAgent class extending Agent.

In the MyAgent class, I implemented two methods:

- Defining the provider: the `provider()` method specifies that the AI agent will use Ollama as the backend model, running locally. The nice thing here is that you can replace the provider if you prefer to run a remote agent with OpenAI or some other platform.

- Setting instructions: the `instructions()` method defines the role of the AI: analyzing technical articles and providing suggestions. Here you can give your agent more context so that it acts with specific skills, roles, or tone of voice.

Once I created the agent class, I started using it:

- Creating the agent instance via `MyAgent::make()`.

- Interacting with the agent, using the `chat()` method to send messages and get responses.
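The second `chat()` call in the script builds its prompt with `file_get_contents()`, which returns `false` when the file cannot be read, so the agent could end up reviewing an empty article without any warning. Here is a minimal sketch of a guard, assuming a hypothetical helper named `buildReviewPrompt()` (my own illustration, not part of NeuronAI):

```php
<?php

/**
 * Hypothetical helper (not part of NeuronAI): builds the review prompt
 * from a Markdown file and fails loudly if the file cannot be used.
 */
function buildReviewPrompt(string $path): string
{
    if (!is_file($path) || !is_readable($path)) {
        throw new \RuntimeException("Article file not found or unreadable: {$path}");
    }

    $article = file_get_contents($path);
    if ($article === false || trim($article) === '') {
        throw new \RuntimeException("Article file is empty: {$path}");
    }

    // Same prompt prefix used in my-agent.php.
    return 'What do you think about the following article? --- ' . $article;
}
```

In my-agent.php, the result could be passed straight to the agent with `new UserMessage(buildReviewPrompt($articleMarkdownFile))`.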

Running the AI agent

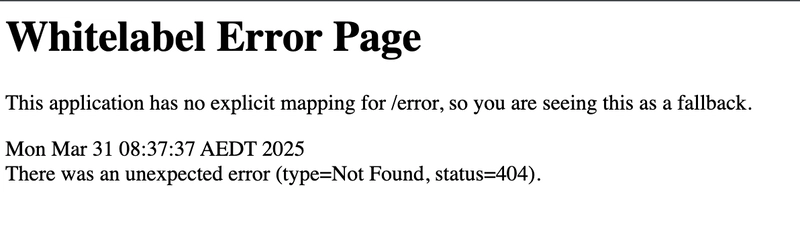

To run the agent, ensure Ollama is installed and running at http://localhost:11434.

If the local Ollama service is unavailable, the script may fail to connect to the AI model.

To handle potential issues, consider adding basic error handling in your script:

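    try {
        $seoAgent = MyAgent::make();
        $response = $seoAgent->chat(new UserMessage('Who are you?'));
        echo $response->getContent();
    } catch (\Exception $e) {
        // The leading backslash matters: my-agent.php declares a namespace, so a bare
        // "Exception" would resolve to App\Agents\Exception and never match.
        echo 'Error: Unable to communicate with the AI service. Please ensure Ollama is running.';
    }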

This will help catch errors and provide a useful message if the AI service is unavailable.
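As a complement to catching exceptions, you could check up front that Ollama is reachable and that the model has been pulled. The sketch below relies on Ollama's `GET /api/tags` endpoint, which lists the locally available models. The helper names `fetchOllamaTags()` and `ollamaHasModel()` are hypothetical, and the code assumes `allow_url_fopen` is enabled in your PHP configuration:

```php
<?php

/**
 * Hypothetical helper: returns true when the JSON returned by Ollama's
 * GET /api/tags endpoint lists the given model name.
 */
function ollamaHasModel(string $tagsJson, string $model): bool
{
    $data = json_decode($tagsJson, true);
    if (!is_array($data) || !isset($data['models']) || !is_array($data['models'])) {
        return false;
    }

    foreach ($data['models'] as $entry) {
        if (is_array($entry) && ($entry['name'] ?? null) === $model) {
            return true;
        }
    }

    return false;
}

/**
 * Hypothetical helper: fetches /api/tags from a local Ollama instance.
 * Returns null when the service cannot be reached within 3 seconds.
 */
function fetchOllamaTags(string $baseUrl = 'http://localhost:11434'): ?string
{
    $context = stream_context_create(['http' => ['timeout' => 3]]);
    $body = @file_get_contents(rtrim($baseUrl, '/') . '/api/tags', false, $context);

    return $body === false ? null : $body;
}

// Possible usage before creating the agent:
//
// $tags = fetchOllamaTags();
// if ($tags === null) {
//     exit('Ollama is not reachable at http://localhost:11434.' . PHP_EOL);
// }
// if (!ollamaHasModel($tags, 'llama3.2:latest')) {
//     exit('Model llama3.2:latest is not available locally.' . PHP_EOL);
// }
```

Keeping the JSON parsing separate from the HTTP call makes the check easy to test without a running Ollama instance.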

Then, execute the PHP script:

    php my-agent.php

The agent first answers the “Who are you?” question, then analyzes the file referenced by $articleMarkdownFile (./article-1.md in my script) and suggests how to improve the article’s clarity and effectiveness.

Final Thoughts

Building this AI agent with NeuronAI and Ollama was a smooth and rewarding experience. The simplicity of integrating an AI model in PHP opens up many possibilities for content analysis, automation, and beyond.

To ensure long-term effectiveness, consider the following best practices for maintaining and updating your AI agent:

- Regularly update dependencies: keep NeuronAI, Ollama, and other dependencies updated to benefit from performance improvements and security patches.

- Monitor AI responses: evaluate the AI’s responses periodically to ensure they align with the intended use case and adjust the model or prompts as needed.

- Optimize performance: if the AI agent starts slowing down, consider optimizing input prompts, using caching mechanisms, or experimenting with different AI models.

- Implement logging and error handling: maintain logs of AI interactions to troubleshoot issues and improve accuracy over time.

- Enhance security: validate and sanitize inputs to prevent unintended interactions or potential exploits.
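To make the caching suggestion more concrete, here is a minimal sketch of a file-based cache keyed by a hash of the model name and the article text, so that an unchanged article does not trigger a new model call. The function `cachedReview()` and its parameters are hypothetical and not part of NeuronAI. The `$review` callable stands in for whatever produces the review, for example a closure wrapping `$seoAgent->chat(...)->getContent()`:

```php
<?php

/**
 * Hypothetical helper: caches review results on disk, keyed by a hash of the
 * model name and the article text, so unchanged articles skip the model call.
 *
 * $review is any callable that takes the article text and returns the review.
 */
function cachedReview(string $article, string $model, callable $review, string $cacheDir): string
{
    if (!is_dir($cacheDir) && !mkdir($cacheDir, 0775, true) && !is_dir($cacheDir)) {
        throw new \RuntimeException("Cannot create cache directory: {$cacheDir}");
    }

    $cacheFile = $cacheDir . '/' . hash('sha256', $model . "\n" . $article) . '.txt';

    // Cache hit: return the stored review without calling the model.
    if (is_file($cacheFile)) {
        $cached = file_get_contents($cacheFile);
        if ($cached !== false) {
            return $cached;
        }
    }

    // Cache miss: ask the model, then store the answer for next time.
    $result = (string) $review($article);
    file_put_contents($cacheFile, $result, LOCK_EX);

    return $result;
}
```

Keep in mind that model output is not deterministic: with a cache like this, the first answer for a given article is the one you keep until the article, the model name, or the cache directory changes.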

If you’re interested in AI-driven PHP applications, I highly recommend experimenting with NeuronAI!
