Caching Strategies Across Application Layers: Building Faster, More Scalable Products


Sarah’s phone buzzed at 2:43 AM. Half-asleep, she answered. On the other end, the on-call engineer sounded stressed:

"The database load just spiked. The app is crawling, and users are reporting timeouts everywhere."

As the product lead for a fast-growing SaaS platform with over 200,000 daily active users, Sarah knew that every minute of slowdown meant frustrated customers—and possibly some of them leaving for good.

The cause? A small feature update had unintentionally bypassed key caching layers, sending every request straight to the database. What should have been a routine release turned into an emergency, taking hours to fix and forcing a partial rollback.

These kinds of issues happen more often than we’d like to admit. In the rush to ship features, caching can feel like an afterthought—something only engineers worry about. But in reality, caching affects all of us, from product managers thinking about user experience to developers balancing performance and reliability.

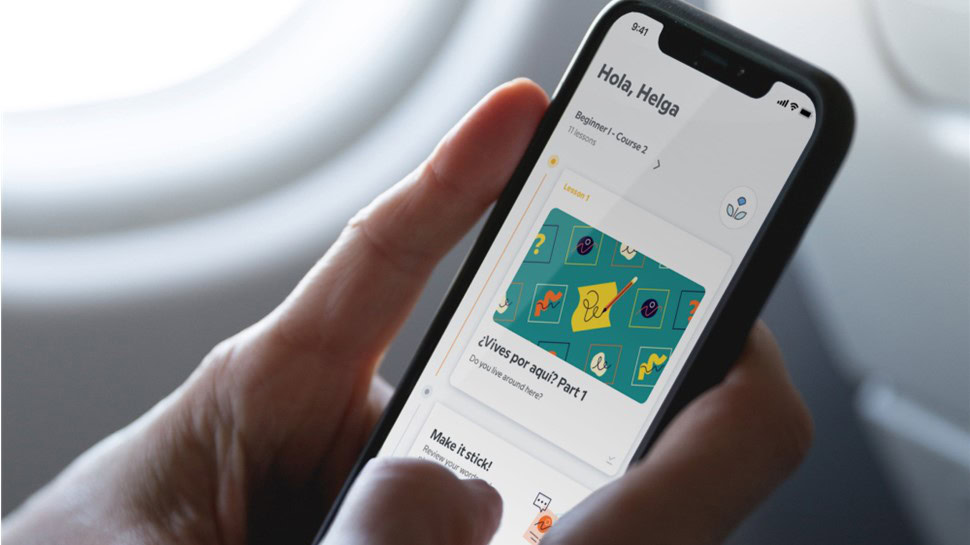

Caching is essential for applications, from mobile apps to web platforms and APIs. When done right, it prevents delays and enhances user experience.

When implemented correctly, caching reduces unnecessary work, enhances efficiency, and improves user experience. But when we overlook caching, it can lead to stale data, inconsistent behavior, or even system outages like the one Sarah’s team faced.

In this article, we’ll walk through different types of caching, from browser caches that speed up loading times to database caches that reduce repeated queries. Along the way, we’ll share real-world examples, practical strategies, and common mistakes we’ve encountered.

By the end, we should have a clearer understanding of how caching fits into product development: not just as a technical detail, but as a tool we can all use to build faster, more reliable applications.

Now that we understand why caching is crucial for performance and scalability, let's start by exploring the first layer of caching: the browser cache. This is often the fastest and easiest way to improve load times for end users.

Browser Caching: The First Line of Defense

Picture this: we're sipping our morning coffee, opening our favorite news app, and it loads instantly. Not because we have the world's fastest internet connection, but because our browser remembers what it downloaded yesterday. That's browser caching at work—the quiet, behind-the-scenes optimization that makes the web feel fast.

When we visit a website for the first time, our browser doesn’t just display the page and forget about it. It stores key resources—the JavaScript that makes buttons work, the CSS that styles the layout, and the images that make the page visually engaging. The next time we visit, instead of downloading everything again, the browser retrieves these cached files in a fraction of the time.

HTTP headers dictate what gets cached and for how long, serving as caching instructions from the server:

- Cache-Control: Defines whether a resource may be cached and how long it can be reused before the browser checks for a new version.

- ETag: Works like a version number. If the file hasn’t changed, the browser skips downloading it again.

- Expires: Sets a specific expiration date for content. When both are present, the max-age directive in Cache-Control takes precedence.

- Last-Modified: Lets the browser ask, “Has this changed since the last time I checked?” and download the file again only if necessary.
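To make the ETag handshake concrete, here is a minimal sketch of how a server might answer a conditional request. The `make_etag` and `respond` helpers are hypothetical names for illustration, and hashing the body is only one possible way to derive an ETag.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Derive a quoted ETag from the response body (one possible scheme)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(
    body: bytes, if_none_match: Optional[str] = None, max_age: int = 3600
) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a cacheable resource."""
    etag = make_etag(body)
    headers = {"Cache-Control": f"public, max-age={max_age}", "ETag": etag}
    if if_none_match == etag:
        # The browser's copy is still current, so no body is sent.
        return 304, headers, b""
    return 200, headers, body


# First visit: full download. Later visit: the browser sends the ETag back.
status, headers, _ = respond(b"body { color: black; }")
revalidated, _, payload = respond(b"body { color: black; }", headers["ETag"])
print(status, revalidated, len(payload))  # 200 304 0
```

In practice the web server or framework usually handles this exchange for us; the sketch only shows why an unchanged file costs a tiny 304 response instead of a full download.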

To implement browser caching effectively, developers often use tools like Webpack (for asset bundling and versioning), Workbox.js (for managing service worker caching), and browser DevTools (Chrome, Firefox, Safari) to inspect cache behavior. Performance audit tools like Google Lighthouse help measure caching effectiveness alongside other optimizations.

Implementation Strategies

Here are some strategies we can use to make browser caching more effective:

Version-stamping assets. Instead of naming a file main.js, we can use main.d41ef2c.js. The unique fingerprint tells the browser when to fetch a new version, preventing users from seeing outdated files.

Service workers for offline caching. Service workers store and serve critical assets even when there's no internet connection, ensuring smooth offline experiences.

Balancing memory and disk cache. Browsers store frequently used assets in fast memory cache, while less critical ones go to disk cache. Structuring assets properly helps optimize performance.

Preloading and prefetching. Good websites anticipate what users need next. Using `<link rel="preload">`, we can tell the browser to fetch key assets early. With `<link rel="prefetch">`, we can load resources before users even request them, for example fetching images for the next page they’re likely to visit.
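The version-stamping strategy above can be sketched in a few lines. Bundlers such as Webpack generate fingerprinted names as part of the build; the hypothetical `fingerprinted_name` helper below only illustrates the idea, assuming the fingerprint is a short hash of the file's content.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprinted_name(filename: str, content: bytes, length: int = 7) -> str:
    """Insert a short content hash before the extension: main.js -> main.<hash>.js."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    path = PurePosixPath(filename)
    return str(path.with_name(f"{path.stem}.{digest}{path.suffix}"))


old = fingerprinted_name("main.js", b"console.log('v1');")
new = fingerprinted_name("main.js", b"console.log('v2');")
print(old != new)  # True: changed content yields a new URL, so browsers refetch
```

Because the name changes only when the content changes, unchanged files keep their cached copies across deployments.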

Why This Matters

When browser caching is well-implemented, it does more than just speed up loading times—it improves the overall user experience and optimizes infrastructure efficiency.

Faster load times. Returning visitors don’t have to re-download assets they already have, making pages feel instantly responsive. A content-heavy news site, for example, saw noticeable improvements when implementing proper browser caching, as frequent readers no longer had to reload the same images and scripts every time they visited.

Lower bounce rates. Users expect websites to load quickly, and slow performance often leads to frustration and abandonment. An e-commerce company that optimized its caching strategy found that customers stayed on their product pages longer, leading to better engagement and higher conversion rates.

Improved session continuity. For applications that rely on frequent interactions, caching can make navigation smoother. A media streaming platform optimized caching for its homepage and video thumbnails, ensuring that users could browse without unnecessary delays when switching between content.

Reduced data usage. For mobile users, caching reduces the need to re-download resources, which is particularly valuable for those on limited data plans or in regions with slower network connections. A mobile app improved its usability in areas with spotty internet access by caching key interface elements, allowing users to continue browsing seamlessly even with temporary network disruptions.

Common Pitfalls

While caching improves performance, it comes with challenges that need careful handling:

Over-aggressive caching. Setting cache durations too long for dynamic content can cause users to see outdated information. An e-commerce site once cached its inventory page for six months, leading customers to try purchasing out-of-stock items. To prevent this, it's important to set appropriate expiration times and ensure cache invalidation mechanisms are in place for frequently changing data.

Not caching enough. Some assets, like logos or icons, rarely change but are often fetched repeatedly due to improper caching rules. Without caching, users waste bandwidth downloading the same files on every visit. Identifying truly static assets and assigning them long cache durations helps optimize performance without risking outdated content.

Cache invalidation issues. Even after a successful deployment, users may still see outdated versions of a website due to cache not being properly refreshed. This often happens when file names remain unchanged after an update. Using versioned filenames like main.abc123.js ensures browsers fetch the latest files while still benefiting from caching.

Security and privacy risks. Without proper controls, caching sensitive data can lead to privacy breaches. A banking app once cached account summaries incorrectly, momentarily exposing one user’s balance to another. To prevent such risks, sensitive content should be marked as non-cacheable using headers like Cache-Control: no-store, ensuring it is never stored or served from cache.
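One way to avoid these pitfalls is to decide the caching policy per type of content rather than globally. The sketch below is illustrative only: the `cache_control_for` helper, the filename pattern, and the specific durations are assumptions, not recommendations from any particular framework.

```python
import re

# Assumption: fingerprinted assets look like name.<hex hash>.ext
FINGERPRINTED = re.compile(r"\.[0-9a-f]{6,}\.(js|css|png|jpg|svg|woff2)$")
STATIC_IMAGES = (".png", ".jpg", ".svg", ".ico")


def cache_control_for(path: str, sensitive: bool = False) -> str:
    """Pick a Cache-Control value for a response path (hypothetical policy)."""
    if sensitive:
        return "no-store"  # never stored or served from any cache
    if FINGERPRINTED.search(path):
        # The URL changes whenever the content does, so cache for a year.
        return "public, max-age=31536000, immutable"
    if path.endswith(STATIC_IMAGES):
        return "public, max-age=86400"  # logos and icons: one day
    return "no-cache"  # HTML and dynamic pages: revalidate before reuse


print(cache_control_for("/static/main.abc123.js"))
print(cache_control_for("/account/summary", sensitive=True))
```

Keeping the rules in one small function makes it harder to cache an inventory page for six months by accident, because every long duration is tied to an explicit condition.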

While browser caching speeds up individual page loads, it has limitations. Users across the globe may experience delays when fetching content from a single server. A visitor in New York might enjoy instant access, while someone in Tokyo faces sluggish load times. This is where Content Delivery Networks (CDNs) step in, delivering content closer to users for a seamless experience.

CDN Caching: Bringing Content Closer to Users

How It Works

Imagine we’re running a global coffee chain. Instead of brewing all our coffee in Jakarta and shipping it worldwide, which would result in cold, stale coffee, we build local shops in every city. That’s essentially what a Content Delivery Network (CDN) does for digital content.

CDNs maintain a vast network of servers—often called edge nodes—strategically positioned around the world. When Marco in Milan or Priya in Pune wants to see our website’s hero image, they don’t have to wait for data to travel from a server in Virginia. Instead, they receive a copy from a nearby edge server, making everything feel much faster no matter where they are.

Modern CDNs go beyond static file caching. Many now support caching API responses, running small programs at the edge using tools like Cloudflare Workers or Lambda@Edge, and even protecting against malicious traffic. It’s like having a combination of a local warehouse, a smart assistant, and a security guard in every city where our users live.

One of the biggest advantages of CDNs is geographical awareness. If our app suddenly gains popularity in South Korea, the CDN automatically ensures those users get the same fast experience as someone near our headquarters.

Different teams select CDNs based on their needs. For example, Cloudflare is widely used for its security and DDoS protection, Fastly is known for real-time cache purging and low latency, AWS CloudFront integrates seamlessly with other AWS services, and Akamai offers a massive global network, making it a preferred choice for large enterprises.

Implementation Strategies

Cache rules configuration. Setting different caching policies for different types of content ensures a balance between freshness and performance. For example, a news organization might set up cache rules as follows:

- Breaking news pages cached for 5 minutes.

- Weekly feature articles for 24 hours.

- Archived content for 30 days.
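Rules like these are normally expressed in the CDN's own configuration, but the logic is easy to sketch. The path prefixes and the default TTL below are hypothetical; the durations come from the list above, and `s-maxage` is the Cache-Control directive that applies to shared caches such as CDNs.

```python
# (path prefix, TTL in seconds); prefixes are hypothetical examples
CACHE_RULES = [
    ("/breaking/", 5 * 60),            # breaking news: 5 minutes
    ("/features/", 24 * 60 * 60),      # weekly features: 24 hours
    ("/archive/", 30 * 24 * 60 * 60),  # archived content: 30 days
]
DEFAULT_TTL = 60  # assumption: anything unmatched gets a short TTL


def edge_ttl(path: str) -> int:
    """Return the edge TTL for a path using first-match-wins prefix rules."""
    for prefix, ttl in CACHE_RULES:
        if path.startswith(prefix):
            return ttl
    return DEFAULT_TTL


def edge_cache_header(path: str) -> str:
    """Build a Cache-Control value that targets shared caches."""
    return f"public, s-maxage={edge_ttl(path)}"


print(edge_cache_header("/breaking/election-results"))  # public, s-maxage=300
```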

Cache keys. Cache keys determine what makes content unique. Should two versions of the same product page, one with ?ref=email and one without, be cached separately or treated as the same? One e-commerce company unknowingly created thousands of duplicate caches because they weren’t handling session IDs properly in URLs. A small change to their cache key strategy significantly reduced their CDN costs.

Dynamic content acceleration. Even frequently changing content can benefit from short-lived caching. A financial services app caches personalized portfolio summaries for just 30 seconds, eliminating unnecessary database hits while keeping data fresh enough for users.
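The cache key question above (should ?ref=email produce a separate cache entry?) often comes down to normalizing the URL before it is used as a key. Here is a minimal sketch, assuming that `ref`, `sessionid`, and `utm_*` parameters never change the response; those parameter names are hypothetical and would differ per site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Assumption: these parameters do not affect what the page renders.
IGNORED_PARAMS = {"ref", "sessionid"}
IGNORED_PREFIXES = ("utm_",)


def cache_key(url: str) -> str:
    """Normalize a URL into a cache key: drop ignored params, sort the rest."""
    parts = urlsplit(url)
    kept = sorted(
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name.lower() not in IGNORED_PARAMS
        and not name.lower().startswith(IGNORED_PREFIXES)
    )
    query = urlencode(kept)
    return parts.path + ("?" + query if query else "")


print(cache_key("https://shop.example/p/42?ref=email"))  # /p/42
print(cache_key("https://shop.example/p/42"))            # /p/42
```

Sorting the remaining parameters also means ?size=m&color=red and ?color=red&size=m share one cache entry. The sketch ignores the hostname for brevity; a real key would usually include it.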

Edge functions and workers. Some CDNs allow small programs to run at the edge to dynamically modify responses. A gaming company used edge functions to insert region-specific tournament details into a single cached page, avoiding the need to generate separate pages for each region.

Why This Matters

CDN caching improves performance in ways that browser caching alone cannot. Here’s how:

- Faster page load times. By reducing the distance between users and content, CDNs significantly decrease delays, especially for users far from the origin server.

- Global consistency. Users across different regions experience similar performance, whether they’re in Brazil, Japan, or the U.S.

- Reduced load on origin servers. CDNs absorb the bulk of traffic, reducing direct requests to backend infrastructure and preventing overload during high-traffic events. A retail company that experienced traffic spikes on Black Friday relied on a CDN to handle the surge without increasing server capacity.

- Optimized bandwidth costs. CDNs apply compression and delivery optimizations, reducing data transfer costs. A video streaming startup switched to a CDN with better video compression, cutting delivery expenses while improving streaming quality.

Common Pitfalls

Overly complex cache configurations. Some teams implement overly complex caching rules, making them difficult to modify. One engineering manager put it this way: “Our CDN config has become our legacy code.” Keeping rules simple and well-documented makes ongoing maintenance easier.

Cache coherency issues. Keeping content synchronized across different regions isn’t always straightforward. A company launching a new product found that European users saw the update two hours before U.S. users due to inconsistent cache invalidation. This led to confusion, support tickets, and customer complaints on social media.

Mismanaged CDN costs. CDN pricing models vary—some charge primarily for bandwidth, while others focus on request volume. A streaming service attempted to reduce bandwidth costs but overlooked the fact that their CDN charged mostly for requests, causing their costs to rise instead of fall. Understanding pricing structures is crucial to avoiding unexpected expenses.

Security gaps at the CDN level. Security measures applied at the origin server don’t automatically carry over to the CDN. A financial services company carefully configured security headers on its main servers but forgot to apply them at the CDN level, leaving key vulnerabilities exposed. Ensuring that security policies are consistently enforced across all layers helps prevent such oversights.

CDNs are fantastic for delivering static assets like images, CSS, and JavaScript files quickly, but what about dynamic data? API calls, such as product listings, user dashboards, or flight availability, often require fresh data. If every request hits the backend, it can slow down the entire system. API Gateway caching steps in as a solution, reducing redundant requests and improving API response times.

API Gateway Caching: The Request Filter

How It Works

Imagine we’re running a popular restaurant where the maître d' intercepts repeat orders before they reach the kitchen. "The table in the corner wants another plate of the special pasta? I already know exactly how the chef prepares that—no need to bother the kitchen again." That’s essentially what API Gateway caching does for our applications.

Acting as a middle layer between client applications and backend services, API Gateway caching reduces redundant processing by storing commonly requested API responses. While CDNs are optimized for static assets like images and scripts, API Gateways are designed to cache structured data, such as JSON or XML responses, helping to offload repeated database queries and reduce API latency.

A travel booking platform initially had uncached API calls taking 600-800ms to return flight results. With API Gateway caching enabled, identical searches took just 40ms, significantly improving responsiveness.

Many teams use Amazon API Gateway for cloud-native applications, while Kong is a popular choice for self-hosted and Kubernetes environments. For enterprise-scale API management, solutions like Apigee (Google Cloud) are widely used. If we’re already using NGINX, its built-in proxy cache can be configured for microcaching (caching responses for a second or so), which offers a lightweight alternative. The best choice depends on factors like infrastructure, compliance needs, and scale.

Key Features of API Gateway Caching

Full response caching. Unlike some caching layers that store fragments, API Gateways typically cache entire API responses. One financial services app was making thousands of identical market data queries per minute; API Gateway caching reduced its backend load by 94%.

Security and authentication handling. API Gateways can authenticate requests before checking the cache, ensuring unauthorized users don’t access cached responses they shouldn’t see. This is especially critical for applications handling sensitive data.

Cache key customization. We can define which parts of a request (headers, query parameters, path segments, or even body elements) should determine cache uniqueness. One media streaming service improved cache efficiency by including device type in cache keys but excluding session identifiers, dramatically reducing redundant caching.

Granular TTL control. Different API endpoints have different freshness needs. A banking app cached account history for 30 minutes and transaction statuses for 60 seconds, and never cached current balances.

Rate limiting and quota management. Even when serving cached responses, API Gateways can enforce rate limits, helping prevent traffic spikes from overwhelming backend services.

Implementation Strategies

Cache per endpoint

Each API has different requirements: some are read-heavy, others update frequently. One product catalog API cached:

- Category listings for 30 minutes

- Product details for 5 minutes

- Inventory status for 30 seconds
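Per-endpoint TTLs like these can be sketched with a small in-memory cache. Gateways expose this as configuration rather than code, so the `TTLCache` class, the `ttl_for` helper, and the endpoint paths below are all hypothetical; only the durations come from the list above.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical endpoint prefixes mapped to TTLs in seconds
ENDPOINT_TTLS = {"/categories": 30 * 60, "/products": 5 * 60, "/inventory": 30}


def ttl_for(path: str) -> Optional[int]:
    """Return the TTL for a path, or None if the endpoint is not cached."""
    for prefix, ttl in ENDPOINT_TTLS.items():
        if path.startswith(prefix):
            return ttl
    return None


class TTLCache:
    """Tiny expiring cache; the clock is injectable to make testing easy."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any, ttl: float) -> None:
        self._entries[key] = (self._clock() + ttl, value)

    def get(self, key: str) -> Any:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: treat as a miss
            return None
        return value


cache = TTLCache()
cache.set("/inventory/42", {"in_stock": 3}, ttl_for("/inventory/42"))
print(cache.get("/inventory/42"))
```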

Cache segmentation

Sometimes, the same API endpoint needs different caching rules depending on user type. One B2B platform cached pricing API responses differently for each audience:

- Anonymous users → Cached for 1 hour

- Authenticated partners → Cached for 5 minutes to reflect negotiated pricing updates

Selective caching

Not all HTTP methods should be cached.

- GET requests are typically safe to cache.

- POST, PUT, and DELETE modify data and should bypass the cache. One team mistakenly cached POST requests, leading to orders not appearing in customer history for 15 minutes.

Using Vary headers

Many applications deliver different responses based on content type, language, or device. Configuring cache keys properly can prevent unnecessary duplicate caching. One global e-commerce site doubled cache efficiency by properly implementing Vary headers for different languages.

Cache bypass options

Some users need real-time data. We can implement a query parameter like ?fresh=true to allow users to bypass the cache when necessary. One investment platform added a “Refresh” button to ensure users saw real-time financial reports while still benefiting from caching during normal browsing.
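The last three strategies (selective caching, varying by language, and a bypass parameter) can be combined in one small sketch. The `GatewayCache` class and its status labels are hypothetical, expiry is omitted for brevity, and using the raw Accept-Language value in the key is a simplification of what a real gateway does with Vary.

```python
from typing import Callable, Dict, Mapping, Tuple
from urllib.parse import parse_qsl, urlsplit

CACHEABLE_METHODS = {"GET"}  # POST, PUT, and DELETE always reach the backend


class GatewayCache:
    """Illustrative response cache; a real gateway would also expire entries."""

    def __init__(self) -> None:
        self._store: Dict[tuple, str] = {}

    def handle(
        self,
        method: str,
        url: str,
        headers: Mapping[str, str],
        backend: Callable[[], str],
    ) -> Tuple[str, str]:
        """Return (body, cache_status) for a request."""
        if method.upper() not in CACHEABLE_METHODS:
            return backend(), "BYPASS"
        parts = urlsplit(url)
        params = parse_qsl(parts.query, keep_blank_values=True)
        force_fresh = ("fresh", "true") in params
        # The bypass flag itself must not fragment the cache key.
        query = tuple(sorted(p for p in params if p[0] != "fresh"))
        # Vary by language so readers never receive another locale's response.
        language = headers.get("Accept-Language", "").lower()
        key = (parts.path, query, language)
        if not force_fresh and key in self._store:
            return self._store[key], "HIT"
        body = backend()
        self._store[key] = body  # a forced refresh also updates the cache
        return body, ("REFRESH" if force_fresh else "MISS")


gateway = GatewayCache()
print(gateway.handle("GET", "/reports?id=7", {"Accept-Language": "en"}, lambda: "v1"))
print(gateway.handle("GET", "/reports?id=7", {"Accept-Language": "en"}, lambda: "v2"))
```

Note that a forced refresh writes the new response back into the cache, so everyone else benefits from the fresher copy too.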

Why This Matters

API Gateway caching improves application performance in multiple ways:

Faster API responses. Caching API calls can reduce response times by 70-95%, making applications feel much snappier.

Lower backend load. By serving cached responses, API Gateways can reduce redundant API calls by over 80%, easing database strain and improving scalability. One social media analytics platform reduced database queries from 3 million to 400,000 per hour just by enabling API caching.

Consistent performance. One e-commerce platform didn’t just improve average API response times; it also eliminated unpredictable latency spikes during peak traffic hours. As their CTO put it, "Users notice inconsistency more than they notice raw speed."

Improved API availability. When a payment service had database slowdowns, its API Gateway continued serving cached responses, preventing an outage. The team estimated that caching bought them 30 minutes of breathing room before backend fixes took effect.

Common Pitfalls

Over-aggressive caching

Caching sensitive, user-specific data can lead to serious security issues. One financial app briefly showed User A’s balance to User B due to improper cache key settings. Always include user identifiers in cache keys when necessary.

Inconsistent user experience

When caching is misconfigured, some users see fresh data while others get stale responses. A document editing platform cached document statuses too aggressively, causing team members to see outdated content for several minutes, even after refreshing.

Cache poisoning

If an error response or incorrect data gets cached, it can spread to multiple users. A healthcare app cached incomplete patient records due to a database migration issue, turning a 30-second problem into a 15-minute one.

Hidden bugs

When caching is working well, we may not notice backend failures immediately. One team belatedly discovered that their API was throwing errors 20% of the time, but the cache had masked the problem for weeks. Regular cache bypass testing helps detect hidden failures.

Cache stampede

When a frequently accessed item expires, multiple clients may request fresh data at the same time. This sudden spike can overload the backend, causing unexpected performance issues. A sports statistics platform saw its database crash during a major game because the player stats cache expired just as a star player scored.

Solution: Use staggered expirations and background refreshes to avoid traffic spikes when cache entries expire.
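A related safeguard, which is not the background refresh mentioned above but pairs well with it, is to let only one caller rebuild an expired entry while the others wait for the result. The sketch below uses a per-key lock for this (sometimes called single flight); the `SingleFlightCache` class is a hypothetical name and the design is one option among several.

```python
import threading
import time
from typing import Any, Callable, Dict, Optional, Tuple


class SingleFlightCache:
    """Cache where concurrent misses for one key trigger a single reload."""

    def __init__(self, ttl_seconds: float) -> None:
        self._ttl = ttl_seconds
        self._entries: Dict[str, Tuple[float, Any]] = {}
        self._locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()  # protects the lock table itself

    def _fresh(self, key: str) -> Optional[Tuple[float, Any]]:
        entry = self._entries.get(key)
        if entry is not None and time.monotonic() < entry[0]:
            return entry
        return None

    def get(self, key: str, loader: Callable[[], Any]) -> Any:
        entry = self._fresh(key)
        if entry is not None:
            return entry[1]
        with self._guard:
            lock = self._locks.setdefault(key, threading.Lock())
        with lock:
            # Another thread may have refilled the entry while we waited.
            entry = self._fresh(key)
            if entry is not None:
                return entry[1]
            value = loader()  # only one thread per key gets here at a time
            self._entries[key] = (time.monotonic() + self._ttl, value)
            return value


cache = SingleFlightCache(ttl_seconds=30)
print(cache.get("player:7:stats", lambda: {"points": 31}))
```

With this in place, a burst of requests arriving right after expiry results in one database query instead of one per request.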

API Gateway caching optimizes API calls, but what if we need to cache frequently accessed data within the application itself? Imagine a dashboard that displays the same metrics for hundreds of users—fetching this data from the database every time would be inefficient. Instead, application caching allows us to store frequently used data in memory, significantly improving performance and reducing backend strain.

Application Layer Caching: The Middle Tier

How It Works

Remember the last time we asked a friend the same question twice in five minutes? They probably gave us a look that said, "I just told you that." With application layer caching, we avoid recalculating or fetching data we’ve already seen, making our systems much more efficient.

Sitting between the application and the database, application caching acts as a short-term memory store. It holds frequently accessed data in fast, in-memory storage like Redis or Memcached. Think of them as digital scratch pads that typically answer reads far faster than a query against even a well-optimized database.

Many teams rely on Redis for its versatility and support for different data structures. Managed services like AWS ElastiCache and Azure Cache for Redis simplify operations, while language-specific libraries like Caffeine (Java) and node-cache (Node.js) provide efficient caching within specific tech stacks.

Unlike browser and CDN caching, which primarily handle static assets, application caching deals with dynamic data. It stores information that changes frequently but doesn’t need to be recalculated or retrieved every time, such as:

- User profile details

- Product inventories

- Complex API responses

- Results of computationally expensive tasks

A team optimizing a recommendation engine once spent three days refining an algorithm to generate product suggestions. They later discovered that simply caching those recommendations for a few minutes, a change that took about three hours, provided an even greater performance boost. "We were trying to build a faster car," they noted, "when we really just needed to stop making unnecessary trips."

Common Caching Patterns

Data caching. Storing database records or API responses in memory to reduce repetitive database queries. By keeping frequently accessed data readily available, this approach reduces database load and improves response times while maintaining relatively fresh data.

Computation caching. Storing the results of expensive calculations so they don’t have to be recomputed repeatedly. For example, a financial services platform calculating risk assessment scores for users might cache the results for a short period. Instead of recalculating the same data for every request, the system retrieves it instantly from cache, significantly improving response times and reducing the load on computing resources.

Session caching. Storing user session data in memory for quick access. This ensures that applications can efficiently maintain user authentication, preferences, and shopping cart data across multiple requests or page reloads without frequent database lookups.

Rate limiting. Using a cache to track and limit API requests from individual users. This helps prevent accidental or intentional overload of the system by enforcing request thresholds while reducing unnecessary processing.
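The rate limiting pattern is perhaps the least obvious of the four, so here is a minimal sketch of a fixed-window limiter backed by an in-memory counter. The `FixedWindowRateLimiter` class is hypothetical; with Redis, the same idea is commonly built from the INCR and EXPIRE commands so that counters are shared across servers and old windows clean themselves up.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per user in each fixed time window."""

    def __init__(
        self,
        limit: int,
        window_seconds: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._limit = limit
        self._window = window_seconds
        self._clock = clock
        # (user_id, window index) -> request count; old windows are never
        # purged in this sketch, which a real cache's expiry would handle.
        self._counters: Dict[Tuple[str, int], int] = {}

    def allow(self, user_id: str) -> bool:
        window_index = int(self._clock() // self._window)
        key = (user_id, window_index)
        count = self._counters.get(key, 0)
        if count >= self._limit:
            return False  # over the threshold: reject without further work
        self._counters[key] = count + 1
        return True


limiter = FixedWindowRateLimiter(limit=3, window_seconds=60)
print([limiter.allow("user-1") for _ in range(4)])  # [True, True, True, False]
```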

Implementation Strategies

Cache-aside (lazy loading). Before making a request to the database, the application first checks the cache. If the data isn’t there, it fetches the information, stores it in the cache, and serves it to the user. This pattern is widely used because it keeps cache management simple. One login API reduced response time from 600ms to 40ms by caching user authentication data this way.
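The cache-aside flow described above can be sketched as follows. The `CacheAside` class and the `load_from_db` callback are hypothetical stand-ins; in production the dictionary would typically be replaced by a shared cache such as Redis.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Check the cache first; on a miss, load from the source and store it."""

    def __init__(
        self,
        load_from_db: Callable[[str], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._load_from_db = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        entry = self._cache.get(key)
        if entry is not None and self._clock() < entry[0]:
            return entry[1]  # cache hit: the database is not touched
        value = self._load_from_db(key)  # cache miss: go to the source
        self._cache[key] = (self._clock() + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop an entry, for example right after the record is updated."""
        self._cache.pop(key, None)


users = CacheAside(lambda user_id: {"id": user_id, "plan": "pro"}, ttl_seconds=300)
print(users.get("u-17"))  # first call loads from the source, later calls hit the cache
```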

Read-through caching. The cache itself is responsible for retrieving missing data. If the requested data isn’t in the cache, it automatically fetches it from the source. This simplifies application logic but requires a more sophisticated caching layer.

Write-through caching. Every time data is updated, it’s written to both the cache and the backend database simultaneously. This ensures the cache is always in sync with the latest data but adds some latency to write operations. A ticket-booking platform used this approach to ensure seat availability information was always accurate.

Write-behind caching. Data is first written to the cache and then asynchronously updated in the backend. This improves write performance but carries some risk if the system fails before syncing with the database.
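The difference between write-through and write-behind is easiest to see side by side. In this sketch a plain dictionary stands in for the database, the class names are hypothetical, and the write-behind queue is flushed manually, whereas a real system would flush it from a background worker.

```python
from collections import deque
from typing import Any, Deque, Dict, Tuple


class WriteThroughStore:
    """Every write goes to the database and the cache before returning."""

    def __init__(self, db: Dict[str, Any]) -> None:
        self.db = db
        self.cache: Dict[str, Any] = {}

    def write(self, key: str, value: Any) -> None:
        self.db[key] = value     # persist first, so the cache never leads the DB
        self.cache[key] = value  # then keep the cache in sync


class WriteBehindStore:
    """Writes land in the cache immediately and reach the database later."""

    def __init__(self, db: Dict[str, Any]) -> None:
        self.db = db
        self.cache: Dict[str, Any] = {}
        self.pending: Deque[Tuple[str, Any]] = deque()

    def write(self, key: str, value: Any) -> None:
        self.cache[key] = value
        self.pending.append((key, value))  # lost if the process dies before flush

    def flush(self) -> int:
        """Apply queued writes to the database; returns how many were written."""
        flushed = 0
        while self.pending:
            key, value = self.pending.popleft()
            self.db[key] = value
            flushed += 1
        return flushed


seats = WriteBehindStore(db={})
seats.write("seat:12A", "reserved")
print("seat:12A" in seats.db)  # False until flush() runs
seats.flush()
print(seats.db["seat:12A"])    # reserved
```

The window between `write` and `flush` is exactly the risk described above, which is why a ticket-booking system that cannot tolerate lost writes would lean toward write-through.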

Time-based expiration. Different types of data have different expiration needs. An e-commerce site implemented a layered approach:

- Product descriptions cached for 1 week

- Inventory levels cached for 5 minutes

- Flash sale prices cached for 30 seconds

Why This Matters

Application caching improves both user experience and infrastructure efficiency:

Faster user interactions. Caching frequently accessed data can reduce API response times by 50-95%, making applications feel more responsive. A mobile app reduced its average API response time from 300ms to just 35ms after implementing application caching.

Lower infrastructure costs. Caching reduces the CPU and database work needed per request, allowing teams to handle more traffic with fewer resources. A B2B platform reduced its server count by 60% while handling more requests.

Reduced database load. Caching minimizes expensive database queries, keeping systems stable under heavy traffic. An analytics dashboard lowered database CPU utilization from 85% to 30%, eliminating timeouts during peak hours.

Better scalability without extra cost. Caching allows systems to handle traffic spikes without requiring a massive increase in infrastructure. As one CTO put it, "Before caching, each new marketing campaign meant an emergency infrastructure meeting. Now we just watch the metrics and smile, knowing the system will handle it."

Common Pitfalls

Cache invalidation challenges. Knowing when to refresh or discard cached data is surprisingly complex. Some engineering teams have created cache invalidation diagrams that look more like abstract art than structured designs.

Stale data issues. If cache invalidation isn’t handled correctly, users may see outdated information. A marketplace app once displayed "In Stock" labels for products that had already sold out, frustrating customers and increasing support tickets.

Cache penetration. If non-existent data is frequently requested, it can bypass the cache and overload the database. A system experiencing slowdowns due to bots requesting random product IDs mitigated the issue by implementing a "negative result cache" to remember which IDs didn’t exist.

Cache avalanche. If many cached items expire simultaneously, the sudden surge of database queries can cause system failure. A social platform crashed during a product launch when all promotional content caches expired simultaneously, triggering thousands of database queries.

Local vs. distributed caching challenges. Local in-memory caches work well for small applications, but as traffic grows, a distributed caching system becomes essential. A startup struggling with inconsistent user experiences found that switching to Redis as a centralized cache immediately resolved the issue.
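Two of the pitfalls above, cache penetration and cache avalanche, have compact mitigations that can be sketched together. The example below is an assumption-laden illustration rather than any team's real code: `ProductCache`, `load_from_db`, and the TTL values are hypothetical. It remembers "not found" results for a short time (a negative result cache) and adds random jitter to each TTL so entries written together do not all expire at the same moment.

```python
import random
import time

_MISSING = object()  # sentinel meaning "we checked, and this ID does not exist"


def jittered_ttl(base_seconds, spread=0.2, rng=random):
    """Vary base_seconds by up to +/- spread so entries written together expire apart."""
    return base_seconds * (1 + rng.uniform(-spread, spread))


class ProductCache:
    def __init__(self, load_from_db, ttl=300, negative_ttl=60, clock=time.monotonic):
        self._load = load_from_db      # hypothetical loader: returns a product or None
        self._ttl = ttl
        self._negative_ttl = negative_ttl
        self._clock = clock
        self._entries = {}             # product_id -> (expires_at, value or _MISSING)

    def get(self, product_id):
        entry = self._entries.get(product_id)
        if entry is not None and self._clock() < entry[0]:
            value = entry[1]
            return None if value is _MISSING else value

        product = self._load(product_id)
        if product is None:
            # Negative result: remember the miss briefly so repeats skip the database.
            self._entries[product_id] = (self._clock() + self._negative_ttl, _MISSING)
            return None
        self._entries[product_id] = (self._clock() + jittered_ttl(self._ttl), product)
        return product
```

The negative TTL is deliberately shorter than the normal one in this sketch, so a product that is created later becomes visible quickly.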

Application caching keeps frequently accessed data readily available, but what happens when the database itself becomes the bottleneck? Every database query consumes CPU and memory, leading to slow response times under heavy load. Database caching steps in as the final layer of defense, storing precomputed results and frequently queried data in memory to keep things running smoothly.

Database Caching: The Foundation Layer

How It Works

Imagine walking into a library where the librarian already has our favorite books set aside because "we always ask for these." That’s similar to how database caching works—it anticipates frequently accessed data and keeps it readily available, making our applications run more efficiently.

Database caching is the deepest, most fundamental layer of caching in our application stack. Unlike other caching layers that store pre-processed responses, database caching optimizes how data is retrieved, reducing redundant work and improving performance.

Modern database systems do much more than just store data—they actively optimize how information is accessed and processed. They incorporate multiple caching mechanisms, much like a master chess player thinking several moves ahead:

Buffer cache. Stores frequently accessed disk pages directly in memory, reducing the need to fetch data from disk. This is like keeping our most-read pages in a high-speed binder instead of going back to the filing cabinet every time.

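Query cache. Remembers answers to frequently asked questions. If multiple users request "How many users signed up last Tuesday?" the database can return a cached result instead of recalculating it. MySQL once had a built-in query cache, but it was removed in MySQL 8.0 because it scaled poorly under concurrent workloads. PostgreSQL has no built-in result cache: its prepared statements save parsing and planning work rather than results, so result caching there usually relies on materialized views or an application-level cache.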

Execution plan cache. Before executing a query, the database determines the most efficient way to fetch the data. This planning process can be expensive, but caching the execution plan avoids recalculating it every time. It’s like a delivery driver optimizing a route once and reusing it for similar trips.

Materialized views. Store pre-computed query results as tables, eliminating redundant calculations and speeding up queries. Instead of recalculating "total sales by region, by month" each time someone loads a dashboard, a materialized view keeps this report pre-generated.

These caching mechanisms vary by database. PostgreSQL supports prepared statements and buffer management tools like pgfincore, while MySQL/MariaDB optimize performance through the InnoDB buffer pool. SQL Server provides the Buffer Pool Extension and execution plan caching.

For high-read scenarios, connection poolers like PgBouncer and Amazon RDS Proxy help manage database connections efficiently, while materialized views (supported in PostgreSQL and Oracle, and available in SQL Server as indexed views) provide powerful query caching capabilities.
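To make the materialized view idea concrete, here is a small sketch using Python's built-in `sqlite3` module. SQLite has no materialized views, so this emulates one with a summary table that is rebuilt on demand. The table names and the `refresh_sales_summary` and `total_sales` helpers are hypothetical. In PostgreSQL the equivalent would use `CREATE MATERIALIZED VIEW` and `REFRESH MATERIALIZED VIEW`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, month TEXT, amount REAL);
    INSERT INTO sales VALUES
        ('EU', '2024-01', 100.0),
        ('EU', '2024-01', 50.0),
        ('US', '2024-01', 200.0);
    -- Summary table playing the role of a materialized view.
    CREATE TABLE sales_by_region_month (region TEXT, month TEXT, total REAL);
""")


def refresh_sales_summary(conn):
    """Recompute the summary in one transaction, like refreshing a materialized view."""
    with conn:
        conn.execute("DELETE FROM sales_by_region_month")
        conn.execute("""
            INSERT INTO sales_by_region_month (region, month, total)
            SELECT region, month, SUM(amount) FROM sales GROUP BY region, month
        """)


def total_sales(conn, region, month):
    """Dashboard read: a cheap lookup against the pre-computed table."""
    row = conn.execute(
        "SELECT total FROM sales_by_region_month WHERE region = ? AND month = ?",
        (region, month),
    ).fetchone()
    return row[0] if row else 0.0


refresh_sales_summary(conn)
```

The trade-off is the same as with a real materialized view: reads are cheap, but the summary is only as fresh as its last refresh, so new sales stay invisible to the dashboard until `refresh_sales_summary` runs again.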

Implementation Strategies

Index optimization. Indexes act like pre-sorted lookup tables, allowing databases to quickly locate specific data. A well-designed index can turn a slow, full-table scan into a lightning-fast lookup. One team reduced a product search query from 30 seconds to 25 milliseconds simply by adding the right index.

Query optimization. Small changes in how queries are written can significantly impact performance. Queries that leverage cached execution plans often run much faster than those that force full table scans. Two engineering teams wrote nearly identical queries—one was 50 times faster because it aligned with the database’s caching mechanisms.

Connection pooling. Establishing database connections is expensive, involving authentication, state setup, and memory allocation. Connection pooling maintains pre-established connections that applications can reuse, preserving cached execution plans and reducing response times. An e-commerce platform reduced checkout page load time by 300ms simply by implementing connection pooling.

Read replicas. For read-heavy workloads, having multiple read-only copies of the database can reduce the load on the primary database. News websites, for example, use read replicas to handle peak morning traffic without slowing down content updates.
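The effect of index optimization is easy to observe on a small scale. The sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` to compare how the same lookup is executed before and after adding an index. The `products` table, the `idx_products_sku` index, and the `query_plan` helper are hypothetical, and other databases expose plans through their own commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, sku TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO products (sku, name) VALUES (?, ?)",
    [(f"SKU-{i}", f"Product {i}") for i in range(1000)],
)


def query_plan(conn, sql, params=()):
    """Return SQLite's plan description for a query as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable plan detail.
    return " | ".join(row[-1] for row in rows)


lookup = "SELECT name FROM products WHERE sku = ?"

# Without an index on sku, SQLite has to scan the whole table.
plan_before = query_plan(conn, lookup, ("SKU-42",))

conn.execute("CREATE INDEX idx_products_sku ON products (sku)")

# With the index, the plan becomes a search that names idx_products_sku.
plan_after = query_plan(conn, lookup, ("SKU-42",))
```

Printing `plan_before` and `plan_after` side by side is a quick way to confirm that an index is actually being used, rather than assuming it is.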

Why This Matters

Database caching improves deep system-level performance, directly impacting user experience and scalability:

Faster queries. Caching reduces query execution time dramatically. In some cases, complex analytics queries that originally took 30 seconds can be reduced to milliseconds, improving application responsiveness.

Lower database load. Caching minimizes redundant computations and disk access, reducing CPU and I/O usage. A healthcare platform lowered database CPU utilization from 95% to 30% just by optimizing buffer cache settings.

More concurrent users. Optimized database caching enables applications to support more simultaneous users. One team improved their system capacity from 5,000 to 20,000 concurrent users without upgrading infrastructure.

Reduced infrastructure costs. Efficient caching delays or eliminates the need for expensive database upgrades. A CTO once noted, "We were about to spend $10,000 a month on a database upgrade. After optimizing our caching strategy, we achieved better performance on our existing hardware and postponed that expense for 18 months."

Common Pitfalls

Cache size allocation. Allocating the right amount of memory for database caching is critical. Too little memory reduces caching benefits, while too much can starve other processes or cause the system to swap to disk, leading to performance degradation.

Query plan instability. Cached execution plans are optimized for the data distribution at the time they were created. As data changes, a previously efficient query plan can suddenly become inefficient, leading to performance issues. A retail company saw checkout times slow dramatically when their database kept using an execution plan that had become suboptimal as customer behavior shifted.

Over-indexing. While indexes speed up reads, they slow down writes because every update requires index maintenance. One team once had 25 indexes on a single table—so many that inserts and updates were spending more time updating indexes than modifying the actual data.

Isolation level mismatches. Database transactions follow isolation rules that control how changes are visible to concurrent requests. A financial services app showed inconsistent account balances because its caching strategy conflicted with its chosen isolation level.

We’ve explored each caching layer independently, but the real magic happens when they work together. A robust caching strategy isn't just about optimizing one layer—it’s about ensuring browser, CDN, API, application, and database caching function cohesively to prevent bottlenecks. Let’s explore how to align these layers for maximum efficiency and scalability.

Integrated Caching Strategy: Putting It All Together

Layered Caching Architecture

An effective caching strategy leverages multiple layers, with each layer optimized for specific types of data and access patterns:

- Browser Cache. Stores static assets like images, stylesheets, and scripts directly on the user’s device, reducing load times for repeat visits.

- CDN. Distributes cached content across globally distributed edge servers, ensuring fast delivery regardless of location.

- API Gateway Cache. Speeds up API response times by caching frequently requested data before it reaches backend services.

- Application Cache. Reduces redundant computations by caching frequently accessed data and computed results in memory.

- Database Cache. Optimizes query execution by storing precomputed results, reducing database load and improving scalability.

Cache Coherency Across Layers

One of the most challenging aspects of multi-layer caching is maintaining consistency across layers. Strategies include:

- Cache Invalidation Chains. Ensures that when data is updated in one layer, all dependent caches are invalidated, preventing stale responses.

- TTL Hierarchies. Higher caching layers (e.g., browser and CDN) expire cached content more quickly than lower layers, balancing freshness and efficiency.

- Event-Based Invalidation. Uses pub/sub messaging (e.g., Redis Pub/Sub, Kafka) to notify caching layers when data changes, improving consistency.

- Versioned Cache Keys. Embeds data versions into cache keys, ensuring clients retrieve the latest content without requiring manual cache clearing.
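Of the strategies above, versioned cache keys are the simplest to sketch in code. The example below is an illustrative in-memory version with hypothetical names (`VersionedCache` and its `entity:id:vN` key format). In a shared cache such as Redis, the version counter would live in the cache or the database rather than in a local dictionary.

```python
class VersionedCache:
    """Cache keyed by entity, id, and version; bumping the version orphans old entries."""

    def __init__(self):
        self._store = {}      # cache key -> value
        self._versions = {}   # (entity, id) -> current version number

    def _key(self, entity, entity_id):
        version = self._versions.get((entity, entity_id), 1)
        return f"{entity}:{entity_id}:v{version}"

    def get(self, entity, entity_id):
        return self._store.get(self._key(entity, entity_id))

    def set(self, entity, entity_id, value):
        self._store[self._key(entity, entity_id)] = value

    def invalidate(self, entity, entity_id):
        """Call when the underlying record changes; readers then miss on the new key."""
        current = self._versions.get((entity, entity_id), 1)
        self._versions[(entity, entity_id)] = current + 1
```

Nothing is deleted on invalidation in this sketch: stale entries simply stop being read, and a real cache would rely on TTLs or eviction to reclaim their memory.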

Monitoring and Optimization

A successful caching implementation requires ongoing monitoring and refinement:

- Cache Hit Ratio. Measures how often data is served from the cache instead of being fetched from the database. A higher ratio means fewer expensive API or database calls. Regular monitoring helps fine-tune caching strategies.

- Cache Size and Eviction. Ensuring caches aren’t overfilled prevents excessive evictions, which can lead to performance drops.

- Response Time Distribution. Comparing cached vs. non-cached response times highlights areas where caching can be improved.

- Cost-Benefit Analysis. Balances the savings from caching with the risk of serving stale data, ensuring an optimal caching strategy.
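The cache hit ratio is simply hits divided by total lookups (hits plus misses). A minimal way to track it is to count both outcomes at the point where the cache is read. In the sketch below, `CacheStats` and `cached_get` are hypothetical helpers, and a production system would more likely export these counters to its metrics platform than keep them in process memory.

```python
class CacheStats:
    """Counts cache hits and misses and derives the hit ratio."""

    def __init__(self):
        self.hits = 0
        self.misses = 0

    def record(self, hit):
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def cached_get(cache, stats, key, load):
    """Read-through lookup that records whether the cache served the request."""
    if key in cache:
        stats.record(hit=True)
        return cache[key]
    stats.record(hit=False)
    value = load(key)   # fall back to the slower source of truth
    cache[key] = value
    return value
```

For example, looking up the keys "a", "b", "a", "a" against an empty cache produces two misses followed by two hits, for a hit ratio of 0.5.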

Caching isn’t just about improving performance—it’s a core architectural decision that directly impacts user experience and scalability. A well-designed caching strategy ensures applications remain fast, efficient, and resilient under heavy load. Now, let’s summarize the key takeaways and best practices to implement caching successfully.

Conclusion: Caching as a Product Strategy

As explored throughout this article, caching isn’t merely a technical optimization—it’s a fundamental product strategy that impacts everything from user experience to operational costs. When implemented thoughtfully across all application layers, caching provides a competitive edge through superior performance, lower infrastructure costs, and improved scalability.

The most successful product teams treat caching as a core architectural decision rather than an afterthought. They recognize that different layers require different caching approaches and design their systems to maximize the strengths of each layer.

A well-designed caching strategy goes beyond speed—it enhances user experience, optimizes infrastructure costs, and ensures applications scale efficiently. By treating caching as an integral part of system architecture from the start, products can be built to handle growth and traffic surges while providing a seamless experience for users, no matter where they are.
