How MCP Can Supercharge GenAI-Powered BI Dashboards with Real-Time Data Access from Your Databases


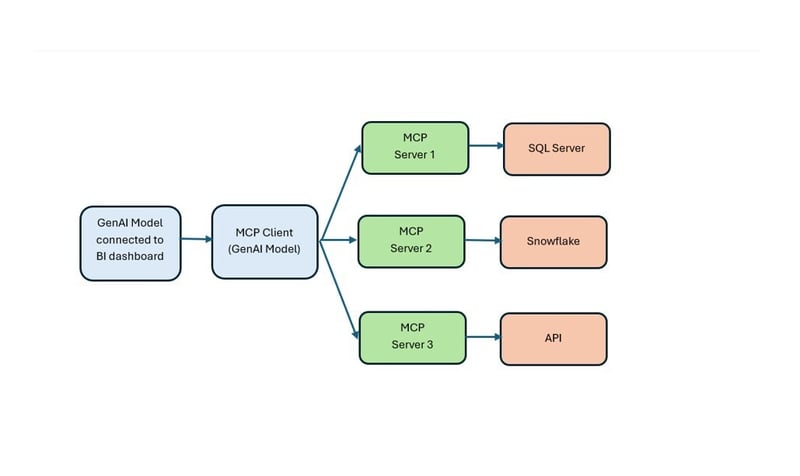

Many Business Intelligence (BI) dashboards are now powered by GenAI, which has changed how businesses interact with their data. However, LLMs often struggle to provide real-time, context-aware insights because they rely on pre-trained knowledge rather than live database access.

To bridge this gap, an MCP server can give LLMs access to business data held in in-house databases, so that real-time, actionable insights are delivered directly through BI dashboards such as Power BI and Tableau.

What is Model Context Protocol (MCP)?

As per Anthropic’s official website, “MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP as a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.”

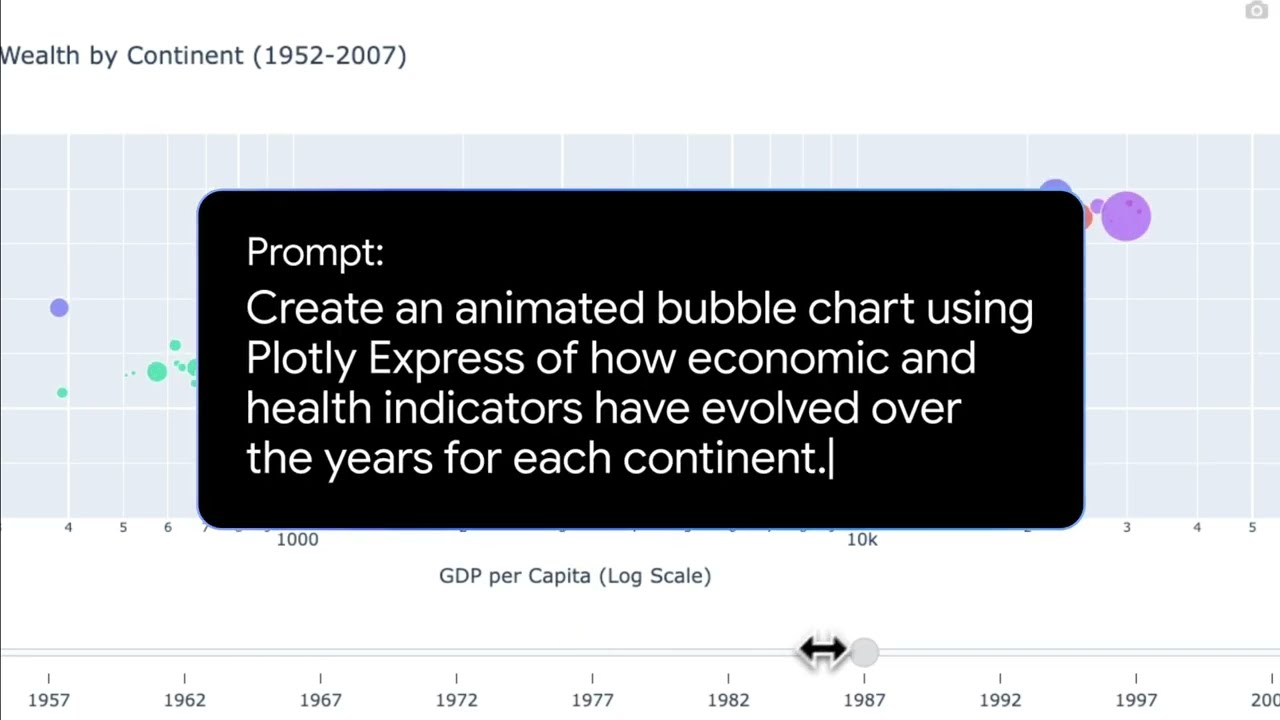

How would MCP work on Business Intelligence Dashboards?

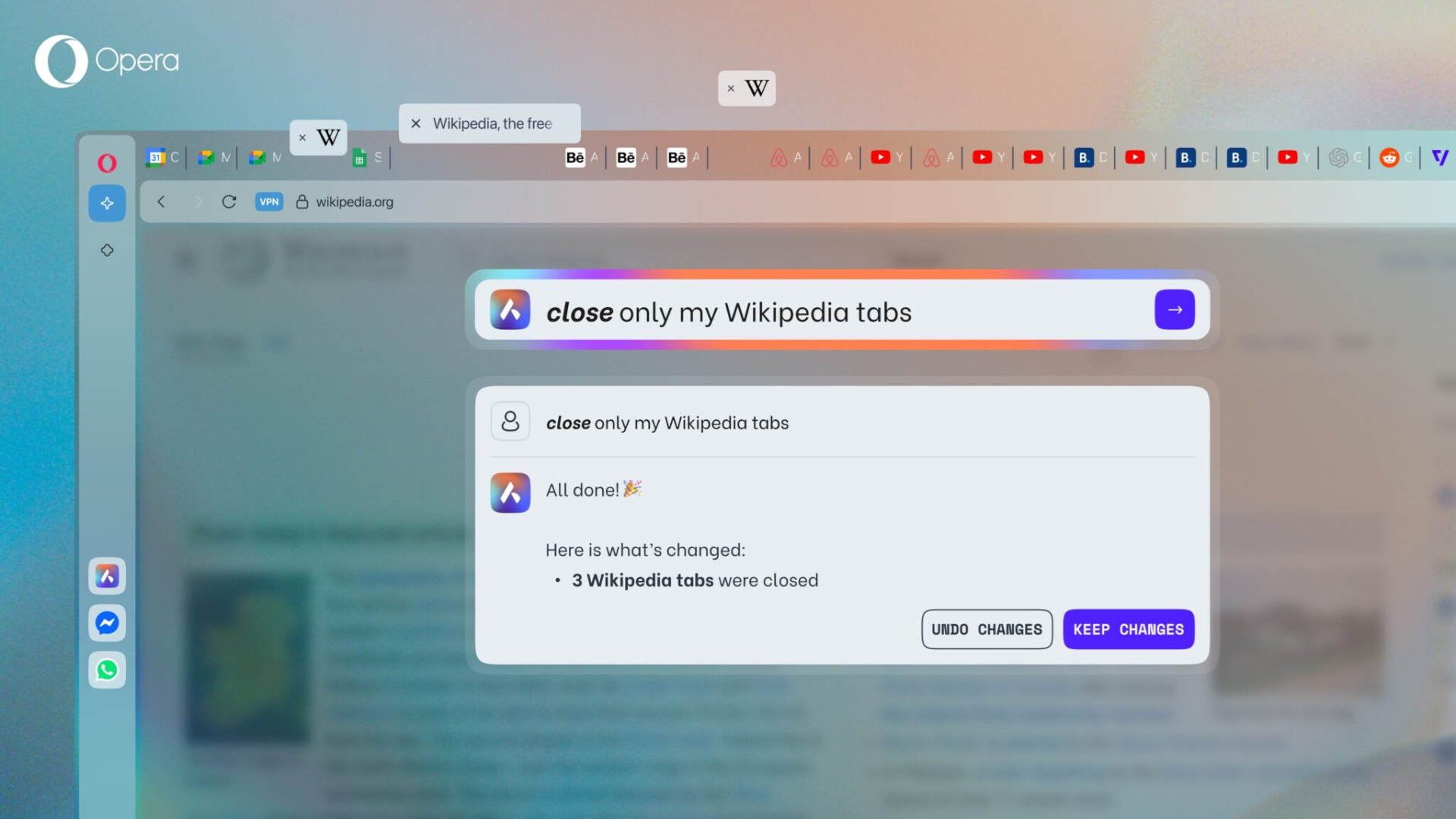

1) User Query via BI Dashboard → A user asks a natural language question (e.g., "What were last month’s sales by region?").

2) LLM Consults MCP Server → Instead of relying on static training data, the LLM queries the MCP server, which connects to databases, APIs, or cloud data lakes.

3) MCP Fetches Real-Time Data → The MCP server retrieves the latest structured data from SQL databases, Snowflake, Databricks, or other sources.

4) Data Contextualization & Response Generation → The LLM processes the data, generates insights, and presents them in the BI dashboard as a report, visualization, or narrative summary.
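The four steps above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not a real MCP server: the `sales` table, the `sales_by_region` tool, and the stubbed "LLM" in `answer_question` are all hypothetical, and an in-memory SQLite database stands in for the in-house database. The request and response dictionaries loosely follow the JSON-RPC `tools/call` message shape that MCP uses, heavily simplified; a real deployment would rely on an MCP SDK and an actual model.

```python
import json
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a hypothetical in-memory sales table (stand-in for a real database)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, month TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("North", "2025-03", 1200.0),
            ("North", "2025-03", 300.0),
            ("South", "2025-03", 800.0),
            ("South", "2025-02", 999.0),
        ],
    )
    return conn


def sales_by_region(conn: sqlite3.Connection, month: str) -> dict:
    """Hypothetical tool: total sales per region for one month (step 3)."""
    rows = conn.execute(
        "SELECT region, SUM(amount) FROM sales "
        "WHERE month = ? GROUP BY region ORDER BY region",
        (month,),
    ).fetchall()
    return {region: total for region, total in rows}


# Registry of tools the stand-in server exposes.
TOOLS = {"sales_by_region": sales_by_region}


def handle_request(conn: sqlite3.Connection, request: dict) -> dict:
    """Stand-in for the MCP server: dispatch a tools/call-style request."""
    if request.get("method") != "tools/call":
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    params = request["params"]
    data = TOOLS[params["name"]](conn, **params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(data)}]},
    }


def answer_question(conn: sqlite3.Connection, question: str, month: str) -> str:
    """Stubbed LLM: choose a tool (step 2), then summarize the result (step 4)."""
    if "sales" not in question.lower():
        return "This sketch can only answer sales questions."
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "sales_by_region", "arguments": {"month": month}},
    }
    response = handle_request(conn, request)
    data = json.loads(response["result"]["content"][0]["text"])
    parts = [f"{region}: {total:,.2f}" for region, total in data.items()]
    return f"Sales for {month} by region: " + "; ".join(parts)


if __name__ == "__main__":
    db = make_demo_db()
    # Step 1: the user's natural language question from the dashboard.
    print(answer_question(db, "What were last month's sales by region?", "2025-03"))
```

In a real dashboard the model itself would decide which tool to call and with which arguments; here that decision is hard-coded so the data path stays easy to follow.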

How is MCP different from RAG?

At first glance, MCP’s framework looks very similar to RAG. In some respects it is, but in my opinion there are differences. Let’s look at what those are:

1) How data is retrieved

MCP: Direct APIs and database queries

RAG: Semantic search from vector databases

2) LLM interaction

MCP: Queries data sources directly (SQL, APIs, Snowflake, etc.)

RAG: Finds and injects text-based context into prompts
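The contrast between the two retrieval styles can be illustrated with a toy example. This is a rough sketch under stated assumptions, not how either approach is implemented in production: the `DOCUMENTS` list, the `sales` table, and both function names are hypothetical, and a simple bag-of-words cosine similarity stands in for the learned embeddings and vector database a real RAG pipeline would use.

```python
import math
import sqlite3
from collections import Counter

# Hypothetical text snippets that a RAG pipeline might have indexed.
DOCUMENTS = [
    "Q1 sales report: the North region grew steadily",
    "Office relocation notice for the South branch",
    "Holiday schedule for all staff",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (a real system would use a learned model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rag_retrieve(question: str, documents: list, k: int = 1) -> list:
    """RAG style: rank text by similarity; the top hits are injected into the prompt."""
    query_vec = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def make_sales_db() -> sqlite3.Connection:
    """Hypothetical in-memory table standing in for a live business database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("North", 1200.0), ("North", 300.0), ("South", 800.0)],
    )
    return conn


def mcp_style_query(conn: sqlite3.Connection, region: str):
    """MCP style: run a direct query against the source and return the current value."""
    row = conn.execute(
        "SELECT SUM(amount) FROM sales WHERE region = ?", (region,)
    ).fetchone()
    return row[0]  # None when the region has no rows


if __name__ == "__main__":
    print(rag_retrieve("How did sales in the North region perform?", DOCUMENTS))
    print(mcp_style_query(make_sales_db(), "North"))
```

The RAG path returns a passage of text that may or may not contain the exact figure, while the direct query returns the current number from the source.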

References

1) Anthropic Official Blog – Introducing the Model Context Protocol

Describes MCP as an open standard and explains its USB-C analogy for AI tools.

https://www.anthropic.com/news/model-context-protocol

2) Anthropic Developer Docs – MCP Overview

Technical documentation on how MCP works, how to build a server/client, and use it with Claude.

https://docs.anthropic.com/en/docs/agents-and-tools/mcp

3) Model Context Protocol GitHub Repositories

GitHub org hosting MCP specifications, sample servers, and client libraries in Python, TypeScript, and more.

https://github.com/modelcontextprotocol

4) “Claude's Model Context Protocol (MCP): The Standard for AI Interaction” – dev.to

A developer breakdown of how MCP simplifies interactions between AI and external data sources.

https://dev.to/foxgem/claudes-model-context-protocol-mcp-the-standard-for-ai-interaction-5gko

5) Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks (Lewis et al., 2020)

Research paper introducing RAG, which pairs a pre-trained generator with a dense retriever over a vector index of documents.

https://arxiv.org/abs/2005.11401

6) Pinecone – RAG 101: Retrieval-Augmented Generation Explained

Explains how RAG retrieves knowledge from vector databases and integrates it with LLMs.

https://www.pinecone.io/learn/retrieval-augmented-generation/

7) Microsoft Power BI + Copilot Overview

Demonstrates how GenAI is embedded into dashboards for natural language querying.

https://powerbi.microsoft.com/en-us/blog/introducing-microsoft-fabric-and-copilot-in-power-bi/

8) Tableau GPT: How Tableau Integrates GenAI for Business Intelligence

Overview of how Tableau brings LLM-powered insights into data visualizations.

https://www.salesforce.com/news/press-releases/2023/05/04/tableau-gpt-announcement/
