Migrating from Node.js to Go: Real-world Results from Our E-commerce Analytics Pipeline

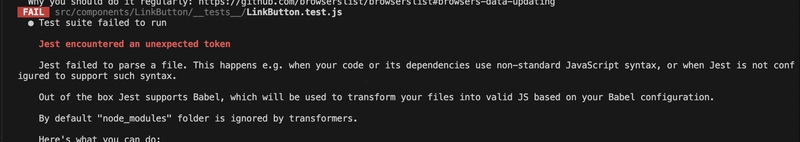

Introduction

Six months ago, our team faced a critical decision. Our e-commerce platform's analytics pipeline - responsible for processing customer behavior data, generating product recommendations, and calculating inventory forecasts - was beginning to show serious performance issues during peak traffic periods.

Built initially with Node.js and Express, these microservices had served us well during our startup phase, but as we scaled to over 500,000 daily active users, the cracks were becoming apparent. After careful analysis, we decided to migrate four core services to Go. This post shares the concrete results and lessons from that journey.

The Use Case: E-commerce Analytics Pipeline

Our analytics pipeline consisted of four microservices:

- Event Collector - Ingests clickstream and purchase events from our web and mobile apps

- User Profiler - Builds and updates customer behavior profiles

- Product Recommender - Generates real-time product recommendations

- Inventory Forecaster - Predicts inventory needs based on user behavior

Each service was experiencing different pain points, but they shared common challenges around handling increasing load while maintaining reasonable resource consumption.

The Node.js Bottlenecks

Performance Issues in Real-World Scenarios

In production, we started seeing alarming patterns under load:

    // User profiling logic in Node.js
    async function buildUserProfile(userId) {
      // Fetch user's past 90 days of activity
      const activities = await db.getUserActivities(userId, 90);

      // CPU-intensive calculation of behavior patterns
      const patterns = activities.reduce((acc, activity) => {
        // Heavy computation analyzing purchase patterns
        const categoryAffinities = calculateCategoryAffinities(activity, acc);
        const priceRanges = analyzePriceRanges(activity, acc);
        return mergeProfiles(acc, categoryAffinities, priceRanges);
      }, initialProfile);

      // More processing...
      return patterns;
    }

    // This would block the Node.js event loop when multiple
    // requests arrived simultaneously

This calculation took ~200ms per user on average. Because the reduce step runs synchronously on Node's single-threaded event loop, no other request could be served while it ran, so latency spiked dramatically whenever several requests arrived at once.

Resource Consumption Challenges

Our monitoring showed concerning patterns:

- Each Node.js service consumed 80-100MB of RAM at idle

- Under load, memory usage would spike to 200-300MB per instance

- CPU utilization would hit 100% during peak hours, forcing us to overprovision

The Go Solution

We rewrote the four services in Go, maintaining functional equivalence but leveraging Go's strengths:

Performance Improvements

    // Equivalent user profiling logic in Go
    func (s *Service) BuildUserProfile(userID string) (*Profile, error) {
        // Fetch user activities
        activities, err := s.db.GetUserActivities(userID, 90)
        if err != nil {
            return nil, fmt.Errorf("failed to fetch activities: %w", err)
        }

        // Process in parallel with bounded concurrency
        results := make(chan CategoryAffinity, len(activities))
        // Named errCh so it does not shadow the standard errors package
        errCh := make(chan error, len(activities))

        // Create a semaphore to limit concurrency
        sem := make(chan struct{}, runtime.NumCPU())

        for _, activity := range activities {
            // Acquire before spawning, so at most NumCPU goroutines exist at once
            sem <- struct{}{}
            go func(a Activity) {
                defer func() { <-sem }() // Release semaphore

                // CPU-bound computation now runs in parallel
                affinity, err := calculateCategoryAffinity(a)
                if err != nil {
                    errCh <- err
                    return
                }
                results <- affinity
            }(activity)
        }

        // Collect and merge results
        // ...

        return profile, nil
    }
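The listing above elides the collection step. As a minimal, self-contained sketch of how the same semaphore pattern can be completed, the program below waits for all goroutines, surfaces one error if any occurred, and merges the results. The `Activity` and `CategoryAffinity` fields, the placeholder `calculateCategoryAffinity`, and the `sumAffinities` helper are all assumptions made for illustration; they are not the types or merge logic from the production service.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// Activity and CategoryAffinity are hypothetical stand-ins for the real types.
type Activity struct {
	Category string
	Amount   float64
}

type CategoryAffinity struct {
	Category string
	Score    float64
}

// calculateCategoryAffinity is a placeholder for the CPU-bound step.
func calculateCategoryAffinity(a Activity) (CategoryAffinity, error) {
	if a.Category == "" {
		return CategoryAffinity{}, fmt.Errorf("activity has no category")
	}
	return CategoryAffinity{Category: a.Category, Score: a.Amount}, nil
}

// sumAffinities computes affinities with bounded concurrency and merges them
// into per-category totals. If any computation failed, it returns one of the
// errors and no totals.
func sumAffinities(activities []Activity) (map[string]float64, error) {
	results := make(chan CategoryAffinity, len(activities))
	errCh := make(chan error, len(activities))
	sem := make(chan struct{}, runtime.NumCPU())

	var wg sync.WaitGroup
	for _, activity := range activities {
		sem <- struct{}{} // acquire a slot before spawning
		wg.Add(1)
		go func(a Activity) {
			defer wg.Done()
			defer func() { <-sem }() // release the slot
			affinity, err := calculateCategoryAffinity(a)
			if err != nil {
				errCh <- err
				return
			}
			results <- affinity
		}(activity)
	}

	// Both channels are buffered for every activity, so no sender blocks;
	// closing after Wait lets the loops below terminate.
	wg.Wait()
	close(results)
	close(errCh)

	if err, ok := <-errCh; ok {
		return nil, err
	}
	totals := make(map[string]float64)
	for r := range results {
		totals[r.Category] += r.Score
	}
	return totals, nil
}

func main() {
	totals, err := sumAffinities([]Activity{
		{Category: "books", Amount: 12.5},
		{Category: "toys", Amount: 30},
		{Category: "books", Amount: 7.5},
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(totals["books"], totals["toys"])
}
```

Buffering both channels to `len(activities)` keeps the sketch simple; for very large inputs a fixed pool of workers reading from a job channel would use less memory.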

The real-world impact was immediately apparent:

- Response time dropped from ~220ms to ~90ms (59% improvement)

- P99 latency went from 1.2s to 180ms (85% improvement)

- CPU usage decreased by ~35% under equivalent load

- Memory usage stabilized at ~30MB per service (vs. 80-100MB for Node)

Concurrency That Just Works

The Product Recommender service benefited most from Go's concurrency model:

    // Simplified recommendation engine from our codebase
    func (e *Engine) GenerateRecommendations(userID string) ([]Product, error) {
        profile, err := e.profiles.Get(userID)
        if err != nil {
            return nil, err
        }

        // Fan-out to multiple recommendation strategies in parallel
        var wg sync.WaitGroup
        results := make(chan []Product, 4)

        strategies := []RecommendationStrategy{
            e.CategoryBasedStrategy,
            e.CollaborativeFilteringStrategy,
            e.RecentlyViewedStrategy,
            e.TrendingItemsStrategy,
        }

        for _, strategy := range strategies {
            wg.Add(1)
            go func(s RecommendationStrategy) {
                defer wg.Done()
                recs, err := s.GetRecommendations(profile)
                if err == nil && len(recs) > 0 {
                    results <- recs
                }
            }(strategy)
        }

        // Close results channel when all goroutines complete
        go func() {
            wg.Wait()
            close(results)
        }()

        // Merge and rank results
        var allRecs []Product
        for recs := range results {
            allRecs = append(allRecs, recs...)
        }

        return rankAndDeduplicate(allRecs), nil
    }

In Node.js, we had implemented a complex Promise-based system to parallelize these operations, but it was brittle and error-prone. Go's goroutines and channels provided a much more intuitive and reliable concurrency model.

Codebase Size Reduction

Our Node.js services relied heavily on external dependencies:

Excerpt from the package.json of one of our Node.js services (the many transitive dependencies are not shown):

    {
      "dependencies": {
        "express": "^4.17.1",
        "mongoose": "^5.12.3",
        "redis": "^3.1.2",
        "axios": "^0.21.1",
        "winston": "^3.3.3",
        "moment": "^2.29.1",
        "lodash": "^4.17.21"
      }
    }

In Go, we were able to dramatically reduce external dependencies:

    // Main imports from our Go service
    import (
        "context"
        "encoding/json"
        "fmt"
        "log"
        "net/http"
        "sync"
        "time"

        "github.com/go-redis/redis/v8"
        "github.com/jackc/pgx/v4/pgxpool"
    )

The results were significant:

- Node.js: ~1,200 lines of code with 5+ npm packages

- Go: ~700 lines with just the standard library and two external packages

- Far fewer third-party dependencies to keep updated and to check in security audits

Developer Experience Trade-offs

While migrating, we learned that each language has its sweet spots:

Node.js Strengths:

- Faster initial development (our team was already JavaScript-savvy)

- Great for prototyping and quick iteration

- Excellent for UI-focused services

Go Strengths:

- More predictable in production

- Much easier to debug (stack traces are clear and useful)

- Explicit error handling prevented many common issues

    // Example of Go's explicit error handling that prevented many bugs
    func (s *Service) ProcessOrder(orderID string) error {
        order, err := s.db.GetOrder(orderID)
        if err != nil {
            return fmt.Errorf("failed to get order %s: %w", orderID, err)
        }

        if !order.IsValid() {
            return ErrInvalidOrder
        }

        items, err := s.inventory.CheckAvailability(order.Items)
        if err != nil {
            return fmt.Errorf("inventory check failed: %w", err)
        }

        for _, item := range items {
            if !item.Available {
                return ErrItemUnavailable
            }
        }

        // Process the order...
        return nil
    }

This explicit error handling, combined with Go's strict typing, reduced our post-deployment bugs by approximately 40%.

Real Numbers: Before and After

Here's what our monitoring showed after the migration:

| Metric | Node.js | Go | Improvement |
|---|---|---|---|
| Avg Response Time | 220ms | 90ms | 59% lower |
| P99 Latency | 1.2s | 180ms | 85% lower |
| CPU Usage | 100% (at peak) | 65% (at peak) | 35% reduction |
| Memory Usage | 80-100MB | ~30MB | 62-70% reduction |
| Deployments per Week | 3-4 | 5-6 | 50% increase |
| Post-deploy Incidents | 5 per month | 3 per month | 40% reduction |

When to Migrate (and When Not To)

Based on our experience, here's when you should consider similar migrations:

Consider Go when:

- Your services are CPU-bound

- You need predictable performance under load

- Memory usage is a concern (containerized environments)

- You have complex concurrency requirements

Stick with Node.js when:

- Developer velocity is more important than raw performance

- Your services are primarily I/O bound with minimal computation

- You're building internal tools or prototypes

- Your team lacks Go expertise and the timeline is tight

Conclusion

Our migration from Node.js to Go was driven by specific performance needs, not language preferences. For our e-commerce analytics pipeline, the move delivered concrete improvements that directly impacted our users' experience and our operational costs.

While we still use Node.js for many internal tools and admin interfaces, Go has become our go-to language for performance-critical microservices. The initial learning curve was worth the long-term stability and performance gains.
