MongoDB TTL and Disk Storage

In a previous blog post, I explained how MongoDB TTL indexes work and how they are optimized to avoid fragmentation during scans. However, I didn’t cover the details of on-disk storage. A recent Reddit question is a good occasion to explore this aspect further.

Reproducible example

Here is a small program that inserts documents in a loop, with a timestamp and some random text:

// random string to be not too friendly with compression

function getRandomString(length) {

const characters = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789';

let result = '';

const charactersLength = characters.length;

for (let i = 0; i < length; i++) {

result += characters.charAt(Math.floor(Math.random() * charactersLength));

}

return result;

}

// MongoDB loop for inserting documents with a random string

while (true) {

const doc = { text: getRandomString(1000), ts: new Date() };

db.ttl.insertOne(doc);

insertedCount++;

}

Before executing this, I started a background function that displays statistics every minute: the number of records, the logical (uncompressed) collection size, the on-disk storage size, and the total index size.

// Prints stats every minute

let insertedCount = 0;

let maxThroughput = 0;

let maxCollectionCount = 0;

let maxCollectionSize = 0;

let maxStorageSize = 0;

let maxTotalIndexSize = 0;

setInterval(async () => {

const stats = await db.ttl.stats();

const throughput = insertedCount / 10; // the interval is 60 seconds, so this is one tenth of the documents inserted per minute, despite the docs/min label

const collectionCount = stats.count;

const collectionSizeMB = stats.size / 1024 / 1024;

const storageSizeMB = stats.storageSize / 1024 / 1024;

const totalIndexSizeMB = stats.totalIndexSize / 1024 / 1024;

maxThroughput = Math.max(maxThroughput, throughput);

maxCollectionCount = Math.max(maxCollectionCount, collectionCount);

maxCollectionSize = Math.max(maxCollectionSize, collectionSizeMB);

maxStorageSize = Math.max(maxStorageSize, storageSizeMB);

maxTotalIndexSize = Math.max(maxTotalIndexSize, totalIndexSizeMB);

console.log(`Collection Name: ${stats.ns}

Throughput: ${throughput.toFixed(0).padStart(10)} docs/min (max: ${maxThroughput.toFixed(0)} docs/min)

Collection Size: ${collectionSizeMB.toFixed(0).padStart(10)} MB (max: ${maxCollectionSize.toFixed(0)} MB)

Number of Records: ${collectionCount.toFixed(0).padStart(10)} (max: ${maxCollectionCount.toFixed(0)} docs)

Storage Size: ${storageSizeMB.toFixed(0).padStart(10)} MB (max: ${maxStorageSize.toFixed(0)} MB)

Total Index Size: ${totalIndexSizeMB.toFixed(0).padStart(10)} MB (max: ${maxTotalIndexSize.toFixed(0)} MB)`);

insertedCount = 0;

}, 60000); // every minute

I created the collection with a TTL index, which automatically expires data older than five minutes:

// TTL: expire documents after 5 minutes

db.ttl.drop();

db.ttl.createIndex({ ts: 1 }, { expireAfterSeconds: 300 });
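To make the expiration rule concrete, here is a minimal sketch of the condition the TTL index expresses: a document becomes eligible for deletion once its `ts` value plus `expireAfterSeconds` is in the past. The helper `isExpired` is hypothetical and only mirrors the rule for illustration; it is not how MongoDB implements it, and the exact behavior at the boundary is an assumption.

```javascript
// Hypothetical helper, not part of MongoDB: approximates the TTL rule
// "eligible once ts + expireAfterSeconds has passed".
function isExpired(doc, expireAfterSeconds, now = new Date()) {
  const expiresAt = doc.ts.getTime() + expireAfterSeconds * 1000;
  return expiresAt <= now.getTime();
}

// Fixed timestamps so the example is deterministic
const now = new Date('2025-01-01T00:10:00Z');
const fresh = { ts: new Date('2025-01-01T00:06:00Z') }; // 4 minutes old
const stale = { ts: new Date('2025-01-01T00:04:00Z') }; // 6 minutes old

console.log(isExpired(fresh, 300, now)); // false
console.log(isExpired(stale, 300, now)); // true
```

Eligible documents are not removed instantly: the TTL background task runs every 60 seconds, so a document can outlive its expiration time by up to about a minute, or longer on a busy server.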

I let this run to see how the storage size evolves.

Output after 3 hours

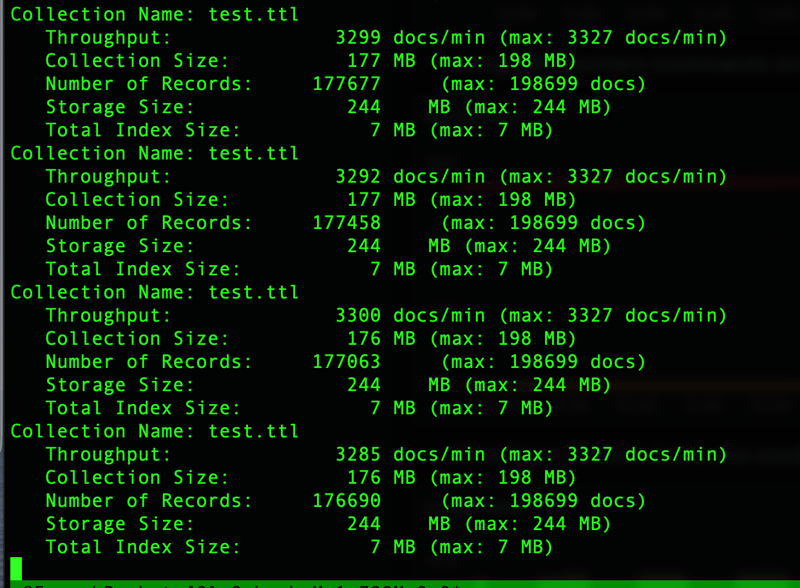

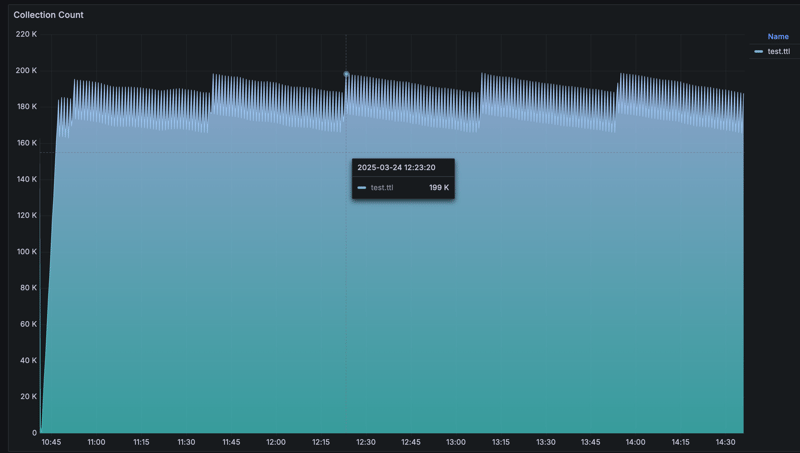

The steady insert rate, combined with TTL expiration, keeps the number of documents in the collection relatively stable. The TTL monitor deletes expired documents every minute, so the overall document count stays within a narrow range.

I observe the same from the statistics I log every minute:

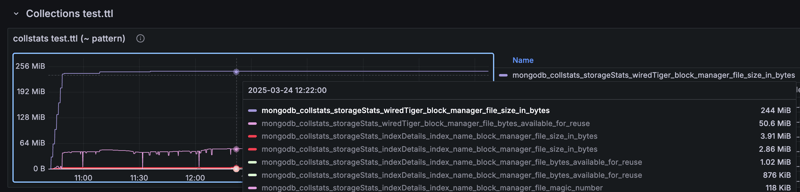
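The stable document count can be sanity-checked with simple arithmetic. This is a minimal sketch, assuming an insert rate of about 33,000 documents per minute (inferred from the logged throughput, which the script divides by 10) and the TTL monitor's 60-second cycle. The helper name `steadyStateRange` is hypothetical.

```javascript
// Hypothetical helper: expected number of live documents at steady state.
// Documents live at least ttlSeconds, and up to one monitor cycle longer.
function steadyStateRange(docsPerMinute, ttlSeconds, monitorIntervalSeconds = 60) {
  const min = (docsPerMinute * ttlSeconds) / 60;
  const max = (docsPerMinute * (ttlSeconds + monitorIntervalSeconds)) / 60;
  return { min: Math.round(min), max: Math.round(max) };
}

// Assumed rate: roughly 33,000 docs/min, with a 300-second TTL
const range = steadyStateRange(33000, 300);
console.log(range); // { min: 165000, max: 198000 }
```

That range is roughly in line with the record counts in the logs further down, which stay around 171,000 with a maximum just under 199,000.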

The storage size also remains constant, at 244 MB for the collection and 7 MB for the indexes. The files grew during the first six minutes and then stayed the same size.

This is sufficient to show that the deletions and insertions do not cause fragmentation that would require additional consideration. About 25% of the storage is marked as available for reuse, and it is effectively reused by new inserts.
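The share of reusable space can be read from the WiredTiger section of the collection statistics. The following is a sketch rather than a definitive method: `reusableRatio` is a hypothetical helper, and the sample object uses made-up numbers shaped like the `block-manager` part of `db.ttl.stats()` output.

```javascript
// Hypothetical helper: fraction of the collection file that WiredTiger
// reports as available for reuse.
function reusableRatio(stats) {
  const blockManager = stats.wiredTiger['block-manager'];
  const fileBytes = blockManager['file size in bytes'];
  const reusableBytes = blockManager['file bytes available for reuse'];
  return reusableBytes / fileBytes;
}

// Made-up sample values, only to show the shape of the input.
// In mongosh, pass the real statistics instead: reusableRatio(db.ttl.stats())
const sample = {
  wiredTiger: {
    'block-manager': {
      'file size in bytes': 256000000,
      'file bytes available for reuse': 64000000,
    },
  },
};

console.log((reusableRatio(sample) * 100).toFixed(1) + '%'); // 25.0%
```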

It is possible to force a compaction, to temporarily reclaim more space, but it is not necessary:

Collection Name: test.ttl

Throughput: 3286 docs/min (max: 3327 docs/min)

Collection Size: 170 MB (max: 198 MB)

Number of Records: 171026 (max: 198699 docs)

Storage Size: 244 MB (max: 244 MB)

Total Index Size: 7 MB (max: 7 MB)

Collection Name: test.ttl

Throughput: 3299 docs/min (max: 3327 docs/min)

Collection Size: 170 MB (max: 198 MB)

Number of Records: 170977 (max: 198699 docs)

Storage Size: 244 MB (max: 244 MB)

Total Index Size: 6 MB (max: 7 MB)

Collection Name: test.ttl

Throughput: 3317 docs/min (max: 3327 docs/min)

Collection Size: 170 MB (max: 198 MB)

Number of Records: 170985 (max: 198699 docs)

Storage Size: 244 MB (max: 244 MB)

Total Index Size: 6 MB (max: 7 MB)

test> db.runCommand({ compact: 'ttl' });

{ bytesFreed: 49553408, ok: 1 }

Collection Name: test.ttl

Throughput: 1244 docs/min (max: 3327 docs/min)

Collection Size: 150 MB (max: 198 MB)

Number of Records: 150165 (max: 198699 docs)

Storage Size: 197 MB (max: 244 MB)

Total Index Size: 6 MB (max: 7 MB)

Collection Name: test.ttl

Throughput: 3272 docs/min (max: 3327 docs/min)

Collection Size: 149 MB (max: 198 MB)

Number of Records: 149553 (max: 198699 docs)

Storage Size: 203 MB (max: 244 MB)

Total Index Size: 6 MB (max: 7 MB)

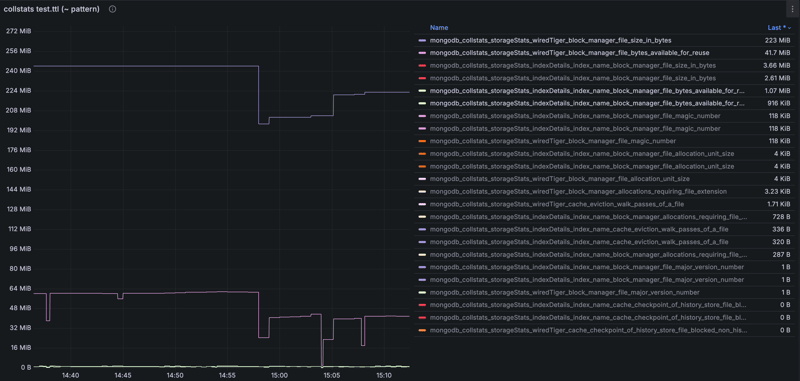

While this has reduced the storage size, it eventually returns to its normal volume. It's typical for a B-tree to maintain some free space, which avoids frequent space allocation and reclamation.
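The `bytesFreed` value returned by `compact` is consistent with the drop in storage size seen in the logs. A small worked example, using only the numbers shown above (`bytesToMB` is a hypothetical helper name):

```javascript
// Hypothetical helper: convert bytes to MB the same way the stats script does
function bytesToMB(bytes) {
  return bytes / 1024 / 1024;
}

const bytesFreed = 49553408;   // from the compact output above
const storageBeforeMB = 244;   // storage size logged before compaction

const freedMB = bytesToMB(bytesFreed);
console.log(freedMB.toFixed(1));                    // 47.3
console.log(Math.round(storageBeforeMB - freedMB)); // 197
```

The result matches the 197 MB storage size logged right after the compaction.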

Here is a closer look at the period when I ran the manual compaction:

Conclusion

TTL deletion makes space available for reuse instead of reclaiming it immediately, but this doesn't increase fragmentation. The space is reused automatically, which keeps the total size proportional to the document count, with a constant free space of about 25% in my case. Keeping some free space avoids frequent allocations, as is typical of B-tree implementations.

The TTL mechanism operates autonomously and requires no manual compaction. If you have any doubt, monitor it. MongoDB offers statistics to compare logical and physical sizes at both the MongoDB layer and the WiredTiger storage layer.
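One simple way to monitor this is to record the storage size at regular intervals and check that it has stopped growing. This is a minimal sketch under stated assumptions: `hasPlateaued` is a hypothetical helper, the window and tolerance are arbitrary choices, and the two sample series are illustrative values, not measurements from this test.

```javascript
// Hypothetical helper: true when the last `window` samples stay within
// `toleranceMB` of each other, meaning the storage size has stabilized.
function hasPlateaued(samplesMB, window = 5, toleranceMB = 1) {
  if (samplesMB.length < window) return false;
  const recent = samplesMB.slice(-window);
  return Math.max(...recent) - Math.min(...recent) <= toleranceMB;
}

// Illustrative series of storageSize values in MB (made up)
const growing = [40, 81, 122, 163, 204, 244];
const steady = [204, 244, 244, 244, 244, 244];

console.log(hasPlateaued(growing)); // false
console.log(hasPlateaued(steady));  // true
```

In mongosh, the samples could come from `db.ttl.stats().storageSize / 1024 / 1024`, collected once per minute as in the statistics script above.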
