DeepSeek-V3 Now Runs At 20 Tokens Per Second On Mac Studio

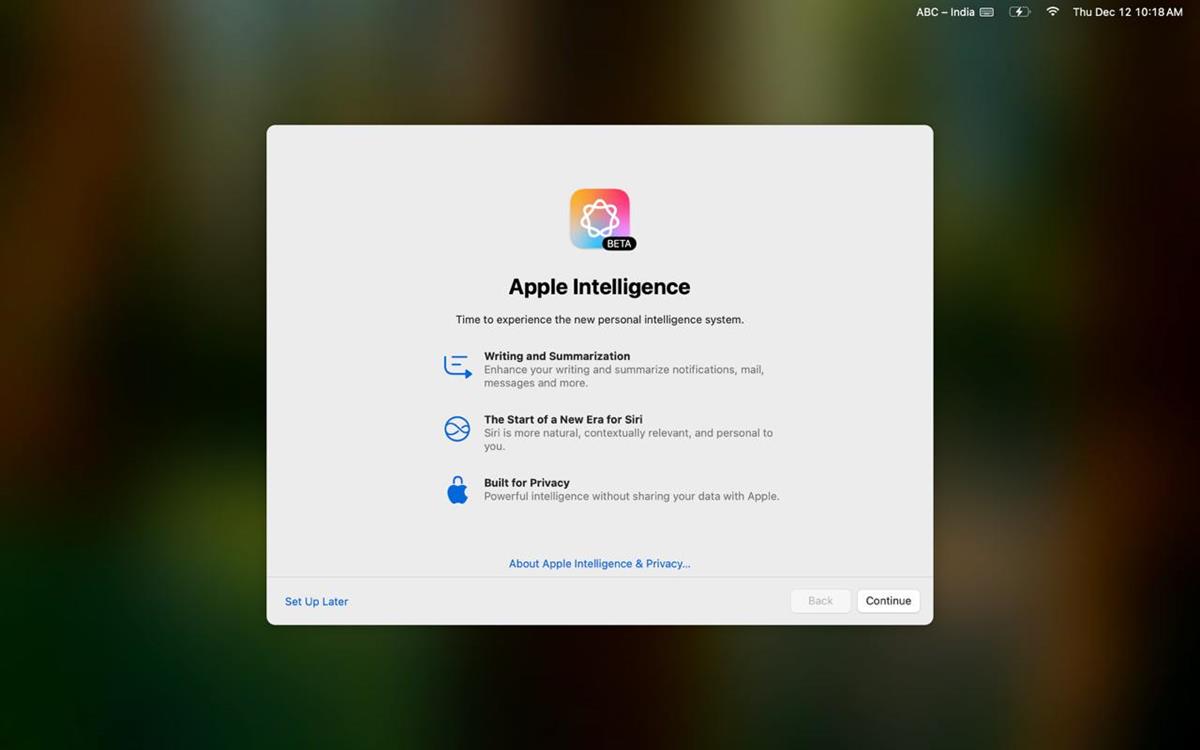

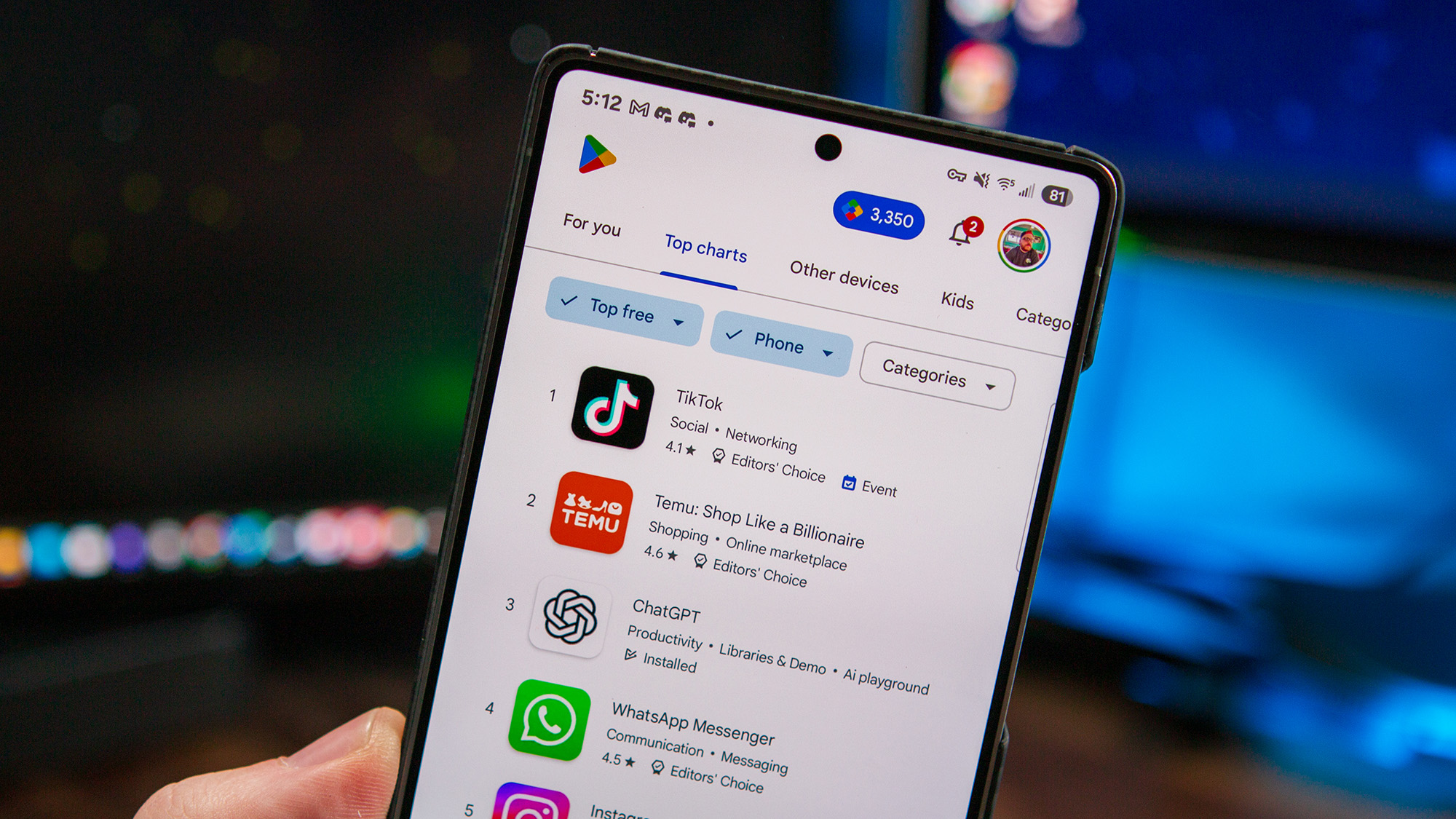

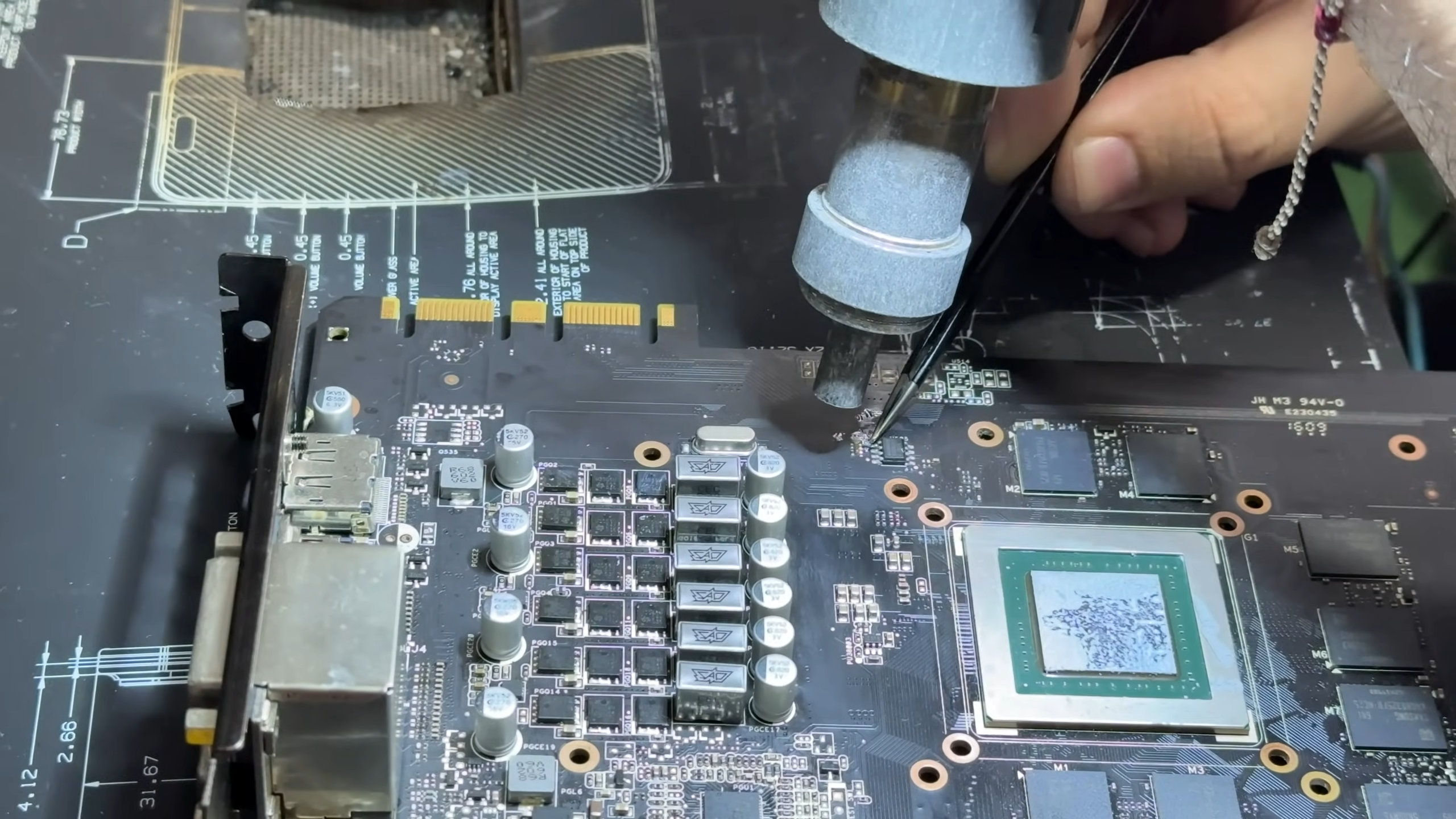

An anonymous reader quotes a report from VentureBeat: Chinese AI startup DeepSeek has quietly released a new large language model that's already sending ripples through the artificial intelligence industry -- not just for its capabilities, but for how it's being deployed. The 641-gigabyte model, dubbed DeepSeek-V3-0324, appeared on AI repository Hugging Face today with virtually no announcement (just an empty README file), continuing the company's pattern of low-key but impactful releases. What makes this launch particularly notable is the model's MIT license -- making it freely available for commercial use -- and early reports that it can run directly on consumer-grade hardware, specifically Apple's Mac Studio with M3 Ultra chip.

"The new DeepSeek-V3-0324 in 4-bit runs at > 20 tokens/second on a 512GB M3 Ultra with mlx-lm!" wrote AI researcher Awni Hannun on social media. While the $9,499 Mac Studio might stretch the definition of "consumer hardware," the ability to run such a massive model locally is a major departure from the data center requirements typically associated with state-of-the-art AI. [...]

Simon Willison, a developer tools creator, noted in a blog post that a 4-bit quantized version reduces the storage footprint to 352GB, making it feasible to run on high-end consumer hardware like the Mac Studio with M3 Ultra chip. This represents a potentially significant shift in AI deployment. While traditional AI infrastructure typically relies on multiple Nvidia GPUs consuming several kilowatts of power, the Mac Studio draws less than 200 watts during inference. This efficiency gap suggests the AI industry may need to rethink assumptions about infrastructure requirements for top-tier model performance.

"The implications of an advanced open-source reasoning model cannot be overstated," reports VentureBeat. "Current reasoning models like OpenAI's o1 and DeepSeek's R1 represent the cutting edge of AI capabilities, demonstrating unprecedented problem-solving abilities in domains from mathematics to coding. Making this technology freely available would democratize access to AI systems currently limited to those with substantial budgets."

"If DeepSeek-R2 follows the trajectory set by R1, it could present a direct challenge to GPT-5, OpenAI's next flagship model rumored for release in coming months. The contrast between OpenAI's closed, heavily-funded approach and DeepSeek's open, resource-efficient strategy represents two competing visions for AI's future."
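The numbers quoted in the report can be checked with some quick arithmetic. The Python sketch below is only a back-of-the-envelope illustration: the constants come from the figures quoted in the story, while the `summarize` helper and its field names are made up for this example. It treats the reported 20 tokens/second as a lower bound and the reported 200 watts as an upper bound, and it ignores runtime overhead such as the operating system and the inference cache, so the real memory headroom would be smaller.

```python
# Figures taken from the report quoted in this story.
FULL_MODEL_GB = 641        # size of the released checkpoint
QUANTIZED_GB = 352         # footprint of the 4-bit quantized version
UNIFIED_MEMORY_GB = 512    # memory of the Mac Studio M3 Ultra configuration
TOKENS_PER_SECOND = 20     # reported lower bound ("> 20 tokens/second")
POWER_WATTS = 200          # reported upper bound ("less than 200 watts")


def summarize() -> dict:
    """Hypothetical helper: derive rough ratios from the quoted figures."""
    return {
        # Fraction of the original size left after 4-bit quantization.
        "size_ratio": QUANTIZED_GB / FULL_MODEL_GB,
        # Whether each version fits in memory (weights only, no overhead).
        "full_fits": FULL_MODEL_GB < UNIFIED_MEMORY_GB,
        "quantized_fits": QUANTIZED_GB < UNIFIED_MEMORY_GB,
        # Memory left over for everything besides the weights.
        "headroom_gb": UNIFIED_MEMORY_GB - QUANTIZED_GB,
        # Watts are joules per second, so W / (tokens/s) = joules per token.
        "max_joules_per_token": POWER_WATTS / TOKENS_PER_SECOND,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

On these assumptions the quantized model is a little over half the size of the original, only the quantized version fits in 512GB, and each generated token costs at most about 10 joules.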

Read more of this story at Slashdot.
