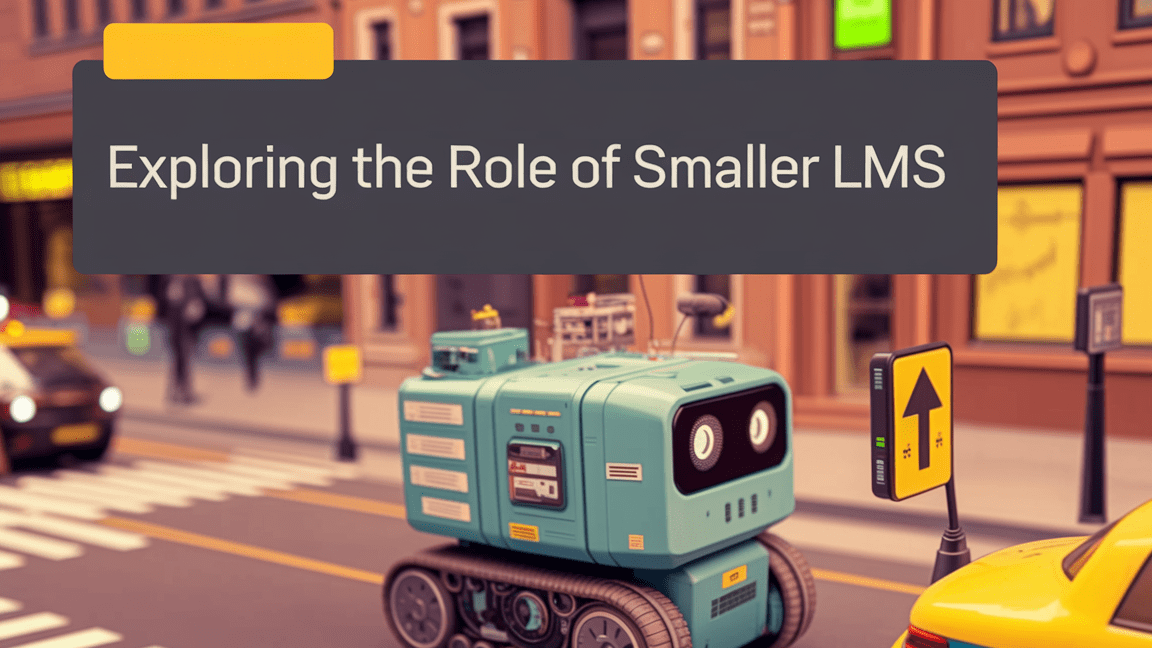

The rise of agentic AI: the need for guardrails while shaping the future of work

The rise of Agentic AI holds immense potential to transform the workforce.

As artificial intelligence (AI) evolves, the rise of Agentic AI – systems that can act autonomously and make decisions without constant human oversight – holds immense potential to transform the workforce. These systems can automate complex tasks, boost productivity, and drive operational efficiency.

However, as AI gains autonomy, it also introduces new challenges, particularly around accuracy and reliability. Issues like AI hallucinations underscore the need for careful management. And as businesses increasingly rely on AI for customer service, decision-making, and content creation, ensuring these systems are trustworthy becomes crucial.

The future of work will be shaped by AI, not by replacing humans but by augmenting their abilities. While some jobs may be displaced, new opportunities will emerge, allowing workers to focus on tasks requiring creativity, strategy, and emotional intelligence. The key to this future lies in establishing strong guardrails to ensure AI’s responsible, effective use.

The Challenge of AI Hallucinations and Their Impact

Despite its capabilities, Agentic AI is far from flawless. One of the most significant challenges AI systems face is the phenomenon of "hallucinations," or instances where the AI generates information that is inaccurate, misleading, or completely false.

These hallucinations occur partly because AI systems, particularly large language models (LLMs), generate text by predicting likely next words from patterns learned across vast amounts of training data, rather than by checking facts against a verified source. They don't always have a full understanding of the context or accuracy of that data, so when the information fed into these models is incorrect, incomplete, or outdated, the AI can produce responses that seem plausible but are factually incorrect.

Hallucinations are particularly dangerous because they are difficult to detect. Unlike traditional software bugs that result in obvious error messages, AI hallucinations can appear as coherent and confident responses, making it challenging for users to spot errors.

This poses significant risks for businesses that deploy AI in customer-facing roles. If an AI chatbot gives customers inaccurate answers, it can damage the company’s reputation, erode trust, and result in lost sales. For instance, a customer relying on an AI-driven customer service bot for urgent information could be misled by a hallucinated response, leading to frustration and dissatisfaction.

For organizations, these hallucinations represent more than just a minor annoyance. They can have real financial and reputational costs. Businesses must ensure that their AI systems are both accurate and trustworthy if they are to capitalize on the benefits of automation without jeopardizing customer loyalty or operational efficiency.
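Because hallucinations rarely announce themselves, one practical safeguard is to check an AI answer against the source material it was supposed to draw on and flag anything unsupported for review. The Python sketch below shows a deliberately crude version of that idea based on word overlap. The function names, the stop-word list and the 0.5 threshold are illustrative assumptions, and production systems typically rely on stronger methods such as entailment models or citation checks.

```python
import re

# Small illustrative stop-word list; a real system would use a fuller one.
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "of", "to", "in", "on",
              "and", "or", "for", "with", "by", "at", "it", "this", "that"}


def content_words(text: str) -> set[str]:
    """Lowercase the text and keep alphanumeric tokens that are not stop words."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS}


def unsupported_sentences(answer: str, sources: list[str], threshold: float = 0.5) -> list[str]:
    """Return answer sentences in which fewer than `threshold` of the content words
    appear anywhere in the source texts."""
    source_vocab: set[str] = set()
    for doc in sources:
        source_vocab |= content_words(doc)

    flagged = []
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue  # nothing checkable in this sentence
        support = len(words & source_vocab) / len(words)
        if support < threshold:
            flagged.append(sentence)
    return flagged


if __name__ == "__main__":
    policy = [
        "Orders placed before 2pm ship the same business day.",
        "Refunds are issued within 14 days of receiving the returned item.",
    ]
    reply = ("Orders placed before 2pm ship the same business day. "
             "Premium members also get free overnight delivery to any country.")
    for sentence in unsupported_sentences(reply, policy):
        print("Needs review:", sentence)
```

In this example the first sentence passes because every content word appears in the shipping policy, while the claim about premium overnight delivery is flagged for a person to check. Word overlap misses paraphrases and can be fooled by answers that reuse source words in a false combination, so it is best treated as a first filter rather than a verdict.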

The Role of Dynamic Meaning Theory in AI

To mitigate hallucinations and improve AI accuracy, it’s essential to focus on how AI understands and processes meaning. One framework that can help improve AI’s performance is Dynamic Meaning Theory (DMT). According to this theory, the meaning of words and phrases is not fixed but evolves dynamically based on context.

In other words, the meaning of a term may shift depending on the surrounding information and the situation in which it is used. This concept is crucial for AI systems that need to generate contextually appropriate and accurate responses. DMT helps AI systems better understand the nuances of language, reducing the likelihood of errors caused by misunderstanding context. With this framework, AI models can become more adept at producing relevant, accurate, and nuanced outputs.
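DMT is a theory of meaning rather than a specific algorithm, so it cannot be shown directly in code. A much older and simpler natural language processing technique, the simplified Lesk algorithm for word-sense disambiguation, does illustrate the underlying idea that surrounding words decide which meaning applies. In this Python sketch the two "bank" senses and their short definitions are hypothetical examples; the sense whose definition shares the most words with the context wins.

```python
import re

# Hypothetical mini sense inventory: each sense of "bank" has a short definition.
SENSES = {
    "bank/finance": "a financial institution where people deposit money and take out loans",
    "bank/river": "sloping land beside a river, stream or lake, often near the water",
}

# Tiny illustrative stop-word list so that words like "the" do not count as evidence.
STOP_WORDS = {"a", "an", "and", "at", "i", "in", "it", "of", "on", "or",
              "the", "to", "we", "where"}


def tokens(text: str) -> set[str]:
    """Lowercase, split into words, and drop stop words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def pick_sense(context: str, senses: dict[str, str]) -> str:
    """Simplified Lesk: choose the sense whose definition overlaps most with the context.

    max() returns the first sense in dict order if two overlaps tie.
    """
    ctx = tokens(context)
    return max(senses, key=lambda name: len(ctx & tokens(senses[name])))


if __name__ == "__main__":
    print(pick_sense("I need to deposit money at the bank before it closes", SENSES))
    print(pick_sense("We sat on the bank of the river and watched the water", SENSES))
```

The first sentence selects the financial sense on "deposit" and "money", and the second selects the riverside sense on "river" and "water". Modern LLMs capture context through learned representations rather than word counting, but the principle of letting context select meaning is similar.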

Mitigating Hallucinations and Ensuring Responsible AI Use

A key to reducing hallucinations is ensuring that AI systems can keep learning from new data, feedback, and evolving language trends, so they can adapt to the ever-changing contexts in which they operate. This adaptability is critical to minimizing how often hallucinations occur.

There are several strategies businesses can leverage:

Quality Data and Robust Training: One of the most effective ways to reduce hallucinations is to ensure that AI systems are trained on high-quality, accurate, and contextually relevant data. Enterprises can combine internal, proprietary data with external sources to provide a more comprehensive and accurate base for AI training.

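Retrieval-Augmented Generation (RAG): This approach connects AI systems to a retrieval step that, at query time, fetches relevant documents from a trusted source such as a company knowledge base and passes them to the model alongside the question. Responses are then grounded in current, relevant information rather than relying solely on what the model absorbed during training.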

Fine-Tuning: Regularly fine-tuning AI models on fresh, accurate data helps keep them aligned with the latest knowledge and trends. Done with carefully curated examples, this process can also help reduce bias and better prepare AI systems to handle complex queries.
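Of these strategies, retrieval-augmented generation is the easiest to make concrete. The Python sketch below shows the two steps that happen before a model is called: retrieve the most relevant documents for a question, then build a prompt that tells the model to answer only from them. It assumes a tiny in-memory knowledge base and ranks documents by simple keyword overlap; real deployments usually use embedding-based vector search, and the final call to a language model is left out because it depends on the provider. All names and documents here are hypothetical.

```python
import re
from dataclasses import dataclass

# Small illustrative stop-word list so that words like "the" do not drive ranking.
STOP_WORDS = {"a", "an", "the", "to", "of", "for", "is", "are", "can", "be",
              "do", "i", "how", "what", "have"}


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split into alphanumeric words, and drop stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS}


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Return up to k documents that share the most words with the query.

    Documents with no overlap are discarded; ties keep corpus order
    because list.sort is stable (also with reverse=True).
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Assemble a prompt that asks the model to answer only from the retrieved context."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    knowledge_base = [
        Document("returns-01", "Return policy: any item can be returned within 30 days of delivery."),
        Document("shipping-02", "Standard shipping takes 3 to 5 business days."),
        Document("hours-03", "Support is available Monday to Friday, 9am to 5pm."),
    ]
    question = "What is the return policy for an item?"
    print(build_prompt(question, retrieve(question, knowledge_base)))
```

For the returns question, only the returns-policy entry shares any words with the query, so it is the sole document placed in the context. Instructing the model to admit when the context lacks an answer is a common way to discourage it from filling gaps with invented details.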

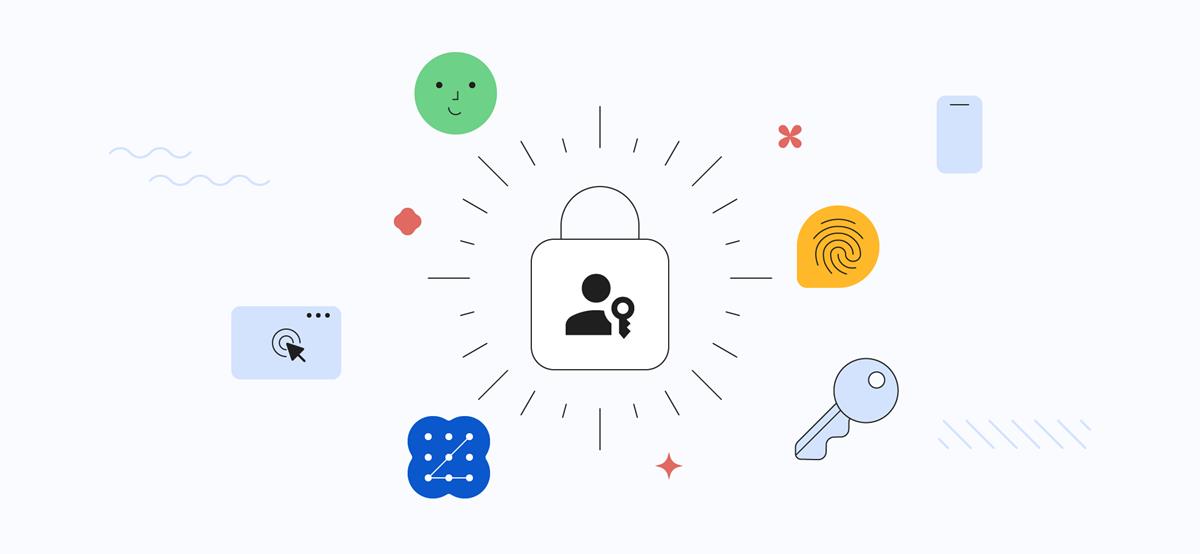

Implementing Guardrails for Responsible AI

To navigate the growing power of Agentic AI, businesses must implement ethical guardrails. These include clear guidelines, transparent development practices, and systems for continuous monitoring. Human-in-the-loop systems can ensure that AI remains accountable by allowing for human intervention when necessary. Additionally, businesses should work with regulators to develop standards for AI use that prioritize safety, transparency, and accountability.
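A human-in-the-loop system can start as a simple rule that decides which agent actions proceed automatically and which wait for a person. In the Python sketch below, actions flagged as high-impact, or whose confidence score falls below a threshold, go to a review queue. The class names, the 0.8 threshold and the assumption that a usable confidence score is available are all illustrative; in practice, producing a trustworthy confidence score is itself a hard problem.

```python
from dataclasses import dataclass, field


@dataclass
class AgentAction:
    description: str
    confidence: float  # assumed score in [0, 1]; how it is produced is out of scope here
    high_impact: bool  # e.g. issuing a refund or changing customer account data


@dataclass
class ReviewGate:
    threshold: float = 0.8  # illustrative cut-off, to be tuned from monitoring data
    review_queue: list[AgentAction] = field(default_factory=list)

    def route(self, action: AgentAction) -> str:
        """Auto-approve only confident, low-impact actions; queue the rest for a person."""
        if action.high_impact or action.confidence < self.threshold:
            self.review_queue.append(action)
            return "human_review"
        return "auto_approved"


if __name__ == "__main__":
    gate = ReviewGate()
    print(gate.route(AgentAction("Answer an FAQ about opening hours", 0.95, False)))
    print(gate.route(AgentAction("Issue a $500 refund", 0.99, True)))
    print(gate.route(AgentAction("Summarize a contract clause", 0.40, False)))
    print(len(gate.review_queue), "actions awaiting human review")
```

Sending every high-impact action to a person, regardless of confidence, reflects the idea that accountability matters most where mistakes are costly; the threshold for everything else can be adjusted as monitoring data accumulates.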

In this new era of intelligent automation, AI can be a powerful ally, but only if we establish the right safeguards to guide its development and use. The future of work is at the intersection of technology and humanity, and the responsible use of Agentic AI will determine whether we realize its full potential or encounter unforeseen risks.


This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro
