Understand and Code DeepSeek V3

DeepSeek V3 is a cutting-edge large language model. It leverages sophisticated techniques like a unique Multi-Head Latent Attention mechanism and a Mixture of Experts architecture for enhanced efficiency and capability. Understanding this model provides valuable insights into the latest advancements shaping the future of artificial intelligence.

We've just launched a new in-depth course on the freeCodeCamp.org YouTube channel that will teach you to understand and code DeepSeek V3 from scratch. Taught by Vuk Rosić of Beam.AI, this comprehensive course dives into one of the latest advancements in large language models.

DeepSeek V3 has quickly gained attention, positioned as a top-performing non-reasoning model. This course offers a unique opportunity to truly understand its inner workings.

What You'll Learn

This isn't just a high-level overview. The course aims to equip you with a thorough understanding of both the underlying research paper and the practical coding implementation. You'll explore the core components that make DeepSeek V3 unique, including:

Multi-Head Latent Attention (MLA): Learn about this novel attention mechanism contributed by the DeepSeek team. Vuk breaks down the formulas and concepts, starting from basic attention principles.

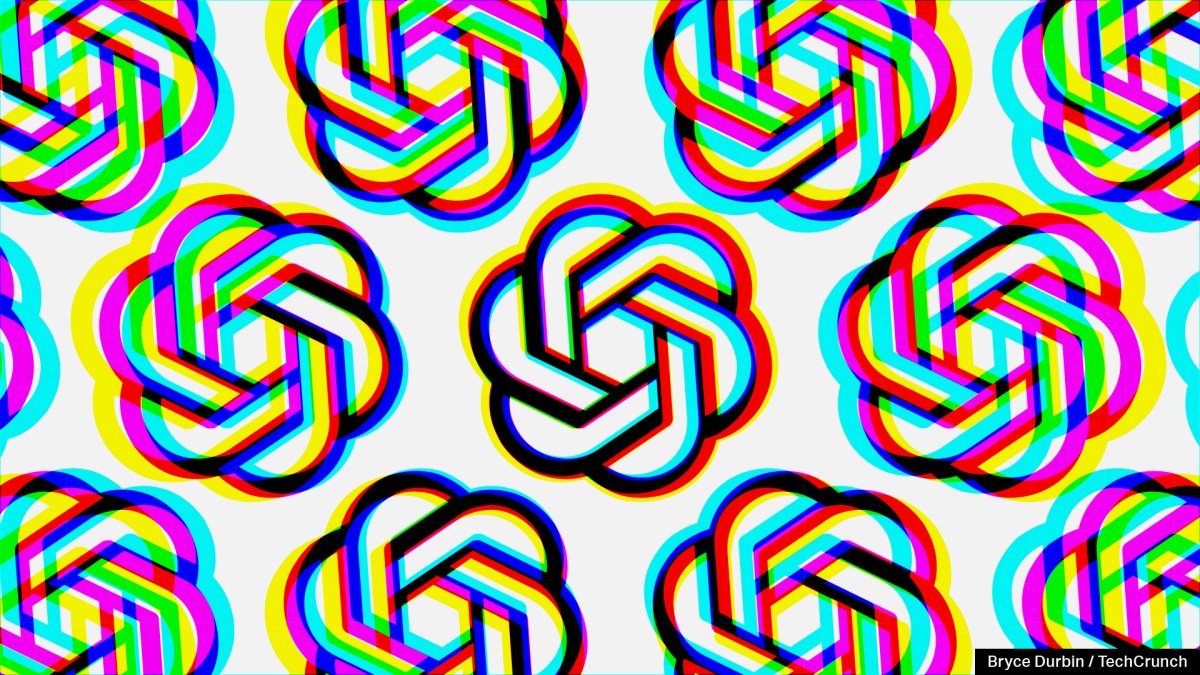

Query, Key, Value (QKV): Gain a fundamental understanding of the QKV mechanism. The course explains how token embeddings are transformed into query, key, and value vectors, how similarity is calculated using dot products, the importance of masking future tokens during training, and how softmax is applied to get attention weights.

Mixture of Experts (MoE): Understand the MoE architecture, including concepts like gating mechanisms and the role of individual expert multi-layer perceptrons.

Advanced Concepts: Learn about rotary positional embeddings (RoPE) and techniques for parallelizing matrix multiplications across GPUs for efficient computation.
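To make the QKV steps listed above concrete, here is a minimal sketch of single-head causal self-attention in plain Python. It is not taken from the course's code files. The function names (`matvec`, `softmax`, `causal_self_attention`), the toy embeddings, and the identity projection matrices are all assumptions chosen for illustration. The division by the square root of the key dimension is the standard scaled dot-product convention, which the list above does not mention.

```python
import math

def matvec(x, w):
    """Multiply a row vector x (length d_in) by a matrix w (d_in x d_out)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(embeddings, w_q, w_k, w_v):
    """Single-head causal attention over a list of token embeddings.

    Returns (outputs, weights), where weights[t][s] is how much
    token t attends to token s.
    """
    # Project each token embedding into query, key, and value vectors.
    queries = [matvec(x, w_q) for x in embeddings]
    keys = [matvec(x, w_k) for x in embeddings]
    values = [matvec(x, w_v) for x in embeddings]
    d_k = len(keys[0])
    n = len(embeddings)

    outputs, weights = [], []
    for t, q in enumerate(queries):
        # Causal mask: token t only scores positions 0..t,
        # so every future token ends up with weight 0.
        scores = [
            sum(a * b for a, b in zip(q, keys[s])) / math.sqrt(d_k)
            for s in range(t + 1)
        ]
        w = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w[s] * values[s][j] for s in range(t + 1))
            for j in range(len(values[0]))
        ]
        weights.append(w + [0.0] * (n - t - 1))
        outputs.append(out)
    return outputs, weights

# Toy input: three 2-dimensional "token embeddings" and identity projections
# (hypothetical values, chosen only to keep the arithmetic easy to follow).
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = causal_self_attention(embeddings, identity, identity, identity)

for row in weights:
    print([round(v, 3) for v in row])
```

Each printed row is one token's attention weights. The first token can only attend to itself, and entries to the right of the diagonal stay at zero because of the causal mask. A real implementation would use batched matrix multiplications on tensors instead of Python loops.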

From Theory to Code

Throughout the course, Vuk emphasizes not just what these components do, but how they work, encouraging viewers to follow along, take notes, and even try explaining the concepts themselves. You'll see how the theoretical concepts translate directly into code, aiming for a complete understanding of the provided code files by the end.

If you're ready to deepen your understanding of state-of-the-art language models and gain hands-on experience, this course is for you.

Head over to the freeCodeCamp.org YouTube channel now to watch the full course (4-hour watch).
