typia (20,000x faster validator) takes on Agentic AI frameworks with its compiler skills

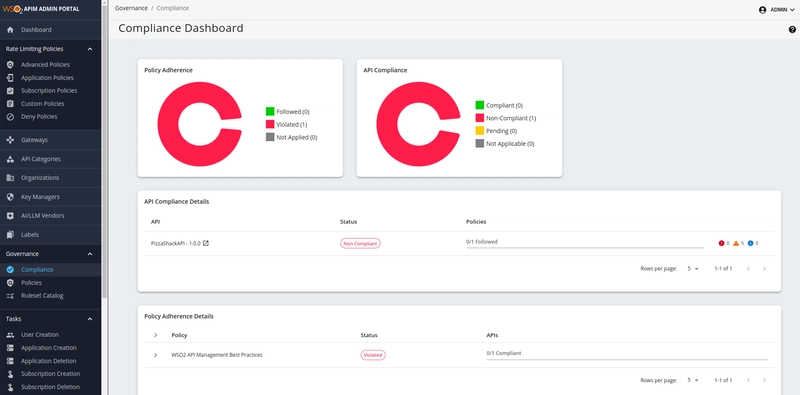


1. Preface

typia takes on Agentic AI with its compiler skills.

The challenge comes with a new open source framework, @agentica.

It specializes in LLM Function Calling and does everything through it. Just list the functions to call, and you can build an Agentic AI too. If you're a TypeScript developer, you are an AI developer now. Let's become AI developers of the new era.

- @agentica: https://wrtnlabs.io/agentica
- typia: https://typia.io

```typescript
import { Agentica } from "@agentica/core";
import { HttpLlm } from "@samchon/openapi";
import typia from "typia";

const agent = new Agentica({
  controllers: [
    HttpLlm.application({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then(r => r.json()),
    }),
    typia.llm.application<ShoppingCounselor, "chatgpt">(),
    typia.llm.application<ShoppingPolicy, "chatgpt">(),
    typia.llm.application<ShoppingSearchRag, "chatgpt">(),
  ],
});
await agent.conversate("I wanna buy MacBook Pro");
```

2. Outline

2.1. Transformer Library

```typescript
//----
// src/checkString.ts
//----
import typia, { tags } from "typia";

export const checkString = typia.createIs<string>();

//----
// bin/checkString.js
//----
import typia from "typia";

export const checkString = (() => {
  return (input) => "string" === typeof input;
})();
```

typia is a transformer library that converts a TypeScript type into a runtime function.

When you call a typia function, it is compiled as shown above: the call in src/checkString.ts becomes the plain function in bin/checkString.js. This is the key concept of typia: transforming a TypeScript type into a runtime function. A call to typia.is<T>() is replaced at compile time with a dedicated type checker generated by analyzing the target type T.

This feature lets developers ensure type safety in their applications, leveraging TypeScript’s static typing while also providing runtime validation. Instead of defining an additional hand-made schema, you can simply use the pure TypeScript type itself.

Also, because the validation (or serialization) logic is generated by a compiler that analyzes the TypeScript source code, it is accurate, and in the benchmark below it runs faster than the competing libraries.

Measured on AMD Ryzen 9 7940HS, ROG Flow X13

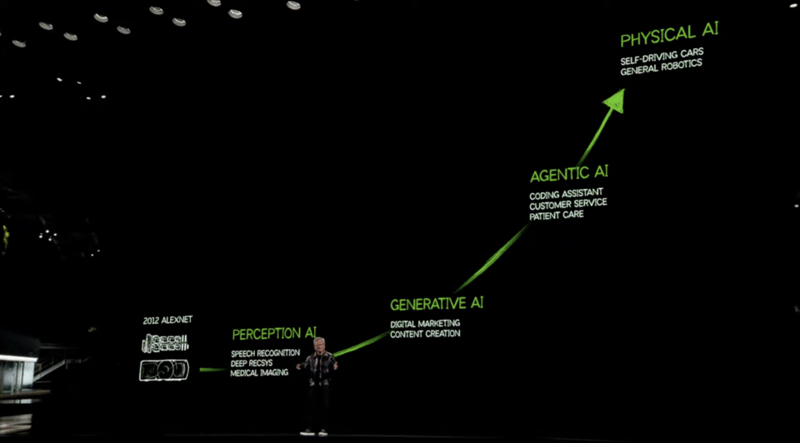
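
To make the transformation concrete for a slightly richer type, here is a hand-written sketch of the kind of checker such a compiler could emit for an object type. The IMember interface and the isMember function are hypothetical names introduced for illustration, and the body is written by hand here; the code typia actually generates will differ in detail.

```typescript
// Hypothetical type, used only for this illustration.
interface IMember {
  id: string;
  age: number;
  email?: string;
}

// Hand-written stand-in for what a call like typia.createIs<IMember>()
// could be compiled into: plain property checks, no schema object at runtime.
const isMember = (input: unknown): input is IMember => {
  if (typeof input !== "object" || input === null) return false;
  const obj = input as Record<string, unknown>;
  return (
    typeof obj.id === "string" &&
    typeof obj.age === "number" &&
    (obj.email === undefined || typeof obj.email === "string")
  );
};

console.log(isMember({ id: "a1", age: 30 })); // true
console.log(isMember({ id: "a1", age: "30" })); // false
```

The point of the sketch is that the checks are ordinary inlined comparisons, which is one plausible reason generated validators can be fast.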

2.2. Challenge to Agentic AI

Jensen Huang's graph, and his advocacy: https://youtu.be/R0Erk6J8o70

With its TypeScript compilation skills, typia takes on Agentic AI frameworks.

The new framework is named @agentica. It specializes in LLM Function Calling and does everything through function calling. The function calling schema comes from the typia.llm.application function.

Here is a demonstration of a shopping mall chatbot, built from the Swagger/OpenAPI document of an enterprise-level shopping mall backend consisting of 289 API functions. As you can see in the video below, everything works fine. Amazingly, the shopping chatbot achieves Agentic AI with just a small model (gpt-4o-mini, said to be around 8b parameters).

This is the new Agentic AI era opened by typia with its compiler skills. Just by listing the functions to call, you can achieve the Agentic AI advocated by Jensen Huang. If you're a TypeScript developer, you are now an AI developer.

```typescript
import { Agentica } from "@agentica/core";
import { HttpLlm } from "@samchon/openapi";
import typia from "typia";

const agent = new Agentica({
  controllers: [
    HttpLlm.application({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then(r => r.json()),
    }),
    typia.llm.application<ShoppingCounselor, "chatgpt">(),
    typia.llm.application<ShoppingPolicy, "chatgpt">(),
    typia.llm.application<ShoppingSearchRag, "chatgpt">(),
  ],
});
await agent.conversate("I wanna buy MacBook Pro");
```

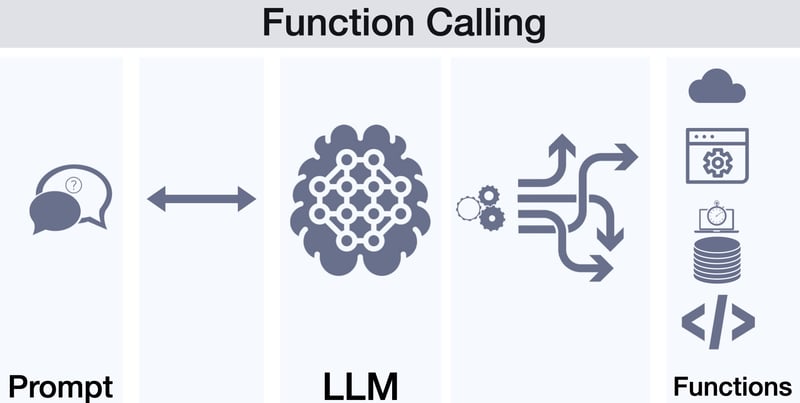

2.3. LLM Function Calling

https://platform.openai.com/docs/guides/function-calling

LLM (Large Language Model) Function Calling means that the AI selects the proper function to call and fills in its arguments by analyzing the conversation context with the user.

typia and @agentica concentrate and specialize on this concept, using function calling to achieve Agentic AI. Judging by its definition alone, LLM function calling is such a cool concept that you wonder why it isn't used more widely. Wouldn't it be possible to achieve Agentic AI simply by listing the functions needed at the moment?

In this document, we will learn why function calling has not been generally adopted, and see how typia and @agentica make it viable for general-purpose use.
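
As a rough sketch of the mechanism described above: the application hands the model a list of function descriptions, the model answers with a function name and JSON arguments, and the application executes the call. Everything below is hypothetical and self-contained: the IFunctionSchema shape is a simplified stand-in (not the OpenAI or @agentica types), and modelReply is a hard-coded value standing in for a real model response.

```typescript
// Simplified, hypothetical description of a callable function.
interface IFunctionSchema {
  name: string;
  description: string;
  parameters: Record<string, "string" | "number">;
}

// The functions the application is willing to expose to the model.
const functions: Record<string, (args: any) => unknown> = {
  add: (args: { x: number; y: number }) => args.x + args.y,
  greet: (args: { name: string }) => `Hello, ${args.name}!`,
};

// What would be sent to the model along with the conversation.
const schemas: IFunctionSchema[] = [
  {
    name: "add",
    description: "Add two numbers.",
    parameters: { x: "number", y: "number" },
  },
  {
    name: "greet",
    description: "Greet a person by name.",
    parameters: { name: "string" },
  },
];

// Execute the function the model selected, after checking the argument types.
const dispatch = (reply: { name: string; arguments: string }): unknown => {
  const schema = schemas.find((s) => s.name === reply.name);
  if (schema === undefined) throw new Error(`Unknown function: ${reply.name}`);
  const args = JSON.parse(reply.arguments) as Record<string, unknown>;
  for (const [key, type] of Object.entries(schema.parameters))
    if (typeof args[key] !== type) throw new Error(`Invalid argument: ${key}`);
  return functions[reply.name](args);
};

// Hard-coded stand-in for a model reply to "What is 2 plus 3?".
const modelReply = { name: "add", arguments: '{"x":2,"y":3}' };
console.log(dispatch(modelReply)); // 5
```

Note that the arguments are validated before the call: the model produces them as text, so nothing guarantees they match the declared types.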

3. Concepts

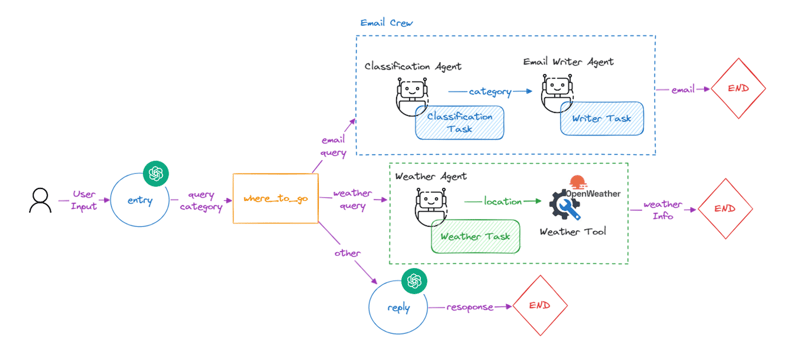

3.1. Traditional AI Development

In traditional AI development, AI developers have concentrated on agent workflows composed of multiple graph nodes. They have focused on developing special-purpose AI agents instead of building general-purpose ones.

However, the agent workflow has critical weak points: scalability and flexibility. Whenever an agent's functionality is expanded, AI developers have to build a more complex agent workflow with more and more graph nodes.

Furthermore, increasing the number of agent graph nodes decreases the success rate, because the success rates of the individual nodes multiply together (the Cartesian Product). For example, if the success rate of each node is 80 % and five nodes run sequentially, the success rate of the whole agent workflow becomes 32.77 % (0.8^5).
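
The arithmetic behind this example can be checked with a few lines of code. This sketch assumes every node succeeds independently with the same probability; workflowSuccessRate is a hypothetical helper name.

```typescript
// Success rate of a sequential workflow in which every node must succeed,
// assuming the nodes are independent and share the same success rate.
const workflowSuccessRate = (perNode: number, nodes: number): number =>
  Math.pow(perNode, nodes);

// Print how the overall rate shrinks as nodes are added.
for (const n of [1, 3, 5, 10]) {
  const rate = workflowSuccessRate(0.8, n);
  console.log(`${n} nodes: ${(rate * 100).toFixed(2)} %`);
}
```

With five nodes at 80 % each, this gives 0.8^5 = 0.32768, the 32.77 % quoted in the text, and the rate keeps falling as more nodes are chained.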

To hedge against the Cartesian Product disaster, AI developers need to build a supervisor workflow and attach it to the main workflow's nodes as an add-on. If the functionality is expanded again, a fractal of workflows is required. To avoid the Cartesian Product disaster, AI developers must face another one: the Fractal disaster.

With such agent workflows, would it be possible to make an agent like the shopping chatbot? Is it possible to make an enterprise-level chatbot? This is why only special-purpose chatbots or toy-project chatbots are floating around in the world.

The problem arises from the fact that an agent workflow is very difficult to create and has extremely low scalability and flexibility.

3.2. Document Driven Development

Document each function independently.

To escape the Cartesian Product and Fractal disasters of agent workflows, I suggest a new approach: "Document Driven Development". It is similar to the "Domain Driven Design" methodology, which separates a complicated project into small domains so that development becomes easier and more scalable. The only difference is that documentation comments are additionally required for each separated unit.

Write documentation comments for each function independently, describing the purpose of the function to the AI. Trust @agentica and LLM function calling, and leave every other responsibility to them. Just by writing documentation comments independently for each function, you can make your agent scalable, flexible, and mass-producible.

If there's a relationship between some functions, do not build an agent workflow; just write it down in the description comments too. Here is a list of well-documented functions and schemas.

- Functions

- DTO schemas

```typescript
import { tags } from "typia";

// Assumed location of the DTO types; adjust to your project layout.
import { IBbsArticle } from "./IBbsArticle";

// Method bodies are omitted here, so the class is written as a declaration.
export declare class BbsArticleService {
  /**
   * Get all articles.
   *
   * Lists every article archived in the BBS DB.
   *
   * @returns List of every article
   */
  public index(): IBbsArticle[];

  /**
   * Create a new article.
   *
   * Writes a new article and archives it into the DB.
   *
   * @param props Properties of create function
   * @returns Newly created article
   */
  public create(props: {
    /**
     * Information of the article to create
     */
    input: IBbsArticle.ICreate;
  }): IBbsArticle;

  /**
   * Update an article.
   *
   * Updates an article with new content.
   *
   * @param props Properties of update function
   */
  public update(props: {
    /**
     * Target article's {@link IBbsArticle.id}.
     */
    id: string & tags.Format<"uuid">;

    /**
     * New content to update.
     */
    input: IBbsArticle.IUpdate;
  }): void;

  /**
   * Erase an article.
   *
   * Erases an article from the DB.
   *
   * @param props Properties of erase function
   */
  public erase(props: {
    /**
     * Target article's {@link IBbsArticle.id}.
     */
    id: string & tags.Format<"uuid">;
  }): void;
}
```
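
As a small illustration of describing a relationship in a comment rather than in a workflow, the sketch below documents that one function depends on the result of another. ShoppingCartService, its methods, and the in-memory data are all hypothetical and are not taken from @agentica or the shopping backend.

```typescript
interface IProduct {
  id: string;
  name: string;
  price: number;
}

class ShoppingCartService {
  private readonly products: IProduct[] = [
    { id: "p-1", name: "MacBook Pro", price: 1999 },
    { id: "p-2", name: "Magic Mouse", price: 79 },
  ];
  private readonly cart: IProduct[] = [];

  /**
   * Search products by keyword.
   *
   * Call this function first whenever the user mentions a product by name,
   * because {@link addToCart} requires an {@link IProduct.id}.
   *
   * @param props Properties of search function
   * @returns Products whose name contains the keyword
   */
  public search(props: { keyword: string }): IProduct[] {
    const keyword = props.keyword.toLowerCase();
    return this.products.filter((p) => p.name.toLowerCase().includes(keyword));
  }

  /**
   * Add a product to the cart.
   *
   * The `id` must come from a previous {@link search} result. Never guess it.
   *
   * @param props Properties of addToCart function
   * @returns Number of products in the cart after the addition
   */
  public addToCart(props: { id: string }): number {
    const product = this.products.find((p) => p.id === props.id);
    if (product === undefined) throw new Error(`Unknown product: ${props.id}`);
    this.cart.push(product);
    return this.cart.length;
  }
}
```

The ordering constraint (search before addToCart) lives only in the comments; no workflow node encodes it, so adding a third function would not require touching the first two.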

3.3. Compiler Driven Development

LLM function calling schemas must be written by the compiler.

@agentica is an Agentic AI framework that specializes in LLM Function Calling and does everything through it. So one of the most important questions is how to build the LLM schema safely and efficiently.

In traditional AI development, AI developers have written LLM function calling schemas by hand. This is typical duplicated code, and a dangerous approach to entity definition.

When a hand-made schema definition contains a mistake, a human reader can intuitively work around it. An AI, however, never forgives such a mistake: an invalid hand-made schema definition breaks the entire agent system.

So if building the LLM schema were that difficult and annoying, @agentica would be a difficult and annoying framework. And if building the LLM schema were that dangerous, @agentica would be a dangerous framework too. That is why the schema is generated by the compiler instead: typia.llm.application builds it directly from the TypeScript class type.

```typescript
import { ILlmApplication } from "@samchon/openapi";
import typia from "typia";

import { BbsArticleService } from "./BbsArticleService";

const app: ILlmApplication<"chatgpt"> = typia.llm.application<
  BbsArticleService,
  "chatgpt"
>();
console.log(app);
```
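
For contrast, this is roughly what the hand-made alternative looks like, and how easily it drifts. The schema below is a hypothetical hand-written description of an article-creation function, with a deliberately misspelled entry in required; IHandMadeSchema and findMissingRequired are illustrative names, not part of typia or @agentica.

```typescript
// Simplified, hypothetical shape of a hand-written function calling schema.
interface IHandMadeSchema {
  name: string;
  description: string;
  parameters: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required: string[];
  };
}

// Written by hand, duplicating what the TypeScript type already says.
const createArticle: IHandMadeSchema = {
  name: "create",
  description: "Create a new article.",
  parameters: {
    type: "object",
    properties: {
      title: { type: "string", description: "Title of the article" },
      body: { type: "string", description: "Content of the article" },
    },
    required: ["titel", "body"], // typo: the type checker accepts any string here
  },
};

// Lists required names that do not exist in properties.
const findMissingRequired = (schema: IHandMadeSchema): string[] =>
  schema.parameters.required.filter(
    (key) => !(key in schema.parameters.properties),
  );

console.log(findMissingRequired(createArticle)); // [ 'titel' ]
```

A schema derived from the type avoids this class of mismatch, since the property names then come from a single source instead of being typed twice.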
