AI makes us humans unlearn how to think – and we mistake it for progress


Are We Unlearning How to Think?

Artificial intelligence is regarded as a breakthrough — a »milestone« that makes everyday life easier, boosts our productivity, and opens up new horizons. Euphoria is everywhere: the media celebrate »smart assistants«, corporations promise »life-hacks for every situation«, and advertising presents AI as an extension of our own thinking. Yet amid all the fascination with what is technically possible, many overlook something that is changing quietly and radically at the same time: the way we ourselves think — and how often we still actually do it.

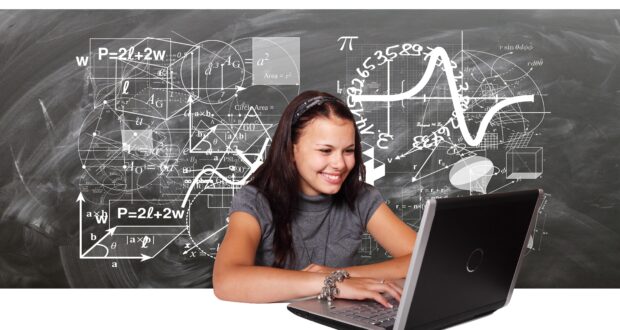

What is happening here is not mere unease or a vague worry about some distant future. It is a development that can already be observed in the present. More and more people, especially young people, hand over their thinking before they have even had a chance to practise it. Questions that used to be solved with a glance at a book, by trying things out, or by plain common sense are now reflexively delegated to an AI. Not because it is necessary, but because it is more convenient.

We are at the beginning of a cultural shift: thinking is no longer regarded as a matter of course but as an option — one that people increasingly spare themselves. And that is where the danger lies. Anyone who stops thinking forgets not only problem-solving but also questioning, weighing up, remembering — in short: the mental depth of being human.

Note

For context: this text is not directed at any particular generation, nor is it an expression of technophobia. It describes a development I observe daily, both as the father of two children and as a lecturer in IT security. This is precisely why I take such a critical view of today’s use of AI. This contribution is an opinion piece reflecting my personal view, not a scientific analysis. I welcome factual discussion, which I consider urgently necessary to foster a responsible approach to the topic.

From Tool to Steward of Thinking

Until quite recently, artificial intelligence was merely a tool: a practical aid that took on clearly defined tasks such as correcting texts or sorting information. A digital add-on to human thought, not its replacement. But that role has shifted quietly yet fundamentally. Today AI not only performs operational tasks; it increasingly makes cognitive decisions. Which phrasing sounds confident? Which tone seems empathetic? Which line of argument convinces in a conflict? It is precisely against this backdrop that I view our current use of AI critically.

Such decisions of expression and tone used to be a matter of experience, reflection, and practice. Today they are a menu item. Whether a shopping choice, a text snippet, or a relationship tip — the AI supplies the suggestion, often without the user being involved in the process at all. The phrasing is adopted without question. The thought is delivered at the press of a button instead of being developed.

What begins as relief can turn into dependence. Reaching for the AI becomes the first reaction — not the last resort. That is the core of the problem: we no longer delegate just work but also thought processes. Not out of necessity, but out of convenience. The result is not sudden intellectual impoverishment but a gradual weaning from our own judgement. The ability to orient ourselves linguistically, cognitively, and emotionally is increasingly replaced by automated suggestions — and therefore scarcely practised, let alone passed on.

The AI steward of thought is always available and never tires. But anyone who allows themselves to be permanently represented will eventually lose the feel for their own voice. Thinking does not wither suddenly — it evaporates, layer by layer, unnoticed.

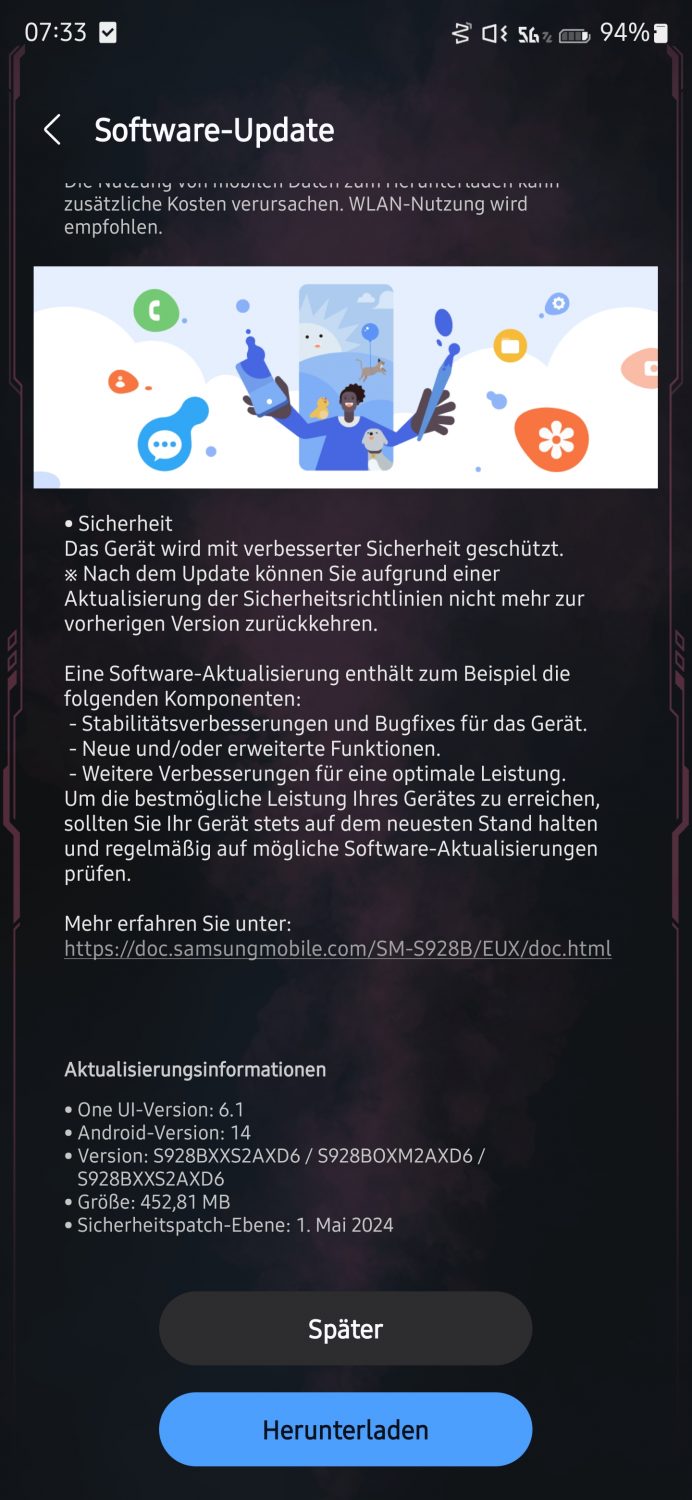

Example: Samsung’s »AI Lifehacks« for Generation Z

A particularly striking example is Samsung’s current campaign. The Galaxy S25 Ultra is presented as an everyday solution for »small and large mishaps« — with Google Gemini as the built-in problem-solver. The AI writes cover letters, plans trips, explains recipes, helps with excuses. The idea: you no longer have to think for yourself if the AI can do it for you.

One spot from the campaign shows a young woman asking, »How do you actually wash a sequin dress properly?« Instead of looking at the care label or searching online for tried-and-tested tips, she turns straight to the AI on her smartphone.

What is rarely addressed: those who become accustomed early on to handing over complex — or even trivial — decisions forget how to make them at all. If even simple tasks such as washing a garment are delegated to an AI, there is a danger that basic everyday skills will be lost. The ability to analyse and solve problems independently is undermined by the constant availability of AI solutions.

Lazy Thinking in Everyday Life — a Quiet Cultural Shift

Today, AI systems are fed questions that, in earlier times, warranted little more than a quick glance at a manual. Instead of thinking — or reading — many immediately type: »How do I do X?«, »How do I solve this problem?«, »Which decision should I make?«

Even the most trivial tasks are delegated to machines: How do I sort my waste correctly? Which colour goes with my jumper? How do I apologise to my girlfriend? This development is not harmless — it signals a creeping erosion of common sense.

Of course, it is more convenient to ask an AI than to read instructions. But that convenience comes at a price: we grow numb to detail, lose the ability to find our bearings without outside help, and become accustomed to a world in which every uncertainty is immediately »resolved« externally.

Convenience Is Not Education

This development has nothing to do with lack of intelligence — rather, it reflects a profound shift in cultural self-conception. Thinking is no longer understood as everyday practice but as something that, in case of doubt, can be delegated — to a system that is faster, runs more smoothly, and rarely puts up resistance.

Yet genuine education does not arise from frictionless processes. It thrives on grappling with material, on enduring uncertainty, on understanding rather than merely exploiting. It is not the perfect answer on the first attempt, but the road there — full of detours, dead ends, and repetitions.

»AI lifehacks« offer functionality, not education. They solve problems before one has even engaged with them. They deliver results — but not the process needed to learn to think independently. This is not only an impoverishment of experience but a recoding of what counts as »competence«.

Convenience increasingly replaces the will to engage. And those who have never learned to truly grapple with a topic will not realise what they are missing. The automation of thinking does not create emptiness but a quiet disinterest in depth — and that is the very opposite of education.

Between Relief and Disempowerment

Of course, AI can be a meaningful aid where real barriers exist — disabilities, language problems, overwhelm. But if every hesitation, every uncertainty is immediately treated as a »malfunction« to be eliminated by smart technology, one loses confidence in one’s own judgement.

Children and adolescents who grow up assuming they can ask an algorithm about every little thing will not learn how to develop answers themselves. They will learn how to delegate questions — and with each delegation give up a piece of thinking competence.

»AI Is Just Like Google, a Calculator or Sat-Nav«

Update 27.05.2025

Since my article prompted repeated comparisons such as »AI is just like Google, a calculator or a sat-nav«, I have added the following sub-chapter to explain why such comparisons are, in my view, misleading.

A calculator does not make decisions for you. It processes numbers, not language, contexts, or values. It does exactly what you feed into it, and nothing more. The same input yields the same output everywhere. No interpretation, no weighting, no »suggestions«, no autonomous phrasing. AI, by contrast, processes language, makes (weighted) decisions, and generates content, often in ways that remain opaque. Neither the process nor the result can be readily verified. What would be an error in a calculator is euphemistically called a »hallucination« in AI.

Google Search shows you links. You must select, filter, read, compare, evaluate. It conveys knowledge — it does not think for you. An AI such as ChatGPT or Gemini, however, formulates the answer outright. It replaces thinking about sources with a smooth, finished response. You receive the result — not the path to it. The cognitive process is therefore not merely supported but bypassed.

Still less apt is the comparison with navigation systems like TomTom. They guide you from A to B — nothing more. They neither influence your destination nor formulate reasons why you want to go there. They navigate space, not thought. An AI, by contrast, not only accompanies but shapes; it intervenes in linguistic, argumentative, and emotional decisions. Not »directions«, but »interpretation of the world«.

Technologies such as the calculator or a search engine support cognitive processes. AI, however, begins to replace them. The comparison is therefore not merely wrong; it dangerously trivialises the issue. The same goes for other analogies: anyone who equates AI with Wikipedia ignores that Wikipedia provides content, whereas AI invents, formulates, and weights it, seemingly neutral but without sources, transparency, or responsibility. Such comparisons are not only inaccurate; they distort the debate and obscure what is really at stake: the gradual outsourcing of intellectual autonomy.

Conclusion: If You Don’t Think, You’ll Be Thought For

AI can be useful. It can support, structure, relieve. But it must not replace what makes us human at the core: the ability to think, to judge, to question for ourselves. Not every question calls for an algorithm; not every problem demands a technical solution. Sometimes a brief moment of reflection will suffice — or the courage to form an opinion at all.

Yet that courage is growing quieter. Those who get used too early to handing over responsibility (for decisions, for language, for thoughts) eventually lose the ability even to recognise it. AI is then no longer used as a tool but as a proxy: for knowledge, for experience, for judgement. And what is meant to relieve us begins to replace us.

The formula sounds harmless: efficiency, comfort, automation. Yet it entails a dangerous inversion. If you no longer think for yourself, you will be thought for — by systems whose workings we barely understand and whose interests do not coincide with ours. Those who no longer ask for themselves receive prefabricated answers. Those who no longer seek their own words become linguistically dependent. And those who have never learned to cope with uncertainty will believe every statement — even a false one.

In the comfort zone of digital thinking, we unlearn what constitutes education, freedom, and maturity: active engagement with the world. It is not about progress or regress but about the price we are willing to pay for convenience. If we leave thinking to machines, all that remains of the human being is a user profile in an endless data landscape — and for many, this reduction is already under way today.
