The Future of Tech: Where Cloud Native Meets Artificial Intelligence

Imagine a world where machines think, adapt, and scale effortlessly, all while running on a system as flexible as the clouds above us. That’s the promise of Cloud Native Artificial Intelligence (CNAI), a fascinating fusion of two of today’s most transformative tech trends: Cloud Native computing and Artificial Intelligence (AI). A recent white paper from the CNCF AI Working Group, published on March 20, 2024, dives deep into this intersection, offering a roadmap for engineers, businesses, and even curious minds like ours to understand how these technologies are reshaping our digital landscape. Let’s break it down.

What’s Cloud Native, Anyway?

Think of Cloud Native as the backbone of modern apps. It’s a way of building software that thrives in the cloud—scalable, resilient, and ready to adapt. Picture a bustling city: instead of one giant skyscraper, Cloud Native is a network of smaller, modular buildings (microservices) connected by bridges (containers) and managed by a brilliant city planner (Kubernetes).

Since 2013, when Docker kicked off the container craze, this approach has exploded, helping companies deploy apps faster and more reliably across public, private, or hybrid clouds. The Cloud Native Computing Foundation (CNCF) defines it as tech that empowers us to “build and run scalable applications in modern, dynamic environments.” It’s less about rigid rules and more about flexibility—like a recipe you can tweak to suit your taste.

AI: From Sci-Fi to Everyday Life

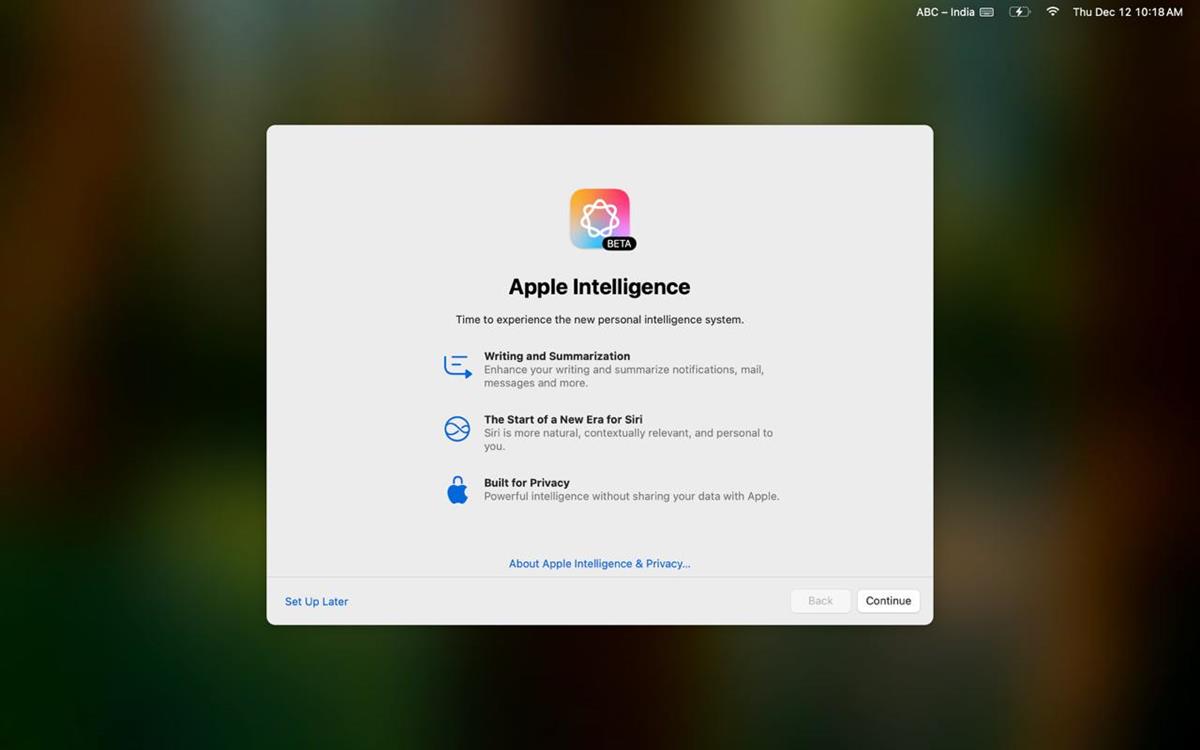

Now, let’s talk AI. The term was coined back in 1956, but only recently has AI become a household name. Remember when IBM’s Watson crushed it on Jeopardy!? Or how ChatGPT can help you figure out a plan? AI has evolved from clunky algorithms to systems that mimic human smarts: think speech recognition, image processing, or even predicting your next Netflix binge.

The real game-changers? Deep learning and neural networks, especially transformers (born in 2017 from the minds at Google and the University of Toronto), which power those massive Large Language Models (LLMs) we can’t stop talking about. AI now splits into two camps: discriminative (spotting patterns, like spam filters) and generative (creating new stuff, like art or stories). It’s like having a super-smart assistant who can both analyze your schedule and write you a poem.

When Cloud Meets AI: The Birth of CNAI

So, what happens when you marry Cloud Native’s scalability with AI’s brainpower? You get CNAI—a way to build, deploy, and scale AI systems using Cloud Native principles. It’s like giving AI a turbocharged engine.

Kubernetes, the go-to container orchestrator, does the heavy lifting: it handles compute (CPUs, GPUs), networking, and storage, so AI practitioners can focus on their magic without sweating the infrastructure. Companies like OpenAI (scaling Kubernetes to 7,500 nodes!) and Hugging Face (teaming up with Microsoft Azure) are already proving how this combo can supercharge AI workloads.
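To make "Kubernetes handles the GPUs" a little more concrete, here is a minimal sketch of what asking the cluster for a GPU can look like. It builds a Pod manifest as a plain Python dictionary and prints it as JSON. The pod name, the container name, and the image URL are made up for illustration, and the `nvidia.com/gpu` resource only exists on clusters that run NVIDIA's device plugin, so treat this as a sketch under those assumptions rather than a recipe from the white paper.

```python
import json


def gpu_training_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that asks the scheduler for GPUs.

    Assumes the cluster exposes GPUs under the extended resource name
    "nvidia.com/gpu" (the name used by NVIDIA's device plugin).
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # A training run should finish, not restart forever.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # hypothetical container name
                    "image": image,
                    # GPUs are requested as limits; Kubernetes then places
                    # the Pod on a node with that many GPUs available.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical name and image, used only for this example.
manifest = gpu_training_pod("demo-train", "example.com/demo/trainer:latest")
print(json.dumps(manifest, indent=2))
```

Saved to a file, the printed JSON could be handed to `kubectl apply -f`, and from there the scheduler, not the data scientist, decides which machine runs the job.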

Why does this matter? Because AI isn’t just a lab experiment anymore—it’s a cloud workload that’s growing fast. Whether it’s training a model to spot cat pics or generating a novel from a prompt, CNAI makes it repeatable, scalable, and cost-effective. The cloud’s elasticity lets startups and giants alike prototype quickly, tap into vast resources, and scale without breaking the bank. It’s like renting a spaceship instead of building one from scratch.

The Bumps in the Road

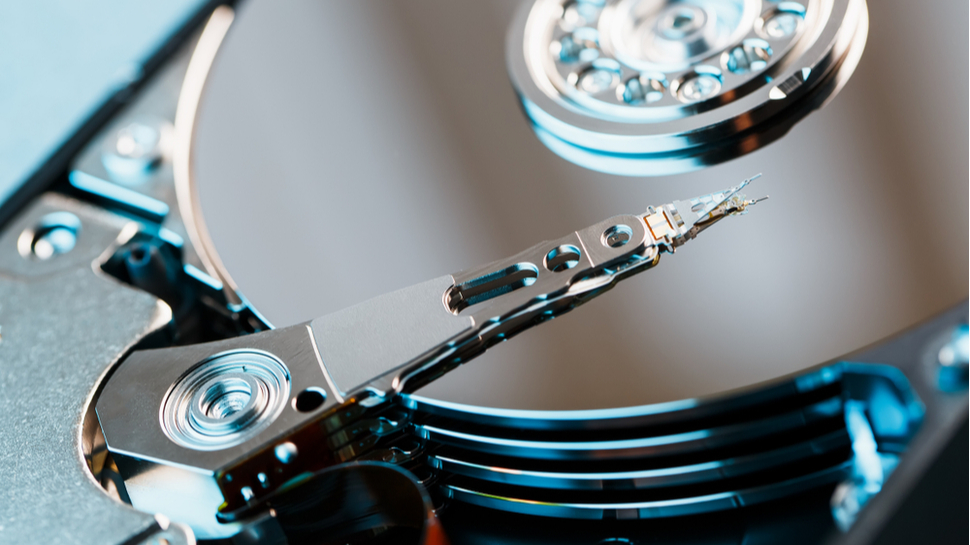

Of course, it’s not all smooth sailing. The white paper lays out some real challenges. Data prep is a beast: think massive datasets doubling every 18 months, needing to be cleaned, synced, and governed for privacy (hello, GDPR!). Training models, especially LLMs, demands crazy computing power. GPUs are hot commodities, and sharing them efficiently is still a work in progress. Serving those models? You’ve got latency issues and the need to juggle resources smartly. And then there’s the user experience: AI experts shouldn’t need a PhD in Kubernetes to be productive.

Then there’s the bigger stuff: sustainability (training an LLM can emit as much carbon as a cross-country flight), security (protecting sensitive data), and observability (keeping tabs on model performance). It’s a lot to wrestle with, but the CNCF crew sees these as opportunities, not roadblocks.

Solutions on the Horizon

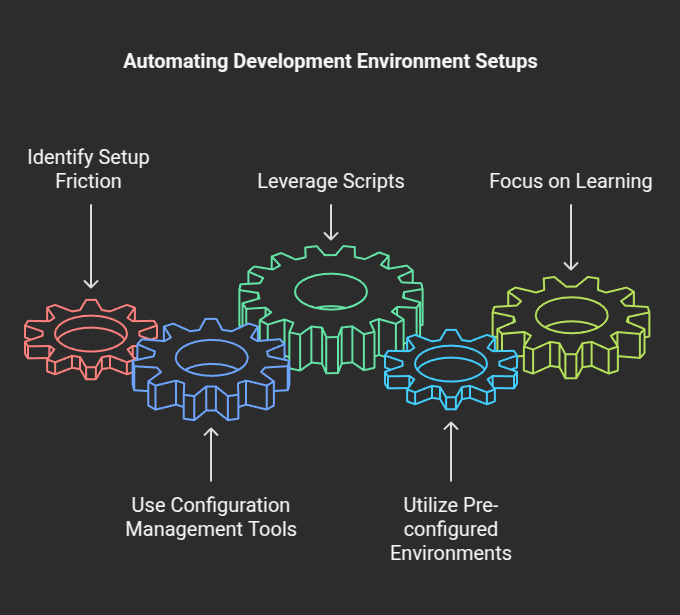

The good news? The tech world’s already rolling up its sleeves. Tools like Kubeflow are streamlining the AI pipeline, from data prep to model serving, using Kubernetes’ muscle. Vector databases (think Redis or Milvus) are boosting LLMs with extra context fetched at query time, making responses sharper. Projects like OpenLLMetry are bringing observability to AI, so we can peek under the hood of these black-box systems. And on the scheduling front, Kubernetes is evolving with tools like YuniKorn and Kueue to better manage those precious GPUs.
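How does a vector database give an LLM "extra context"? Roughly: documents are stored as vectors, the question is turned into a vector too, and the closest documents get pasted into the prompt. The sketch below shows that idea in plain Python. The three-number "embeddings" are hand-made toy values, the in-memory `STORE` list stands in for a real database such as Redis or Milvus, and the function names are hypothetical; a real setup would use an embedding model and the database's own client.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, store, k=2):
    """Return the texts of the k stored items most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Toy store: (text, embedding). The vectors are invented for illustration.
STORE = [
    ("Kueue queues batch jobs on Kubernetes.", [0.9, 0.1, 0.0]),
    ("DistilBERT is a smaller BERT variant.", [0.1, 0.9, 0.1]),
    ("KServe serves models on Kubernetes.", [0.7, 0.2, 0.3]),
]

# Pretend this is the embedding of the user's question.
query_vec = [1.0, 0.0, 0.1]
context = top_k(query_vec, STORE, k=2)

# The retrieved snippets are prepended to the prompt sent to the LLM.
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: How do I schedule GPU jobs?"
print(prompt)
```

The model never has to memorize these facts; it just reads whatever the retrieval step hands it, which is why fresher or more specific data tends to mean sharper answers.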

Sustainability’s getting love too: think smaller, efficient models like DistilBERT or smarter autoscaling with KServe. Plus, there’s a push for transparency: imagine model “nutrition labels” showing their carbon footprint. Education’s another frontier. Why not teach kids the basics of AI and Cloud Native early, so they’re ready for this future?
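Autoscaling sounds like magic, but the core arithmetic is simple. As one illustration, the rule documented for the Kubernetes Horizontal Pod Autoscaler scales the replica count in proportion to how far a metric is from its target. The sketch below applies that rule; it is not KServe's own autoscaling logic, and the floor of one replica and the example numbers are assumptions for the demo.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Proportional scaling rule: ceil(current_replicas * current / target).

    The minimum of 1 replica is an assumption made for this sketch.
    """
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


# Two model servers at 90% utilization, with a 60% target: scale out.
print(desired_replicas(2, 90, 60))
# Four model servers at 30% utilization, same target: scale in.
print(desired_replicas(4, 30, 60))
```

Scaling in when traffic drops is where the sustainability win comes from: idle GPUs burn power (and budget) without serving anyone.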

AI Giving Back to Cloud Native

Here’s the cool twist: AI isn’t just riding Cloud Native’s coattails—it’s giving back. Imagine an LLM chatting with you to manage a Kubernetes cluster, spotting security threats, or predicting when to scale resources.

At a 2023 event in Chicago, engineers demoed a natural language interface for Kubernetes—think “Hey, launch a chaos test” and it just happens. AI’s also digging into logs, boosting orchestration, and making cloud systems smarter. It’s a two-way street, and we’re just scratching the surface.

Where We’re Headed

The white paper wraps up with a call to action: embrace this synergy. For businesses, it’s a chance to innovate—automate tasks, analyze mountains of data, or personalize everything. For developers, it’s about simpler tools and a smoother ride. For society, it’s about trust, safety, and sustainability—building AI that’s responsible, not reckless. New roles like “MLDevOps Engineer” might even pop up, bridging data science and cloud ops.

This isn’t just tech jargon—it’s the future unfolding. Cloud Native and AI are like peanut butter and jelly: great alone, unstoppable together. As the CNCF AI Working Group puts it, the possibilities are endless. So, whether you’re a coder, a dreamer, or just someone who loves a good chatbot, keep an eye on CNAI—it’s about to change the game.
