We should still teach coding

Software written using generative AI is all over the web. Performance and security issues abound. Open source projects are being overwhelmed by bot traffic. There's a lot of harm being caused, but as an educator who cares about lowering barriers to software creation, I can't ignore the democratizing potential of these tools either.

In this post I’d like to explore the opportunities for learning that this paradigm shift presents – from how to make your projects more efficient and secure, to learning about the intellectual property and labor exploitation that might have gone into training the models you used.

Understanding incentives

One thing I have learned working in education is the value of accepting people’s motivations – having a goal that a skill will help you achieve is the most effective motivator for learning. Clearly many people are finding ways to use AI to help them achieve goals.

Motivations are determined by complex socioeconomic factors beyond our ability to reason away. Let’s instead keep the door to the good internet open by empowering people to make responsible choices, equipping them with knowledge of the technical systems they’re creating within.

Gen AI might help you spin up an MVP, but eventually you will have to dig into the code. You’ll either learn software engineering skills, or have to bring in the experts. Far from making engineers obsolete, I worry that less investment in these skills will make the barriers around the profession more extreme. A select few having insight into how critical systems work is not a future I’m enthusiastic about. Instead, I’d like to find the learning paths that these new ways of building software present.

The conditions for learning are in place

Projects developed using gen AI can support a range of good practices in education, including some key activities that we often neglect in both formal learning and the workplace:

- Personalization: We can work on something meaningful to the learner rather than dragging them through a one-size-fits-all experience.

- Fast feedback loops: Automation can fuel adaptive learning through short iterative cycles.

- Reflection: Reflecting on an experience helps you internalize skills you’ve acquired and reapply them in different contexts.

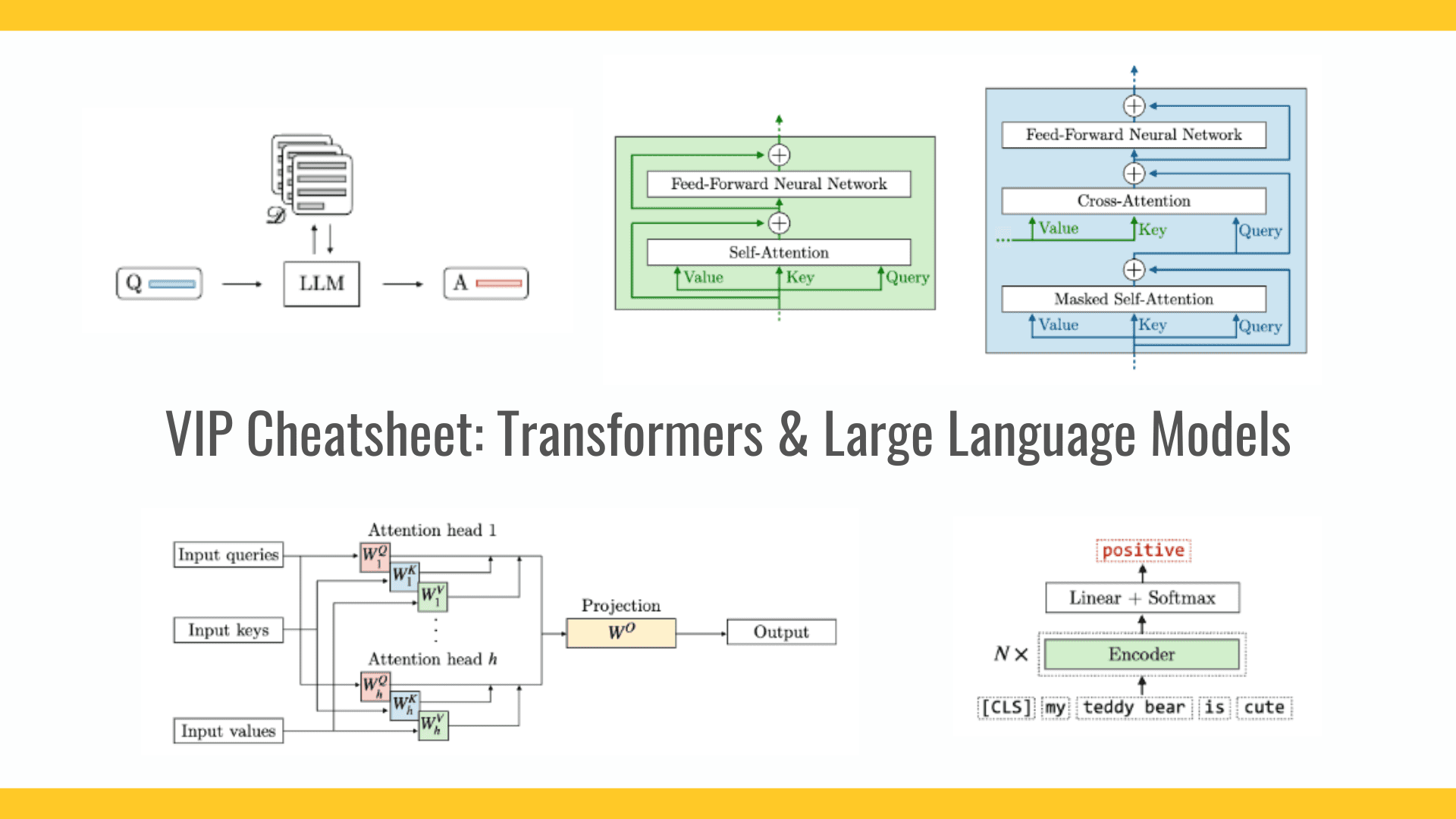

Code generated using LLMs enables and requires these perhaps more than “traditional” ways of making software. There are also some coding pedagogy techniques that gen AI projects can lend themselves to:

- Reading before writing – code comprehension is a crucial software engineering skill: consider an experienced engineer performing code reviews and mentoring junior teammates.

- Starting from a functioning application instead of a blank slate.

- Running an app to see what it does, then investigating how it did it.

- Taking gradual ownership of a project by making a first edit, then turning it into something new.

⚠️ Generative AI is not an appropriate substitute for the many interpersonal aspects of effective learning IMO. “Mentoring” powered by biased LLM content is incredibly dangerous.

Encouraging inquiry and independent thought

I wonder about a gen AI coding exercise that gives you a broken project to fix, or prompts you to explore where the code came from. This kind of inquiry is being used in the humanities to support the development of critical thinking skills, which is especially important since these tools might by default erode those very skills.
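To make the "broken project to fix" idea a little more concrete, here is a minimal sketch of what such an exercise could look like. Everything in it is hypothetical: the function names, the ratings scenario, and the tiny `check` harness are invented for illustration and don't come from any real course or tool. The learner is handed the broken version plus the checks, runs them, reads the failure, and edits until the list of failures is empty.

```python
# Hypothetical exercise: all names and the scenario are invented for illustration.


def average_rating_broken(ratings):
    """Version handed to the learner: crashes when there are no ratings."""
    return sum(ratings) / len(ratings)


def average_rating_fixed(ratings):
    """One possible fix: return None when there is nothing to average."""
    if not ratings:
        return None
    return sum(ratings) / len(ratings)


def check(average_rating):
    """Run a few cases against the given function and return a list of failures."""
    failures = []
    cases = [([4, 5, 3], 4.0), ([2], 2.0), ([], None)]
    for ratings, expected in cases:
        try:
            actual = average_rating(ratings)
        except Exception as exc:
            # Report the crash instead of stopping, so the learner sees every case.
            failures.append(f"{ratings!r}: raised {type(exc).__name__}")
            continue
        if actual != expected:
            failures.append(f"{ratings!r}: expected {expected!r}, got {actual!r}")
    return failures


if __name__ == "__main__":
    print("broken:", check(average_rating_broken))
    print("fixed:", check(average_rating_fixed))
```

The point of a harness like this is the fast feedback loop: each edit can be checked in seconds, and the failure message points the learner at the case they haven't thought about yet. Returning `None` for an empty list is only one reasonable answer; discussing the alternatives is part of the exercise.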

Then we have the wealth of problems that arise when people deploy applications with little to no understanding of their implementation. What happens over the long term? What happens if your project becomes a real thing people depend on? What happens when it breaks, or when it causes harm? How do we navigate the obfuscation of accountability? These are learning opportunities we’ll need to embrace if we want people to make more informed choices about how they use technology.

Software engineering is a very privileged profession, largely because it requires access to education. Vibe coding creates new paths into building with tech. The starting point may be different, but we might even manage to invite more people into the spaces where we shape the future of the web.

Learn how Fastly is making AI more sustainable.

Cover image is The March of Intellect by Robert Seymour
